Yanbaihui Liu

LAPP: Large Language Model Feedback for Preference-Driven Reinforcement Learning

Apr 21, 2025

Abstract: We introduce Large Language Model-Assisted Preference Prediction (LAPP), a novel framework for robot learning that enables efficient, customizable, and expressive behavior acquisition with minimum human effort. Unlike prior approaches that rely heavily on reward engineering, human demonstrations, motion capture, or expensive pairwise preference labels, LAPP leverages large language models (LLMs) to automatically generate preference labels from raw state-action trajectories collected during reinforcement learning (RL). These labels are used to train an online preference predictor, which in turn guides the policy optimization process toward satisfying high-level behavioral specifications provided by humans. Our key technical contribution is the integration of LLMs into the RL feedback loop through trajectory-level preference prediction, enabling robots to acquire complex skills including subtle control over gait patterns and rhythmic timing. We evaluate LAPP on a diverse set of quadruped locomotion and dexterous manipulation tasks and show that it achieves efficient learning, higher final performance, faster adaptation, and precise control of high-level behaviors. Notably, LAPP enables robots to master highly dynamic and expressive tasks such as quadruped backflips, which remain out of reach for standard LLM-generated or handcrafted rewards. Our results highlight LAPP as a promising direction for scalable preference-driven robot learning.
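The abstract does not say how the preference predictor is parameterized. As a rough illustration only, the sketch below uses the Bradley-Terry formulation that is common in preference-based RL; this is an assumption, not necessarily what LAPP implements. The function names and the per-step reward lists are hypothetical.

```python
import math


def preference_probability(rewards_a, rewards_b):
    """Probability that trajectory A is preferred over trajectory B.

    Assumes a Bradley-Terry model over summed per-step predicted rewards
    (an illustrative choice, not taken from the paper).
    """
    diff = sum(rewards_a) - sum(rewards_b)
    return 1.0 / (1.0 + math.exp(-diff))


def preference_loss(rewards_a, rewards_b, label):
    """Cross-entropy loss against a preference label.

    label is 1.0 if A was labeled as preferred, 0.0 if B was.
    """
    p = preference_probability(rewards_a, rewards_b)
    eps = 1e-12  # guards against log(0)
    return -(label * math.log(p + eps) + (1.0 - label) * math.log(1.0 - p + eps))
```

In a setup like this, the labels would come from the LLM's comparison of two trajectories, and minimizing the loss would fit the predicted rewards to those comparisons.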

WildFusion: Multimodal Implicit 3D Reconstructions in the Wild

Sep 30, 2024

Abstract: We propose WildFusion, a novel approach for 3D scene reconstruction in unstructured, in-the-wild environments using multimodal implicit neural representations. WildFusion integrates signals from LiDAR, RGB camera, contact microphones, tactile sensors, and IMU. This multimodal fusion generates comprehensive, continuous environmental representations, including pixel-level geometry, color, semantics, and traversability. Through real-world experiments on legged robot navigation in challenging forest environments, WildFusion demonstrates improved route selection by accurately predicting traversability. Our results highlight its potential to advance robotic navigation and 3D mapping in complex outdoor terrains.

Human Following Based on Visual Perception in the Context of Warehouse Logistics

Jan 08, 2023

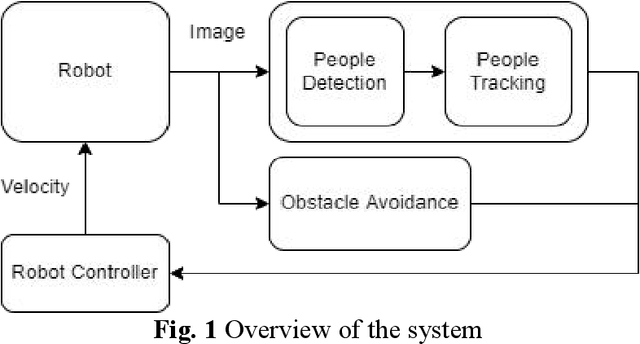

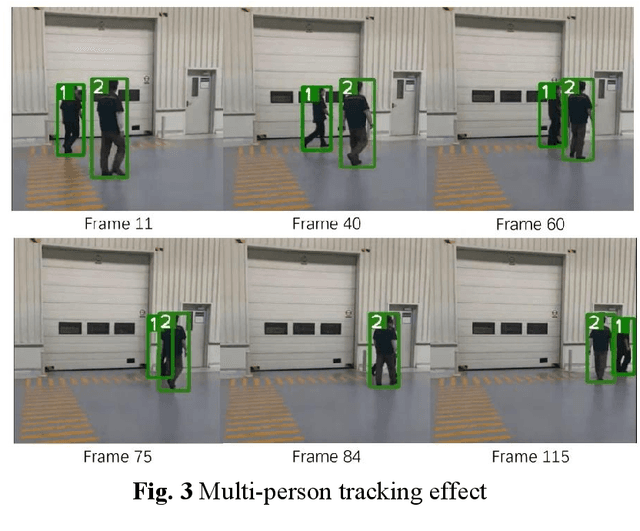

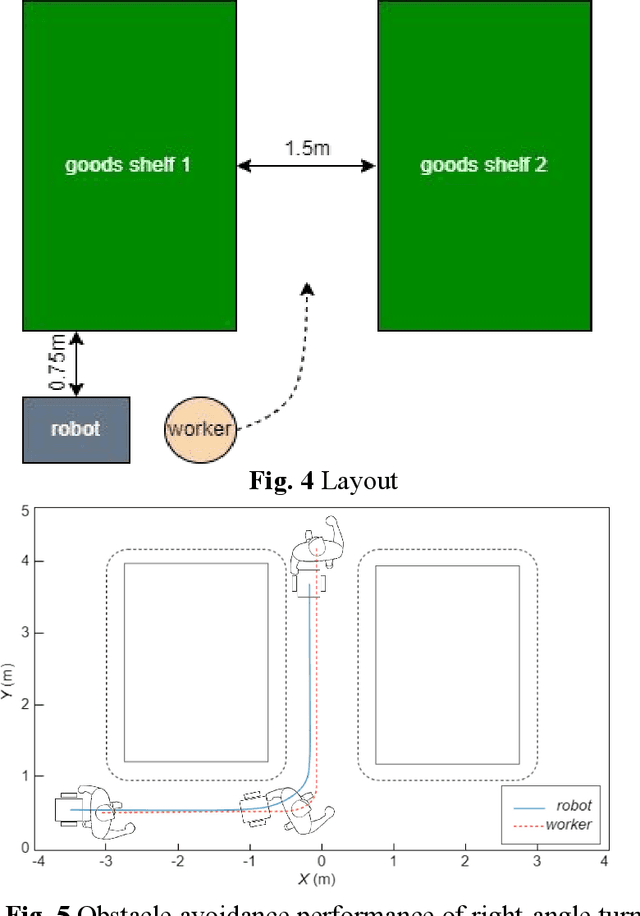

Abstract: Driven by advances in 5G, the Internet, artificial intelligence, and robotics, warehousing and logistics robots have developed rapidly and are now widely used. A practical application is helping warehouse personnel pick up or deliver heavy goods at dispersed locations along dynamic routes. However, robots that can only accept instructions or follow routes pre-set by the system lack flexibility, while existing human-following techniques either cannot accurately identify specific targets or require a cumbersome combination of lasers and cameras and do not avoid obstacles well. This paper proposes an algorithm that combines DeepSort with a width-based tracking module to track targets and uses artificial potential field local path planning to avoid obstacles. The evaluation is performed in a self-designed flat, bounded test field and in simulation in ROS. Our method achieves state-of-the-art (SOTA) results on following and successfully reaches the end point without hitting obstacles.
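For readers unfamiliar with artificial potential field planning, here is a minimal 2D sketch of the textbook formulation: the goal attracts, and obstacles within an influence radius repel. It is not the paper's implementation. The function name `apf_step`, the gains `k_att` and `k_rep`, the influence radius `d0`, and the step size are all hypothetical choices for illustration.

```python
import math


def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=1.0, d0=1.0, step=0.1):
    """Move one fixed-length step along the combined potential-field force.

    pos, goal: (x, y) tuples; obstacles: list of (x, y) points.
    All gains and radii are illustrative defaults, not values from the paper.
    """
    # Attractive force pulls linearly toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])

    # Repulsive force pushes away from each obstacle closer than d0.
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < d0:
            mag = k_rep * (1.0 / d - 1.0 / d0) / (d * d)
            fx += mag * dx / d
            fy += mag * dy / d

    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return pos  # zero net force: at the goal or in a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)
```

Calling `apf_step` repeatedly with the follower's current position and the tracked person's position as `goal` would trace a path that bends around nearby obstacles. Plain potential fields can stall in local minima, which this sketch does not address.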

Localization and Navigation System for Indoor Mobile Robot

Dec 13, 2022

Abstract: Visually impaired people often find it hard to travel independently in public places such as airports and shopping malls because of the difficulty of avoiding obstacles and finding the way to a desired location. In such highly dynamic indoor environments, improving the localization and navigation accuracy of indoor navigation robots so that they can guide visually impaired users well is therefore an open problem. One approach is visual SLAM. However, typical visual SLAM either assumes a static environment, which may lead to less accurate results in dynamic environments, or assumes that all targets are dynamic and removes all feature points on them, which sacrifices considerable computational speed given the available computational power. This paper explores marginal localization and navigation systems for indoor navigation robots. The proposed system is designed to improve localization and navigation accuracy in highly dynamic environments by identifying and tracking potentially moving objects and using vector field histograms for local path planning and obstacle avoidance. The system has been tested on a public indoor RGB-D dataset, and the results show that it improves accuracy and robustness while reducing computation time in highly dynamic indoor scenes.
