Yahav Bechavod

Dynamic Regret Bounds for Online Omniprediction with Long Term Constraints

Oct 08, 2025

Abstract: We present an algorithm guaranteeing dynamic regret bounds for online omniprediction with long term constraints. The goal in this recently introduced problem is for a learner to generate a sequence of predictions which are broadcast to a collection of downstream decision makers. Each decision maker has their own utility function, as well as a vector of constraint functions, each mapping their actions and an adversarially selected state to reward or constraint violation terms. The downstream decision makers select actions "as if" the state predictions are correct, and the goal of the learner is to produce predictions such that all downstream decision makers choose actions that give them worst-case utility guarantees while minimizing worst-case constraint violation. Within this framework, we give the first algorithm that obtains simultaneous dynamic regret guarantees for all of the agents, where regret for each agent is measured against a potentially changing sequence of actions across rounds of interaction, while also ensuring vanishing constraint violation for each agent. Our results do not require the agents themselves to maintain any state: they only solve one-round constrained optimization problems defined by the prediction made at that round.

Monotone Individual Fairness

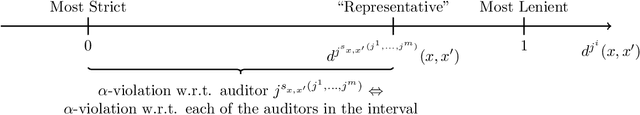

Mar 11, 2024

Abstract: We revisit the problem of online learning with individual fairness, where an online learner strives to maximize predictive accuracy while ensuring that similar individuals are treated similarly. We first extend the frameworks of Gillen et al. (2018) and Bechavod et al. (2020), which rely on feedback from human auditors regarding fairness violations, by considering auditing schemes that are capable of aggregating feedback from any number of auditors, using a rich class we term monotone aggregation functions. We then prove a characterization for such auditing schemes, practically reducing the analysis of auditing for individual fairness by multiple auditors to that of auditing by (instance-specific) single auditors. Using our generalized framework, we present an oracle-efficient algorithm achieving an upper bound frontier of $(\mathcal{O}(T^{1/2+2b}),\mathcal{O}(T^{3/4-b}))$ for regret and number of fairness violations, respectively, for $0\leq b \leq 1/4$. We then study an online classification setting where label feedback is available for positively-predicted individuals only, and present an oracle-efficient algorithm achieving an upper bound frontier of $(\mathcal{O}(T^{2/3+2b}),\mathcal{O}(T^{5/6-b}))$ for regret and number of fairness violations, respectively, for $0\leq b \leq 1/6$. In both settings, our algorithms improve on the best known bounds for oracle-efficient algorithms. Furthermore, our algorithms offer significant improvements in computational efficiency, greatly reducing the number of required calls to an (offline) optimization oracle per round, to $\tilde{\mathcal{O}}(\alpha^{-2})$ in the full information setting, and $\tilde{\mathcal{O}}(\alpha^{-2} + k^2T^{1/3})$ in the partial information setting, where $\alpha$ is the sensitivity for reporting fairness violations, and $k$ is the number of individuals in a round.
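
To make the full-information trade-off concrete, the exponents in the frontier $(\mathcal{O}(T^{1/2+2b}),\mathcal{O}(T^{3/4-b}))$ can be evaluated at a few values of $b$. This is only arithmetic on the bounds quoted in the abstract; the choice of the midpoint $b = 1/8$ is an illustrative assumption, not a value singled out by the paper.

```latex
\begin{align*}
b = 0:&\quad \text{regret } \mathcal{O}(T^{1/2}), && \text{violations } \mathcal{O}(T^{3/4}),\\
b = \tfrac{1}{8}:&\quad \text{regret } \mathcal{O}(T^{3/4}), && \text{violations } \mathcal{O}(T^{5/8}),\\
b = \tfrac{1}{4}:&\quad \text{regret } \mathcal{O}(T), && \text{violations } \mathcal{O}(T^{1/2}).
\end{align*}
```

Increasing $b$ trades regret for fewer fairness violations; at the endpoint $b = 1/4$ the regret bound becomes linear in $T$, so the informative part of the frontier is $b < 1/4$.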

Individually Fair Learning with One-Sided Feedback

Jun 09, 2022

Abstract: We consider an online learning problem with one-sided feedback, in which the learner is able to observe the true label only for positively predicted instances. On each round, $k$ instances arrive and receive classification outcomes according to a randomized policy deployed by the learner, whose goal is to maximize accuracy while deploying individually fair policies. We first extend the framework of Bechavod et al. (2020), which relies on the existence of a human fairness auditor for detecting fairness violations, to instead incorporate feedback from dynamically-selected panels of multiple, possibly inconsistent, auditors. We then construct an efficient reduction from our problem of online learning with one-sided feedback and a panel reporting fairness violations to the contextual combinatorial semi-bandit problem (Cesa-Bianchi & Lugosi, 2009; György et al., 2007). Finally, we show how to leverage the guarantees of two algorithms in the contextual combinatorial semi-bandit setting, Exp2 (Bubeck et al., 2012) and the oracle-efficient Context-Semi-Bandit-FTPL (Syrgkanis et al., 2016), to provide multi-criteria no-regret guarantees simultaneously for accuracy and fairness. Our results eliminate two potential sources of bias from prior work: the "hidden outcomes" that are not available to an algorithm operating in the full information setting, and human biases that might be present in any single human auditor, but can be mitigated by selecting a well-chosen panel.

Information Discrepancy in Strategic Learning

Mar 03, 2021

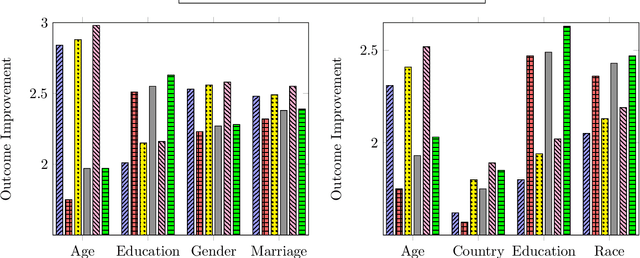

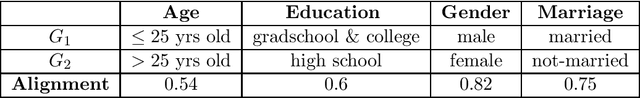

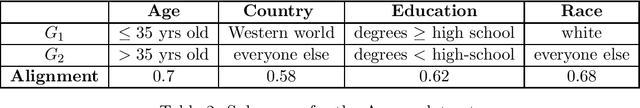

Abstract: We study a decision-making model where a principal deploys a scoring rule and the agents strategically invest effort to improve their scores. Unlike existing work in the strategic learning literature, we do not assume that the principal's scoring rule is fully known to the agents, and agents may form different estimates of the scoring rule based on their own sources of information. We focus on disparities in outcomes that stem from information discrepancies in our model. To do so, we consider a population of agents who belong to different subgroups, which determine their knowledge about the deployed scoring rule. Agents within each subgroup observe the past scores received by their peers, which allow them to construct an estimate of the deployed scoring rule and to invest their efforts accordingly. The principal, taking into account the agents' behaviors, deploys a scoring rule that maximizes the social welfare of the whole population. We provide a collection of theoretical results that characterize the impact of the welfare-maximizing scoring rules on the strategic effort investments across different subgroups. In particular, we identify sufficient and necessary conditions for when the deployed scoring rule incentivizes optimal strategic investment across all groups for different notions of optimality. Finally, we complement and validate our theoretical analysis with experimental results on the real-world datasets Taiwan-Credit and Adult.

Metric-Free Individual Fairness in Online Learning

Feb 17, 2020

Abstract: We study an online learning problem subject to the constraint of individual fairness, which requires that similar individuals are treated similarly. Unlike prior work on individual fairness, we do not assume the similarity measure among individuals is known, nor do we assume that such a measure takes a certain parametric form. Instead, we leverage the existence of an auditor who detects fairness violations without enunciating the quantitative measure. In each round, the auditor examines the learner's decisions and attempts to identify a pair of individuals that are treated unfairly by the learner. We provide a general reduction framework that reduces online classification in our model to standard online classification, which allows us to leverage existing online learning algorithms to achieve sub-linear regret and number of fairness violations. Surprisingly, in the stochastic setting where the data are drawn independently from a distribution, we are also able to establish PAC-style fairness and accuracy generalization guarantees (Yona and Rothblum [2018]), despite only having access to a very restricted form of fairness feedback. Our fairness generalization bound qualitatively matches the uniform convergence bound of Yona and Rothblum [2018], while also providing a meaningful accuracy generalization guarantee. Our results resolve an open question by Gillen et al. [2018] by showing that online learning under an unknown individual fairness constraint is possible even without assuming a strong parametric form of the underlying similarity measure.

Causal Feature Discovery through Strategic Modification

Feb 17, 2020

Abstract: We consider an online regression setting in which individuals adapt to the regression model: arriving individuals may access the model throughout the process, and invest strategically in modifying their own features so as to improve their assigned score. We find that this strategic manipulation may help a learner recover the causal variables, in settings where an agent can invest in improving impactful features that also improve his true label. We show that even simple behavior on the learner's part (i.e., periodically updating her model based on the observed data so far, via least-squares regression) allows her to simultaneously i) accurately recover which features have an impact on an agent's true label, provided they have been invested in significantly, and ii) incentivize agents to invest in these impactful features, rather than in features that have no effect on their true label.

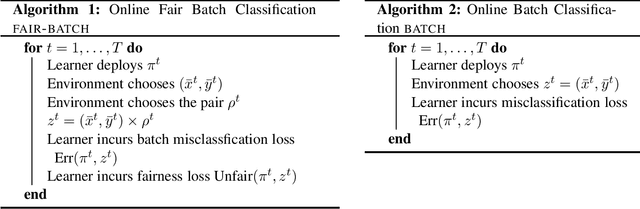

Equal Opportunity in Online Classification with Partial Feedback

Feb 06, 2019

Abstract: We study an online classification problem with partial feedback in which individuals arrive one at a time from a fixed but unknown distribution, and must be classified as positive or negative. Our algorithm only observes the true label of an individual if they are given a positive classification. This setting captures many classification problems for which fairness is a concern: for example, in criminal recidivism prediction, recidivism is only observed if the inmate is released; in lending applications, loan repayment is only observed if the loan is granted. We require that our algorithms satisfy common statistical fairness constraints (such as equalizing false positive or negative rates, introduced as "equal opportunity" in Hardt et al. (2016)) at every round, with respect to the underlying distribution. We give upper and lower bounds characterizing the cost of this constraint in terms of the regret rate (and show that it is mild), and give an oracle-efficient algorithm that achieves the upper bound.
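
The partial-feedback model described in this abstract can be illustrated with a minimal Python sketch. It shows only the feedback rule (a label is revealed only after a positive classification), not the paper's algorithm or its fairness constraint. The names `observe`, `run`, and the threshold policy are hypothetical and chosen for illustration.

```python
def observe(decision, true_label):
    """Feedback rule: the true label is revealed only on a positive decision."""
    return true_label if decision else None


def run(policy, stream):
    """Apply a policy to (feature, label) pairs and log what the learner sees.

    Each log entry is (feature, decision, feedback), where feedback is None
    whenever the individual was classified as negative.
    """
    log = []
    for x, y in stream:
        decision = policy(x)
        log.append((x, decision, observe(decision, y)))
    return log


# Hypothetical toy stream of (score, true label) pairs and a threshold policy.
stream = [(0.2, 0), (0.9, 1), (0.7, 0), (0.1, 1)]
log = run(lambda x: x >= 0.5, stream)

# Labels of the two negatively classified individuals stay hidden,
# including the last one, whose true label was actually positive.
hidden = [entry for entry in log if entry[2] is None]
```

In this toy run the learner never finds out that the last individual was a true positive, which is the kind of hidden outcome that the lending and recidivism examples in the abstract describe.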

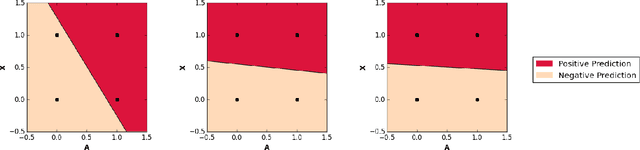

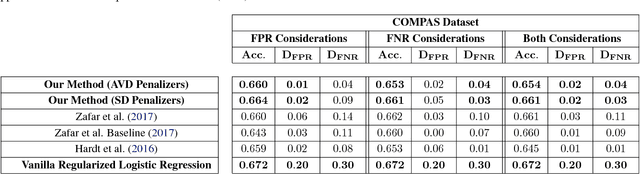

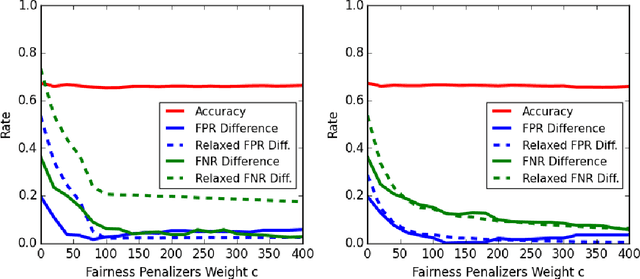

Penalizing Unfairness in Binary Classification

Mar 08, 2018

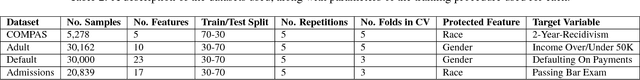

Abstract: We present a new approach for mitigating unfairness in learned classifiers. In particular, we focus on binary classification tasks over individuals from two populations, where, as our criterion for fairness, we wish to achieve similar false positive rates in both populations, and similar false negative rates in both populations. As a proof of concept, we implement our approach and empirically evaluate its ability to achieve both fairness and accuracy, using datasets from the fields of criminal risk assessment, credit, lending, and college admissions.
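
The fairness criterion named in this abstract (similar false positive rates and similar false negative rates across two populations) can be measured with a short Python sketch. This is only a way to compute the two gaps, not the paper's penalization approach. The function names and the 0/1 encoding of labels and group membership are assumptions made for illustration.

```python
def error_rates(y_true, y_pred):
    """Return (false positive rate, false negative rate) for 0/1 labels."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    negatives = sum(1 for t in y_true if t == 0)
    positives = sum(1 for t in y_true if t == 1)
    # Define a rate as 0.0 when its denominator is empty (an assumption).
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


def rate_gaps(y_true, y_pred, group):
    """Absolute FPR and FNR differences between populations 0 and 1."""
    rates = []
    for g in (0, 1):
        idx = [i for i, gi in enumerate(group) if gi == g]
        rates.append(error_rates([y_true[i] for i in idx],
                                 [y_pred[i] for i in idx]))
    (fpr0, fnr0), (fpr1, fnr1) = rates
    return abs(fpr0 - fpr1), abs(fnr0 - fnr1)


# Hypothetical toy data: population 0 gets one false positive and one
# false negative, population 1 is classified perfectly.
y_true = [0, 0, 1, 1, 0, 0, 1, 1]
y_pred = [1, 0, 1, 0, 0, 0, 1, 1]
group = [0, 0, 0, 0, 1, 1, 1, 1]
gaps = rate_gaps(y_true, y_pred, group)
```

Both gaps being close to zero corresponds to the criterion stated in the abstract; here `gaps` is far from zero because all errors fall on one population.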
