Xuanting Hao

Masked Image Modeling Boosting Semi-Supervised Semantic Segmentation

Nov 14, 2024

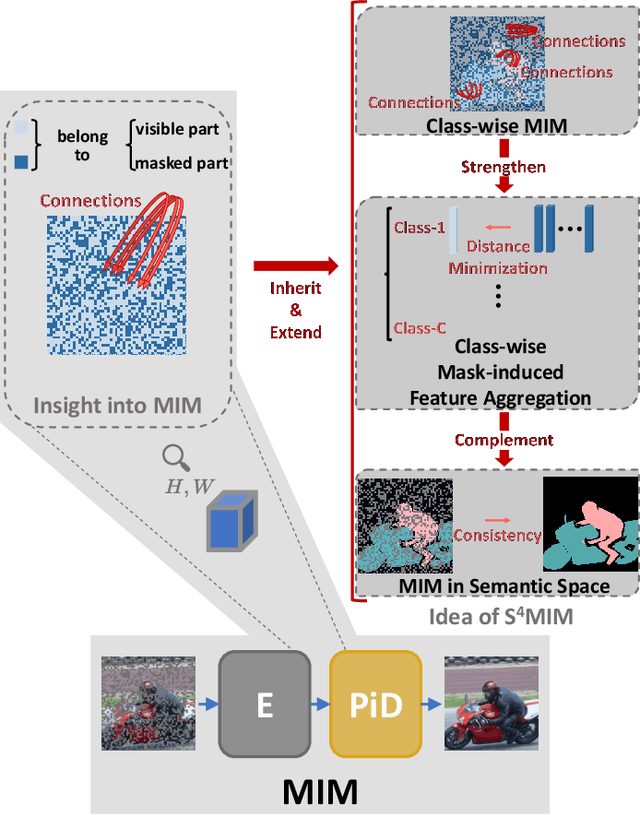

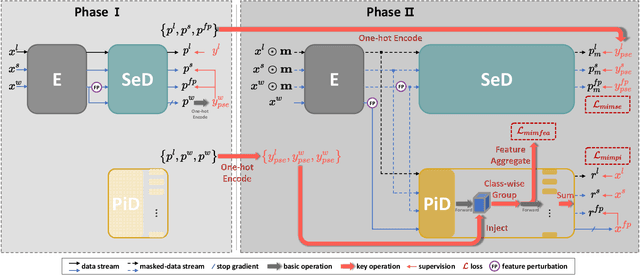

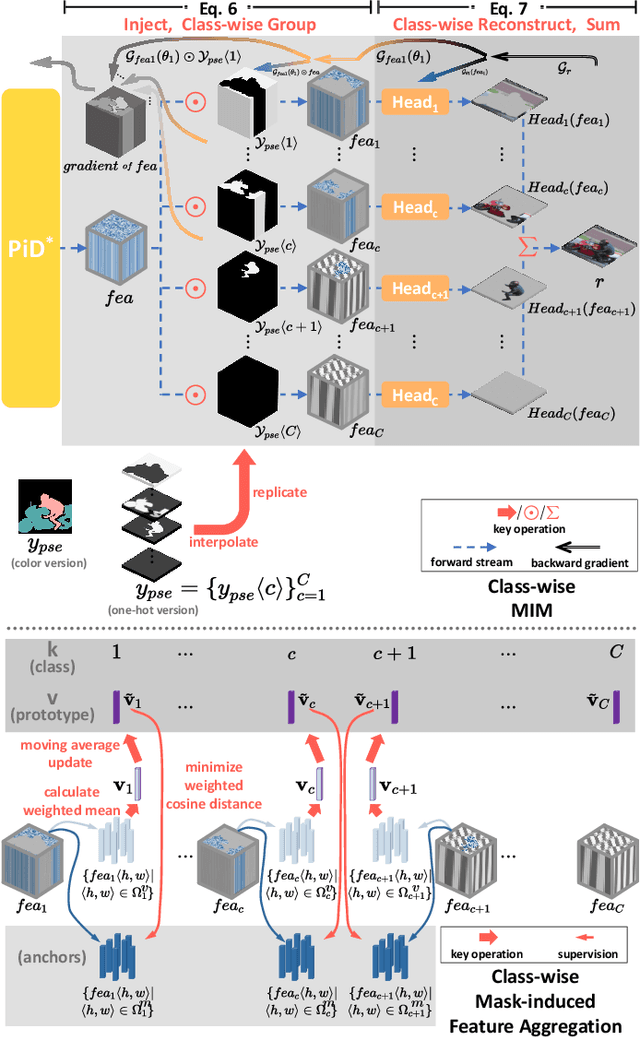

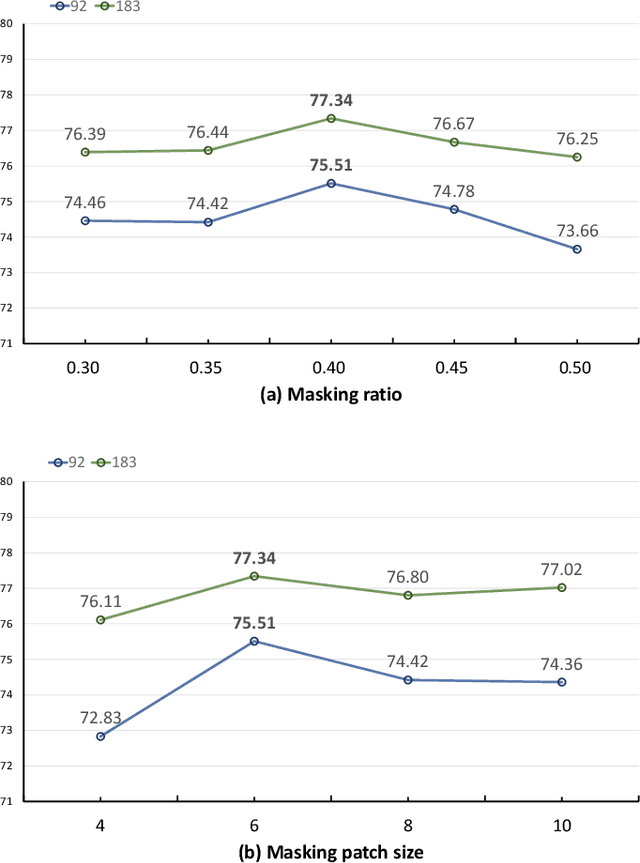

Abstract: Because semi- and self-supervised learning share a fundamental principle, effectively modeling knowledge from unlabeled data, various semi-supervised semantic segmentation methods have integrated representative self-supervised learning paradigms for further regularization. However, the potential of the state-of-the-art generative self-supervised paradigm, masked image modeling, has scarcely been studied. This paradigm learns knowledge by establishing connections between the masked and visible parts of a masked image during pixel reconstruction. By inheriting and extending this insight, we successfully leverage masked image modeling to boost semi-supervised semantic segmentation. Specifically, we introduce a novel class-wise masked image modeling that independently reconstructs different image regions according to their respective classes. In this way, the mask-induced connections are established within each class, mitigating the semantic confusion that arises from plainly reconstructing whole images in basic masked image modeling. To strengthen these intra-class connections, we further develop a feature aggregation strategy that minimizes the distances between features corresponding to the masked and visible parts within the same class. Additionally, we explore applying masked image modeling in semantic space to enhance regularization. Extensive experiments on well-known benchmarks demonstrate that our approach achieves state-of-the-art performance. The code will be available at https://github.com/haoxt/S4MIM.
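The class-wise reconstruction and feature aggregation described above can be sketched in NumPy as follows. This is a hypothetical illustration under stated assumptions, not the S4MIM code: it assumes a per-pixel label map (ground truth or pseudo-labels), a random patch mask, and a stand-in reconstructor `mean_fill_reconstruct` in place of a learned decoder. The centroid-based form of the aggregation loss is also an assumption, and all function names are illustrative.

```python
import numpy as np


def random_patch_mask(h, w, patch, ratio, rng):
    """Boolean (h, w) mask, True = masked, built from whole patch x patch blocks."""
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch"
    gh, gw = h // patch, w // patch
    flat = np.zeros(gh * gw, dtype=bool)
    n_mask = int(round(ratio * gh * gw))
    flat[rng.choice(gh * gw, size=n_mask, replace=False)] = True
    # Upsample the patch-level grid to pixel resolution.
    return np.kron(flat.reshape(gh, gw), np.ones((patch, patch), dtype=bool)).astype(bool)


def mean_fill_reconstruct(visible, region_visible):
    """Hypothetical stand-in for a decoder: predicts every pixel as the mean
    colour of the visible pixels of the current class."""
    if region_visible.any():
        mean = visible[region_visible].mean(axis=0)
    else:
        mean = np.zeros(visible.shape[-1])
    return np.broadcast_to(mean, visible.shape)


def class_wise_mim_loss(image, labels, mask, reconstruct):
    """Reconstruct each class region independently from its own visible pixels
    and average the per-class MSE over that class's masked pixels."""
    losses = []
    for c in np.unique(labels):
        region = labels == c
        target = region & mask               # masked pixels of class c
        if not target.any():
            continue
        region_visible = region & ~mask      # visible context restricted to class c
        visible = image * region_visible[..., None]
        recon = reconstruct(visible, region_visible)
        losses.append(np.mean((recon[target] - image[target]) ** 2))
    return float(np.mean(losses)) if losses else 0.0


def feature_aggregation_loss(features, labels, mask):
    """Squared L2 distance between the centroids of masked and visible features
    of each class, averaged over classes that have both parts."""
    losses = []
    for c in np.unique(labels):
        region = labels == c
        masked, visible = region & mask, region & ~mask
        if masked.any() and visible.any():
            diff = features[masked].mean(axis=0) - features[visible].mean(axis=0)
            losses.append(float(np.sum(diff ** 2)))
    return float(np.mean(losses)) if losses else 0.0


# Toy example: two classes (left/right halves), each a flat colour.
labels = np.zeros((8, 8), dtype=int)
labels[:, 4:] = 1
image = np.where(labels[..., None] == 0, [1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
mask = random_patch_mask(8, 8, patch=4, ratio=0.5, rng=np.random.default_rng(0))
print(class_wise_mim_loss(image, labels, mask, mean_fill_reconstruct))
print(feature_aggregation_loss(image, labels, mask))
```

Restricting each reconstruction to one class's visible pixels is what keeps the mask-induced connections intra-class: the stand-in decoder never sees pixels of other classes, so it cannot mix their statistics into its prediction.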

Parameter Decoupling Strategy for Semi-supervised 3D Left Atrium Segmentation

Sep 20, 2021

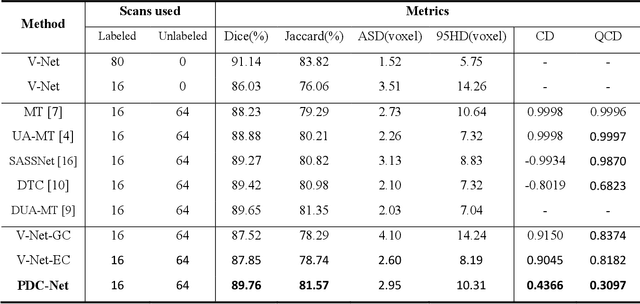

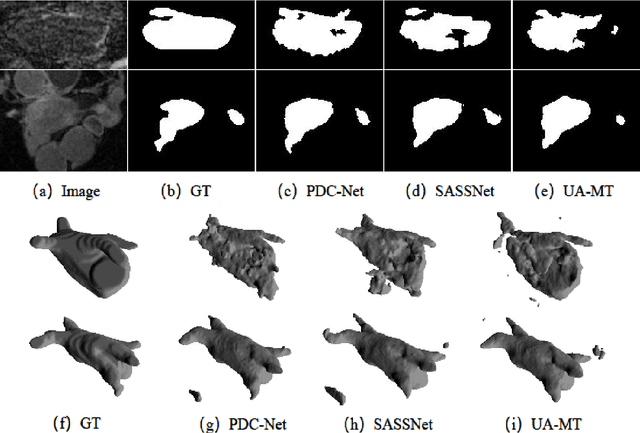

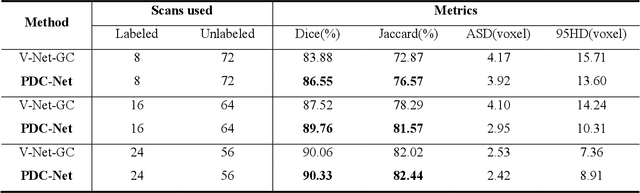

Abstract: Consistency training has proven to be an effective semi-supervised framework and has achieved promising results in medical image segmentation by enforcing invariance of the predictions over different views of the inputs. However, as the model parameters are iteratively updated, the models tend to reach a coupled state and eventually lose the ability to exploit unlabeled data. To address this issue, we present a novel semi-supervised segmentation model based on a parameter decoupling strategy that encourages consistent predictions from diverse views. Specifically, we first adopt a two-branch network that simultaneously produces two predictions for each image. During training, we decouple the parameters of the two prediction branches using a quadratic cosine distance to construct different views in latent space. Based on this, the feature extractor is constrained to encourage consistency between the probability maps generated by the classifiers under diversified features. Throughout training, the parameters of the feature extractor and the classifiers are updated alternately by the consistency regularization operation and the decoupling operation, gradually improving the generalization performance of the model. Our method achieves competitive results against state-of-the-art semi-supervised methods on the Atrial Segmentation Challenge dataset, demonstrating the effectiveness of our framework. Code is available at https://github.com/BX0903/PDC.
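A toy NumPy sketch of the two alternating operations follows, under stated assumptions: "quadratic cosine distance" is read here as the squared cosine similarity between the flattened weights of the two classifier branches, each classifier is treated as a linear (1×1×1-convolution-like) layer over per-voxel features, and the consistency term is taken to be the mean squared error between the two softmax maps. The function names are hypothetical and this is not the PDC implementation; in a real model the consistency step would update the feature extractor by backpropagation, which is omitted here.

```python
import numpy as np


def softmax(logits, axis=-1):
    z = logits - logits.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def quadratic_cosine(w_a, w_b, eps=1e-8):
    """Squared cosine similarity between the flattened weights of two branches.
    Driving it to 0 makes the two classifiers orthogonal ("decoupled")."""
    a, b = np.ravel(w_a), np.ravel(w_b)
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps)
    return float(cos ** 2)


def decoupling_step(w_a, w_b, lr=0.1):
    """Decoupling operation: one gradient-descent step on cos^2 for both
    classifiers (the feature extractor is untouched)."""
    a, b = np.ravel(w_a), np.ravel(w_b)
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    cos = a @ b / (na * nb)
    # d(cos^2)/da = 2 cos * (b / (|a||b|) - cos * a / |a|^2), symmetric for b.
    grad_a = 2 * cos * (b / (na * nb) - cos * a / na**2)
    grad_b = 2 * cos * (a / (na * nb) - cos * b / nb**2)
    return (a - lr * grad_a).reshape(w_a.shape), (b - lr * grad_b).reshape(w_b.shape)


def consistency_loss(features, w_a, w_b):
    """Consistency regularisation: MSE between the probability maps that the two
    linear classifiers produce from the same features (voxels x channels)."""
    p_a = softmax(features @ w_a)
    p_b = softmax(features @ w_b)
    return float(np.mean((p_a - p_b) ** 2))


# Two nearly identical classifiers (16 feature channels, 2 classes).
rng = np.random.default_rng(0)
w_a = rng.normal(size=(16, 2))
w_b = w_a + 0.1 * rng.normal(size=(16, 2))
before = quadratic_cosine(w_a, w_b)
for _ in range(100):
    w_a, w_b = decoupling_step(w_a, w_b)
print(before, quadratic_cosine(w_a, w_b))
```

Because `decoupling_step` descends cos², repeated steps with a small learning rate lower the second printed value relative to the first, pushing the two branches apart so that `consistency_loss` compares genuinely different views of the same features.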
