Xuan Liang

On the Subbagging Estimation for Massive Data

Feb 28, 2021

Abstract: This article introduces subbagging (subsample aggregating) estimation approaches for big data analysis under computer memory constraints. Specifically, from a whole dataset of size $N$, $m_N$ subsamples are randomly drawn, each sampled uniformly without replacement with a subsample size $k_N\ll N$ chosen to meet the memory constraint. Aggregating the estimators computed on the $m_N$ subsamples yields the subbagging estimator. To analyze the theoretical properties of the subbagging estimator, we adapt the theory of incomplete $U$-statistics with an infinite-order kernel to allow overlap among the drawn subsamples. Using this novel theoretical framework, we demonstrate that, with a proper choice of the hyperparameters $k_N$ and $m_N$, the subbagging estimator achieves $\sqrt{N}$-consistency and asymptotic normality under the condition $(k_Nm_N)/N\to \alpha \in (0,\infty]$. Compared to the full sample estimator, we show theoretically that the $\sqrt{N}$-consistent subbagging estimator has an inflation rate of $1/\alpha$ in its asymptotic variance. Simulation experiments are presented to demonstrate the finite-sample performance. An American airline dataset is analyzed to illustrate that the subbagging estimate is numerically close to the full sample estimate and can be computed quickly under the memory constraint.
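The sampling-and-aggregation procedure described in the abstract can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name `subbagging_estimate`, the use of the sample mean as the estimator, simple averaging as the aggregation step, the synthetic normal data, and the values of `k` and `m` are all assumptions made for the example. The data here also sit in memory, whereas a real memory-constrained setting would read each subsample from disk.

```python
import numpy as np

def subbagging_estimate(data, k, m, estimator, seed=0):
    """Average an estimator over m subsamples of size k.

    Each subsample is drawn uniformly without replacement from `data`;
    different subsamples are drawn independently and may overlap.
    (Hypothetical helper, written for illustration only.)
    """
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    estimates = []
    for _ in range(m):
        # Indices of one subsample of size k, no repeats within the subsample.
        idx = rng.choice(n, size=k, replace=False)
        estimates.append(estimator(data[idx]))
    # Aggregate by simple averaging (an assumption of this sketch).
    return np.mean(estimates, axis=0)

# Synthetic stand-in for a "massive" dataset (assumed, not from the paper).
data_rng = np.random.default_rng(42)
full_data = data_rng.normal(loc=2.0, scale=1.0, size=100_000)

k, m = 1_000, 200                    # subsample size k_N and count m_N
alpha = k * m / full_data.shape[0]   # the ratio (k_N * m_N) / N

full_estimate = full_data.mean()
sub_estimate = subbagging_estimate(full_data, k, m, np.mean)

print(f"alpha = {alpha}")
print(f"full sample estimate = {full_estimate:.4f}")
print(f"subbagging estimate  = {sub_estimate:.4f}")
```

In this sketch `alpha` plays the role of the limit of $(k_Nm_N)/N$ in the abstract; larger values of `k * m` relative to `N` correspond to a smaller variance inflation $1/\alpha$.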

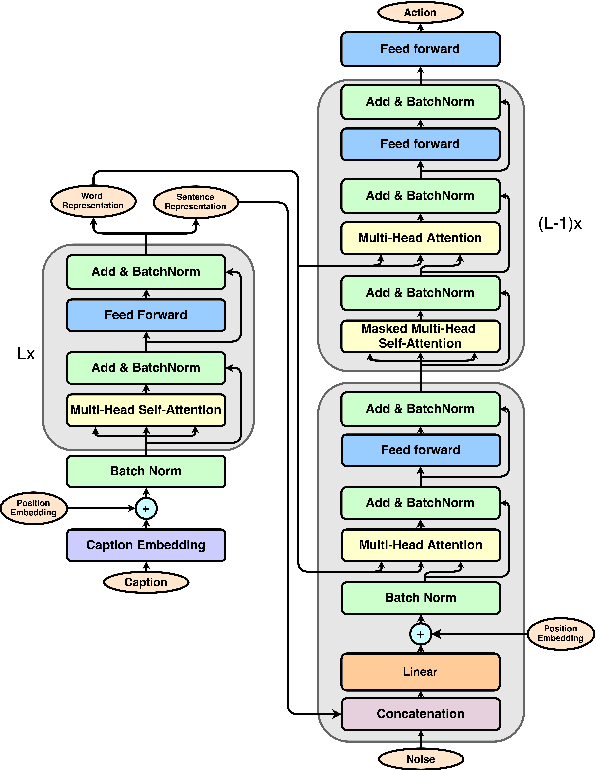

Actions Generation from Captions

Feb 14, 2019

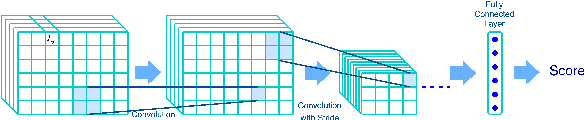

Abstract: Sequence transduction models have been widely explored in many natural language processing tasks. However, the target sequence usually consists of discrete tokens that represent word indices in a given vocabulary. Rarely is the target sequence composed of continuous vectors, where each vector is an element of a time series sampled successively in the temporal domain. In this work, we introduce a new data set, named Action Generation Data Set (AGDS), which is specifically designed for the task of caption-to-action generation. This data set contains caption-action pairs. The caption consists of a sequence of words describing the interactive movement between two people, and the action is a captured sequence of poses representing the movement. The data set is introduced to study the ability of sequence transduction models to generate continuous sequences. We also propose a model that combines Multi-Head Attention (MHA) with a Generative Adversarial Network (GAN). Our model has one generator, which generates actions from captions, and three discriminators, each designed for a distinct function: a caption-action consistency discriminator, a pose discriminator, and a pose transition discriminator. This novel design allows us to achieve plausible generation performance, as demonstrated in the experiments.
