Xirui Ke

Diversifying Question Generation over Knowledge Base via External Natural Questions

Sep 23, 2023

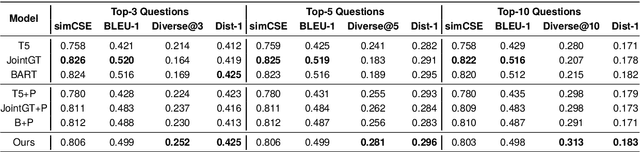

Abstract: Previous methods for knowledge base question generation (KBQG) primarily focus on enhancing the quality of a single generated question. Recognizing the remarkable paraphrasing ability of humans, we contend that diverse texts should convey the same semantics through varied expressions. These insights make diversifying question generation an intriguing task, whose first challenge is how to evaluate diversity. Current metrics assess this diversity inadequately: they calculate the ratio of unique n-grams within a single generated question, which measures duplication rather than true diversity. Accordingly, we devise a new diversity evaluation metric that measures the diversity among the top-k generated questions for each instance while ensuring their relevance to the ground truth. The second challenge is how to enhance diversifying question generation. To address it, we introduce a dual-model framework interwoven by two selection strategies to generate diverse questions by leveraging external natural questions. The main idea of our dual framework is to extract more diverse expressions and integrate them into the generation model. Extensive experiments on widely used KBQG benchmarks demonstrate that our proposed approach generates highly diverse questions and improves the performance of question answering tasks.
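
The contrast the abstract draws between within-question n-gram ratios and diversity across the top-k questions can be illustrated with a small sketch. This is not the metric proposed in the paper: `distinct_n` is a common unique-n-gram ratio, and `pairwise_diversity` is a hypothetical stand-in (mean Jaccard distance between n-gram sets) chosen only for illustration. The relevance-to-ground-truth constraint mentioned in the abstract is omitted here.

```python
from itertools import combinations


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def distinct_n(question, n=1):
    """Ratio of unique n-grams within ONE question (measures duplication)."""
    grams = ngrams(question.lower().split(), n)
    return len(set(grams)) / len(grams) if grams else 0.0


def pairwise_diversity(questions, n=1):
    """Assumed illustration: mean Jaccard distance between the n-gram sets
    of every pair of questions in a top-k list (0.0 if fewer than 2)."""
    sets = [set(ngrams(q.lower().split(), n)) for q in questions]
    distances = []
    for a, b in combinations(sets, 2):
        union = a | b
        distances.append(1 - len(a & b) / len(union) if union else 0.0)
    return sum(distances) / len(distances) if distances else 0.0


# Two identical questions: each scores perfectly on distinct_n,
# yet the set as a whole has no diversity.
identical = ["who wrote hamlet", "who wrote hamlet"]
varied = ["who wrote hamlet", "hamlet was written by whom"]

print([distinct_n(q) for q in identical])
print(pairwise_diversity(identical))
print(pairwise_diversity(varied))
```

In this toy case the identical pair gets a perfect within-question score but zero cross-question diversity, which is the gap the abstract points to.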

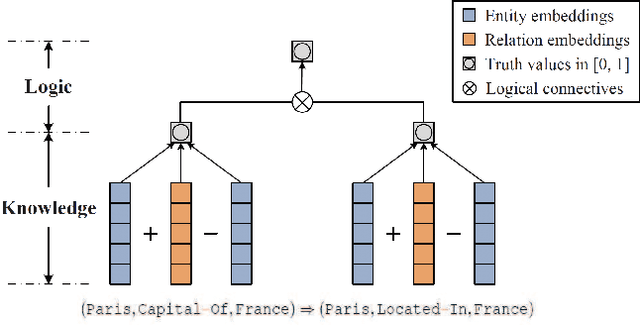

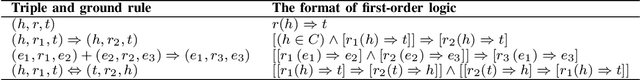

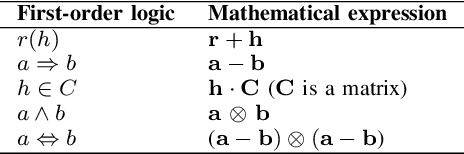

Neural-Symbolic Reasoning on Knowledge Graphs

Oct 29, 2020

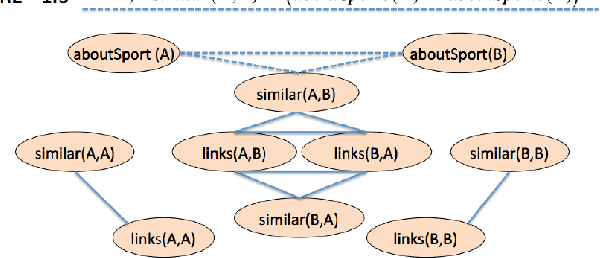

Abstract: Knowledge graph reasoning is a fundamental component supporting machine learning applications such as information extraction, information retrieval, and recommendation. Since knowledge graphs can be viewed as discrete symbolic representations of knowledge, reasoning on knowledge graphs can naturally leverage symbolic techniques. However, symbolic reasoning is intolerant of ambiguous and noisy data. In contrast, recent advances in deep learning have promoted neural reasoning on knowledge graphs, which is robust to ambiguous and noisy data but lacks interpretability compared to symbolic reasoning. Considering the advantages and disadvantages of both methodologies, recent efforts have been made to combine the two reasoning methods. In this survey, we take a thorough look at the development of symbolic reasoning, neural reasoning, and neural-symbolic reasoning on knowledge graphs. We survey two specific reasoning tasks, knowledge graph completion and question answering on knowledge graphs, and explain them in a unified reasoning framework. We also briefly discuss future directions for knowledge graph reasoning.
