Xingpeng Li

Constraints and Variables Reduction for Optimal Power Flow Using Hierarchical Graph Neural Networks with Virtual Node-Splitting

Nov 09, 2024

Abstract:Power system networks are often modeled as homogeneous graphs, which limits the ability of graph neural networks (GNNs) to capture the features of individual generators located at the same node. With the proposed virtual node-splitting strategy, generator-level attributes such as costs, limits, and ramp rates can be fully captured by GNN models, improving their learning capacity and prediction accuracy. The optimal power flow (OPF) problem is solved for real-time grid operations, and the limited timeframe motivates size-reduced OPF (ROPF) models that relieve the computational burden. In this paper, with virtual node-splitting, a novel two-stage adaptive hierarchical GNN is developed to (i) predict critical lines that would be congested, and then (ii) predict base generators that would operate at maximum capacity. This substantially reduces the constraints and variables needed for OPF, creating the proposed ROPFLG model with fewer monitored lines and fewer generator-specific variables and constraints. Two ROPF models, ROPFL and ROPFG, with only reduced lines or only reduced generators respectively, are also implemented as additional benchmark models. Case studies show that the proposed ROPFLG consistently outperforms the benchmark full OPF (FOPF) and the other two ROPF methods, achieving significant computational time savings while reliably finding optimal solutions.
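The node-splitting idea in this abstract can be pictured with a small sketch. The abstract does not describe the exact scheme, so the following is only one plausible reading: each generator gets its own virtual node attached to its bus, so that per-generator attributes live on separate nodes. The function name `split_generator_nodes`, the dictionary keys, and the virtual node naming are all hypothetical.

```python
from collections import defaultdict


def split_generator_nodes(edges, gens):
    """Attach one virtual node per generator to that generator's bus.

    edges: list of (bus_a, bus_b) tuples for the physical lines.
    gens:  list of dicts with keys 'bus', 'cost', 'pmax' (assumed schema).
    Returns the extended edge list and a feature dict per virtual node.
    """
    new_edges = list(edges)
    node_features = {}
    count_at_bus = defaultdict(int)
    for g in gens:
        bus = g["bus"]
        count_at_bus[bus] += 1
        # Hypothetical naming: "<bus>_g<index of generator at that bus>"
        vnode = f"{bus}_g{count_at_bus[bus]}"
        new_edges.append((bus, vnode))
        node_features[vnode] = {"cost": g["cost"], "pmax": g["pmax"]}
    return new_edges, node_features


# Two generators share bus 1; after splitting, each has its own node.
edges = [(1, 2), (2, 3)]
gens = [
    {"bus": 1, "cost": 20.0, "pmax": 100.0},
    {"bus": 1, "cost": 35.0, "pmax": 50.0},
    {"bus": 3, "cost": 28.0, "pmax": 80.0},
]
new_edges, feats = split_generator_nodes(edges, gens)
```

In this toy case the two generators at bus 1 end up as distinct nodes `1_g1` and `1_g2`, so a GNN could see their different costs and limits instead of a single aggregated bus feature.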

Graph Neural Network-Accelerated Network-Reconfigured Optimal Power Flow

Oct 22, 2024

Abstract:Optimal power flow (OPF) has been used for real-time grid operations. Prior efforts demonstrated that utilizing the flexibility of dynamic topologies improves grid efficiency. However, this converts the linear OPF into a mixed-integer linear programming network-reconfigured OPF (NR-OPF) problem, substantially increasing the computing time. Thus, a machine learning (ML)-based approach, specifically one using a graph neural network (GNN), is proposed to accelerate the solution process. The GNN model is trained offline to predict the best topology before entering the optimization stage. In addition, this paper proposes an offline pre-ML filter layer to reduce GNN model size and training time while improving its accuracy. A fast online post-ML selection layer is also proposed to analyze GNN predictions and then select a subset of predicted NR solutions with high confidence. Case studies have demonstrated superior performance of the proposed GNN-accelerated NR-OPF method augmented with the proposed pre-ML and post-ML layers.
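The post-ML selection step described here, keeping only high-confidence predictions, might look roughly like the sketch below. The abstract gives no details of the actual layer, so the threshold, the cap on the number of candidates, the function name `select_confident`, and the candidate labels are all assumptions made for illustration.

```python
def select_confident(scores, min_conf=0.8, max_keep=2):
    """Keep the highest-scoring candidates whose confidence passes a threshold.

    scores:   dict mapping a candidate topology label to a confidence in [0, 1].
    min_conf: assumed minimum confidence for a candidate to be kept.
    max_keep: assumed cap on how many candidates are passed to the optimizer.
    """
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, conf in ranked if conf >= min_conf][:max_keep]


# Hypothetical GNN output: confidence per candidate switching action.
scores = {"open_L3": 0.95, "open_L7": 0.60, "open_L12": 0.85, "no_switch": 0.90}
selected = select_confident(scores)
```

Only the selected candidates would then be evaluated in the optimization stage, which is where the time saving would come from.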

N-1 Reduced Optimal Power Flow Using Augmented Hierarchical Graph Neural Network

Feb 09, 2024

Abstract:Optimal power flow (OPF) is used to perform generation redispatch in power system real-time operations. N-1 OPF can ensure safe grid operations under diverse contingency scenarios. For large and intricate power networks with numerous variables and constraints, achieving an optimal solution for real-time N-1 OPF requires substantial computational resources. To mitigate this challenge, machine learning (ML) is introduced as an additional tool for dynamically predicting congested or heavily loaded lines. In this paper, an advanced ML model known as the augmented hierarchical graph neural network (AHGNN) is proposed to predict critical congested lines and create an N-1 reduced OPF (N-1 ROPF). The proposed AHGNN-enabled N-1 ROPF achieves a remarkable reduction in computing time while retaining the solution quality. Several variations of GNN-based ML models are also implemented as benchmarks to demonstrate the effectiveness of the proposed AHGNN approach. Case studies show that the proposed AHGNN and the associated N-1 ROPF are highly effective in reducing computation time while preserving solution quality, highlighting the promising potential of ML, particularly GNNs, in enhancing power system operations.

Analysis of Weather and Time Features in Machine Learning-aided ERCOT Load Forecasting

Oct 13, 2023

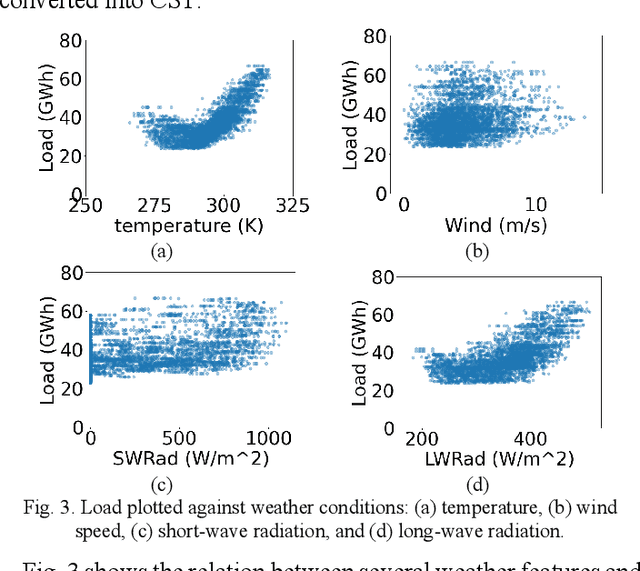

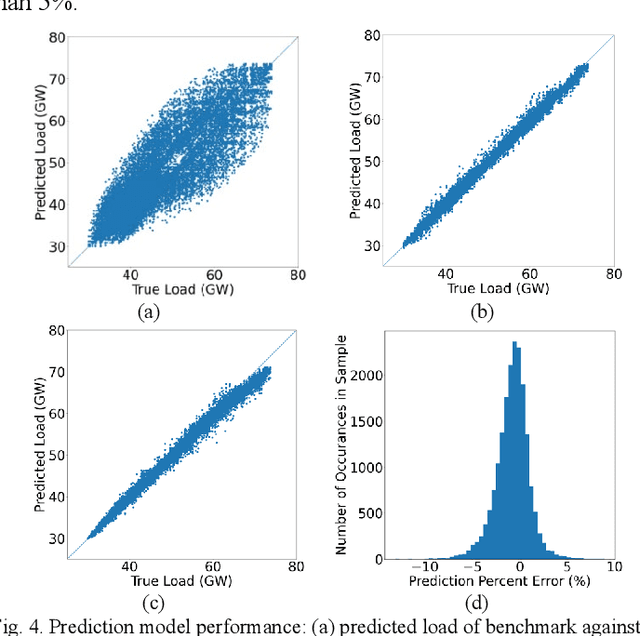

Abstract:Accurate load forecasting is critical for efficient and reliable operations of the electric power system. A large part of electricity consumption is affected by weather conditions, making weather information an important determinant of electricity usage. Personal appliances and industry equipment also contribute significantly to electricity demand with temporal patterns, making time a useful factor to consider in load forecasting. This work develops several machine learning (ML) models that take various time and weather information as part of the input features to predict the short-term system-wide total load. Ablation studies were also performed to investigate and compare the impacts of different weather factors on the prediction accuracy. Actual load and historical weather data for the same region were processed and then used to train the ML models. It is interesting to observe that using all available features, each of which may be correlated to the load, is unlikely to achieve the best forecasting performance; features with redundancy may even decrease the inference capabilities of ML models. This indicates the importance of feature selection for ML models. Overall, case studies demonstrated the effectiveness of ML models trained with different weather and time input features for ERCOT load forecasting.

Linearization of ReLU Activation Function for Neural Network-Embedded Optimization: Optimal Day-Ahead Energy Scheduling

Oct 03, 2023

Abstract:Neural networks have been widely applied in the power system area. They can be used to better predict input information and to model system performance with increased accuracy. In some applications, such as microgrid day-ahead energy scheduling with a neural network-based battery degradation model, the input features of the trained learning model are variables to be solved in optimization models that enforce limits on the output of the same learning model. This creates a neural network-embedded optimization problem; the nonlinear activation functions in the neural network make such problems extremely hard, if not impossible, to solve. To address this emerging challenge, this paper investigates different methods for linearizing the nonlinear activation functions, with a particular focus on the widely used rectified linear unit (ReLU) function. Four linearization methods tailored for the ReLU activation function are developed, analyzed, and compared. Each method employs a set of linear constraints to replace the ReLU function, effectively linearizing the optimization problem and overcoming the computational challenges associated with the nonlinearity of the neural network model. These proposed linearization methods provide valuable tools for effectively solving optimization problems that integrate neural network models with ReLU activation functions.
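As a point of reference for the kind of constraint set described above, the following is the standard big-M encoding of a single ReLU unit $y = \max(0, x)$. It is a textbook formulation given purely as an illustration; the abstract does not say whether it is one of the paper's four methods. The bounds $L$ and $U$ on the pre-activation $x$ and the binary variable $z$ are assumed symbols, not taken from the source.

```latex
\begin{aligned}
& y \ge 0, \qquad y \ge x, \\
& y \le x - L\,(1 - z), \\
& y \le U z, \\
& z \in \{0, 1\}, \qquad L \le x \le U, \qquad L < 0 < U .
\end{aligned}
```

With $z = 1$ the constraints force $y = x \ge 0$; with $z = 0$ they force $y = 0$ and $x \le 0$. The tightness of $L$ and $U$ strongly affects how well a mixed-integer solver handles such a model.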

Graph Neural Network-based Power Flow Model

Jul 05, 2023

Abstract:Power flow analysis plays a crucial role in examining the electricity flow within a power system network. By performing power flow calculations, the system's steady-state variables, including the voltage magnitude and phase angle at each bus and the active/reactive power flow across branches, can be determined. While the widely used DC power flow model offers speed and robustness, it may yield inaccurate line flow results for certain transmission lines. This issue becomes more critical when dealing with renewable energy sources such as wind farms, which are often located far from the main grid. Obtaining precise line flow results for these critical lines is vital for subsequent operations. To address these challenges, data-driven approaches leverage historical grid profiles. In this paper, a graph neural network (GNN) model is trained using historical power system data to predict power flow outcomes. The GNN model enables rapid estimation of line flows. A comprehensive performance analysis is conducted, comparing the proposed GNN-based power flow model with the traditional DC power flow model, as well as with a deep neural network (DNN) and a convolutional neural network (CNN). The results on test systems demonstrate that the proposed GNN-based power flow model provides more accurate solutions with high efficiency compared to the benchmark models.
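For readers unfamiliar with the DC power flow baseline mentioned above, here is a minimal sketch in plain Python. It uses the usual DC approximation (flat voltage magnitudes, lossless lines, flow equal to angle difference divided by reactance). The three-bus network, the reactances, and the injections are invented for illustration and do not come from the paper.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def dc_power_flow(n_bus, lines, injections, slack=0):
    """lines: (from_bus, to_bus, reactance_pu); injections: net P per bus (p.u.)."""
    # Build the bus susceptance matrix B from the line reactances.
    b_mat = [[0.0] * n_bus for _ in range(n_bus)]
    for i, j, x in lines:
        b_mat[i][i] += 1.0 / x
        b_mat[j][j] += 1.0 / x
        b_mat[i][j] -= 1.0 / x
        b_mat[j][i] -= 1.0 / x
    # Drop the slack bus row and column, then solve B_red * theta = P_red.
    keep = [k for k in range(n_bus) if k != slack]
    b_red = [[b_mat[r][c] for c in keep] for r in keep]
    p_red = [injections[k] for k in keep]
    theta = [0.0] * n_bus  # slack angle is fixed at zero
    for k, t in zip(keep, solve_linear(b_red, p_red)):
        theta[k] = t
    # Line flow = angle difference divided by reactance.
    flows = [(theta[i] - theta[j]) / x for i, j, x in lines]
    return theta, flows


# Toy system: generator at bus 0 (slack), loads of 0.5 and 1.0 p.u. at buses 1 and 2.
lines = [(0, 1, 0.1), (0, 2, 0.1), (1, 2, 0.1)]
injections = [1.5, -0.5, -1.0]
theta, flows = dc_power_flow(3, lines, injections)
```

Because the model is a single linear solve, it is fast and robust, but it ignores losses, reactive power, and voltage magnitude variations, which is the accuracy gap a learned model would aim to close.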

Spatio-Temporal Deep Learning-Assisted Reduced Security-Constrained Unit Commitment

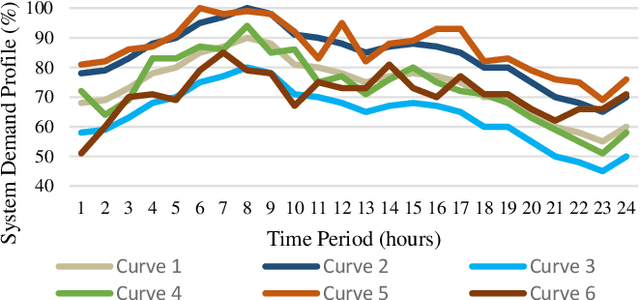

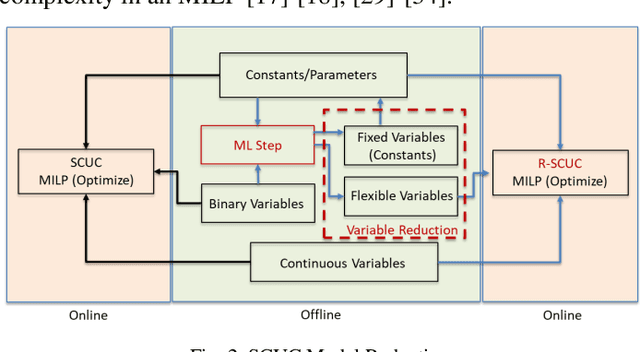

Jun 02, 2023

Abstract:Security-constrained unit commitment (SCUC) is a computationally complex process utilized in power system day-ahead scheduling and market clearing. SCUC is run daily and requires state-of-the-art algorithms to speed up the process. The constraints and data associated with SCUC are both geographically and temporally correlated to ensure the reliability of the solution, which further increases the complexity. In this paper, an advanced machine learning (ML) model is used to study the patterns in power system historical data, inherently considering both spatial and temporal (ST) correlations in constraints. The ST-correlated ML model learns spatial correlation through graph neural networks (GNN), whereas temporal sequences are studied using long short-term memory (LSTM) networks. The proposed approach is validated on several test systems, namely the IEEE 24-Bus system, IEEE 73-Bus system, IEEE 118-Bus system, and synthetic South Carolina (SC) 500-Bus system. Moreover, B-θ and power transfer distribution factor (PTDF) based SCUC formulations were considered in this research. Simulation results demonstrate that the ST approach can effectively predict the generator commitment schedule and classify critical and non-critical lines in the system, which are utilized for model reduction of SCUC to obtain computational enhancement without loss in solution quality.

Short-Term Aggregated Residential Load Forecasting using BiLSTM and CNN-BiLSTM

Feb 10, 2023

Abstract:Higher penetration of renewable and smart home technologies at the residential level challenges grid stability, as utility-customer interactions add complexity to power system operations. In response, short-term residential load forecasting has become a growing area of focus. However, forecasting at the residential level is challenging due to the higher uncertainties involved. Recently, deep neural networks have been leveraged to address this issue. This paper investigates the capabilities of a bidirectional long short-term memory (BiLSTM) and a convolutional neural network-based BiLSTM (CNN-BiLSTM) to provide day-ahead (24 hr.) forecasts at an hourly resolution while minimizing the root mean squared error (RMSE) between the actual and predicted load demand. Using a publicly available dataset consisting of 38 homes, the BiLSTM and CNN-BiLSTM models are trained to forecast the aggregated active power demand for each hour within a 24 hr. span, given the previous 24 hr. load data. The BiLSTM model achieved the lowest RMSE of 1.4842 for the overall daily forecast. In addition, standard LSTM and CNN-LSTM models are trained and compared with the BiLSTM architecture. The RMSE of BiLSTM is 5.60%, 2.85% and 2.60% lower than that of the LSTM, CNN-LSTM and CNN-BiLSTM models respectively. The source code of this work is available at https://github.com/Varat7v2/STLF-BiLSTM-CNNBiLSTM.git.

* This article has been accepted for publication in the 2022 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT). This preprint is for personal use, that is, solely for the purpose of research, but republication/redistribution requires IEEE permission. Please check the IEEE website for more information.
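The RMSE metric and the "percent lower" comparisons quoted in the abstract above follow the usual definitions, which can be sketched as below. The sample numbers are made up to show the arithmetic and are not the paper's data; the helper `relative_reduction` is a hypothetical name.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    if len(actual) != len(predicted):
        raise ValueError("sequences must have the same length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline, candidate):
    """Percentage by which `candidate` is lower than `baseline`."""
    return 100.0 * (baseline - candidate) / baseline


# Invented hourly loads, for illustration only.
actual = [3.0, 4.0, 5.0, 6.0]
predicted = [2.0, 4.0, 6.0, 6.0]
error = rmse(actual, predicted)

# A model with RMSE 1.5 is 25% lower than a baseline with RMSE 2.0.
gain = relative_reduction(2.0, 1.5)
```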

Feasibility Layer Aided Machine Learning Approach for Day-Ahead Operations

Aug 13, 2022

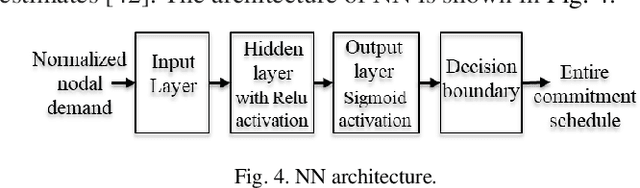

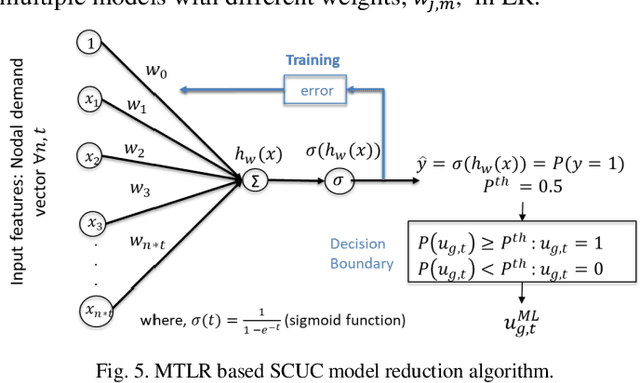

Abstract:Day-ahead operations involve a complex and computationally intensive optimization process to determine the generator commitment schedule and dispatch. The optimization process is a mixed-integer linear program (MILP), also known as security-constrained unit commitment (SCUC). Independent system operators (ISOs) run SCUC daily and require state-of-the-art algorithms to speed up the process. Existing patterns in historical information can be leveraged for model reduction of SCUC, which can provide significant time savings. In this paper, machine learning (ML) based classification approaches, namely logistic regression, neural networks, random forest and K-nearest neighbor, were studied for model reduction of SCUC. The ML was then aided with a feasibility layer (FL) and a post-process technique to ensure high-quality solutions. The proposed approach is validated on several test systems, namely the IEEE 24-Bus system, IEEE 73-Bus system, IEEE 118-Bus system, 500-Bus system, and Polish 2383-Bus system. Moreover, model reduction of a stochastic SCUC (SSCUC) was demonstrated utilizing a modified IEEE 24-Bus system with renewable generation. Simulation results demonstrate a high training accuracy in identifying the commitment schedule, while the FL and post-process ensure that ML predictions do not lead to infeasible solutions, with minimal loss in solution quality.

Reduced Optimal Power Flow Using Graph Neural Network

Jun 27, 2022

Abstract:Optimal power flow (OPF) problems are formulated and solved for power system operations, especially for determining generation dispatch points in real time. For large and complex power system networks with large numbers of variables and constraints, finding the optimal solution for real-time OPF in a timely manner requires a massive amount of computing power. This paper presents a new method to reduce the number of constraints in the original OPF problem using a graph neural network (GNN). A GNN is a machine learning model that utilizes features from nodes, edges, and network topology to maximize its performance. In this paper, we propose a GNN model to predict which lines would be heavily loaded or congested under given load profiles and generation capacities. Only these critical lines are monitored in the OPF problem, creating a reduced OPF (ROPF) problem. Significant savings in computing time are expected from the proposed ROPF model. A comprehensive analysis of predictions from the GNN model was also made. It is concluded that applying a GNN for ROPF is able to reduce computing time while retaining solution quality.
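The notion of "heavily loaded or congested" lines used above can be made concrete with a small sketch that flags lines by loading ratio. The 90% threshold, the function name `critical_lines`, and the sample flows are assumptions for illustration; the abstract does not state how the paper defines or labels critical lines.

```python
def critical_lines(flows, limits, threshold=0.9):
    """Return indices of lines whose loading |flow| / limit meets the threshold.

    flows:     line flows in MW (sign indicates direction).
    limits:    thermal limits in MW, same order as `flows`.
    threshold: assumed loading ratio above which a line counts as critical.
    """
    return [
        k
        for k, (flow, limit) in enumerate(zip(flows, limits))
        if abs(flow) / limit >= threshold
    ]


# Invented flows on four lines, each with a 100 MW limit.
flows = [80.0, -95.0, 30.0, 100.0]
limits = [100.0, 100.0, 100.0, 100.0]
monitored = critical_lines(flows, limits)
```

In a reduced OPF, only the flow-limit constraints for the lines in `monitored` would be kept, while the remaining line constraints are dropped from the model.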
