Xingming Sun

Exposing Deepfake Face Forgeries with Guided Residuals

May 02, 2022

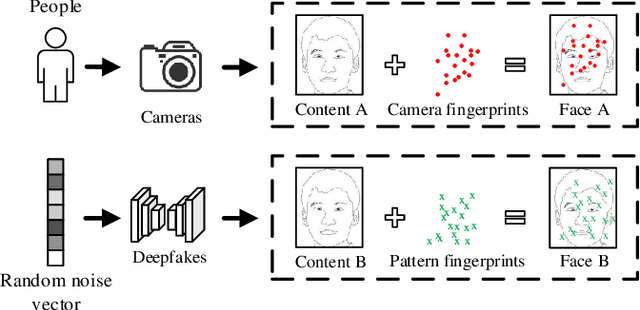

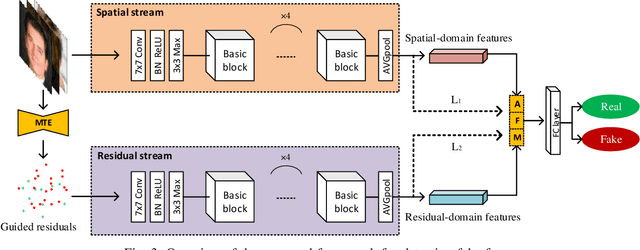

Abstract: Residual-domain features are very useful for Deepfake detection because they suppress irrelevant content features and preserve key manipulation traces. However, inappropriate residual prediction degrades detection accuracy. In addition, residual-domain features are easily affected by image operations such as compression. Most existing works exploit either spatial-domain features or residual-domain features, neglecting that the two types of features are mutually correlated. In this paper, we propose a guided residuals network, namely GRnet, which fuses spatial-domain and residual-domain features in a mutually reinforcing way to expose face images generated by Deepfake. Unlike existing prediction-based residual extraction methods, we propose a manipulation trace extractor (MTE) that directly removes the content features and preserves manipulation traces. MTE is a fine-grained method that avoids the potential bias caused by inappropriate prediction. Moreover, an attention fusion mechanism (AFM) is designed to selectively emphasize feature channel maps and adaptively allocate the weights for the two streams. Experimental results show that the proposed GRnet outperforms state-of-the-art works on four public fake face datasets: HFF, FaceForensics++, DFDC and Celeb-DF. In particular, GRnet achieves an average accuracy of 97.72% on the HFF dataset, which is at least 5.25% higher than existing works.
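The abstract does not spell out how the AFM is built, so the following is only a rough sketch of the general idea it describes: gate each feature channel, then weight the two streams adaptively. The function `fuse_streams`, the weight matrices `w_channel` and `w_stream`, and the squeeze-and-gate design are all assumptions made for illustration, not the authors' architecture.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()

def fuse_streams(spatial, residual, w_channel, w_stream):
    """Fuse two (C, H, W) feature maps with channel and stream attention.

    w_channel: (2C, 2C) hypothetical weights producing one gate per channel.
    w_stream:  (2C, 2)  hypothetical weights producing one score per stream.
    """
    c = spatial.shape[0]
    # Squeeze: global average pooling yields one descriptor per channel.
    desc = np.concatenate([spatial.mean(axis=(1, 2)),
                           residual.mean(axis=(1, 2))])
    # Channel attention: a gate in (0, 1) for every channel of both streams.
    gates = sigmoid(desc @ w_channel)
    spatial_gated = spatial * gates[:c, None, None]
    residual_gated = residual * gates[c:, None, None]
    # Stream attention: two positive weights that sum to 1.
    alpha = softmax(desc @ w_stream)
    fused = alpha[0] * spatial_gated + alpha[1] * residual_gated
    return fused, alpha

# Toy inputs standing in for the outputs of the two streams.
rng = np.random.default_rng(0)
C, H, W = 4, 8, 8
spatial = rng.standard_normal((C, H, W))
residual = rng.standard_normal((C, H, W))
w_channel = rng.standard_normal((2 * C, 2 * C)) * 0.1
w_stream = rng.standard_normal((2 * C, 2)) * 0.1

fused, alpha = fuse_streams(spatial, residual, w_channel, w_stream)
```

In a trained network the two weight matrices would be learned jointly with the rest of the model; here they are random, so the sketch only shows the data flow.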

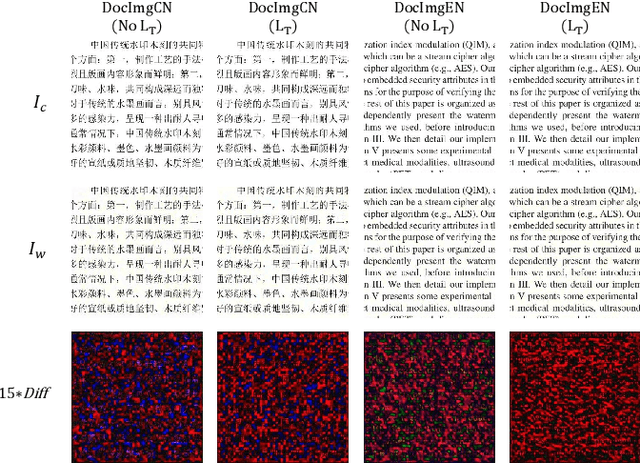

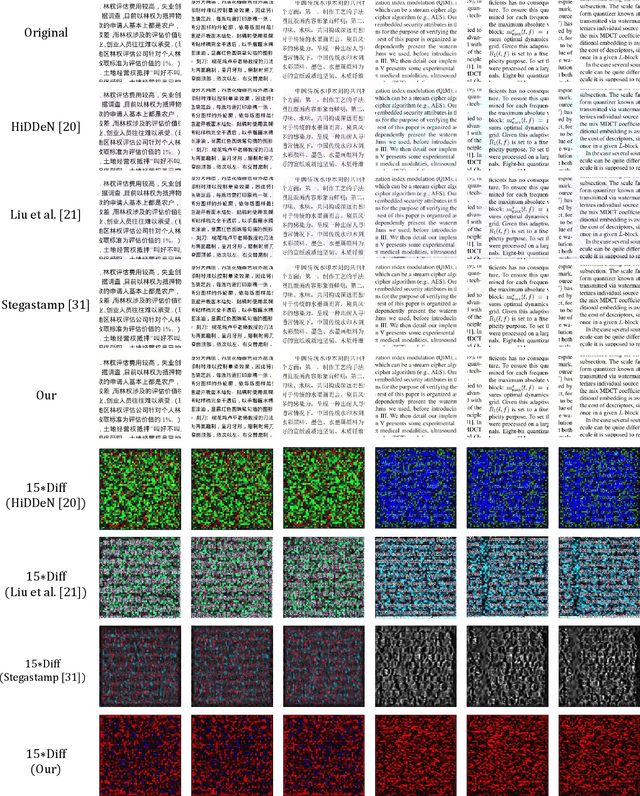

A Robust Document Image Watermarking Scheme using Deep Neural Network

Feb 26, 2022

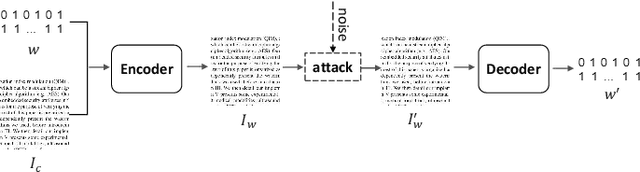

Abstract: Watermarking is an important copyright protection technology that embeds identity information into a carrier imperceptibly. The identity can then be extracted from the watermarked carrier to prove copyright, even after the carrier has suffered various attacks. Most existing watermarking technologies take natural images as carriers. Unlike natural images, document images are not rich in color and texture, and thus have less redundant information to carry watermarks. This paper proposes an end-to-end document image watermarking scheme using a deep neural network. Specifically, an encoder and a decoder are designed to embed and extract the watermark. A noise layer is added to simulate the various attacks that could be encountered in reality, such as Cropout, Dropout, Gaussian blur, Gaussian noise, Resize, and JPEG compression. A text-sensitive loss function is designed to limit the embedding modification on characters. An embedding strength adjustment strategy is proposed to improve the quality of the watermarked image with little loss of extraction accuracy. Experimental results show that the proposed document image watermarking technology outperforms three state-of-the-art methods in terms of robustness and image quality.
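A noise layer of this kind can be sketched as a function that applies one randomly chosen attack to the encoded image before it reaches the decoder. The sketch below covers only three of the listed attacks, and it assumes a common convention in learned watermarking in which Dropout and Cropout mix encoded pixels with cover pixels; the abstract does not define them, and all function names and parameter values here are illustrative.

```python
import numpy as np

def gaussian_noise(encoded, cover, rng, sigma=0.05):
    # Additive noise, clipped back to the valid [0, 1] intensity range.
    noisy = encoded + rng.normal(0.0, sigma, encoded.shape)
    return np.clip(noisy, 0.0, 1.0)

def dropout(encoded, cover, rng, keep=0.7):
    # Keep each encoded pixel with probability `keep`, else use the cover pixel.
    mask = rng.random(encoded.shape) < keep
    return np.where(mask, encoded, cover)

def cropout(encoded, cover, rng, frac=0.5):
    # Keep one random rectangle of the encoded image; the rest reverts to cover.
    h, w = encoded.shape
    ch, cw = max(1, int(h * frac)), max(1, int(w * frac))
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    out = cover.copy()
    out[top:top + ch, left:left + cw] = encoded[top:top + ch, left:left + cw]
    return out

ATTACKS = [gaussian_noise, dropout, cropout]

def noise_layer(encoded, cover, rng):
    # Pick one attack at random, as a training-time augmentation would.
    attack = ATTACKS[rng.integers(len(ATTACKS))]
    return attack(encoded, cover, rng)

# Toy data: a white "page" and a slightly darkened watermarked version.
rng = np.random.default_rng(0)
cover = np.ones((16, 16))
encoded = cover - 0.1 * rng.random((16, 16))

attacked = noise_layer(encoded, cover, rng)
dropped = dropout(encoded, cover, rng)
cropped = cropout(encoded, cover, rng)
```

Gaussian blur, Resize, and JPEG compression are omitted to keep the sketch short; in end-to-end training the JPEG step in particular needs a differentiable approximation.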

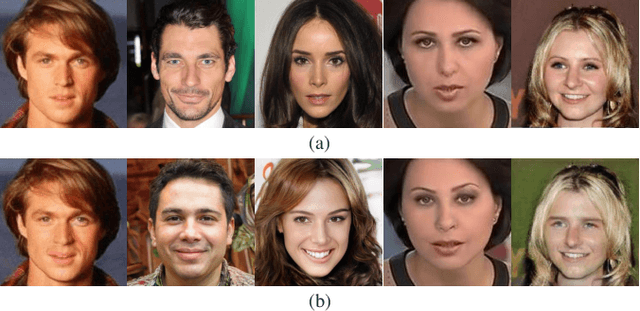

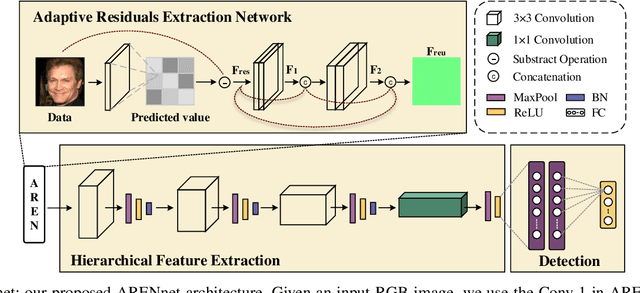

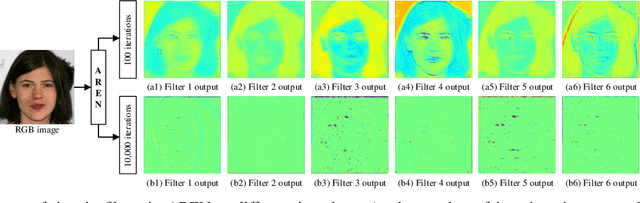

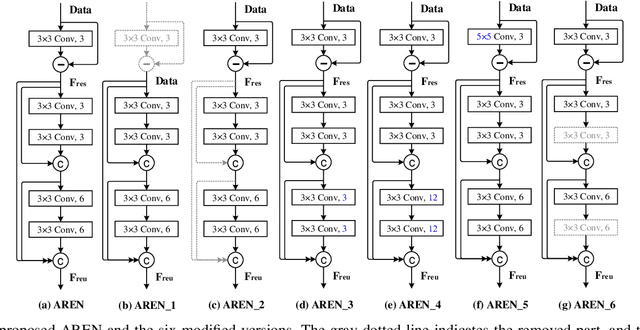

Fake Face Detection via Adaptive Residuals Extraction Network

May 11, 2020

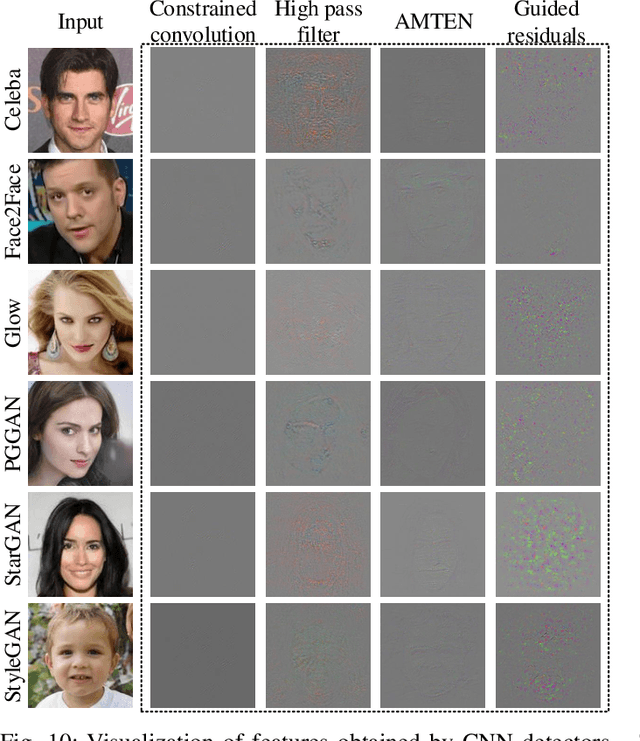

Abstract: With the proliferation of face image manipulation (FIM) techniques such as Face2Face and Deepfake, more fake face images are spreading over the internet, which seriously undermines public confidence. Face image forgery detection has made considerable progress in exposing specific FIM techniques, but a robust fake face detector that can expose face image forgeries under complex scenarios is still lacking. Due to its relatively fixed structure, a convolutional neural network (CNN) tends to learn image content representations. For image forensics tasks, however, a CNN should learn subtle tampering artifacts. We propose an adaptive residuals extraction network (AREN), which serves as a pre-processing step to suppress image content and highlight tampering artifacts. AREN exploits an adaptive convolution layer to predict image residuals, which are reused in subsequent layers to maximize manipulation artifacts by updating weights during the back-propagation pass. A fake face detector, namely ARENnet, is constructed by integrating AREN with a CNN. Experimental results show that the proposed AREN achieves the desired pre-processing effect. When detecting fake face images generated by various FIM techniques, ARENnet achieves an average accuracy of up to 98.52%, which outperforms state-of-the-art works. When detecting face images with unknown post-processing operations, the detector also achieves an average accuracy of 95.17%.
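To illustrate what predicting an image residual means, here is a minimal sketch of a generic prediction-error filter: each pixel is predicted from its neighbours and the prediction is compared with the actual value. The constraint used (centre weight fixed at -1, remaining weights summing to 1) is a well-known form of constrained convolution in image forensics and is an assumption here; the abstract does not state that AREN uses this exact constraint. The helper names `constrain` and `residual` are hypothetical.

```python
import numpy as np

def constrain(kernel):
    """Project a k x k kernel onto prediction-error form.

    Centre weight becomes -1 and the other weights are rescaled to sum to 1.
    Assumes the off-centre weights do not sum to zero.
    """
    k = kernel.astype(float).copy()
    c = k.shape[0] // 2
    k[c, c] = 0.0
    k /= k.sum()
    k[c, c] = -1.0
    return k

def residual(image, kernel):
    """Valid-mode cross-correlation: neighbour prediction minus centre pixel."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * image[i:i + oh, j:j + ow]
    return out

kernel = constrain(np.ones((3, 3)))

# Smooth "content": a linear ramp, which neighbours predict exactly.
ys, xs = np.mgrid[0:10, 0:10]
clean = 0.01 * xs + 0.02 * ys
r_clean = residual(clean, kernel)

# A single-pixel perturbation stands in for a tampering artifact.
tampered = clean.copy()
tampered[5, 5] += 0.2
r_tampered = residual(tampered, kernel)
peak = np.unravel_index(np.abs(r_tampered).argmax(), r_tampered.shape)
```

On the ramp the residual is numerically zero, while the perturbed pixel produces the largest response, at output position (4, 4) because valid-mode filtering trims one pixel from each border. In an adaptive layer the off-centre weights would be learned by back-propagation and re-projected with something like `constrain` after each update.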
