Xingjian Cao

PerFED-GAN: Personalized Federated Learning via Generative Adversarial Networks

Feb 18, 2022

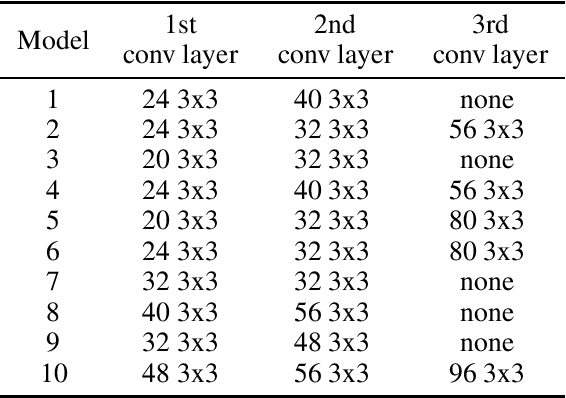

Abstract: Federated learning is gaining popularity as a distributed machine learning method for deploying AI-dependent IoT applications while protecting client data privacy and security. Because clients differ, a single global model may not perform well on all of them, so personalized federated learning, which trains a model for each client that better suits its individual needs, has become a research hotspot. Most personalized federated learning research, however, focuses on data heterogeneity and ignores the need for model architecture heterogeneity. Most existing federated learning methods impose the same model architecture on every participating client, which fits poorly with each client's individual model and local data distribution requirements and also increases the risk of client model leakage. This paper proposes a federated learning method based on co-training and generative adversarial networks (GANs) that allows each client to design its own model and participate in federated training independently, without sharing any model architecture or parameter information with other clients or a central server. In our experiments, the proposed method outperforms existing methods in mean test accuracy by 42% when the clients' model architectures and data distributions vary significantly.

CoFED: Cross-silo Heterogeneous Federated Multi-task Learning via Co-training

Feb 17, 2022

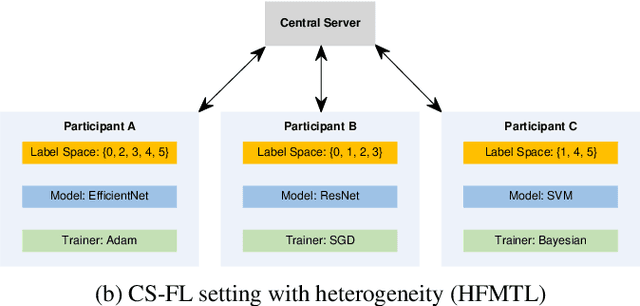

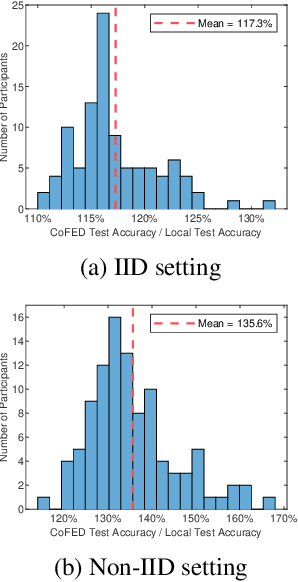

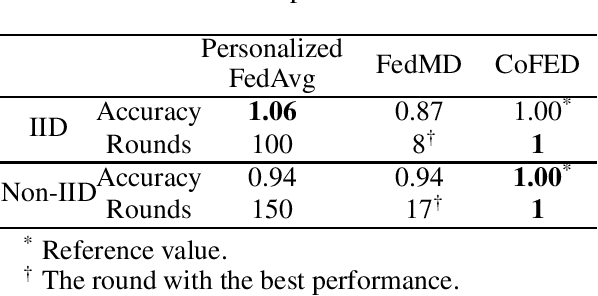

Abstract: Federated Learning (FL) is a machine learning technique that enables participants to train high-quality models collaboratively without exchanging their private data. Participants in cross-silo FL settings are independent organizations with different task needs, and they are concerned not only with data privacy but also with independently training their own unique models because of intellectual property concerns. Most existing FL schemes cannot handle these scenarios. In this paper, we propose a communication-efficient FL scheme, CoFED, based on pseudo-labeling unlabeled data, as in co-training. To the best of our knowledge, it is the first FL scheme compatible with heterogeneous tasks, heterogeneous models, and heterogeneous training algorithms simultaneously. Experimental results show that CoFED achieves better performance with a lower communication cost. In particular, for non-IID settings and heterogeneous models, the proposed method improves performance by 35%.
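To make the idea of co-training-style pseudo-labeling more concrete, here is a minimal sketch and not the CoFED algorithm itself. It assumes each participant predicts labels for the same shared unlabeled samples and that a label is accepted as a pseudo-label only when enough participants agree. The function name `aggregate_pseudo_labels` and the `min_agreement` threshold are hypothetical and do not come from the paper.

```python
from collections import Counter

def aggregate_pseudo_labels(client_predictions, min_agreement=0.6):
    """Majority-vote pseudo-labeling over a shared unlabeled set (illustrative only).

    client_predictions: one list of predicted labels per client, all aligned
    to the same unlabeled samples. Only predictions are exchanged, so clients
    may use different model architectures and training algorithms.
    Returns a dict mapping sample index -> accepted pseudo-label.
    """
    n_clients = len(client_predictions)
    n_samples = len(client_predictions[0])
    pseudo_labels = {}
    for i in range(n_samples):
        # Count how many clients voted for each label on sample i.
        votes = Counter(preds[i] for preds in client_predictions)
        label, count = votes.most_common(1)[0]
        # Keep the sample only if the winning label has enough support.
        if count / n_clients >= min_agreement:
            pseudo_labels[i] = label
    return pseudo_labels

# Hypothetical predictions from three clients on four unlabeled samples.
preds = [
    [0, 1, 2, 1],
    [0, 1, 0, 2],
    [0, 2, 2, 0],
]
print(aggregate_pseudo_labels(preds, min_agreement=0.6))
```

In this sketch the last sample is discarded because no label reaches the agreement threshold; each client could then continue training locally on the accepted pseudo-labeled samples alongside its private data.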
