CoFED: Cross-silo Heterogeneous Federated Multi-task Learning via Co-training

Feb 17, 2022

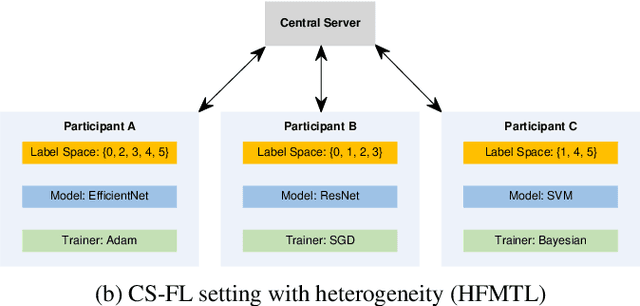

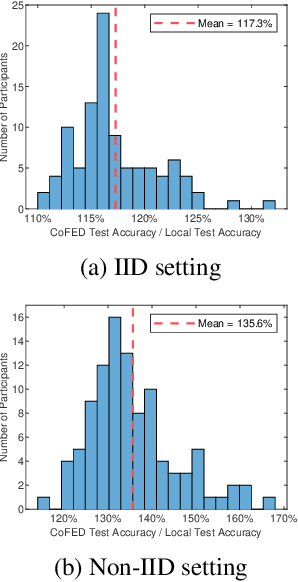

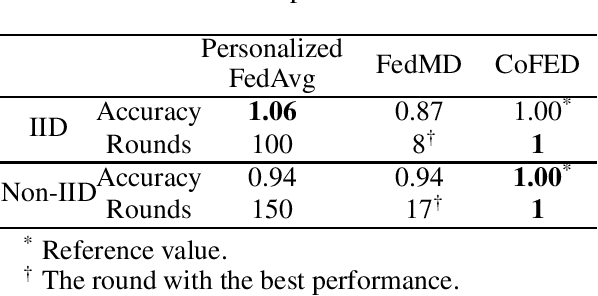

Federated Learning (FL) is a machine learning technique that enables participants to collaboratively train high-quality models without exchanging their private data. Participants in cross-silo FL settings are independent organizations with different task needs, and they are concerned not only with data privacy but also with independently training their own unique models for intellectual property reasons. Most existing FL schemes cannot handle these scenarios. In this paper, we propose a communication-efficient FL scheme, CoFED, based on pseudo-labeling unlabeled data in a manner similar to co-training. To the best of our knowledge, it is the first FL scheme that is simultaneously compatible with heterogeneous tasks, heterogeneous models, and heterogeneous training algorithms. Experimental results show that CoFED achieves better performance with a lower communication cost. In particular, in non-IID settings with heterogeneous models, the proposed method improves performance by 35%.
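To make the idea of co-training-style pseudo-labeling more concrete, the following is a minimal sketch, not the algorithm from the paper. It assumes that each participant predicts labels for a shared unlabeled dataset with its own local model, and that only these predictions (not model parameters or private data) are combined. The abstract does not specify an aggregation rule, so majority voting with an agreement threshold is an assumption, and the names `aggregate_pseudo_labels` and `min_agreement` are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def aggregate_pseudo_labels(
    predictions: Sequence[Sequence[int]],
    min_agreement: float = 0.6,
) -> Dict[int, int]:
    """Combine per-participant predictions on shared unlabeled samples.

    predictions[k][i] is the label that participant k's local model
    assigns to unlabeled sample i. A sample receives a pseudo-label only
    if the fraction of participants voting for the most common label is
    at least min_agreement (an assumed rule, not taken from the paper).
    """
    if not predictions:
        return {}
    n_participants = len(predictions)
    n_samples = len(predictions[0])
    pseudo_labels: Dict[int, int] = {}
    for i in range(n_samples):
        votes = Counter(participant[i] for participant in predictions)
        label, count = votes.most_common(1)[0]
        if count / n_participants >= min_agreement:
            pseudo_labels[i] = label
    return pseudo_labels


# Three participants with different local models label four shared samples.
predictions = [
    [0, 1, 2, 1],
    [0, 1, 0, 2],
    [0, 2, 1, 0],
]
pseudo_labels = aggregate_pseudo_labels(predictions, min_agreement=0.6)
print(pseudo_labels)  # {0: 0, 1: 1}
```

In this sketch, each participant could then add the agreed pseudo-labeled samples to its own training set and retrain with whatever model and training algorithm it prefers. Since only label predictions are exchanged, the participants' models need not share an architecture.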
