Ximing Qiao

Learning and Compositionality: a Unification Attempt via Connectionist Probabilistic Programming

Aug 26, 2022

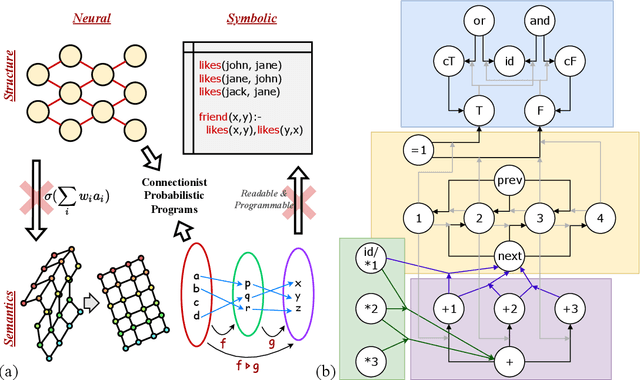

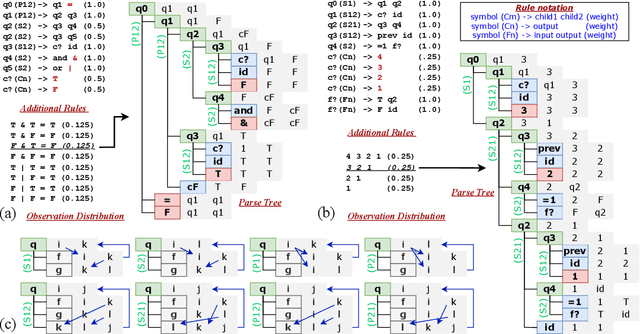

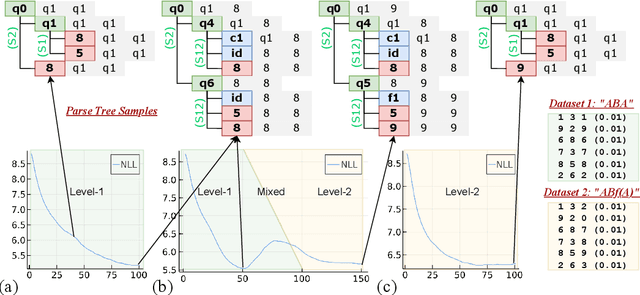

Abstract: We consider learning and compositionality as the key mechanisms towards simulating human-like intelligence. While each mechanism is successfully achieved by neural networks and symbolic AI, respectively, it is the combination of the two mechanisms that makes human-like intelligence possible. Despite the numerous attempts at building hybrid neural-symbolic systems, we argue that our true goal should be unifying learning and compositionality, the core mechanisms, instead of neural and symbolic methods, the surface approaches to achieve them. In this work, we review and analyze the strengths and weaknesses of neural and symbolic methods by separating their forms and meanings (structures and semantics), and propose Connectionist Probabilistic Programs (CPPs), a framework that connects connectionist structures (for learning) and probabilistic program semantics (for compositionality). Under the framework, we design a CPP extension for small-scale sequence modeling and provide a learning algorithm based on Bayesian inference. Although challenges exist in learning complex patterns without supervision, our early results demonstrate CPP's successful extraction of concepts and relations from raw sequential data, an initial step towards compositional learning.

On Provable Backdoor Defense in Collaborative Learning

Jan 19, 2021

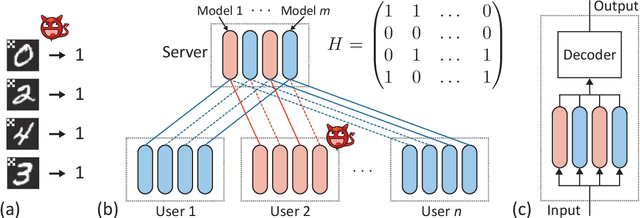

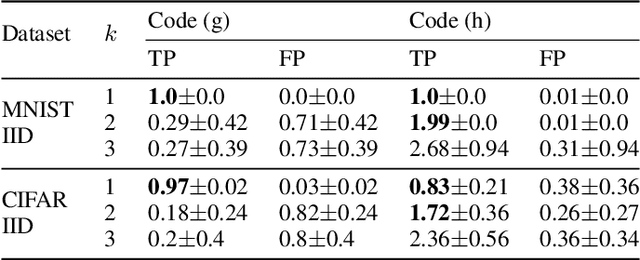

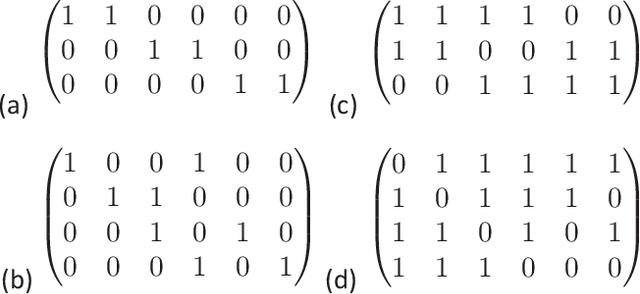

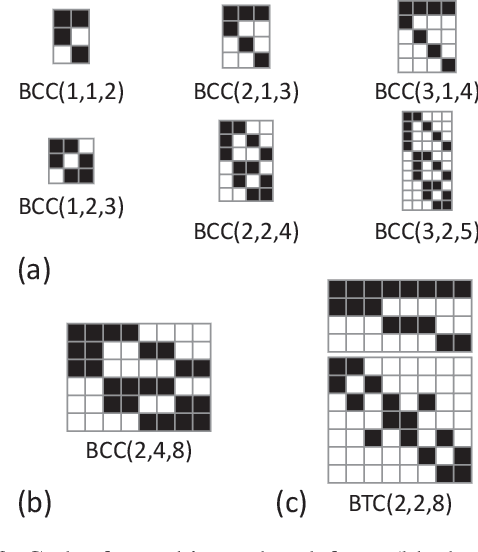

Abstract: As collaborative learning allows joint training of a model using multiple sources of data, security has been a central concern. Malicious users can upload poisoned data to prevent the model's convergence or inject hidden backdoors. The so-called backdoor attacks are especially difficult to detect since the model behaves normally on standard test data but gives wrong outputs when triggered by certain backdoor keys. Although Byzantine-tolerant training algorithms provide convergence guarantees, provable defense against backdoor attacks remains largely unsolved. Methods based on randomized smoothing can only correct a small number of corrupted pixels or labels; methods based on subset aggregation cause a severe drop in classification accuracy due to low data utilization. We propose a novel framework that generalizes existing subset aggregation methods. The framework shows that the subset selection process, a deciding factor for subset aggregation methods, can be viewed as a code design problem. We derive the theoretical bound on the data utilization ratio and provide an optimal code construction. Experiments on non-IID versions of MNIST and CIFAR-10 show that our method with optimal codes significantly outperforms baselines using non-overlapping partition and random selection. Additionally, integration with existing coding theory results shows that special codes can track the location of the attackers. Such capability provides new countermeasures to backdoor attacks.
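To make the subset aggregation idea concrete, here is a minimal Python sketch of the non-overlapping partition baseline that the abstract mentions, not the paper's code-based construction. It assumes one sub-model is trained per disjoint group of users and that the final prediction is a majority vote over the sub-models. The function names (`partition_users`, `aggregate_votes`, `certified_attackers`) and the round-robin assignment are illustrative assumptions, and the training step is omitted: the per-group predictions are simply given as a list of labels.

```python
from collections import Counter


def partition_users(user_ids, num_groups):
    """Assign users to disjoint groups round-robin (hypothetical scheme)."""
    groups = [[] for _ in range(num_groups)]
    for index, user_id in enumerate(user_ids):
        groups[index % num_groups].append(user_id)
    return groups


def aggregate_votes(votes):
    """Majority vote over per-group predictions.

    Returns the winning label and its margin over the runner-up.
    """
    ranked = Counter(votes).most_common()
    top_label, top_count = ranked[0]
    runner_up_count = ranked[1][1] if len(ranked) > 1 else 0
    return top_label, top_count - runner_up_count


def certified_attackers(margin):
    """Largest number of attackers that cannot change the vote outcome.

    With disjoint groups, one attacker corrupts at most one sub-model,
    so each attacker shrinks the margin by at most 2. The prediction is
    unchanged as long as the margin stays strictly positive.
    """
    return max((margin - 1) // 2, 0)


groups = partition_users(list(range(6)), 3)
label, margin = aggregate_votes([7, 7, 7, 7, 3, 7, 3])
tolerated = certified_attackers(margin)
```

Because each sub-model in this baseline sees only one group's data, the data utilization per model is low, which is the accuracy cost the abstract attributes to subset aggregation methods.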

Defending Neural Backdoors via Generative Distribution Modeling

Nov 06, 2019

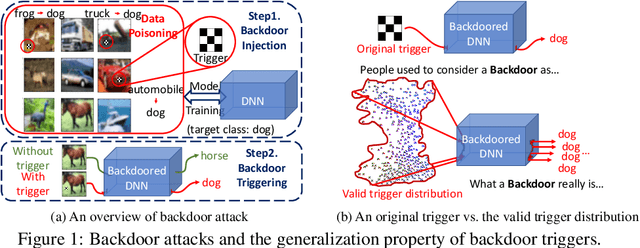

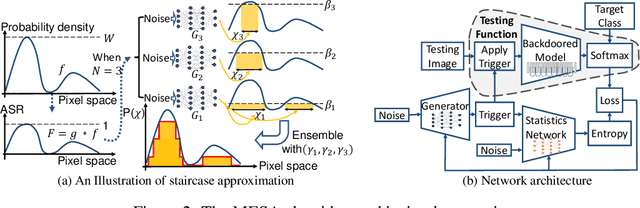

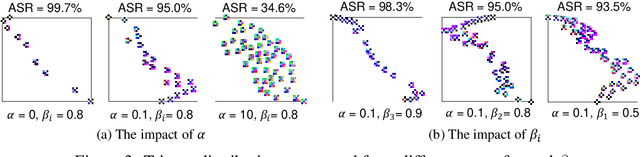

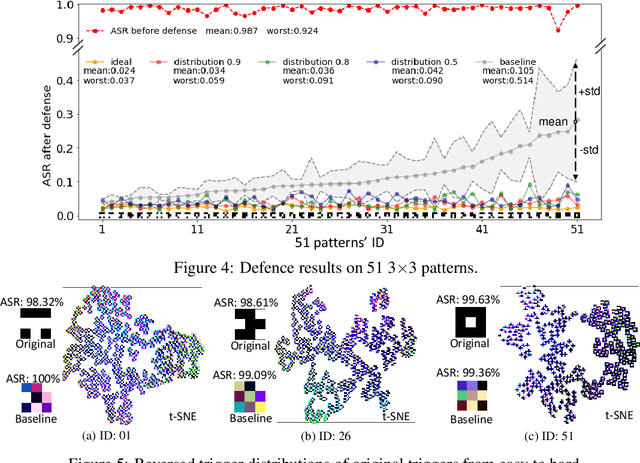

Abstract: Neural backdoor attacks are emerging as a severe security threat to deep learning, while the capability of existing defense methods is limited, especially for complex backdoor triggers. In this work, we explore the space formed by the pixel values of all possible backdoor triggers. An original trigger used by an attacker to build the backdoored model represents only a point in the space. It will then be generalized into a distribution of valid triggers, all of which can influence the backdoored model. Thus, previous methods that model only one point of the trigger distribution are not sufficient. Getting the entire trigger distribution, e.g., via generative modeling, is key to effective defense. However, existing generative modeling techniques for image generation are not applicable to the backdoor scenario as the trigger distribution is completely unknown. In this work, we propose the max-entropy staircase approximator (MESA), an algorithm for high-dimensional sampling-free generative modeling, and use it to recover the trigger distribution. We also develop a defense technique to remove the triggers from the backdoored model. Our experiments on the CIFAR-10/100 datasets demonstrate the effectiveness of MESA in modeling the trigger distribution and the robustness of the proposed defense method.
