Xiaohua Sun

Video Domain Incremental Learning for Human Action Recognition in Home Environments

Dec 22, 2024

Abstract:Recognizing daily human actions in homes is challenging due to the diversity and dynamic changes of unconstrained home environments. This spurs the need to continually adapt to various users and scenes. Fine-tuning current video understanding models on newly encountered domains often leads to catastrophic forgetting, where the models lose their ability to perform well on previously learned scenarios. To address this issue, we formalize the problem of Video Domain Incremental Learning (VDIL), which enables models to learn continually from different domains while maintaining a fixed set of action classes. Existing continual learning research primarily focuses on class-incremental learning, while domain-incremental learning has been largely overlooked in video understanding. In this work, we introduce a novel benchmark of domain-incremental human action recognition for unconstrained home environments. We design three domain split types (user, scene, hybrid) to systematically assess the challenges posed by domain shifts in real-world home settings. Furthermore, we propose a baseline learning strategy based on replay and reservoir sampling, without domain labels, to handle scenarios with limited memory and task agnosticism. Extensive experimental results demonstrate that our simple sampling and replay strategy outperforms most existing continual learning methods across the three proposed benchmarks.
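The abstract names replay with reservoir sampling as the baseline but gives no implementation details. As a rough illustration only, the following is a minimal sketch of a generic reservoir-sampled replay buffer (the classic "Algorithm R"), not the authors' code. The class name `ReservoirReplayBuffer`, its methods, and the `capacity` and `seed` parameters are all assumptions made for this example. The buffer never inspects a domain label, which is what makes this style of replay usable in a task-agnostic setting.

```python
import random
from typing import Any, List, Optional


class ReservoirReplayBuffer:
    """Fixed-size memory filled by reservoir sampling (hypothetical sketch).

    Every item seen so far has the same probability, capacity / num_seen,
    of being in the buffer, regardless of which domain it came from.
    """

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.samples: List[Any] = []
        self.num_seen = 0  # total items offered to the buffer so far
        self._rng = random.Random(seed)

    def add(self, sample: Any) -> None:
        """Offer one item from the stream to the buffer."""
        self.num_seen += 1
        if len(self.samples) < self.capacity:
            # Buffer not full yet: always keep the item.
            self.samples.append(sample)
            return
        # Buffer full: draw a slot index uniformly from [0, num_seen).
        # The new item replaces a stored one only if the index is in range.
        slot = self._rng.randrange(self.num_seen)
        if slot < self.capacity:
            self.samples[slot] = sample

    def sample(self, batch_size: int) -> List[Any]:
        """Draw up to batch_size stored items without replacement."""
        k = min(batch_size, len(self.samples))
        return self._rng.sample(self.samples, k)
```

In a training loop, each incoming clip would be passed to `add`, and each optimization step would mix the current batch with a batch drawn from `sample`. How the paper weights replayed against new data is not stated in the abstract, so that choice is left open here.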

Cocobo: Exploring Large Language Models as the Engine for End-User Robot Programming

Jul 30, 2024

Abstract:End-user development allows everyday users to tailor service robots or applications to their needs. One user-friendly approach is natural language programming. However, it encounters challenges such as an expansive user expression space and limited support for debugging and editing, which restrict its application in end-user programming. The emergence of large language models (LLMs) offers promising avenues for the translation and interpretation between human language instructions and the code executed by robots, but their application in end-user programming systems requires further study. We introduce Cocobo, a natural language programming system with interactive diagrams powered by LLMs. Cocobo employs LLMs to understand users' authoring intentions, generate and explain robot programs, and facilitate the conversion between executable code and flowchart representations. Our user study shows that Cocobo has a low learning curve, enabling even users with zero coding experience to customize robot programs successfully.

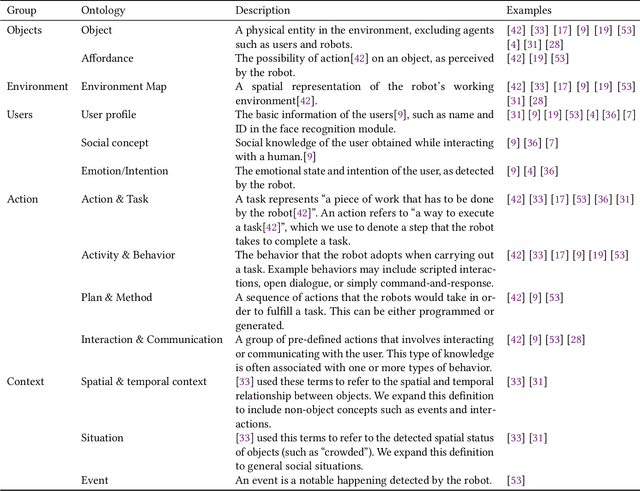

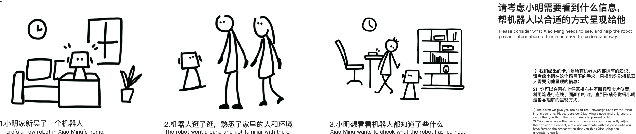

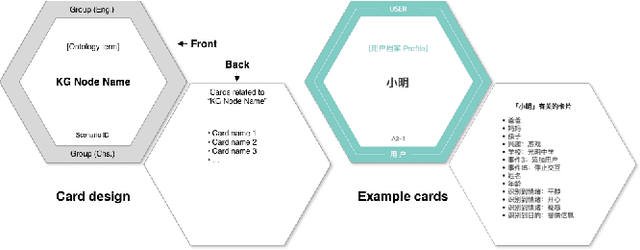

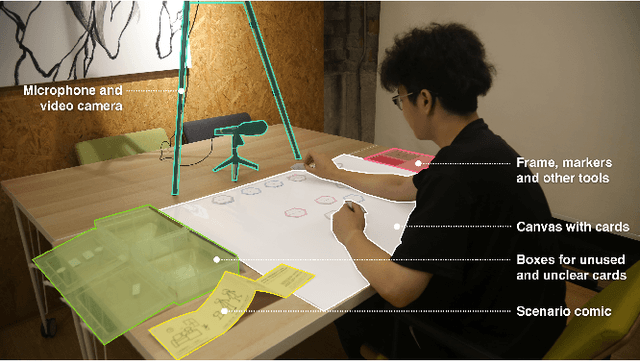

Patterns for Representing Knowledge Graphs to Communicate Situational Knowledge of Service Robots

Jan 26, 2021

Abstract:Service robots are envisioned to adapt to their working environment based on situational knowledge. Recent research has focused on designing visual representations of knowledge graphs for expert users. However, how to generate an understandable interface for non-expert users remains to be explored. In this paper, we use knowledge graphs (KGs) as a common ground for knowledge exchange and develop a pattern library for designing KG interfaces for non-expert users. After identifying the types of robotic situational knowledge from the literature, we present a formative study in which participants used cards to communicate the knowledge for given scenarios. We iteratively coded the results and identified patterns for representing various types of situational knowledge. To derive design recommendations for applying the patterns, we prototyped a lab service robot and conducted Wizard-of-Oz testing. The patterns and recommendations could provide useful guidance in designing knowledge-exchange interfaces for robots.
