Xiaohong Yuan

Visual Heading Prediction for Autonomous Aerial Vehicles

Dec 10, 2025

Abstract: The integration of Unmanned Aerial Vehicles (UAVs) and Unmanned Ground Vehicles (UGVs) is increasingly central to the development of intelligent autonomous systems for applications such as search and rescue, environmental monitoring, and logistics. However, precise coordination between these platforms in real-time scenarios presents major challenges, particularly when external localization infrastructure such as GPS or GNSS is unavailable or degraded [1]. This paper proposes a vision-based, data-driven framework for real-time UAV-UGV integration, with a focus on robust UGV detection and heading angle prediction for navigation and coordination. The system employs a fine-tuned YOLOv5 model to detect UGVs and extract bounding box features, which are then used by a lightweight artificial neural network (ANN) to estimate the UAV's required heading angle. A VICON motion capture system was used to generate ground-truth data during training, resulting in a dataset of over 13,000 annotated images collected in a controlled lab environment. The trained ANN achieves a mean absolute error of 0.1506° and a root mean squared error of 0.1957°, offering accurate heading angle predictions using only monocular camera inputs. Experimental evaluations achieve 95% accuracy in UGV detection. This work contributes a vision-based, infrastructure-independent solution that demonstrates strong potential for deployment in GPS/GNSS-denied environments, supporting reliable multi-agent coordination under realistic dynamic conditions. A demonstration video showcasing the system's real-time performance, including UGV detection, heading angle prediction, and UAV alignment under dynamic conditions, is available at: https://github.com/Kooroshraf/UAV-UGV-Integration
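The abstract above reports heading accuracy as a mean absolute error and a root mean squared error in degrees. As a minimal sketch of how such metrics are commonly computed for angles, the snippet below wraps each prediction error into [-180, 180) before averaging. The function names and sample values are hypothetical and are not taken from the paper, and the abstract does not say whether the authors apply any wrapping.

```python
import math


def wrap_degrees(delta):
    """Map an angle difference in degrees to the interval [-180, 180)."""
    return (delta + 180.0) % 360.0 - 180.0


def heading_errors(predicted, reference):
    """Signed, wrapped error for each predicted/reference heading pair."""
    return [wrap_degrees(p - r) for p, r in zip(predicted, reference)]


def mean_absolute_error(errors):
    """Average magnitude of the errors."""
    return sum(abs(e) for e in errors) / len(errors)


def root_mean_squared_error(errors):
    """Square root of the mean squared error; penalizes large errors more."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    # Hypothetical headings in degrees, for illustration only.
    predicted = [10.2, -45.1, 179.0]
    reference = [10.0, -45.0, -179.0]
    errors = heading_errors(predicted, reference)
    print("MAE  (deg):", mean_absolute_error(errors))
    print("RMSE (deg):", root_mean_squared_error(errors))
```

Wrapping matters near the ±180° boundary: without it, headings of 179° and -179° would count as 358° apart rather than 2°.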

Enhancing Deepfake Detection using SE Block Attention with CNN

Jun 12, 2025

Abstract: In the digital age, deepfakes present a formidable challenge: they use advanced artificial intelligence to create highly convincing manipulated content, undermining information authenticity and security. These sophisticated fabrications surpass traditional detection methods in complexity and realism. To address this issue, we aim to harness cutting-edge deep learning methodologies to engineer an innovative deepfake detection model. However, most models designed for deepfake detection are large, causing heavy storage and memory consumption. In this research, we propose a lightweight convolutional neural network (CNN) with squeeze-and-excitation (SE) block attention for deepfake detection. The SE block performs dynamic channel-wise feature recalibration, allowing the network to emphasize informative features and suppress less useful ones, which leads to more efficient and effective learning. This module is integrated with a simple sequential model to perform deepfake detection. The model is smaller in size and achieves accuracy competitive with existing models on deepfake detection tasks. It achieved an overall classification accuracy of 94.14% and an AUC-ROC score of 0.985 on the StyleGAN dataset from the Diverse Fake Face Dataset. Our proposed approach presents a promising avenue for combating the deepfake challenge with minimal computational resources, supporting efficient and scalable solutions for digital content verification.
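To illustrate the channel-wise recalibration described in the abstract above, here is a minimal, dependency-free sketch of a generic squeeze-and-excitation step on a single feature map. It follows the standard SE formulation (global average pooling, a two-layer bottleneck with ReLU and sigmoid, then per-channel scaling). The weights, shapes, and function names are hypothetical, biases are omitted for brevity, and the paper's actual architecture may differ.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def se_block(feature_map, w_reduce, w_expand):
    """Apply squeeze-and-excitation to one feature map.

    feature_map: list of C channels, each a list of rows (H x W).
    w_reduce:    (C // r) x C weight matrix for the bottleneck layer.
    w_expand:    C x (C // r) weight matrix restoring C outputs.
    Returns the rescaled feature map and the per-channel gates.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation, layer 1: reduce dimensionality, then ReLU.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(weights, squeezed)))
        for weights in w_reduce
    ]
    # Excitation, layer 2: expand back to C values, then sigmoid gates in (0, 1).
    gates = [
        sigmoid(sum(w * h for w, h in zip(weights, hidden)))
        for weights in w_expand
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
    return scaled, gates


if __name__ == "__main__":
    # Hypothetical 2-channel, 2x2 feature map with reduction ratio r = 2.
    fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]
    w_reduce = [[0.5, -0.25]]      # shape (1, 2)
    w_expand = [[1.0], [-1.0]]     # shape (2, 1)
    scaled, gates = se_block(fmap, w_reduce, w_expand)
    print("gates:", gates)
```

In a trained network the two weight matrices are learned, so the gates come to reflect which channels are informative for the task. Here they are fixed only to show the data flow.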

AI-Cybersecurity Education Through Designing AI-based Cyberharassment Detection Lab

May 16, 2024

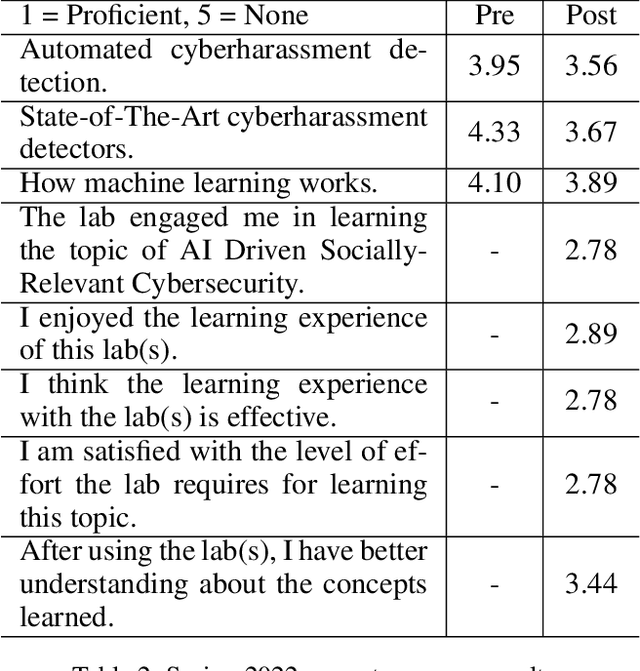

Abstract: Cyberharassment is a critical, socially relevant cybersecurity problem because of the adverse effects it can have on targeted groups or individuals. While progress has been made in understanding cyberharassment, its detection, attacks on artificial intelligence (AI) based cyberharassment systems, and the social problems in cyberharassment detectors, little has been done to design experiential learning materials that engage students with this emerging social cybersecurity problem in the era of AI. Experiential learning opportunities are usually provided through capstone projects and engineering design courses in STEM programs such as computer science. While capstone projects are an excellent example of experiential learning, the interdisciplinary nature of this emerging social cybersecurity problem makes it challenging to use them to engage non-computing students without prior knowledge of AI. This motivated us to develop a hands-on lab platform that provides experiential learning to non-computing students with little or no background knowledge in AI, and we discuss the lessons learned in developing this lab. The lab was used by social science students at North Carolina A&T State University across two semesters (spring and fall) in 2022. Students are given a detailed lab manual and complete a set of well-detailed tasks. Through this process, students learn AI concepts and the application of AI for cyberharassment detection. Using pre- and post-surveys, we asked students to rate their knowledge or skills in AI and their understanding of the concepts learned. The results revealed that the students moderately understood the concepts of AI and cyberharassment.

Presentation Attack detection using Wavelet Transform and Deep Residual Neural Net

Nov 23, 2023

Abstract: Biometric authentication is becoming more prevalent in secure authentication systems. However, biometric systems can be deceived by impostors in several ways. Among other impostor attacks, print attacks, mask attacks, and replay attacks fall under the presentation attack category. Biometric images, especially of the iris and face, are vulnerable to different presentation attacks. This research applies deep learning approaches to mitigate presentation attacks in a biometric access control system. Our contribution in this paper is two-fold: first, we applied the wavelet transform to extract features from the biometric images; second, we modified the deep residual neural net and applied it to the spoof datasets in an attempt to detect presentation attacks. This research applied the proposed approach to biometric spoof datasets, namely ATVS, CASIA two class, and CASIA cropped image sets. The datasets used in this research contain images captured in both controlled and uncontrolled environments, with different resolutions and sizes. We obtained the best accuracy of 93% on the ATVS Iris dataset. For the CASIA two class and CASIA cropped datasets, we achieved test accuracies of 91% and 82%, respectively.
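The abstract above does not say which wavelet family or decomposition depth was used. Purely as an illustration of wavelet-based feature extraction, the sketch below applies one level of a 2D Haar transform, which is an assumption here and not necessarily the authors' choice. It splits an image with even dimensions into an approximation sub-band and three detail sub-bands. All names are hypothetical.

```python
def haar_2d_level(image):
    """One level of an orthonormal 2D Haar transform.

    image: list of rows with even height and width.
    Returns four sub-bands, each half the size of the input:
    approximation, horizontal differences, vertical differences, diagonal.
    """
    height, width = len(image), len(image[0])
    if height % 2 or width % 2:
        raise ValueError("image dimensions must be even")

    approx, horiz, vert, diag = [], [], [], []
    for r in range(0, height, 2):
        row_a, row_h, row_v, row_d = [], [], [], []
        for c in range(0, width, 2):
            # Values of the current 2x2 block:
            #   a b
            #   c d
            a, b = image[r][c], image[r][c + 1]
            c_, d = image[r + 1][c], image[r + 1][c + 1]
            row_a.append((a + b + c_ + d) / 2.0)  # local average (scaled)
            row_h.append((a - b + c_ - d) / 2.0)  # left vs. right columns
            row_v.append((a + b - c_ - d) / 2.0)  # top vs. bottom rows
            row_d.append((a - b - c_ + d) / 2.0)  # diagonal contrast
        approx.append(row_a)
        horiz.append(row_h)
        vert.append(row_v)
        diag.append(row_d)
    return approx, horiz, vert, diag


if __name__ == "__main__":
    # Hypothetical 4x4 grayscale patch, for illustration only.
    patch = [
        [1.0, 2.0, 0.0, 0.0],
        [3.0, 4.0, 0.0, 0.0],
        [5.0, 5.0, 9.0, 1.0],
        [5.0, 5.0, 9.0, 1.0],
    ]
    for name, band in zip(("approx", "horiz", "vert", "diag"), haar_2d_level(patch)):
        print(name, band)
```

The detail sub-bands respond to edges and fine texture, which is one reason wavelet coefficients are often considered as inputs for spoof detection. A classifier such as a residual network could then consume the sub-bands as input channels.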
