Xiaobing Kang

High-order structure preserving graph neural network for few-shot learning

May 29, 2020

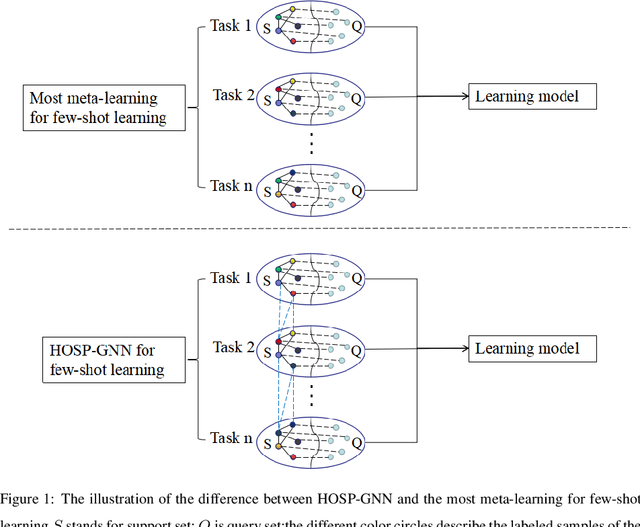

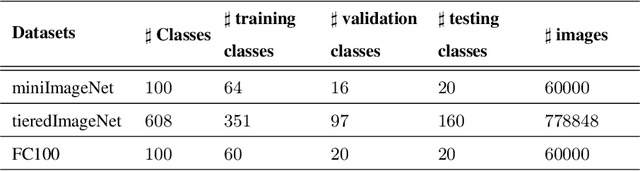

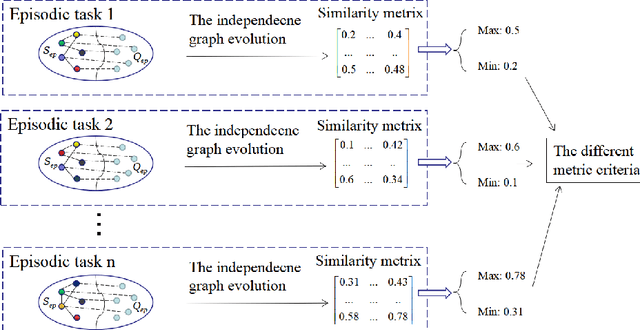

Abstract: Few-shot learning uses the similarity metric of meta-learning to find latent structure information between prior knowledge and the queried data, and builds a discriminative model that recognizes new categories from only a few labeled samples. Most existing methods model the similarity relationships among samples within a task and generalize the model to identify new categories. However, relationships between samples in separate tasks are difficult to take into account because each task uses its own metric criterion. In contrast, the proposed high-order structure preserving graph neural network (HOSP-GNN) further explores the rich structure of the samples to predict the labels of the queried data on a graph. The graph structure evolves to discriminate the categories explicitly by iteratively updating the high-order structure relationship (a relative metric over multiple samples, instead of a pairwise sample metric) under manifold structure constraints. HOSP-GNN not only mines the high-order structure to complement the relevance between samples that may be divided into different tasks in meta-learning, but also generates the rule for structure updating through the manifold constraint. Furthermore, HOSP-GNN does not need to retrain the learning model to recognize new classes, and its high-order structure generalizes well, which supports model adaptability. Experiments show that HOSP-GNN outperforms state-of-the-art methods on supervised and semi-supervised few-shot learning on three benchmark datasets: miniImageNet, tieredImageNet and FC100.

Deep graph learning for semi-supervised classification

May 29, 2020

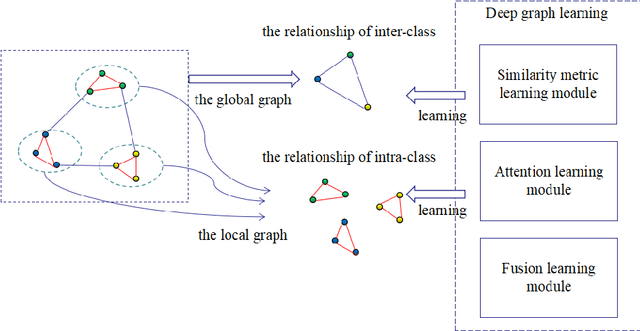

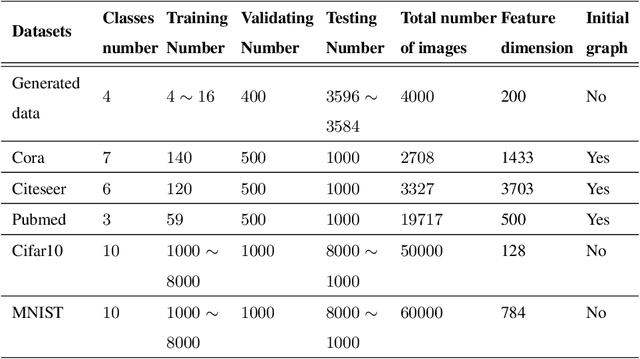

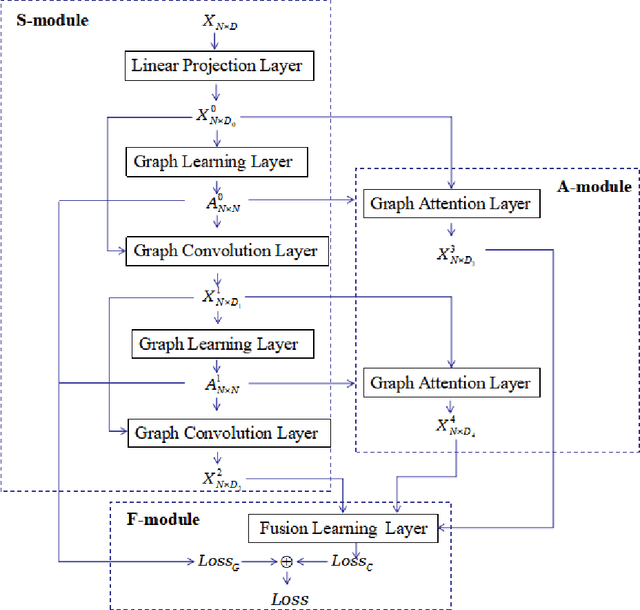

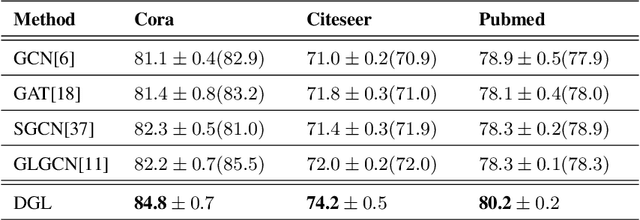

Abstract: Graph learning (GL) can dynamically capture the distribution structure (graph structure) of data based on graph convolutional networks (GCN), and the quality of the learned graph structure directly influences GCN performance in semi-supervised classification. Existing methods mostly add a computational layer and related losses to GCN to explore either the global graph (measuring graph structure from all data samples) or the local graph (measuring graph structure from local data samples). The global graph emphasizes the overall structure of inter-class data, while the local graph tends toward the neighborhood structure of intra-class data. However, it is difficult to balance these graphs during learning for semi-supervised classification because they are interdependent. To model this interdependence, deep graph learning (DGL) is proposed to find a better graph representation for semi-supervised classification. DGL not only learns the global structure through metric computation updating in the previous layer, but also mines the local structure through local weight reassignment in the next layer. Furthermore, DGL fuses the different structures by dynamically encoding their interdependence, and deeply mines the relationship between them through hierarchical progressive learning to improve the performance of semi-supervised classification. Experiments demonstrate that DGL outperforms state-of-the-art methods on three benchmark datasets (Citeseer, Cora, and Pubmed) for citation networks and two benchmark datasets (MNIST and Cifar10) for images.
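
The distinction between a global graph and a local graph in the abstract above can be illustrated with a small sketch. This is not the DGL method itself: the Gaussian similarity, the k-nearest-neighbor rule, and the names `global_graph` and `local_graph` are assumptions chosen only to show how a graph measured from all samples differs from one measured from each sample's neighborhood.

```python
import math

def gaussian_similarity(x, y, sigma=1.0):
    """Similarity in (0, 1] that decays with squared Euclidean distance (assumed metric)."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2 * sigma ** 2))

def global_graph(samples, sigma=1.0):
    """Dense adjacency: every pair of distinct samples gets an edge weight."""
    n = len(samples)
    return [
        [gaussian_similarity(samples[i], samples[j], sigma) if i != j else 0.0
         for j in range(n)]
        for i in range(n)
    ]

def local_graph(samples, k=2, sigma=1.0):
    """Sparse adjacency: each sample keeps edges only to its k most similar samples."""
    full = global_graph(samples, sigma)
    n = len(samples)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        neighbors = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: full[i][j],
            reverse=True,
        )[:k]
        for j in neighbors:
            # Symmetrize so the graph stays undirected.
            adj[i][j] = full[i][j]
            adj[j][i] = full[i][j]
    return adj

# Two well-separated toy clusters standing in for two classes (hypothetical data).
samples = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
           (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
G = global_graph(samples)
L = local_graph(samples, k=2)
```

In this toy setting the global graph keeps a small but nonzero weight between samples of different clusters, so it describes inter-class structure, while the local graph keeps only within-cluster edges and therefore describes intra-class neighborhoods.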

Transfer feature generating networks with semantic classes structure for zero-shot learning

Mar 06, 2019

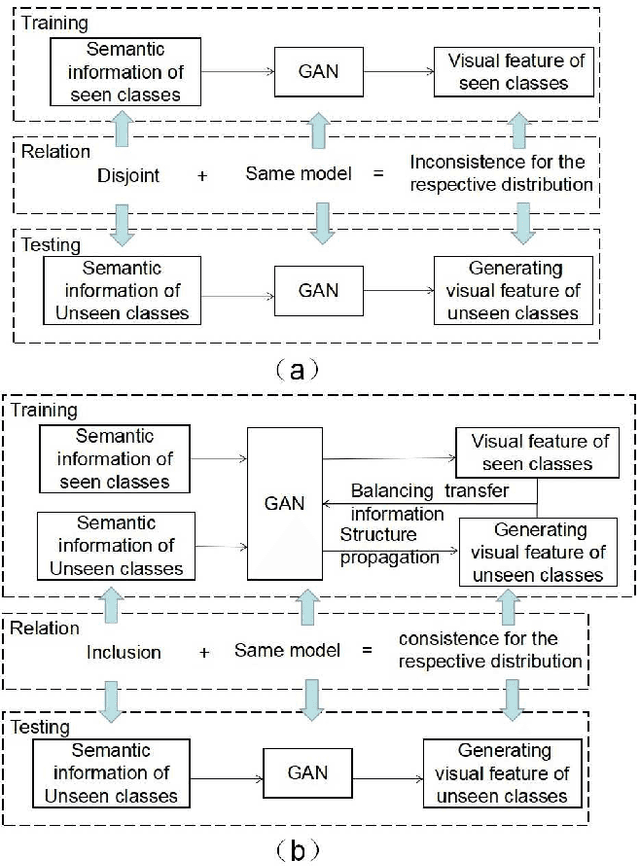

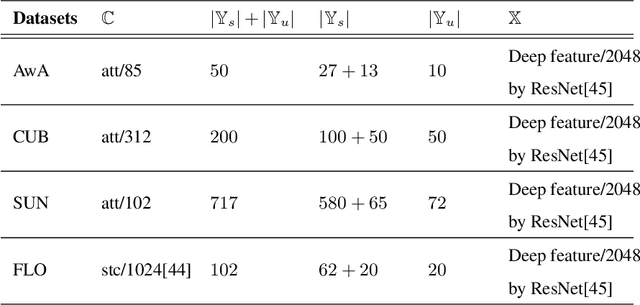

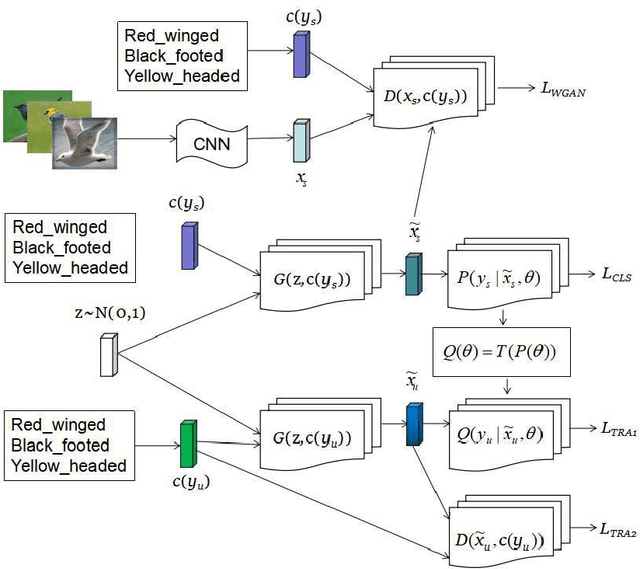

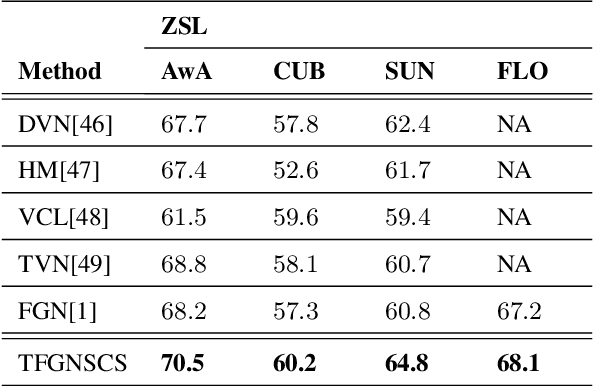

Abstract: Because the features generated by a model trained on seen classes are inconsistent with the distribution of unseen classes, most existing feature generating networks, which adversarially learn the distribution of semantic classes, struggle to obtain satisfactory performance on the challenging generalized zero-shot learning (GZSL) task. To alleviate the negative influence of this inconsistency on zero-shot learning (ZSL), transfer feature generating networks with semantic classes structure (TFGNSCS) is proposed to construct a network model that improves the performance of ZSL and GZSL. TFGNSCS not only considers the semantic structure relationship between seen and unseen classes, but also learns the difference between generated features by balancing the transfer information between seen and unseen classes in the networks. The proposed method integrates a Wasserstein generative adversarial network with a classification loss and a transfer loss to generate sufficient CNN features, on which softmax classifiers are trained for ZSL and GZSL. Experiments demonstrate that TFGNSCS outperforms the state of the art on four challenging datasets (CUB, FLO, SUN and AWA) in GZSL.
