Xianyue Peng

CycLight: learning traffic signal cooperation with a cycle-level strategy

Jan 16, 2024

Abstract: This study introduces CycLight, a novel cycle-level deep reinforcement learning (RL) approach for network-level adaptive traffic signal control (NATSC) systems. Unlike most traditional RL-based traffic controllers that focus on step-by-step decision making, CycLight adopts a cycle-level strategy, optimizing cycle length and splits simultaneously using the Parameterized Deep Q-Networks (PDQN) algorithm. This cycle-level approach effectively reduces the computational burden associated with frequent data communication while enhancing the practicality and safety of real-world applications. A decentralized framework is formulated for multi-agent cooperation, and an attention mechanism is integrated to accurately assess the impact of the surroundings on the current intersection. CycLight is tested in a large synthetic traffic grid using the microscopic traffic simulation tool SUMO. Experimental results not only demonstrate the superiority of CycLight over other state-of-the-art approaches but also showcase its robustness against information transmission delays.

Combat Urban Congestion via Collaboration: Heterogeneous GNN-based MARL for Coordinated Platooning and Traffic Signal Control

Oct 17, 2023

Abstract: Over the years, reinforcement learning has emerged as a popular approach to developing signal control and vehicle platooning strategies, either independently or in a hierarchical way. However, jointly controlling both in real time to alleviate traffic congestion presents new challenges, such as the inherent physical and behavioral heterogeneity between signal control and platooning, as well as coordination between them. This paper proposes an innovative solution to tackle these challenges based on heterogeneous graph multi-agent reinforcement learning and traffic theories. Our approach involves: 1) designing platoon and signal control as distinct reinforcement learning agents, each with its own set of observations, actions, and reward functions to optimize traffic flow; 2) designing coordination by incorporating graph neural networks within multi-agent reinforcement learning to facilitate seamless information exchange among agents on a regional scale. We evaluate our approach through SUMO simulation, which shows a convergent result in terms of various transportation metrics and better performance than signal control or platooning control alone.

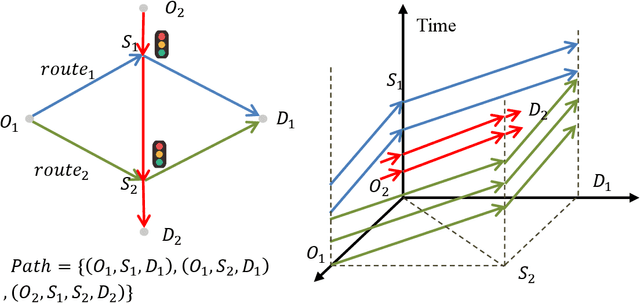

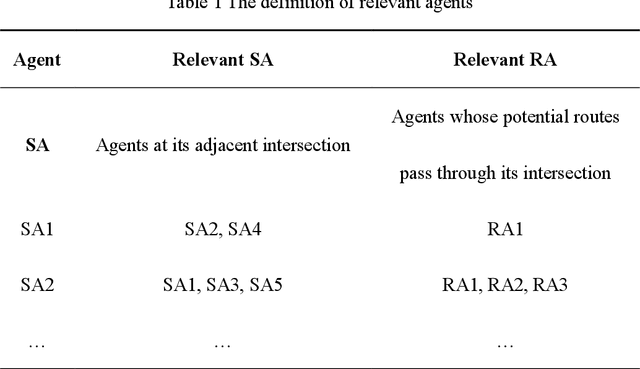

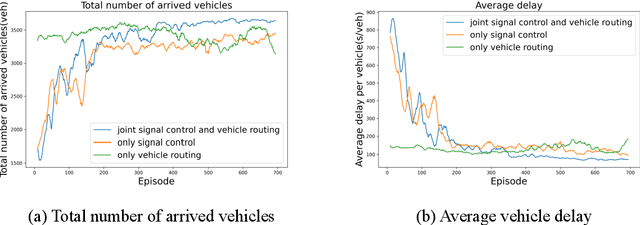

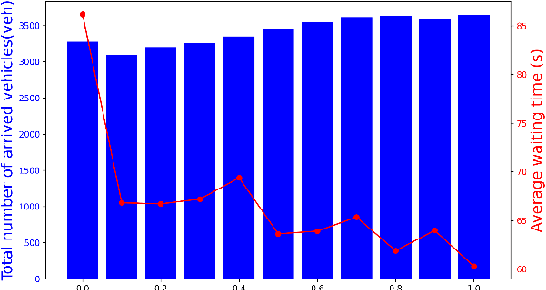

Joint Optimization of Traffic Signal Control and Vehicle Routing in Signalized Road Networks using Multi-Agent Deep Reinforcement Learning

Oct 16, 2023

Abstract: Urban traffic congestion is a critical predicament that plagues modern road networks. To alleviate this issue and enhance traffic efficiency, traffic signal control and vehicle routing have proven to be effective measures. In this paper, we propose a joint optimization approach for traffic signal control and vehicle routing in signalized road networks. The objective is to enhance network performance by simultaneously controlling signal timings and route choices using Multi-Agent Deep Reinforcement Learning (MADRL). Signal control agents (SAs) are employed to establish signal timings at intersections, whereas vehicle routing agents (RAs) are responsible for selecting vehicle routes. By establishing relevance between agents and enabling them to share observations and rewards, interaction and cooperation among agents are fostered, which enhances individual training. The Multi-Agent Advantage Actor-Critic algorithm is used to handle multi-agent environments, and Deep Neural Network (DNN) structures are designed to facilitate the algorithm's convergence. Notably, our work is the first to utilize MADRL in determining the optimal joint policy for signal control and vehicle routing. Numerical experiments conducted on the modified Sioux network demonstrate that our integration of signal control and vehicle routing outperforms controlling signal timings or vehicles' routes alone in enhancing traffic efficiency.
