Xianyi Zeng

GEMTEX

Harnessing the Power of Neural Operators with Automatically Encoded Conservation Laws

Dec 26, 2023

Abstract: Neural operators (NOs) have emerged as effective tools for modeling complex physical systems in scientific machine learning. A central characteristic of NOs is that they learn the governing physical laws directly from data. In contrast to other machine learning applications, partial knowledge about the physical system at hand is often known a priori; for example, quantities such as mass, energy and momentum are exactly conserved. Currently, NOs have to learn these conservation laws from data and can only satisfy them approximately, owing to finite training data and random noise. In this work, we introduce conservation law-encoded neural operators (clawNOs), a suite of NOs whose inference automatically satisfies such conservation laws. ClawNOs are built with a divergence-free prediction of the solution field, which automatically guarantees the continuity equation. As a consequence, clawNOs comply with the most fundamental and ubiquitous conservation laws required for physical consistency. As demonstrations, we consider a wide variety of scientific applications, ranging from constitutive modeling of material deformation and incompressible fluid dynamics to atmospheric simulation. ClawNOs significantly outperform state-of-the-art NOs in learning efficacy, especially in small-data regimes.
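The identity that makes a divergence-free prediction possible can be illustrated in two dimensions. The sketch below is not the clawNO architecture; it only assumes that some model outputs a scalar stream function `psi` (here a hypothetical stand-in field plus noise), and takes the velocity as u = d(psi)/dy, v = -d(psi)/dx. Because the discrete x- and y-derivative operators act on different array axes and therefore commute, the discrete divergence du/dx + dv/dy vanishes up to floating-point round-off, whatever `psi` is. In 3D the same role is played by the curl of a vector potential.

```python
import numpy as np

def velocity_from_stream_function(psi, dx, dy):
    """Map a stream function psi[i, j] (i ~ x, j ~ y) to a 2D velocity (u, v).

    u = d(psi)/dy and v = -d(psi)/dx, so div(u, v) = psi_xy - psi_yx = 0.
    """
    dpsi_dx, dpsi_dy = np.gradient(psi, dx, dy)  # derivatives along axis 0 (x) and axis 1 (y)
    return dpsi_dy, -dpsi_dx

def divergence(u, v, dx, dy):
    """Discrete divergence du/dx + dv/dy using the same finite-difference stencil."""
    return np.gradient(u, dx, axis=0) + np.gradient(v, dy, axis=1)

# A hypothetical "network output": any field, even a noisy one, works.
x = np.linspace(0.0, 1.0, 64)
y = np.linspace(0.0, 2.0, 80)
X, Y = np.meshgrid(x, y, indexing="ij")
dx, dy = x[1] - x[0], y[1] - y[0]
rng = np.random.default_rng(0)
psi = np.sin(3 * X) * np.cos(2 * Y) + 0.1 * rng.standard_normal(X.shape)

u, v = velocity_from_stream_function(psi, dx, dy)
max_div = np.abs(divergence(u, v, dx, dy)).max()
print(f"max |div u| = {max_div:.1e}")  # at floating-point round-off level
```

Encoding the constraint in the output parameterization, rather than penalizing it in the loss, is what makes the guarantee hold at inference time regardless of how well the model was trained.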

Lightweight Transformer in Federated Setting for Human Activity Recognition

Oct 01, 2021

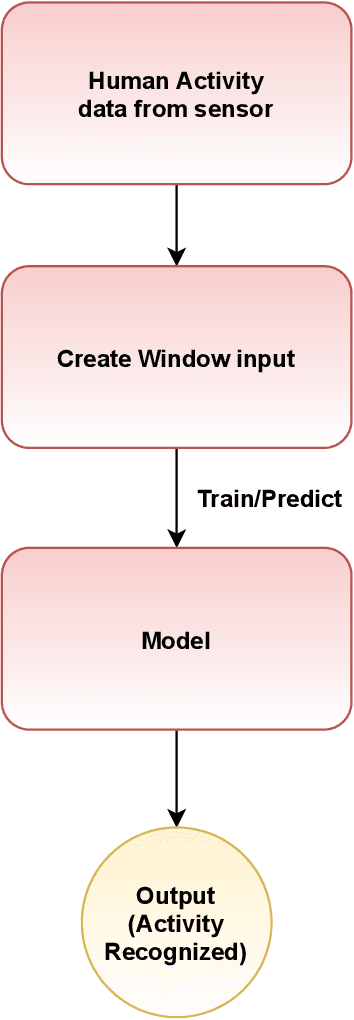

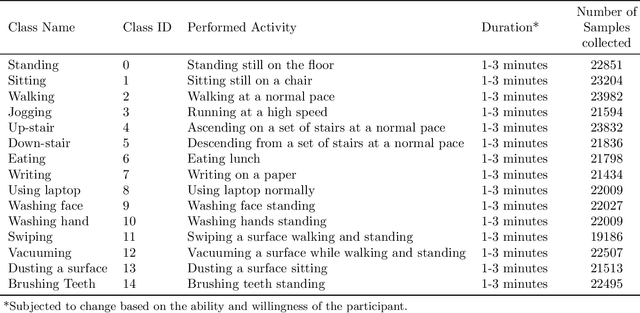

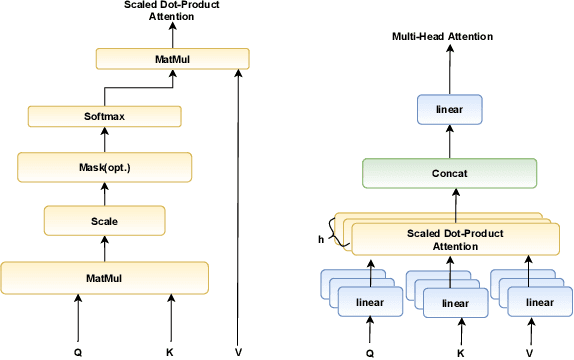

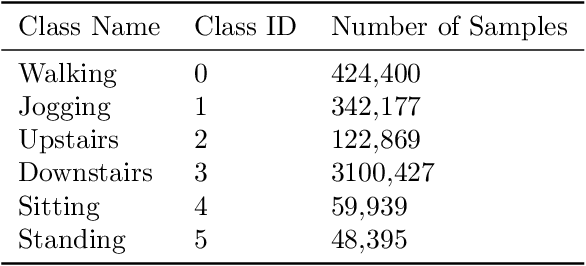

Abstract: Human Activity Recognition (HAR) is a challenging problem that still needs to be solved. It will mainly be used in eldercare and healthcare as an assistive technology when combined with other technologies such as the Internet of Things (IoT). HAR can be achieved with the help of sensors, smartphones or images. Deep neural network techniques such as artificial neural networks, convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have been used for HAR, in both centralized and federated settings. However, these techniques have certain limitations: RNNs are difficult to parallelize, while CNNs are limited in sequence length and are computationally expensive. In this paper, to address these state-of-the-art challenges, we present a novel inertial-sensor-based one-patch transformer for human activity recognition that combines the best of both RNNs and CNNs. We also design a testbed to collect real-time human activity data, which is then used to train and test the proposed transformer. Through experiments, we show that the proposed transformer outperforms state-of-the-art CNN- and RNN-based classifiers in both federated and centralized settings. Moreover, the proposed transformer is computationally inexpensive, as it uses very few parameters compared with existing state-of-the-art CNN- and RNN-based classifiers. It is therefore better suited to federated learning, as it incurs lower communication and computational costs.
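The abstract does not name its federated aggregation rule. As a hedged illustration of why a smaller parameter count lowers communication cost, the sketch below assumes plain FedAvg-style aggregation: the server averages client parameters weighted by each client's sample count, and every client downloads and uploads the full model once per round. The function names, client data and parameter counts are hypothetical.

```python
import numpy as np

def fedavg(client_params, client_sizes):
    """Average per-client parameter lists, weighting each client by its sample count."""
    total = float(sum(client_sizes))
    return [
        sum(params[k] * (n / total) for params, n in zip(client_params, client_sizes))
        for k in range(len(client_params[0]))
    ]

def bytes_per_round(n_params, n_clients, bytes_per_param=4):
    """Download + upload volume for one round (float32 weights, full model each way)."""
    return 2 * n_clients * n_params * bytes_per_param

# Two hypothetical clients, each holding a weight matrix and a bias vector.
clients = [
    [np.full((2, 2), 1.0), np.full(2, 1.0)],
    [np.full((2, 2), 3.0), np.full(2, 3.0)],
]
global_model = fedavg(clients, client_sizes=[100, 300])
print(global_model[0])  # every entry is 1 * 0.25 + 3 * 0.75 = 2.5

# Communication scales linearly with model size (hypothetical parameter counts).
print(bytes_per_round(50_000, n_clients=10))     # 4000000
print(bytes_per_round(1_000_000, n_clients=10))  # 80000000
```

Under this assumption, a model with 20 times fewer parameters needs 20 times less traffic per round, which is the effect the abstract attributes to the lightweight transformer.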

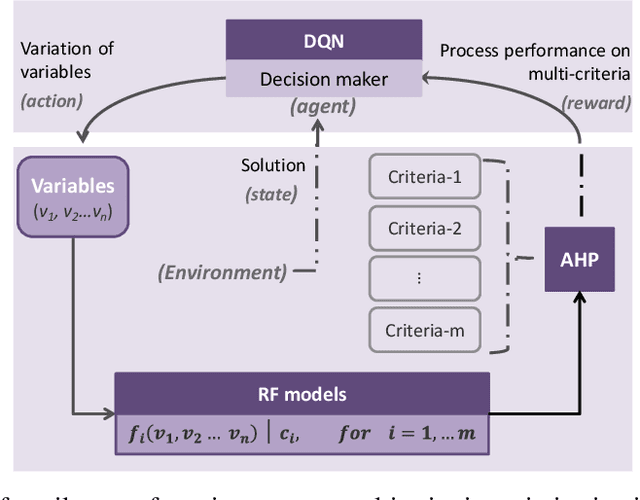

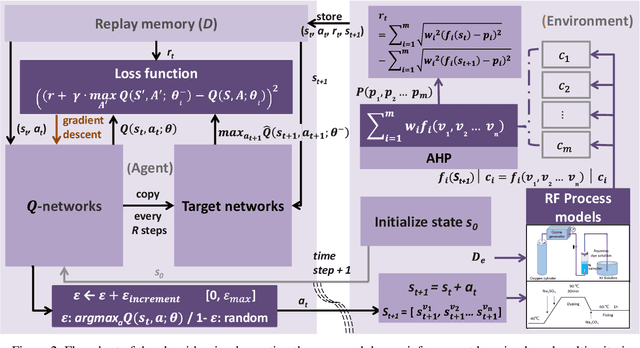

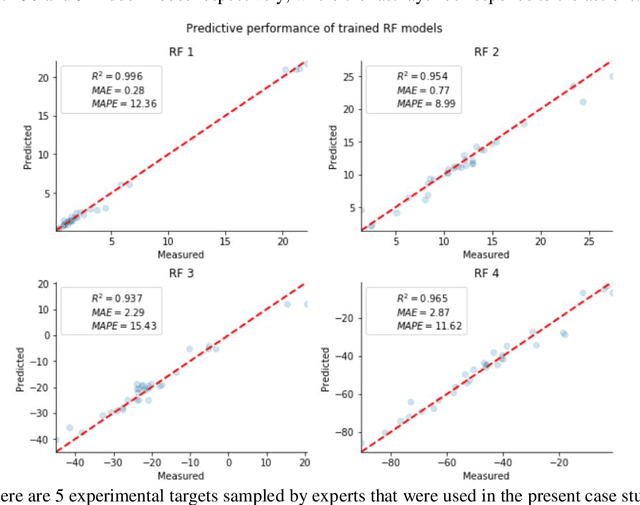

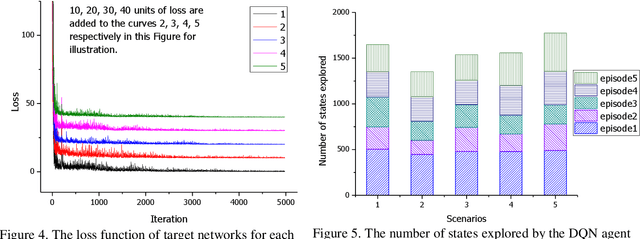

A Deep Reinforcement Learning Based Multi-Criteria Decision Support System for Textile Manufacturing Process Optimization

Dec 29, 2020

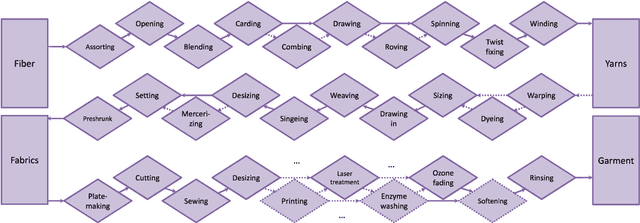

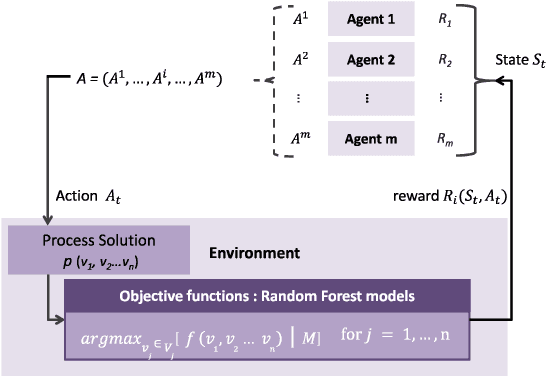

Abstract: Textile manufacturing is a typical traditional industry, involving highly complex interconnected processes and limited capacity for applying modern technologies. Decision-making in this domain generally takes multiple criteria into consideration, which usually adds further complexity. To address this issue, the present paper proposes a decision support system that combines intelligent data-based random forest (RF) models with a human-knowledge-based analytic hierarchy process (AHP) multi-criteria structure, in accordance with the objective and subjective factors of the textile manufacturing process. More importantly, the textile manufacturing process is described as a Markov decision process (MDP), and a deep reinforcement learning scheme, Deep Q-Networks (DQN), is employed to optimize it. The effectiveness of this system has been validated in a case study optimizing a textile ozonation process, showing that it can better handle the challenging decision-making tasks in textile manufacturing processes.

* arXiv admin note: text overlap with arXiv:2012.01101
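The AHP component can be illustrated with the standard eigenvector method: criterion weights are the normalized principal eigenvector of a pairwise comparison matrix, and the consistency ratio CR = CI / RI, with CI = (lambda_max - n) / (n - 1), checks whether the judgments are coherent (CR < 0.1 is the usual rule of thumb). The three criteria and comparison values are hypothetical, and the weighted-sum reward is only one plausible way of turning RF-predicted criteria into a scalar reward for a DQN agent; the paper's exact formulation is not given in the abstract.

```python
import numpy as np

# Saaty's random consistency index (RI) for matrix orders 1..9.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def ahp_weights(pairwise):
    """Return (weights, consistency_ratio) for a reciprocal pairwise comparison matrix."""
    a = np.asarray(pairwise, dtype=float)
    n = a.shape[0]
    eigvals, eigvecs = np.linalg.eig(a)
    k = int(np.argmax(eigvals.real))   # principal (Perron) eigenvalue
    w = np.abs(eigvecs[:, k].real)
    w /= w.sum()                       # weights sum to 1
    lambda_max = eigvals[k].real
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    cr = ci / RANDOM_INDEX[n] if RANDOM_INDEX[n] > 0 else 0.0
    return w, cr

def weighted_reward(normalized_scores, weights):
    """Collapse criterion scores in [0, 1] (higher = better) into one scalar reward."""
    return float(np.dot(weights, normalized_scores))

# Hypothetical criteria: fading quality vs. water use vs. energy use.
pairwise = [[1, 3, 5],
            [1 / 3, 1, 3],
            [1 / 5, 1 / 3, 1]]
weights, cr = ahp_weights(pairwise)
print(weights.round(3), round(cr, 3))
print(weighted_reward([0.8, 0.5, 0.6], weights))
```

The RF models would supply the per-criterion scores for a candidate process setting; AHP only fixes how much each criterion counts.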

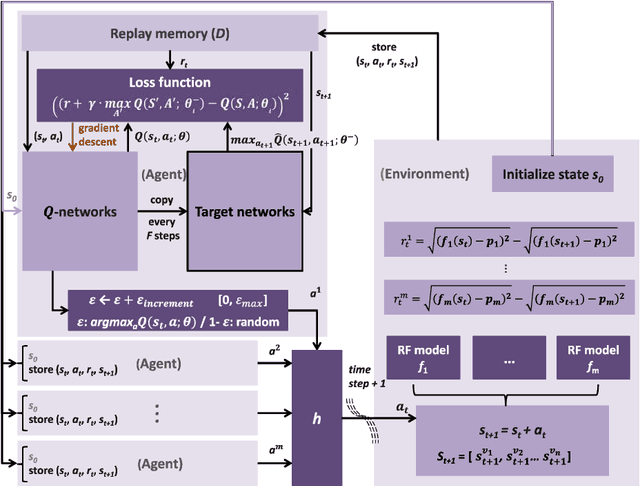

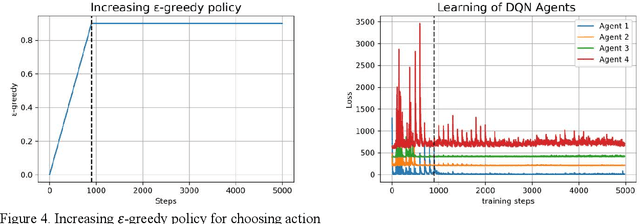

Multi-Objective Optimization of the Textile Manufacturing Process Using Deep-Q-Network Based Multi-Agent Reinforcement Learning

Dec 02, 2020

Abstract: Multi-objective optimization of the textile manufacturing process is an increasing challenge because of the growing complexity involved in the development of the textile industry. The use of intelligent techniques has often been discussed in this domain, and although significant improvements have been reported in certain successful applications, the traditional methods failed to work with high-as well as human intervention. Accordingly, this paper proposed a multi-agent reinforcement learning (MARL) framework that transforms the optimization process into a stochastic game, and introduced the deep Q-networks algorithm to train the multiple agents. A utilitarian selection mechanism was employed in the stochastic game, together with an ε-greedy policy in each state, to avoid the interruption of multiple equilibria and to achieve the correlated-equilibrium optimal solutions of the optimization process. The case study results show that the proposed MARL system is able to achieve the optimal solutions for the textile ozonation process and performs better than the traditional approaches.
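A heavily simplified sketch of the action-selection step: with probability ε a random joint action is explored; otherwise the joint action that maximizes the sum of all agents' Q-values (the utilitarian criterion) is chosen. This is an assumption-laden simplification: a full correlated-equilibrium solver would additionally impose the equilibrium constraints, usually via a linear program, which this sketch omits. In the paper the Q-values come from each agent's deep Q-network; here they are a hypothetical array.

```python
import numpy as np

def select_joint_action(q_values, epsilon, rng):
    """Epsilon-greedy over joint actions; the greedy branch uses the utilitarian sum.

    q_values: shape (n_agents, n_actions_1, ..., n_actions_m), i.e. each agent's
    Q(s, a_1, ..., a_m) for the current state over all joint actions.
    Returns one action index per agent.
    """
    joint_shape = q_values.shape[1:]
    if rng.random() < epsilon:
        return tuple(int(rng.integers(n)) for n in joint_shape)  # explore
    social_welfare = q_values.sum(axis=0)                        # sum over agents
    flat = int(np.argmax(social_welfare))
    return tuple(int(i) for i in np.unravel_index(flat, joint_shape))

# Two hypothetical agents, each choosing among 3 process settings.
q = np.array([
    [[1.0, 0.2, 0.0], [0.1, 0.9, 0.3], [0.0, 0.4, 0.5]],  # agent 1's Q(s, a1, a2)
    [[0.2, 0.1, 0.0], [0.3, 1.2, 0.1], [0.0, 0.2, 0.6]],  # agent 2's Q(s, a1, a2)
])
rng = np.random.default_rng(0)
print(select_joint_action(q, epsilon=0.0, rng=rng))  # greedy: (1, 1)
```

Agent 1 alone would prefer (0, 0), where its own Q-value is highest; the utilitarian sum instead picks (1, 1), the joint action with the largest total value (2.1).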

A reinforcement learning based decision support system in textile manufacturing process

May 20, 2020

Abstract: This paper introduces a reinforcement learning based decision support system for the textile manufacturing process. A solution optimization problem for color fading ozonation is discussed and set up as a Markov Decision Process (MDP) in terms of the tuple {S, A, P, R}. Q-learning is used to train an agent through interaction with this environment by accumulating the reward R. According to the application results, the proposed MDP model expresses the optimization problem of the textile manufacturing process discussed in this paper well. The use of reinforcement learning to support decision-making in this sector is therefore shown to be applicable, with promising prospects.
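The Q-learning step can be sketched on a toy MDP. The environment below is hypothetical (a five-state chain in which reaching the last state earns reward 1), standing in for the paper's ozonation environment, which the abstract does not specify. The update Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)) is standard tabular Q-learning with ε-greedy exploration.

```python
import numpy as np

N_STATES, N_ACTIONS = 5, 2   # hypothetical chain; action 0 = left, 1 = right
GOAL = N_STATES - 1          # terminal state

def step(state, action):
    """Deterministic transition P and reward R of the toy MDP."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, N_ACTIONS))
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = int(rng.integers(N_ACTIONS))                 # explore
            else:
                best = np.flatnonzero(q[s] == q[s].max())         # greedy, random tie-break
                a = int(rng.choice(best))
            s_next, r, done = step(s, a)
            target = r if done else r + gamma * q[s_next].max()
            q[s, a] += alpha * (target - q[s, a])                 # temporal-difference update
            s = s_next
    return q

q = q_learning()
print(np.argmax(q[:GOAL], axis=1))  # greedy action in each non-terminal state
```

Here the tuple {S, A, P, R} maps onto `N_STATES`, `N_ACTIONS`, and the transition and reward returned by `step`; a real process model would replace `step`.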