Xianping Tao

Solving the Min-Max Multiple Traveling Salesmen Problem via Learning-Based Path Generation and Optimal Splitting

Aug 23, 2025

Abstract: This study addresses the Min-Max Multiple Traveling Salesmen Problem ($m^3$-TSP), which aims to coordinate tours for multiple salesmen such that the length of the longest tour is minimized. Due to its NP-hard nature, exact solvers become impractical under the assumption that $P \ne NP$. As a result, learning-based approaches have gained traction for their ability to rapidly generate high-quality approximate solutions. Among these, two-stage methods combine learning-based components with classical solvers, simplifying the learning objective. However, this decoupling often disrupts consistent optimization, potentially degrading solution quality. To address this issue, we propose a novel two-stage framework named Generate-and-Split (GaS), which integrates reinforcement learning (RL) with an optimal splitting algorithm in a joint training process. The splitting algorithm offers near-linear scalability with respect to the number of cities and guarantees optimal splitting in Euclidean space for any given path. To facilitate the joint optimization of the RL component with the algorithm, we adopt an LSTM-enhanced model architecture to address partial observability. Extensive experiments show that the proposed GaS framework significantly outperforms existing learning-based approaches in both solution quality and transferability.
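To make the splitting step concrete, the sketch below splits a fixed city order into at most m depot-to-depot tours so that the longest tour is as short as possible. It is a plain O(m n^2) dynamic program written for illustration, not the near-linear algorithm the abstract describes; the function name `split_path_min_max` and the single shared depot are assumptions.

```python
import math

def split_path_min_max(depot, path, m):
    """Split a fixed city order into at most m depot-to-depot tours,
    minimizing the longest tour (illustrative O(m * n^2) dynamic program)."""
    n = len(path)
    # prefix[i] = length of the walk path[0] -> ... -> path[i]
    prefix = [0.0] * max(n, 1)
    for i in range(1, n):
        prefix[i] = prefix[i - 1] + math.dist(path[i - 1], path[i])

    def tour(i, j):
        # Length of depot -> path[i] -> ... -> path[j - 1] -> depot.
        return (math.dist(depot, path[i])
                + prefix[j - 1] - prefix[i]
                + math.dist(path[j - 1], depot))

    inf = float("inf")
    # best[k][j] = smallest possible longest tour when the first j cities
    # are served by at most k salesmen; cut[k][j] = start of the last tour.
    best = [[inf] * (n + 1) for _ in range(m + 1)]
    cut = [[0] * (n + 1) for _ in range(m + 1)]
    best[0][0] = 0.0
    for k in range(1, m + 1):
        best[k][0] = 0.0
        for j in range(1, n + 1):
            # Option 1: salesman k stays idle.
            best[k][j], cut[k][j] = best[k - 1][j], j
            # Option 2: salesman k serves cities i .. j - 1.
            for i in range(j):
                cand = max(best[k - 1][i], tour(i, j))
                if cand < best[k][j]:
                    best[k][j], cut[k][j] = cand, i

    # Walk the cut table backwards to recover the tours.
    tours, j = [], n
    for k in range(m, 0, -1):
        i = cut[k][j]
        if i < j:
            tours.append(path[i:j])
        j = i
    tours.reverse()
    return best[m][n], tours

longest, tours = split_path_min_max(
    (0, 0), [(1, 0), (2, 0), (-1, 0), (-2, 0)], m=2)
print(longest, tours)
```

In this toy instance the two cities on each side of the depot end up in separate tours, each of length 4. In a two-stage setup such as the one described, the city order would come from the learned path generator rather than being given by hand.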

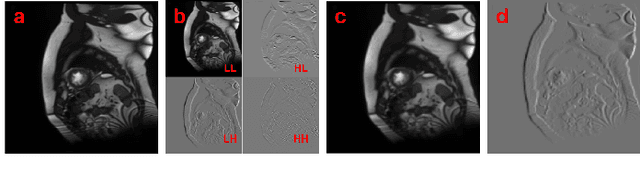

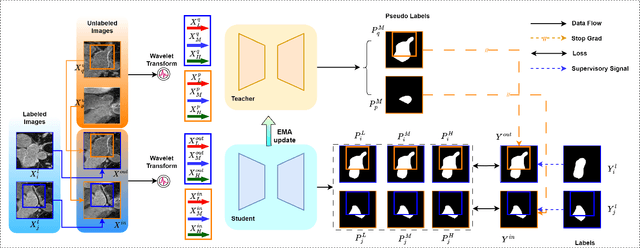

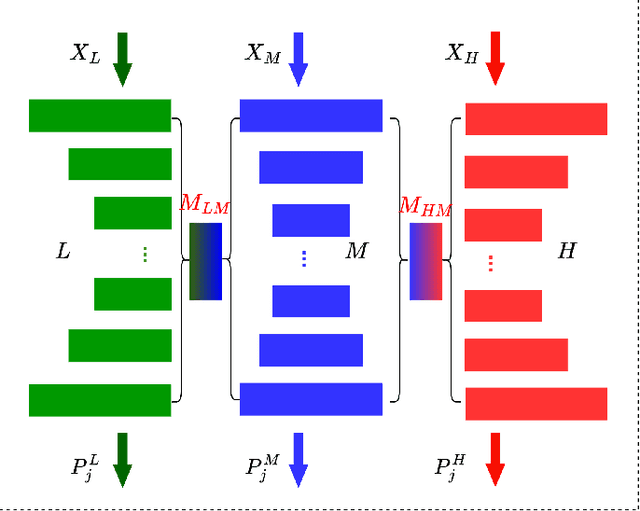

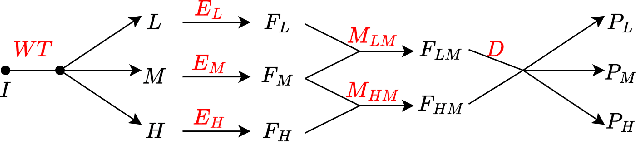

WT-BCP: Wavelet Transform based Bidirectional Copy-Paste for Semi-Supervised Medical Image Segmentation

Apr 20, 2025

Abstract: Semi-supervised medical image segmentation (SSMIS) shows promise in reducing reliance on scarce labeled medical data. However, the SSMIS field confronts challenges such as distribution mismatches between labeled and unlabeled data, artificial perturbations causing training biases, and inadequate use of raw image information, especially low-frequency (LF) and high-frequency (HF) components. To address these challenges, we propose a Wavelet Transform based Bidirectional Copy-Paste SSMIS framework, named WT-BCP, which improves upon the Mean Teacher approach. Our method enhances unlabeled data understanding by copying random crops between labeled and unlabeled images and employs the wavelet transform (WT) to extract LF and HF details. We propose a multi-input and multi-output model, named XNet-Plus, to receive the fused information after WT. Moreover, consistency training among multiple outputs helps to mitigate learning biases introduced by artificial perturbations. During consistency training, the mixed images resulting from WT are fed into both models, with the student model's output being supervised by pseudo-labels and ground truth. Extensive experiments conducted on 2D and 3D datasets confirm the effectiveness of our model. Code: https://github.com/simzhangbest/WT-BCP.
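The two ingredients named in the abstract, bidirectional copy-paste and a wavelet split into LF and HF parts, can be illustrated with a small pure-Python sketch. Everything here is an assumption made for illustration: the helper names `copy_paste` and `haar_level1`, the fixed (rather than random) crop position, and the choice of a one-level orthonormal Haar wavelet. The abstract does not state which wavelet or fusion scheme WT-BCP uses.

```python
def copy_paste(src, dst, top, left, h, w):
    """Return a copy of dst with the h x w crop of src at (top, left) pasted in."""
    out = [row[:] for row in dst]
    for r in range(top, top + h):
        out[r][left:left + w] = src[r][left:left + w]
    return out

def haar_level1(img):
    """One level of the 2D orthonormal Haar transform on an even-sized image.

    Returns (low, d_cols, d_rows, d_diag): the low-frequency band and three
    high-frequency detail bands, each half the input size per axis.
    """
    rows, cols = len(img) // 2, len(img[0]) // 2
    low = [[0.0] * cols for _ in range(rows)]
    d_cols = [[0.0] * cols for _ in range(rows)]
    d_rows = [[0.0] * cols for _ in range(rows)]
    d_diag = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # The 2 x 2 block [[a, b], [c2, d]] feeding output pixel (r, c).
            a, b = img[2 * r][2 * c], img[2 * r][2 * c + 1]
            c2, d = img[2 * r + 1][2 * c], img[2 * r + 1][2 * c + 1]
            low[r][c] = (a + b + c2 + d) / 2      # local average (LF)
            d_cols[r][c] = (a - b + c2 - d) / 2   # change across columns (HF)
            d_rows[r][c] = (a + b - c2 - d) / 2   # change across rows (HF)
            d_diag[r][c] = (a - b - c2 + d) / 2   # diagonal change (HF)
    return low, d_cols, d_rows, d_diag

# Toy 4 x 4 "images": labeled is all ones, unlabeled is all zeros.
labeled = [[1.0] * 4 for _ in range(4)]
unlabeled = [[0.0] * 4 for _ in range(4)]

# Bidirectional mixing: paste a labeled crop into the unlabeled image,
# and the matching unlabeled crop into the labeled image.
mixed_in = copy_paste(labeled, unlabeled, top=1, left=1, h=2, w=2)
mixed_out = copy_paste(unlabeled, labeled, top=1, left=1, h=2, w=2)

low, d_cols, d_rows, d_diag = haar_level1(mixed_in)
```

A constant image has all of its energy in the low band and zero detail coefficients, while the pasted crop boundaries in `mixed_in` show up in the detail bands. That is the kind of LF/HF separation the abstract refers to.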

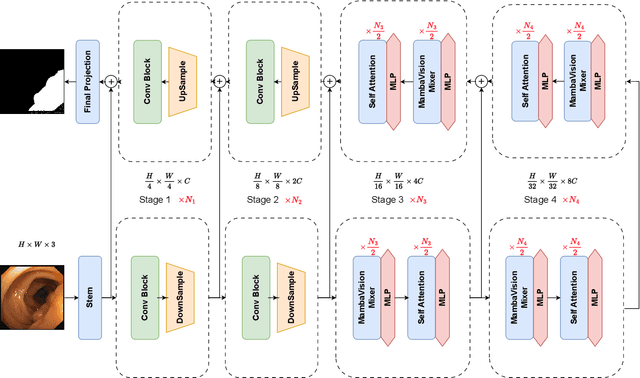

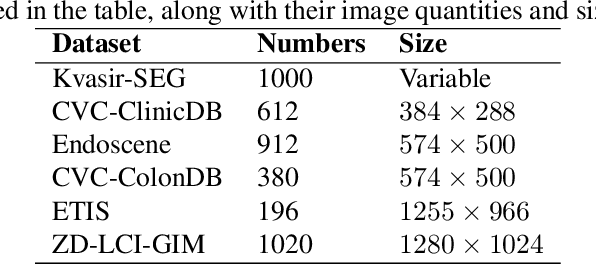

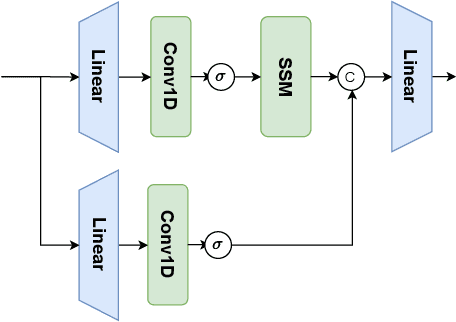

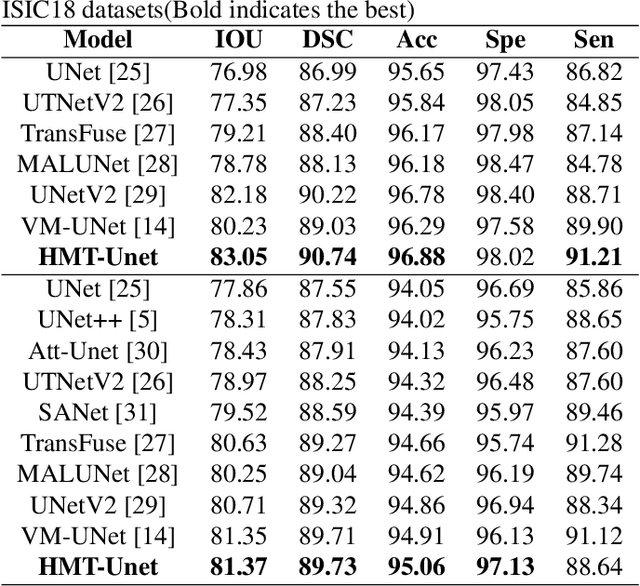

HMT-UNet: A hybird Mamba-Transformer Vision UNet for Medical Image Segmentation

Aug 21, 2024

Abstract: In the field of medical image segmentation, models based on both CNN and Transformer have been thoroughly investigated. However, CNNs have limited modeling capabilities for long-range dependencies, making it challenging to fully exploit the semantic information within images. On the other hand, the quadratic computational complexity poses a challenge for Transformers. State Space Models (SSMs), such as Mamba, have been recognized as a promising method. They not only demonstrate superior performance in modeling long-range interactions, but also preserve a linear computational complexity. A carefully designed hybrid of SSM and Transformer can enhance the capability for efficient modeling of visual features. Extensive experiments have demonstrated that integrating the self-attention mechanism into the hybrid part behind the layers of Mamba's architecture can greatly improve the modeling capacity to capture long-range spatial dependencies. In this paper, leveraging the hybrid mechanism of SSM, we propose a U-shaped architecture model for medical image segmentation, named Hybrid Transformer vision Mamba UNet (HTM-UNet). We conduct comprehensive experiments on the ISIC17, ISIC18, CVC-300, CVC-ClinicDB, Kvasir, CVC-ColonDB, and ETIS-Larib PolypDB public datasets and the ZD-LCI-GIM private dataset. The results indicate that HTM-UNet exhibits competitive performance in medical image segmentation tasks. Our code is available at https://github.com/simzhangbest/HMT-Unet.

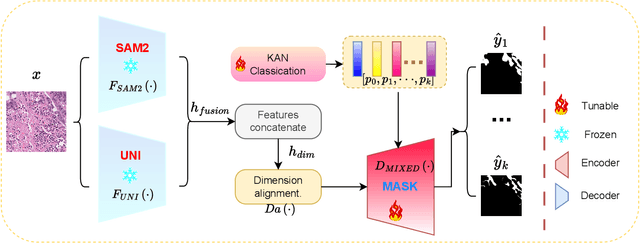

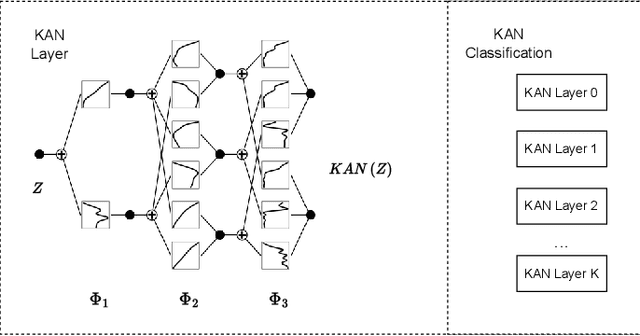

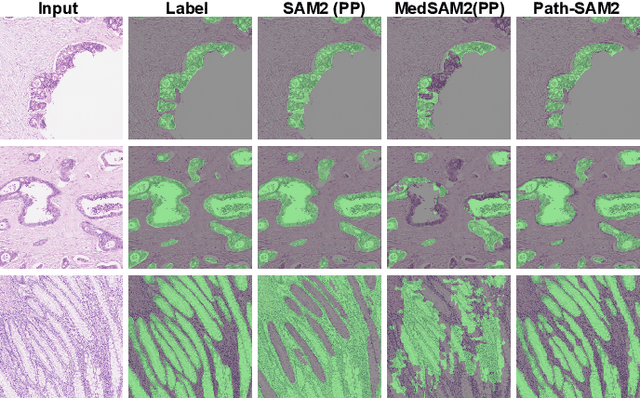

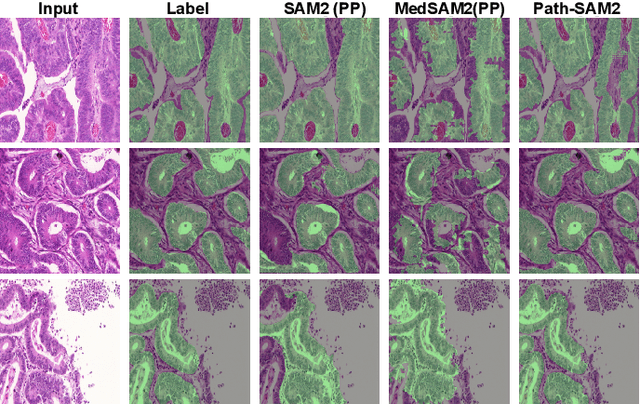

SAM2-PATH: A better segment anything model for semantic segmentation in digital pathology

Aug 07, 2024

Abstract: The semantic segmentation task in pathology plays an indispensable role in assisting physicians in determining the condition of tissue lesions. Foundation models, such as the SAM (Segment Anything Model) and SAM2, exhibit exceptional performance in instance segmentation within everyday natural scenes. SAM-PATH has also achieved impressive results in semantic segmentation within the field of pathology. However, in computational pathology, these models still have two limitations: the pre-trained encoder models suffer from a scarcity of pathology image data, and SAM and SAM2 are not suitable for semantic segmentation. In this paper, we design a trainable Kolmogorov-Arnold Networks (KAN) classification module within the SAM2 workflow, and we introduce the largest pretrained vision encoder for histopathology to date (UNI). Our proposed framework, SAM2-PATH, augments SAM2's capability to perform semantic segmentation in digital pathology autonomously, eliminating the need for human-provided input prompts. The experimental results demonstrate that, after fine-tuning the KAN classification module and decoder, our framework achieves competitive results on publicly available pathology data. The code has been open-sourced and can be found at the following address: https://github.com/simzhangbest/SAM2PATH.

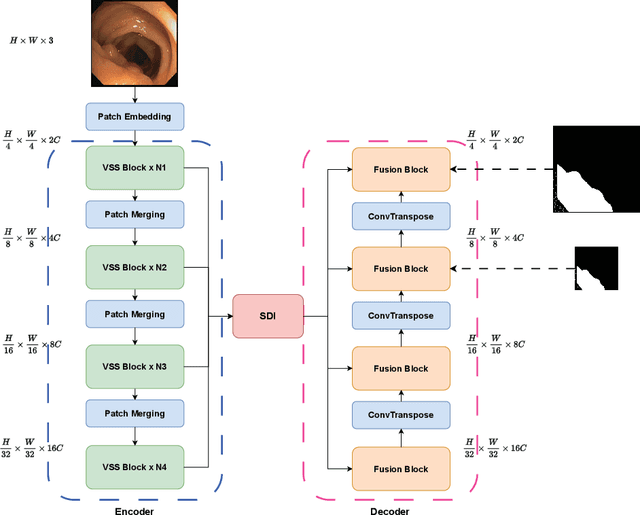

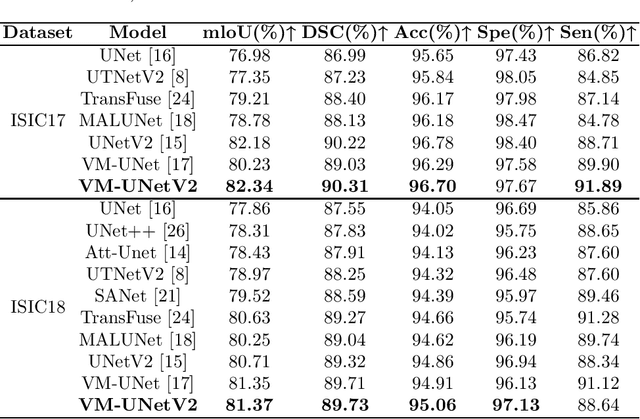

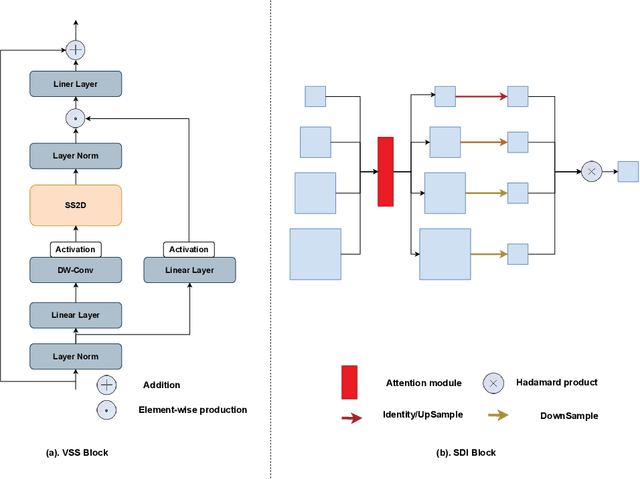

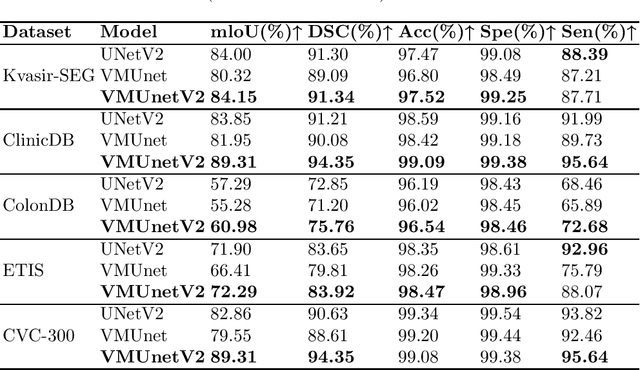

VM-UNET-V2 Rethinking Vision Mamba UNet for Medical Image Segmentation

Mar 14, 2024

Abstract: In the field of medical image segmentation, models based on both CNN and Transformer have been thoroughly investigated. However, CNNs have limited modeling capabilities for long-range dependencies, making it challenging to fully exploit the semantic information within images. On the other hand, the quadratic computational complexity poses a challenge for Transformers. Recently, State Space Models (SSMs), such as Mamba, have been recognized as a promising method. They not only demonstrate superior performance in modeling long-range interactions, but also preserve a linear computational complexity. Inspired by the Mamba architecture, we propose Vision Mamba-UNetV2 (VM-UNetV2), in which the Visual State Space (VSS) Block is introduced to capture extensive contextual information and the Semantics and Detail Infusion (SDI) is introduced to augment the infusion of low-level and high-level features. We conduct comprehensive experiments on the ISIC17, ISIC18, CVC-300, CVC-ClinicDB, Kvasir, CVC-ColonDB and ETIS-LaribPolypDB public datasets. The results indicate that VM-UNetV2 exhibits competitive performance in medical image segmentation tasks. Our code is available at https://github.com/nobodyplayer1/VM-UNetV2.
