Xiangyang Zhou

Distilling Knowledge from Pre-trained Language Models via Text Smoothing

May 08, 2020

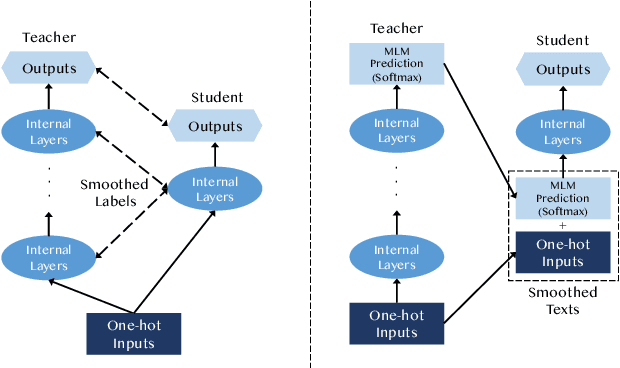

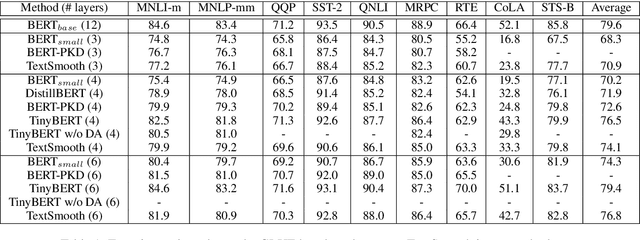

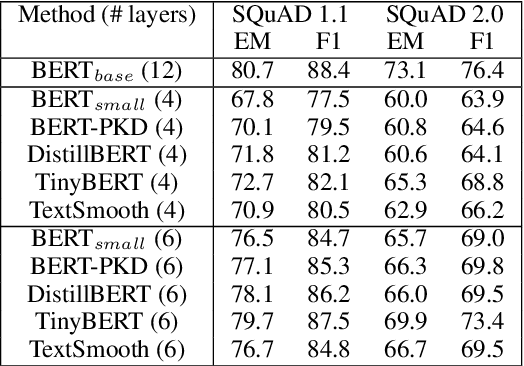

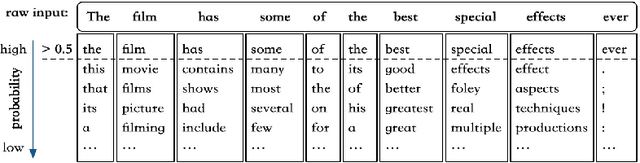

Abstract: This paper studies compressing pre-trained language models, like BERT (Devlin et al., 2019), via teacher-student knowledge distillation. Previous works usually force the student model to strictly mimic the smoothed labels predicted by the teacher BERT. As an alternative, we propose a new method for BERT distillation: asking the teacher to generate smoothed word ids, rather than labels, for teaching the student model in knowledge distillation. We call this method Text Smoothing. In practice, we use the softmax prediction of the Masked Language Model (MLM) in BERT to generate word distributions for given texts and smooth those input texts using the predicted soft word ids. We assume that both the smoothed labels and the smoothed texts can implicitly augment the input corpus, while text smoothing is intuitively more efficient since it can generate more instances in one neural network forward step. Experimental results on GLUE and SQuAD demonstrate that our solution achieves competitive results compared with existing BERT distillation methods.
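The smoothing step described in the abstract can be illustrated with a minimal NumPy sketch. It assumes that "soft word ids" are the per-position softmax distributions over the vocabulary and that a smoothed input is formed by mixing word embeddings with those probabilities; the abstract does not spell out this detail, so treat it as an assumption. The names `smooth_text`, `mlm_logits`, `embedding_matrix`, and `temperature` are hypothetical, and random arrays stand in for a real teacher MLM and embedding table.

```python
import numpy as np

def softmax(logits, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def smooth_text(mlm_logits, embedding_matrix, temperature=1.0):
    """Turn MLM logits into soft word ids and mix embeddings accordingly.

    mlm_logits:       (seq_len, vocab_size) scores from the teacher MLM
    embedding_matrix: (vocab_size, dim) word embeddings
    Returns the word distributions and the smoothed input embeddings.
    """
    word_dist = softmax(mlm_logits / temperature)   # soft word ids
    smoothed_inputs = word_dist @ embedding_matrix  # expected embedding per position
    return word_dist, smoothed_inputs

# Random stand-ins for a real teacher and embedding table (assumption).
rng = np.random.default_rng(0)
seq_len, vocab_size, dim = 4, 10, 3
mlm_logits = rng.normal(size=(seq_len, vocab_size))
embedding_matrix = rng.normal(size=(vocab_size, dim))

word_dist, smoothed_inputs = smooth_text(mlm_logits, embedding_matrix)
```

Each row of `word_dist` sums to one, so every position of the smoothed input is a convex combination of word embeddings. When the distribution collapses onto a single word, the smoothed input reduces to that word's ordinary embedding.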

How to Evaluate the Next System: Automatic Dialogue Evaluation from the Perspective of Continual Learning

Dec 10, 2019

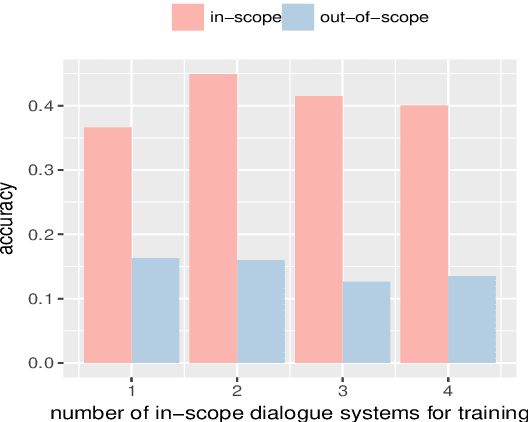

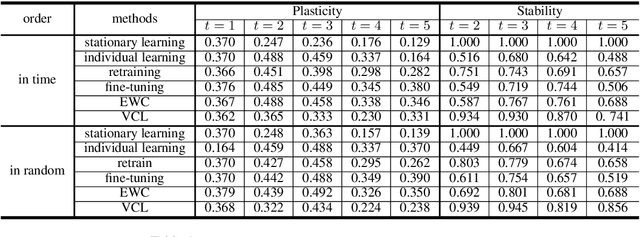

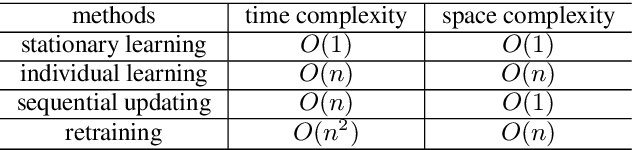

Abstract: Automatic dialogue evaluation plays a crucial role in open-domain dialogue research. Previous works train neural networks with limited annotation for conducting automatic dialogue evaluation, which naturally affects evaluation fairness, as dialogue systems close to the scope of the training corpus are favored over others. In this paper, we study alleviating this problem from the perspective of continual learning: given an existing neural dialogue evaluator and the next system to be evaluated, we fine-tune the learned neural evaluator by selectively forgetting/updating its parameters, to jointly fit the dialogue systems that have been and will be evaluated. Our motivation is to seek a lifelong and low-cost automatic evaluation for dialogue systems, rather than to reconstruct the evaluator over and over again. Experimental results show that our continual evaluator achieves comparable performance with reconstructing new evaluators, while requiring significantly lower resources.

Proactive Human-Machine Conversation with Explicit Conversation Goals

Jun 13, 2019

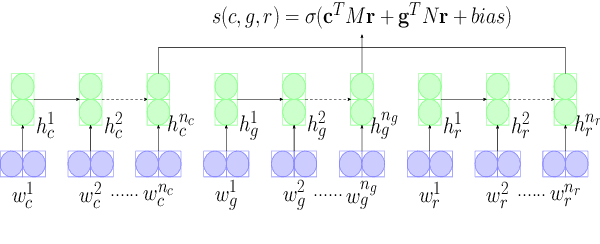

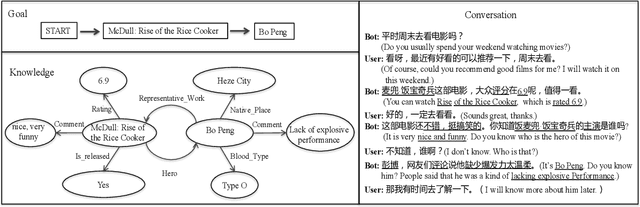

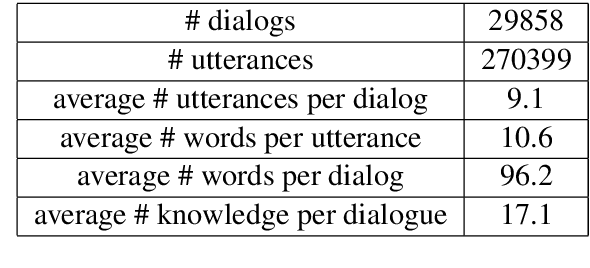

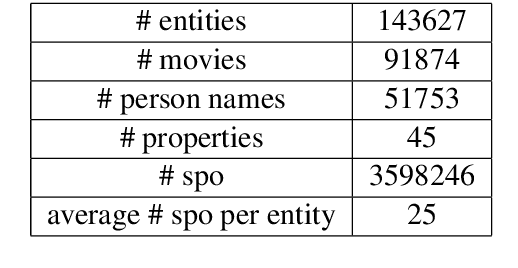

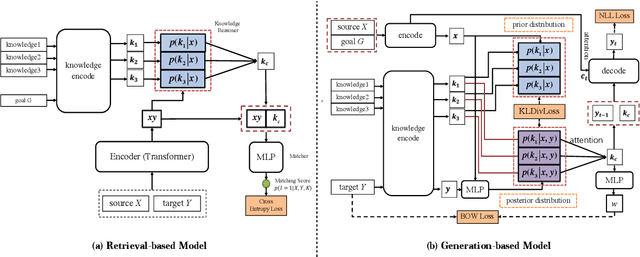

Abstract: Though great progress has been made for human-machine conversation, current dialogue systems are still in their infancy: they usually converse passively and utter words more as a matter of response, rather than on their own initiative. In this paper, we take a radical step towards building a human-like conversational agent: endowing it with the ability of proactively leading the conversation (introducing a new topic or maintaining the current topic). To facilitate the development of such conversation systems, we create a new dataset named DuConv where one participant acts as a conversation leader and the other acts as the follower. The leader is provided with a knowledge graph and asked to sequentially change the discussion topics, following the given conversation goal, and meanwhile keep the dialogue as natural and engaging as possible. DuConv enables a very challenging task as the model needs to both understand dialogue and plan over the given knowledge graph. We establish baseline results on this dataset (about 270K utterances and 30K dialogues) using several state-of-the-art models. Experimental results show that dialogue models that plan over the knowledge graph can make full use of related knowledge to generate more diverse multi-turn conversations. The baseline systems along with the dataset are publicly available.

Power-Law Graph Cuts

Nov 25, 2014

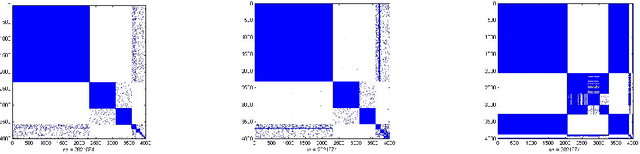

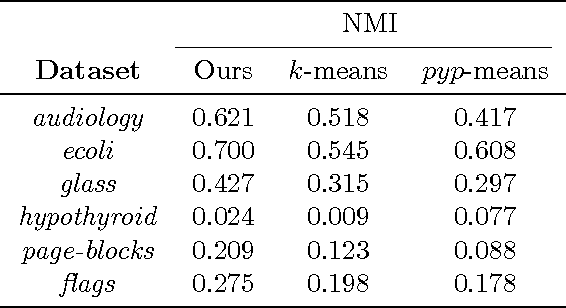

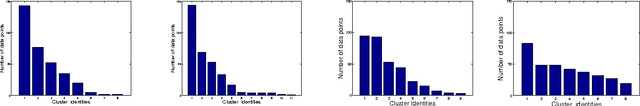

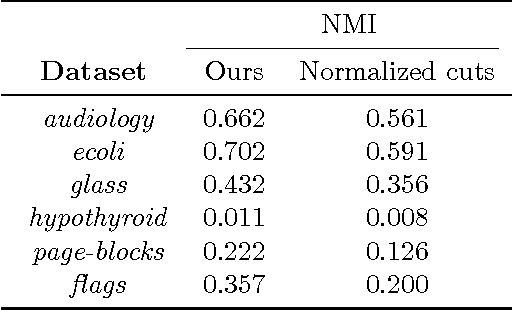

Abstract: Algorithms based on spectral graph cut objectives such as normalized cuts, ratio cuts and ratio association have become popular in recent years because they are widely applicable and simple to implement via standard eigenvector computations. Despite strong performance for a number of clustering tasks, spectral graph cut algorithms still suffer from several limitations: first, they require the number of clusters to be known in advance, but this information is often unknown a priori; second, they tend to produce clusters with uniform sizes. In some cases, the true clusters exhibit a known size distribution; in image segmentation, for instance, human-segmented images tend to yield segment sizes that follow a power-law distribution. In this paper, we propose a general framework of power-law graph cut algorithms that produce clusters whose sizes are power-law distributed and that do not fix the number of clusters upfront. To achieve our goals, we treat the Pitman-Yor exchangeable partition probability function (EPPF) as a regularizer to graph cut objectives. Because the resulting objectives cannot be solved by relaxing via eigenvectors, we derive a simple iterative algorithm to locally optimize the objectives. Moreover, we show that our proposed algorithm can be viewed as performing MAP inference on a particular Pitman-Yor mixture model. Our experiments on various data sets show the effectiveness of our algorithms.
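For reference, the standard two-parameter Pitman-Yor EPPF mentioned in the abstract can be written as follows. The notation here is an assumption rather than the paper's own: $n$ is the number of data points, $K$ the number of clusters, $n_k$ the size of cluster $k$, $d$ the discount parameter, and $\theta$ the concentration parameter. How the paper weights this term against the graph cut objective is not stated in the abstract and is not reproduced here.

```latex
p(n_1, \dots, n_K)
  = \frac{\prod_{i=1}^{K-1} (\theta + i d)}{(\theta + 1)_{n-1}}
    \prod_{k=1}^{K} (1 - d)_{n_k - 1},
\qquad
(x)_m = x (x+1) \cdots (x + m - 1),
\qquad
0 \le d < 1,\ \theta > -d .
```

As a quick check with $n = 2$: a single cluster has probability $(1 - d)/(\theta + 1)$ and two singleton clusters have probability $(\theta + d)/(\theta + 1)$, which sum to one. A discount $d > 0$ is what yields power-law behavior in cluster sizes.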
