Xiang Ye

TALE-teller: Tendon-Actuated Linked Element Robotic Testbed for Investigating Tail Functions

Oct 28, 2024

Abstract: Tails serve various functions in both robotics and biology, including expression, grasping, and defense. The vertebrate tails associated with these functions exhibit diverse patterns of vertebral lengths, but the precise mechanisms linking form to function have not yet been established. Vertebrate tails are complex musculoskeletal structures, making both direct experimentation and computational modeling challenging. This paper presents Tendon-Actuated Linked-Element (TALE), a modular robotic testbed to explore how tail morphology influences function. By varying 3D printed bones, silicone joints, and tendon configurations, TALE can match the morphology of extant, extinct, and even theoretical tails. We first characterized the stiffness of our joint design empirically and in simulation before testing the hypothesis that tails with different vertebral proportions curve differently. We then compared the maximum bending state of two common vertebrate proportions and one theoretical morphology. Uniform bending of joints with different vertebral proportions led to substantial differences in the location of the tail tip, suggesting a significant influence on overall tail function. Future studies can introduce more complex morphologies to establish the mechanisms of diverse tail functions. With this foundational knowledge, we will isolate the key features underlying tail function to inform the design of robotic tails. Images and videos can be found on TALE's project page: https://www.embirlab.com/tale.
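The claim that uniform joint bending moves the tail tip differently for different vertebral proportions can be illustrated with a minimal planar sketch. It is not the TALE model: it assumes rigid segments, ideal pin joints, an equal bend at every joint, and three hypothetical length patterns (`uniform`, `shortening_toward_tip`, `lengthening_toward_tip`) normalized to the same total length. The 30 degree joint angle is also an arbitrary choice for illustration.

```python
import math


def normalize(lengths, total=1.0):
    """Rescale segment lengths so that they sum to `total`."""
    scale = total / sum(lengths)
    return [scale * length for length in lengths]


def tail_tip(segment_lengths, joint_angle_rad):
    """Tip (x, y) of a planar chain whose joints all bend by the same angle.

    The first segment lies along +x; each following joint rotates the
    heading by `joint_angle_rad`.
    """
    x = y = heading = 0.0
    for length in segment_lengths:
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        heading += joint_angle_rad
    return x, y


# Hypothetical proportion patterns, all with total length 1.0.
PROPORTIONS = {
    "uniform": normalize([1, 1, 1, 1, 1]),
    "shortening_toward_tip": normalize([5, 4, 3, 2, 1]),
    "lengthening_toward_tip": normalize([1, 2, 3, 4, 5]),
}

JOINT_ANGLE = math.radians(30)  # assumed uniform bend per joint
tips = {name: tail_tip(lengths, JOINT_ANGLE) for name, lengths in PROPORTIONS.items()}

if __name__ == "__main__":
    for name, (x, y) in tips.items():
        print(f"{name}: tip at ({x:.3f}, {y:.3f})")
```

Because the later segments point in increasingly rotated directions, shifting length toward the base or toward the tip changes how much each direction contributes to the tip position, even though the joint angles and total length are identical.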

Canonical Mean Filter for Almost Zero-Shot Multi-Task classification

Apr 08, 2022

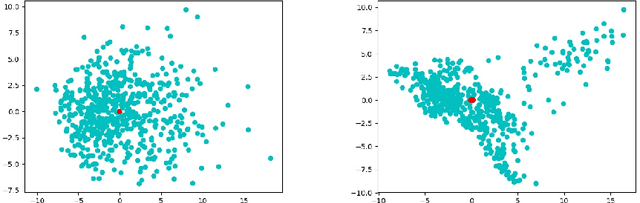

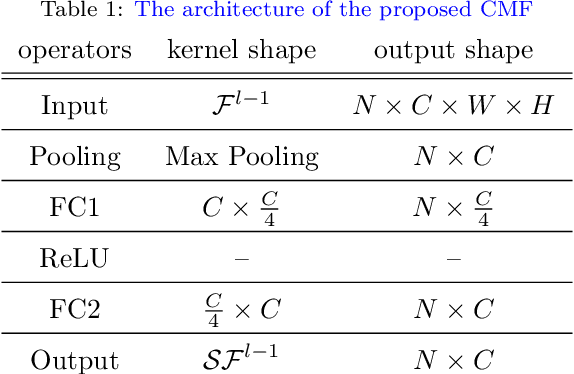

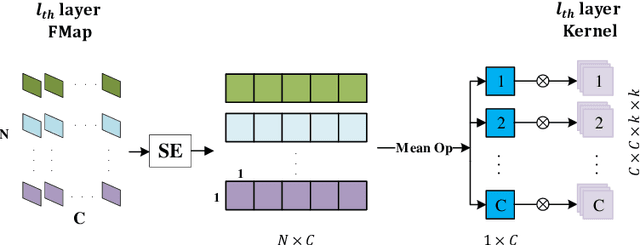

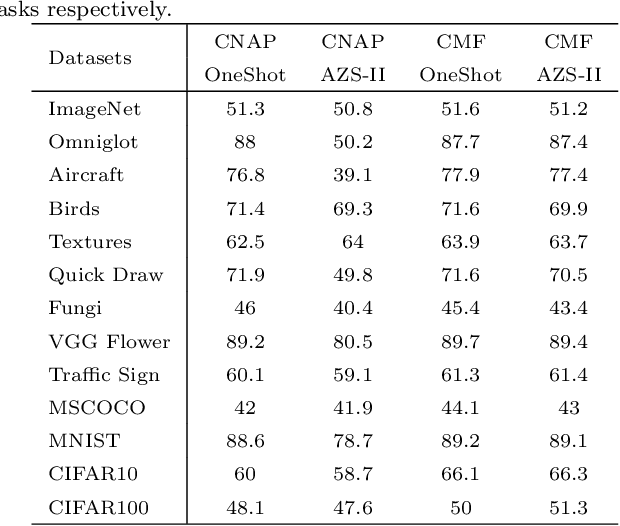

Abstract: The support set is key to providing the conditional prior for fast adaptation of the model in few-shot tasks. However, the strict form of the support set makes it difficult to construct in practical applications. Motivated by ANIL, we rethink the role of adaptation in the feature extractor of CNAPs, a representative state-of-the-art few-shot method. To investigate this role, we design the Almost Zero-Shot (AZS) task, which fixes the support set instead of following the common scheme of providing a corresponding support set for the conditional prior of each task. The AZS experimental results suggest that adaptation contributes little in the feature extractor. However, CNAPs are not robust to randomly selected support sets and perform poorly on some datasets of Meta-Dataset, because the simple mean operator produces scattered mean embeddings. To enhance the robustness of CNAPs, we propose the Canonical Mean Filter (CMF) module, which makes the mean embeddings concentrated and stable in feature space by mapping the support sets into a canonical form. CMFs make CNAPs robust to any fixed support set, even a random matrix. This property allows CNAPs to remove the mean encoder and the parameter adaptation network at the test stage, while CNAP-CMF on AZS tasks matches its performance on one-shot tasks. This leads to a large parameter reduction: 40.48% of the parameters are dropped at the test stage. CNAP-CMF also outperforms CNAPs on one-shot tasks because it addresses the problem of unstable intra-task performance. Classification performance, visualizations, and clustering results verify that CMFs make CNAPs better and simpler.
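The sensitivity of a simple mean operator to the choice of support set can be seen in a toy sketch. This is not the CNAPs encoder or the CMF module, whose details the abstract does not give: it assumes embeddings are plain 2-D vectors drawn from a hypothetical Gaussian pool, and it only contrasts randomly drawn one-shot support sets with a single fixed support set.

```python
import random


def mean_embedding(support):
    """Element-wise mean of a list of equal-length embedding vectors."""
    count = len(support)
    dim = len(support[0])
    return [sum(vec[d] for vec in support) / count for d in range(dim)]


rng = random.Random(0)

# Hypothetical pool of 2-D embeddings for one class.
pool = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(100)]

# One-shot tasks: each task draws a single random support sample,
# so the mean embedding is just that sample and varies from task to task.
one_shot_means = [mean_embedding(rng.sample(pool, 1)) for _ in range(5)]

# Fixed support set: every task reuses the same samples,
# so the mean embedding is identical across tasks.
fixed_support = pool[:5]
fixed_means = [mean_embedding(fixed_support) for _ in range(5)]

if __name__ == "__main__":
    print("one-shot means:", one_shot_means)
    print("fixed-support means:", fixed_means)
```

With a fixed support set the conditioning signal no longer changes between tasks, which is the setting the AZS task is described as probing.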

Towards Understanding the Effectiveness of Attention Mechanism

Jun 29, 2021

Abstract: The attention mechanism is a widely used method for improving the performance of convolutional neural networks (CNNs) on computer vision tasks. Despite its pervasiveness, we have a poor understanding of where its effectiveness comes from. It is popularly attributed to the visual attention explanation, which advocates focusing on the important parts of the input rather than ingesting the entire input. In this paper, we find that there is only a weak consistency between the attention weights of features and their importance. Instead, we verify the crucial role of feature map multiplication in the attention mechanism and uncover a fundamental impact of feature map multiplication on the learned landscapes of CNNs: through the high-order non-linearity it introduces, feature map multiplication plays a regularization role, leading CNNs to learn smoother and more stable landscapes near real samples than vanilla CNNs. This smoothness and stability induce more predictive and stable behavior in between real samples, and make CNNs generalize better. Moreover, motivated by this role of feature map multiplication, we design the feature map multiplication network (FMMNet) by simply replacing the feature map addition in ResNet with feature map multiplication. FMMNet outperforms ResNet on various datasets, which indicates that feature map multiplication plays a vital role in improving performance even without the finely designed attention mechanisms of existing methods.
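The change described for FMMNet, swapping the addition in a residual block for a multiplication, can be sketched on plain lists. This is a toy illustration, not the FMMNet implementation: `toy_branch` is a hypothetical stand-in for the convolutional branch, and the abstract does not state details such as normalization or activation placement.

```python
from typing import Callable, List

Vector = List[float]


def toy_branch(x: Vector) -> Vector:
    """Hypothetical stand-in for the learned branch F(x) of a residual block."""
    return [0.5 * value + 1.0 for value in x]


def combine_add(x: Vector, branch: Callable[[Vector], Vector]) -> Vector:
    """ResNet-style combination: y = x + F(x), element-wise."""
    return [a + b for a, b in zip(x, branch(x))]


def combine_mul(x: Vector, branch: Callable[[Vector], Vector]) -> Vector:
    """Multiplicative combination: y = x * F(x), element-wise."""
    return [a * b for a, b in zip(x, branch(x))]


if __name__ == "__main__":
    features = [1.0, 2.0, 4.0]
    print("add:", combine_add(features, toy_branch))
    print("mul:", combine_mul(features, toy_branch))
```

With an affine branch, the additive output stays linear in the input while the multiplicative output becomes quadratic, a small instance of the higher-order non-linearity the abstract attributes to feature map multiplication.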
