Towards Understanding the Effectiveness of Attention Mechanism

Paper and Code

Jun 29, 2021

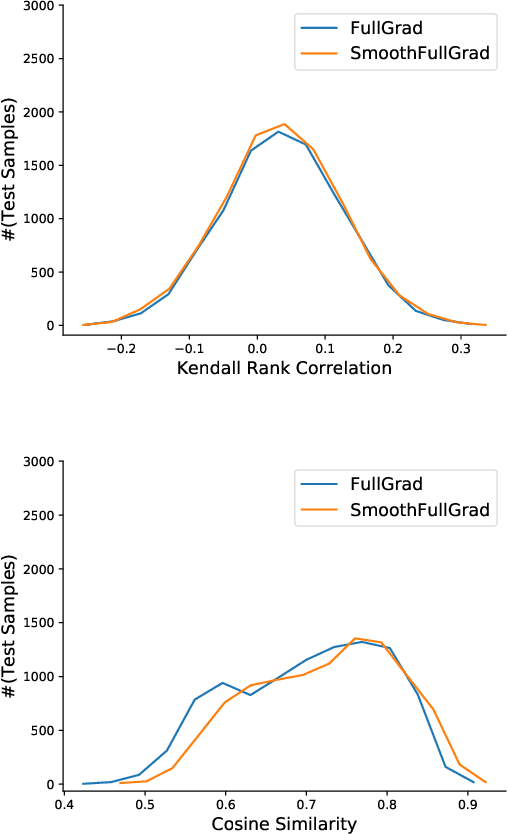

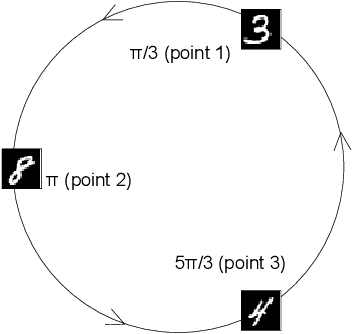

The attention mechanism is a widely used method for improving the performance of convolutional neural networks (CNNs) on computer vision tasks. Despite its pervasiveness, we have a poor understanding of where its effectiveness comes from. It is popularly attributed to the visual attention explanation, which advocates focusing on the important parts of the input rather than ingesting the entire input. In this paper, we find that there is only a weak consistency between the attention weights of features and their importance. Instead, we verify the crucial role of feature map multiplication in the attention mechanism and uncover a fundamental impact of feature map multiplication on the learned landscapes of CNNs: through the high-order non-linearity it introduces, feature map multiplication plays a regularization role on CNNs, making them learn smoother and more stable landscapes near real samples than vanilla CNNs. This smoothness and stability induce more predictive and stable behavior in between real samples and make CNNs generalize better. Moreover, motivated by the proposed effectiveness of feature map multiplication, we design the feature map multiplication network (FMMNet) by simply replacing the feature map addition in ResNet with feature map multiplication. FMMNet outperforms ResNet on various datasets, which indicates that feature map multiplication plays a vital role in improving performance even without the finely designed attention mechanisms of existing methods.
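To make the difference between feature map addition and feature map multiplication concrete, the toy sketch below contrasts the two ways of combining an input with a branch output. It is only an illustration under stated assumptions, not the FMMNet architecture: the abstract does not specify the block design, so the element-wise form `x * F(x)`, the single linear-plus-ReLU branch, and the names `branch`, `additive_block`, and `multiplicative_block` are all hypothetical, and feature maps are flattened to short vectors.

```python
def relu(v):
    """Element-wise ReLU on a flattened feature map."""
    return [max(0.0, a) for a in v]


def linear(weights, v):
    """Apply a weight matrix (list of rows) to a vector."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def branch(weights, x):
    """Hypothetical branch F(x): one linear map followed by ReLU."""
    return relu(linear(weights, x))


def additive_block(weights, x):
    """ResNet-style combination: y = x + F(x)."""
    return [a + b for a, b in zip(x, branch(weights, x))]


def multiplicative_block(weights, x):
    """Assumed multiplicative combination: y = x * F(x), element-wise."""
    return [a * b for a, b in zip(x, branch(weights, x))]


# Toy input and weights, chosen only for illustration.
x = [1.0, -2.0, 0.5]
weights = [
    [0.5, 0.0, 1.0],
    [0.0, 1.0, 0.0],
    [1.0, 1.0, 1.0],
]

y_add = additive_block(weights, x)
y_mul = multiplicative_block(weights, x)

# Doubling the input doubles the additive output but quadruples the
# multiplicative one, because x and F(x) each scale by 2 in this toy
# (no bias terms, so linear + ReLU is positively homogeneous).
x2 = [2.0 * a for a in x]
y_add_2x = additive_block(weights, x2)
y_mul_2x = multiplicative_block(weights, x2)
```

In this toy setting the additive block stays first-order in the scale of the input, while the multiplicative block is second-order. That is one simple way to read the "high-order non-linearity" the abstract refers to; it says nothing by itself about the regularization effect the paper reports.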
