Xiang Shen

Filter-And-Refine: A MLLM Based Cascade System for Industrial-Scale Video Content Moderation

Jul 23, 2025

Abstract: Effective content moderation is essential for video platforms to safeguard user experience and uphold community standards. While traditional video classification models effectively handle well-defined moderation tasks, they struggle with complicated scenarios such as implicit harmful content and contextual ambiguity. Multimodal large language models (MLLMs) offer a promising solution to these limitations with their superior cross-modal reasoning and contextual understanding. However, two key challenges hinder their industrial adoption. First, the high computational cost of MLLMs makes full-scale deployment impractical. Second, adapting generative models for discriminative classification remains an open research problem. In this paper, we first introduce an efficient method to transform a generative MLLM into a multimodal classifier using minimal discriminative training data. To enable industry-scale deployment, we then propose a router-ranking cascade system that integrates MLLMs with a lightweight router model. Offline experiments demonstrate that our MLLM-based approach improves F1 score by 66.50% over traditional classifiers while requiring only 2% of the fine-tuning data. Online evaluations show that our system increases automatic content moderation volume by 41%, while the cascading deployment reduces computational cost to only 1.5% of direct full-scale deployment.
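The abstract describes a router-ranking cascade in which a lightweight router decides which videos reach the expensive MLLM. The sketch below illustrates only the generic filter-then-refine pattern under stated assumptions: the function names (`cascade_moderate`, `relative_cost`), the top-fraction routing rule, and treating unforwarded items as negatives are all hypothetical and are not taken from the paper. It also shows why the cascade's cost scales with the forwarded fraction rather than with total volume.

```python
from typing import Callable, Dict, List, Sequence


def cascade_moderate(
    items: Sequence[str],                  # unique item IDs (assumed)
    router_score: Callable[[str], float],  # cheap model: higher = more likely harmful
    refine: Callable[[str], bool],         # expensive model, e.g. an MLLM classifier
    refine_fraction: float,                # share of traffic the expensive stage may see
) -> Dict[str, bool]:
    """Hypothetical two-stage cascade: only the top-ranked items reach `refine`.

    Items the router does not forward are treated as negatives (not flagged).
    """
    if not 0.0 <= refine_fraction <= 1.0:
        raise ValueError("refine_fraction must be in [0, 1]")
    # Stage 1 (filter): rank every item with the lightweight router.
    ranked: List[str] = sorted(items, key=router_score, reverse=True)
    budget = int(len(ranked) * refine_fraction)
    forwarded = set(ranked[:budget])
    # Stage 2 (refine): only forwarded items pay for the expensive model.
    return {item: (refine(item) if item in forwarded else False) for item in items}


def relative_cost(refine_fraction: float, router_cost_ratio: float) -> float:
    """Cascade cost relative to running the expensive model on every item.

    router_cost_ratio is the per-item router cost divided by the per-item
    expensive-model cost (an assumed, illustrative quantity).
    """
    return router_cost_ratio + refine_fraction
```

For example, with illustrative numbers that are not from the paper, forwarding 1% of traffic with a router costing 0.5% of an MLLM call gives `relative_cost(0.01, 0.005)`, roughly 0.015 of full-scale cost.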

CPFD: Confidence-aware Privileged Feature Distillation for Short Video Classification

Oct 07, 2024

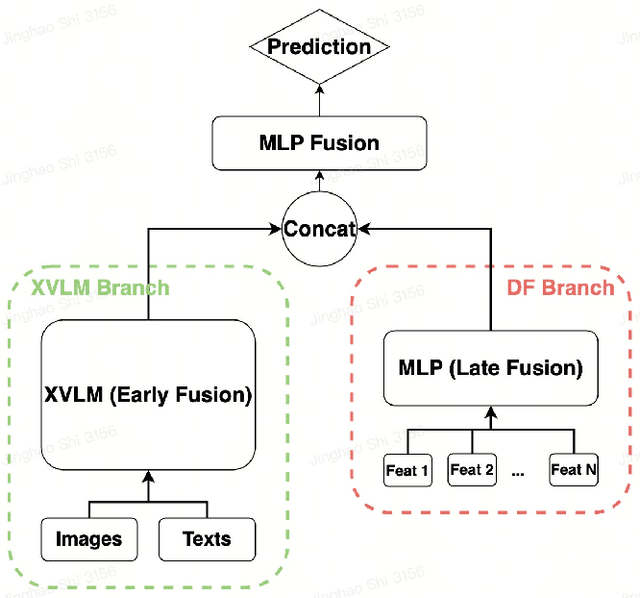

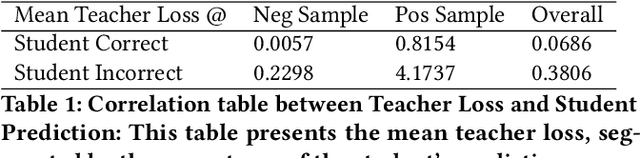

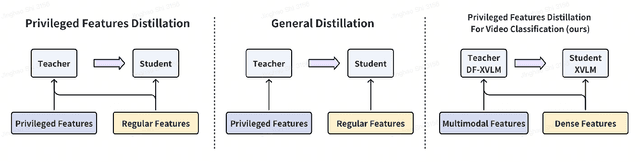

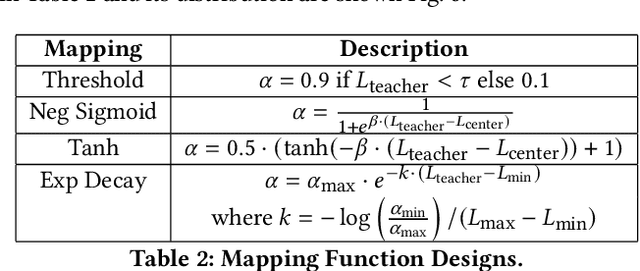

Abstract: Dense features, customized for different business scenarios, are essential in short video classification. However, their complexity, specific adaptation requirements, and high computational costs make them resource-intensive and less accessible during online inference. Consequently, these dense features are categorized as "Privileged Dense Features". Meanwhile, end-to-end multi-modal models have shown promising results in numerous computer vision tasks. In industrial applications, prioritizing end-to-end multi-modal features can enhance efficiency but often leads to the loss of valuable information from historical privileged dense features. To integrate both kinds of features while maintaining efficiency and manageable resource costs, we present Confidence-aware Privileged Feature Distillation (CPFD), which enhances the features of an end-to-end multi-modal model by adaptively distilling privileged features during training. Existing privileged feature distillation (PFD) methods apply uniform weights to all instances during distillation, which can cause unstable performance across different business scenarios and a notable performance gap between the teacher model (the dense-feature-enhanced multimodal model DF-X-VLM) and the student model (the multimodal-only model X-VLM). In contrast, CPFD leverages confidence scores derived from the teacher model to adaptively mitigate the performance variance relative to the student model. Extensive offline experiments on five diverse tasks demonstrate that CPFD improves the video classification F1 score by 6.76% compared with the end-to-end multimodal model (X-VLM) and by 2.31% compared with vanilla PFD, on average. It also reduces the performance gap by 84.6% and achieves results comparable to the teacher model DF-X-VLM. The effectiveness of CPFD is further substantiated by online experiments, and our framework has been deployed in production systems for over a dozen models.
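Per the abstract, CPFD's core change is to replace the uniform per-instance distillation weights of vanilla PFD with weights derived from teacher confidence. The following is a minimal sketch of that idea, not the paper's loss: the weighting rule (teacher probability on the true label), the KL distillation term, `alpha`, and all function names are assumptions for illustration.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[Sequence[float]],
    teacher_logits: Sequence[Sequence[float]],
    labels: Sequence[int],
    alpha: float = 0.5,
    confidence_aware: bool = True,
) -> float:
    """Mean of hard-label cross-entropy plus a per-instance weighted KL term.

    confidence_aware=False uses uniform weights (vanilla-PFD-style);
    True weights each instance by the teacher's probability on the true label
    (an assumed weighting, not necessarily the one used by CPFD).
    """
    total = 0.0
    for s_logits, t_logits, y in zip(student_logits, teacher_logits, labels):
        p_s = softmax(s_logits)
        p_t = softmax(t_logits)
        ce = -math.log(p_s[y])  # supervised loss on the hard label
        weight = p_t[y] if confidence_aware else 1.0
        total += ce + alpha * weight * kl_divergence(p_t, p_s)
    return total / len(labels)
```

With `confidence_aware=False` every instance is distilled equally; with `True`, instances on which the teacher is unsure or wrong contribute little to the distillation term, so the student relies mainly on the hard label there.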

Tracking Fast Neural Adaptation by Globally Adaptive Point Process Estimation for Brain-Machine Interface

Jul 27, 2021

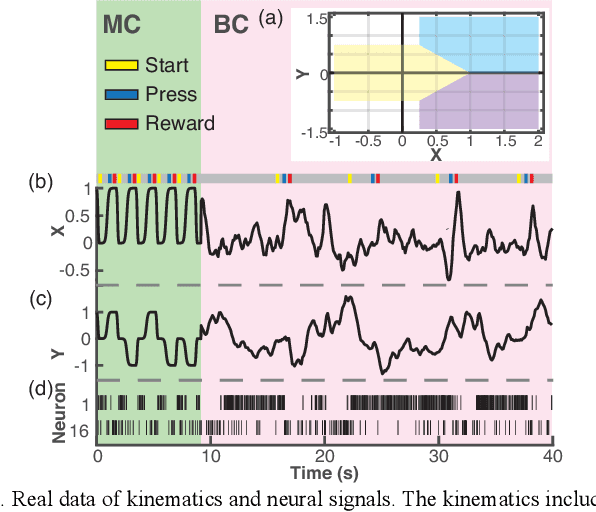

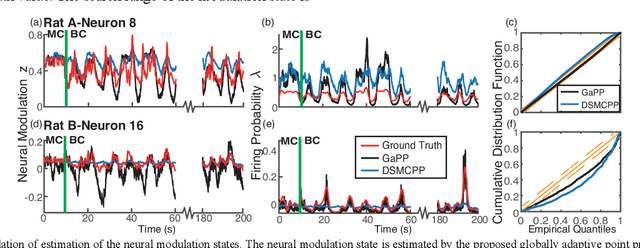

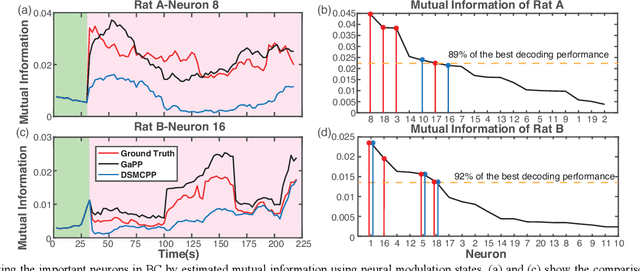

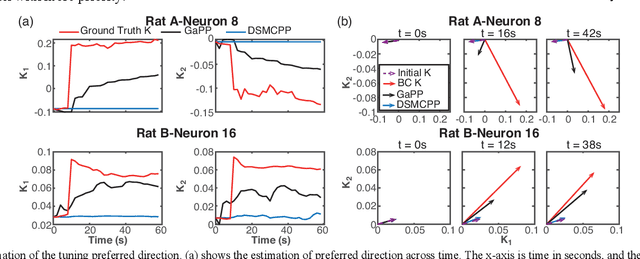

Abstract: Brain-machine interfaces (BMIs) help people with disabilities restore body functions by translating neural activity into digital commands that control external devices. Neural adaptation, in which brain signals change in response to external stimuli or movements, plays an important role in BMIs. When subjects use neural activity alone to brain-control a prosthesis, some neurons actively explore a new tuning property to accomplish the movement task. Predicting this neural tuning property can help subjects adapt more efficiently to brain control and maintain good decoding performance. Existing prediction methods track the slow change of the tuning property under manual control, which is not suitable for the fast neural adaptation in brain control. To identify the active neurons in brain control and track changes in their tuning properties, we propose a globally adaptive point process method (GaPP) that estimates the neural modulation state from spike trains, decomposes the states into the hyper preferred direction, and reconstructs the kinematics in a dual-model framework. We apply the method to real data from rats performing a two-lever discrimination task under manual control and brain control. The results show that our method successfully predicts the neural modulation state and identifies the neurons that become active in brain control. Compared to existing methods, ours tracks the fast changes of the hyper preferred direction from manual control to brain control more accurately and efficiently, and reconstructs the kinematics better and faster.
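The abstract does not give GaPP's exact formulation. As a stand-in, the sketch below implements a standard stochastic-state point-process filter (SSPPF) from the neural-decoding literature, which tracks tuning changes bin by bin: a neuron's spike train is modeled with a log-linear cosine-tuning intensity, and the tuning parameters follow a random walk updated on every time bin. The feature map, the noise level `q`, and all function names are assumptions; the recovered angle is the classic preferred direction, not the paper's "hyper preferred direction".

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def features(move_angle: float) -> Vector:
    """Cosine-tuning regressors: log-rate = b0 + a*cos(angle) + b*sin(angle)."""
    return [1.0, math.cos(move_angle), math.sin(move_angle)]


def ssppf_step(theta: Vector, W: Matrix, x: Vector, spike: int,
               dt: float, q: float) -> Tuple[Vector, Matrix]:
    """One predict/update step of a stochastic-state point-process filter."""
    n = len(theta)
    # Predict: random-walk state, so the mean is unchanged and covariance grows by q*I.
    W_prior = [[W[i][j] + (q if i == j else 0.0) for j in range(n)] for i in range(n)]
    lam = math.exp(sum(t * xi for t, xi in zip(theta, x)))  # conditional intensity
    c = lam * dt  # expected spike count in this bin
    # Covariance update: inv(W_post) = inv(W_prior) + c * x x^T (Sherman-Morrison).
    Wx = [sum(W_prior[i][j] * x[j] for j in range(n)) for i in range(n)]
    denom = 1.0 + c * sum(xi * wxi for xi, wxi in zip(x, Wx))
    W_post = [[W_prior[i][j] - c * Wx[i] * Wx[j] / denom for j in range(n)]
              for i in range(n)]
    # Mean update: gain times innovation (observed minus expected spike count).
    gain = [sum(W_post[i][j] * x[j] for j in range(n)) for i in range(n)]
    innovation = spike - c
    theta_post = [t + g * innovation for t, g in zip(theta, gain)]
    return theta_post, W_post


def preferred_direction(theta: Sequence[float]) -> float:
    """Movement angle (radians) at which the cosine-tuned rate peaks."""
    return math.atan2(theta[2], theta[1])
```

In use, `ssppf_step` would be called once per time bin with the current movement angle and that bin's spike count. A larger `q` lets the estimate follow faster tuning changes at the cost of noisier estimates.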
