Winter Guerra

FlightGoggles: Photorealistic Sensor Simulation for Perception-driven Robotics using Photogrammetry and Virtual Reality

May 27, 2019

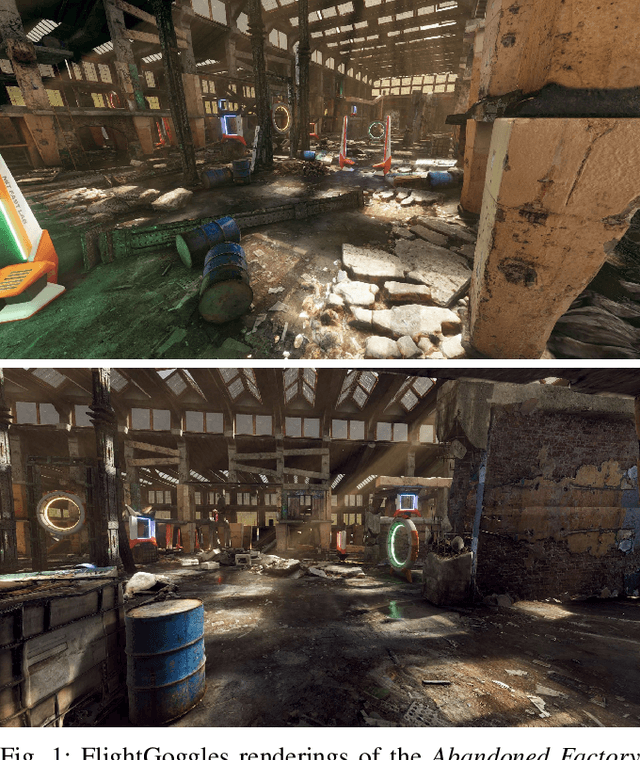

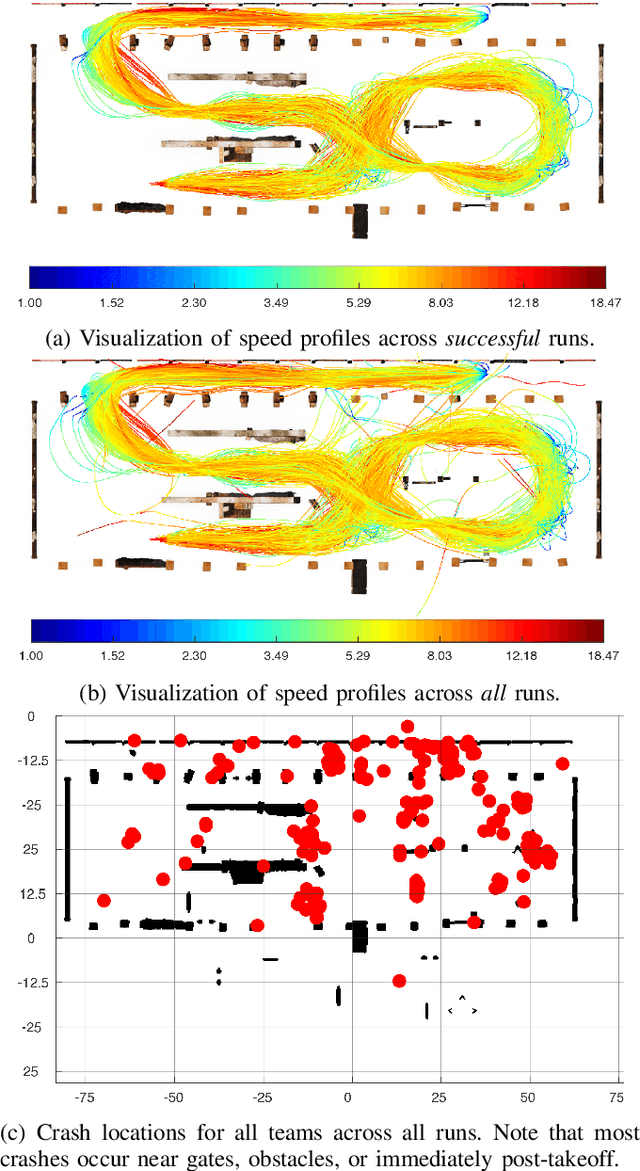

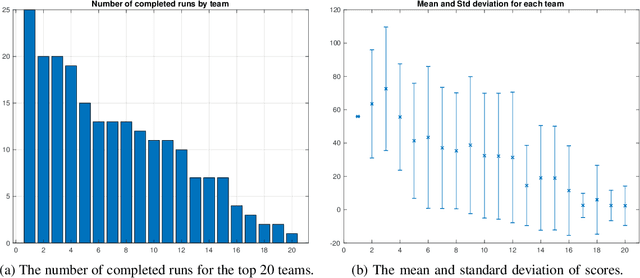

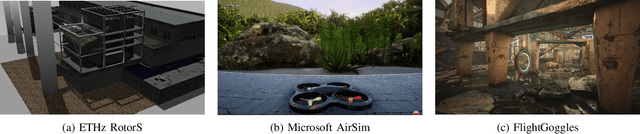

Abstract: FlightGoggles is a photorealistic sensor simulator for perception-driven robotic vehicles. The key contributions of FlightGoggles are twofold. First, FlightGoggles provides photorealistic exteroceptive sensor simulation using graphics assets generated with photogrammetry. Second, it provides the ability to combine $\textit{(i)}$ synthetic exteroceptive measurements generated $\textit{in silico}$ in real time and $\textit{(ii)}$ vehicle dynamics and proprioceptive measurements generated $\textit{in motio}$ by vehicle(s) in flight in a motion-capture facility. FlightGoggles is capable of simulating a virtual-reality environment around autonomous vehicle(s) in flight. While a vehicle is in flight in the FlightGoggles virtual-reality environment, exteroceptive sensors are rendered synthetically in real time, while all complex extrinsic dynamics are generated organically through the natural interactions of the vehicle. The FlightGoggles framework allows researchers to accelerate development by circumventing the need to estimate complex and hard-to-model interactions such as aerodynamics, motor mechanics, battery electrochemistry, and the behavior of other agents. The ability to perform vehicle-in-the-loop experiments with photorealistic exteroceptive sensor simulation facilitates novel research directions involving, $\textit{e.g.}$, fast and agile autonomous flight in obstacle-rich environments, safe human interaction, and flexible sensor selection. FlightGoggles has been utilized as the main test for selecting nine teams that will advance in the AlphaPilot autonomous drone racing challenge. Subsequently, FlightGoggles has been actively used by the community. We survey approaches and results from the top twenty AlphaPilot teams, which may be of independent interest.

The Blackbird Dataset: A large-scale dataset for UAV perception in aggressive flight

Oct 03, 2018

Abstract: The Blackbird unmanned aerial vehicle (UAV) dataset is a large-scale, aggressive indoor flight dataset collected using a custom-built quadrotor platform for use in the evaluation of agile perception. Inspired by the potential of future high-speed, fully autonomous drone racing, the Blackbird dataset contains over 10 hours of flight data from 168 flights over 17 flight trajectories and 5 environments at velocities up to $7.0\,\mathrm{m\,s^{-1}}$. Each flight includes sensor data from 120 Hz stereo and downward-facing photorealistic virtual cameras, a 100 Hz IMU, $\sim 190$ Hz motor speed sensors, and 360 Hz millimeter-accurate motion-capture ground truth. Camera images for each flight were photorealistically rendered using FlightGoggles across a variety of environments to facilitate easy experimentation with high-performance perception algorithms. The dataset is available for download at http://blackbird-dataset.mit.edu/
