Wenwei Luo

A Semantic-Enhanced Heterogeneous Graph Learning Method for Flexible Objects Recognition

Mar 28, 2025

Abstract:Flexible objects recognition remains a significant challenge because flexible objects have inherently diverse shapes and sizes, translucent attributes, and subtle inter-class differences. Graph-based models, such as graph convolution networks and graph vision models, are promising for flexible objects recognition because they can capture variable relations within the flexible objects. These methods, however, often focus on global visual relationships or fail to align semantic and visual information. To alleviate these limitations, we propose a semantic-enhanced heterogeneous graph learning method. First, an adaptive scanning module is employed to extract discriminative semantic context, facilitating the matching of flexible objects with varying shapes and sizes while aligning semantic and visual nodes to enhance cross-modal feature correlation. Second, a heterogeneous graph generation module aggregates global visual and local semantic node features, improving the recognition of flexible objects. Additionally, we introduce FSCW, a large-scale flexible dataset curated from existing sources. We validate our method through extensive experiments on flexible datasets (FDA and FSCW) and challenging benchmarks (CIFAR-100 and ImageNet-Hard), demonstrating competitive performance.

SWAT: Sliding Window Adversarial Training for Gradual Domain Adaptation

Jan 31, 2025

Abstract:Domain shifts are critical issues that harm the performance of machine learning. Unsupervised Domain Adaptation (UDA) mitigates this issue but suffers when the domain shifts are steep and drastic. Gradual Domain Adaptation (GDA) alleviates this problem in a mild way by gradually adapting from the source to the target domain using multiple intermediate domains. In this paper, we propose Sliding Window Adversarial Training (SWAT) for Gradual Domain Adaptation. SWAT uses the construction of adversarial streams to connect the feature spaces of the source and target domains. In order to gradually narrow the small gap between adjacent intermediate domains, a sliding window paradigm is designed that moves along the adversarial stream. When the window moves to the end of the stream, i.e., the target domain, the domain shift is drastically reduced. Extensive experiments are conducted on public GDA benchmarks, and the results demonstrate that the proposed SWAT significantly outperforms the state-of-the-art approaches. The implementation is available at: https://anonymous.4open.science/r/SWAT-8677.
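
The sliding-window idea in the abstract can be pictured with a small sketch. This is only an illustration under stated assumptions: the domains are treated as a discrete ordered list, the window covers two adjacent domains, and the names `sliding_windows`, `stream`, and `inter_1`/`inter_2` are hypothetical. The adversarial training step that SWAT performs inside each window is not shown, and the released implementation may differ.

```python
def sliding_windows(domains, size=2):
    """Yield consecutive windows of `size` adjacent domains, source to target.

    Assumption: domains form a discrete ordered list; the abstract does not
    specify the window size or how the adversarial stream is discretized.
    """
    for start in range(len(domains) - size + 1):
        yield domains[start:start + size]


# Hypothetical ordered stream: source, two intermediate domains, target.
stream = ["source", "inter_1", "inter_2", "target"]
windows = list(sliding_windows(stream))

for window in windows:
    # A real method would adapt the model across the domains in `window` here.
    print(" -> ".join(window))
```

Each window overlaps the previous one by one domain, so the model only has to bridge the small gap between neighbours before the window moves on toward the target.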

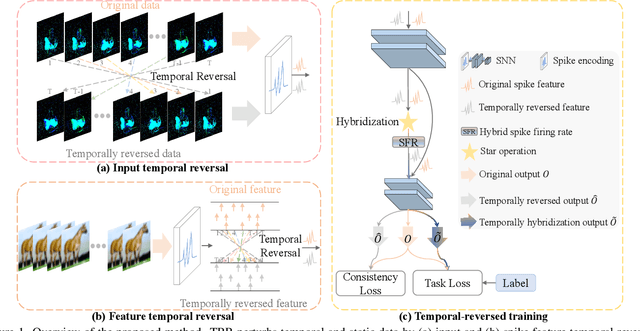

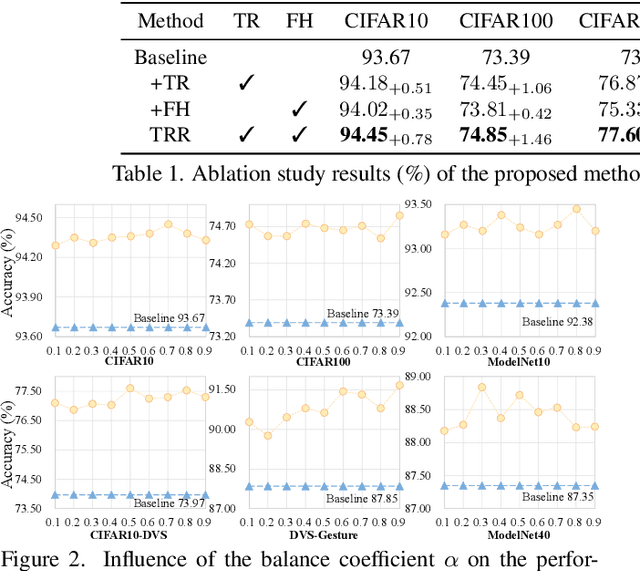

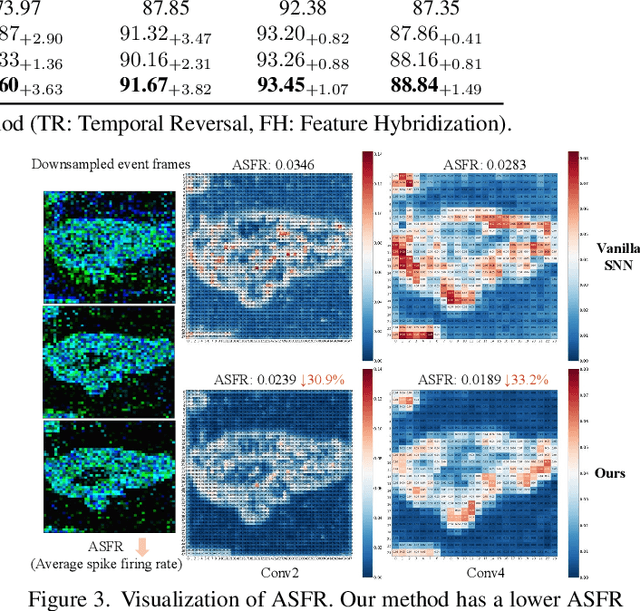

Temporal Reversed Training for Spiking Neural Networks with Generalized Spatio-Temporal Representation

Aug 17, 2024

Abstract:Spiking neural networks (SNNs) have received widespread attention as an ultra-low energy computing paradigm. Recent studies have focused on improving the feature extraction capability of SNNs, but they suffer from inefficient inference and suboptimal performance. In this paper, we propose a simple yet effective temporal reversed training (TRT) method to optimize the spatio-temporal performance of SNNs and circumvent these problems. We perturb the input temporal data by temporal reversal, prompting the SNN to produce original-reversed consistent output logits and to learn perturbation-invariant representations. For static data without temporal dimension, we generalize this strategy by exploiting the inherent temporal property of spiking neurons for spike feature temporal reversal. In addition, we utilize the lightweight "star operation" (element-wise multiplication) to hybridize the original and temporally reversed spike firing rates and expand the implicit dimensions, which serves as spatio-temporal regularization to further enhance the generalization of the SNN. Our method involves only an additional temporal reversal operation and element-wise multiplication during training, thus incurring negligible training overhead and not affecting the inference efficiency at all. Extensive experiments on static/neuromorphic object/action recognition, and 3D point cloud classification tasks demonstrate the effectiveness and generalizability of our method. In particular, with only two timesteps, our method achieves 74.77% and 90.57% accuracy on ImageNet and ModelNet40, respectively.
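
The three ingredients named in the abstract (temporal reversal, an original-versus-reversed consistency term, and the element-wise "star operation") can be sketched in a few lines of plain Python. This is a toy illustration, not the authors' implementation: the leaky integrate-and-fire neuron, its `decay` and `threshold` values, the squared-error consistency term, and all function names are assumptions, and firing rates stand in for the output logits that the paper compares.

```python
def reverse_time(seq):
    """Flip a sequence of per-timestep frames along the time axis."""
    return seq[::-1]


def lif_firing_rates(seq, decay=0.5, threshold=1.0):
    """Run one toy leaky integrate-and-fire neuron per channel.

    Returns the fraction of timesteps on which each channel spiked.
    The decay, threshold, and hard reset are illustrative assumptions.
    """
    n_channels = len(seq[0])
    membrane = [0.0] * n_channels
    spikes = [0] * n_channels
    for frame in seq:
        for i, current in enumerate(frame):
            membrane[i] = decay * membrane[i] + current
            if membrane[i] >= threshold:
                spikes[i] += 1
                membrane[i] = 0.0  # hard reset after a spike
    return [count / len(seq) for count in spikes]


def star(a, b):
    """Element-wise multiplication of two equal-length vectors."""
    return [x * y for x, y in zip(a, b)]


def consistency_loss(a, b):
    """Mean squared difference; a stand-in for the consistency objective."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Four timesteps, two input channels (made-up input currents).
seq = [[0.75, 1.0], [0.5, 0.0], [1.0, 0.0], [0.0, 1.0]]

rates = lif_firing_rates(seq)                    # original order
rates_rev = lif_firing_rates(reverse_time(seq))  # temporally reversed
hybrid = star(rates, rates_rev)                  # hybridized firing rates
loss = consistency_loss(rates, rates_rev)        # penalizes order sensitivity
```

Because the toy neuron is leaky, the first channel responds differently to the original and reversed inputs, which is what gives the consistency term something to penalize. Both extra operations appear only on the training side of this sketch, in line with the abstract's claim that inference cost is unaffected.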

A Hybrid Brain-Computer Interface Using Motor Imagery and SSVEP Based on Convolutional Neural Network

Dec 10, 2022

Abstract:The key to electroencephalography (EEG)-based brain-computer interface (BCI) lies in neural decoding, and its accuracy can be improved by using hybrid BCI paradigms, that is, fusing multiple paradigms. However, hybrid BCIs usually require a separate processing pipeline for the EEG signals of each paradigm, which greatly reduces the efficiency of EEG feature extraction and the generalizability of the model. Here, we propose a two-stream convolutional neural network (TSCNN) based hybrid brain-computer interface. It combines steady-state visual evoked potential (SSVEP) and motor imagery (MI) paradigms. TSCNN automatically learns to extract EEG features in the two paradigms during training, and improves the decoding accuracy on the test data by 25.4% compared with the MI mode and by 2.6% compared with the SSVEP mode. Moreover, the versatility of TSCNN is verified as it provides considerable performance in both single-mode (70.2% for MI, 93.0% for SSVEP) and hybrid-mode scenarios (95.6% for MI-SSVEP hybrid). Our work will facilitate the real-world applications of EEG-based BCI systems.
