Wendyam Eric Lionel Ilboudo

An Inference-Based Architecture for Intent and Affordance Saturation in Decision-Making

Dec 29, 2025

Abstract:Decision paralysis, i.e. hesitation, freezing, or failure to act despite full knowledge and motivation, poses a challenge for choice models that assume options are already specified and readily comparable. Drawing on qualitative reports in autism research that are especially salient, we propose a computational account in which paralysis arises from convergence failure in a hierarchical decision process. We separate intent selection (what to pursue) from affordance selection (how to pursue the goal) and formalize commitment as inference under a mixture of reverse- and forward-Kullback-Leibler (KL) objectives. Reverse KL is mode-seeking and promotes rapid commitment, whereas forward KL is mode-covering and preserves multiple plausible goals or actions. In static and dynamic (drift-diffusion) models, forward-KL-biased inference yields slow, heavy-tailed response times and two distinct failure modes, intent saturation and affordance saturation, when values are similar. Simulations in multi-option tasks reproduce key features of decision inertia and shutdown, treating autism as an extreme regime of a general, inference-based, decision-making continuum.
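The mode-seeking versus mode-covering contrast mentioned in this abstract can be illustrated with a small numerical sketch. This is not the paper's model: the bimodal target, the single-Gaussian candidate family, the grid search, and the mixing weight `lam` are all assumptions made for illustration only.

```python
import numpy as np

x = np.linspace(-10.0, 10.0, 2001)  # evaluation grid (illustrative choice)

def gaussian(mu, sigma):
    """Gaussian shape evaluated on the grid, normalised to sum to 1."""
    w = np.exp(-0.5 * ((x - mu) / sigma) ** 2)
    return w / w.sum()

# Hypothetical target: two equally weighted, well-separated modes at -2 and +2.
p = 0.5 * gaussian(-2.0, 0.5) + 0.5 * gaussian(2.0, 0.5)

def kl(a, b):
    """Discrete KL(a || b); entries where a == 0 contribute nothing."""
    mask = a > 0
    log_b = np.log(np.maximum(b[mask], 1e-300))  # guard against underflow
    return float(np.sum(a[mask] * (np.log(a[mask]) - log_b)))

def mixed_objective(q, lam):
    """(1 - lam) * reverse KL(q || p) + lam * forward KL(p || q).

    `lam` is a hypothetical mixing weight: 0 is pure reverse KL,
    1 is pure forward KL.
    """
    return (1.0 - lam) * kl(q, p) + lam * kl(p, q)

def best_fit(lam):
    """Grid-search the single Gaussian that minimises the mixed objective."""
    mus = np.linspace(-3.0, 3.0, 61)
    sigmas = np.linspace(0.3, 3.0, 28)
    scores = [(mixed_objective(gaussian(m, s), lam), m, s)
              for m in mus for s in sigmas]
    _, mu, sigma = min(scores)
    return float(mu), float(sigma)

reverse_fit = best_fit(0.0)  # mode-seeking: narrow fit on one mode
forward_fit = best_fit(1.0)  # mode-covering: broad fit spanning both modes
print("reverse KL fit (mu, sigma):", reverse_fit)
print("forward KL fit (mu, sigma):", forward_fit)
```

In this toy setting the reverse-KL fit commits to one of the two modes, while the forward-KL fit centres between them with a much larger width, which is the qualitative behaviour the abstract associates with rapid commitment and with preserving multiple options, respectively.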

Domains as Objectives: Domain-Uncertainty-Aware Policy Optimization through Explicit Multi-Domain Convex Coverage Set Learning

Oct 07, 2024

Abstract:Uncertainty is a feature of real-world robotics problems, and any control framework must contend with it in order to succeed in real application tasks. Reinforcement Learning is no different, and epistemic uncertainty arising from model uncertainty or misspecification is a challenge well captured by the sim-to-real gap. A simple solution to this issue is domain randomization (DR), which unfortunately can result in conservative agents. As a remedy to this conservativeness, the use of universal policies that take additional information about the randomized domain has emerged as an alternative solution, along with recurrent neural network-based controllers. Uncertainty-aware universal policies present a particularly compelling solution able to account for system identification uncertainties during deployment. In this paper, we reveal that the challenge of efficiently optimizing uncertainty-aware policies can be fundamentally reframed as solving the convex coverage set (CCS) problem within a multi-objective reinforcement learning (MORL) context. By introducing a novel Markov decision process (MDP) framework where each domain's performance is treated as an independent objective, we unify the training of uncertainty-aware policies with MORL approaches. This connection enables the application of MORL algorithms to DR, allowing for more efficient policy optimization. To illustrate this, we focus on the linear utility function, which aligns with the expectation in DR formulations, and propose a series of algorithms adapted from the MORL literature to solve the CCS, demonstrating their ability to enhance the performance of uncertainty-aware policies.
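To make the link between a linear utility and the convex coverage set concrete, here is a minimal sketch with two domains. The per-domain return vectors and the weight sweep are hypothetical and are not taken from the paper; the sweep only approximates the CCS by checking which candidate policy is best for each weight on a grid.

```python
import numpy as np

# Hypothetical per-domain returns: one row per candidate policy,
# one column per domain (each domain is treated as an objective).
returns = np.array([
    [10.0, 0.0],   # policy 0: specialist for domain 0
    [0.0, 10.0],   # policy 1: specialist for domain 1
    [4.0, 4.0],    # policy 2: dominated by policy 3
    [6.0, 6.0],    # policy 3: balanced
    [7.0, 2.0],    # policy 4: Pareto-nondominated, never best under linear utility
])

def convex_coverage_set(returns, n_weights=101):
    """Approximate the CCS for two objectives by sweeping linear weights.

    A policy is kept if it maximises w . returns for at least one weight
    vector w on the sampled grid over the 2-simplex.
    """
    kept = set()
    for w0 in np.linspace(0.0, 1.0, n_weights):
        w = np.array([w0, 1.0 - w0])
        kept.add(int(np.argmax(returns @ w)))
    return sorted(kept)

ccs = convex_coverage_set(returns)

# Under uniform domain randomisation the expected return is the linear
# utility with equal weights on both domains.
uniform_weights = np.array([0.5, 0.5])
best_under_uniform_dr = int(np.argmax(returns @ uniform_weights))

print("approximate CCS (policy indices):", ccs)
print("best policy under uniform DR:", best_under_uniform_dr)
```

Each weight vector plays the role of a belief over domains, so a set of policies covering all weights contains a best response for any such belief. Policy 4 shows that a Pareto-nondominated policy can still fall outside the CCS when utilities are linear.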

AdaTerm: Adaptive T-Distribution Estimated Robust Moments towards Noise-Robust Stochastic Gradient Optimizer

Jan 18, 2022

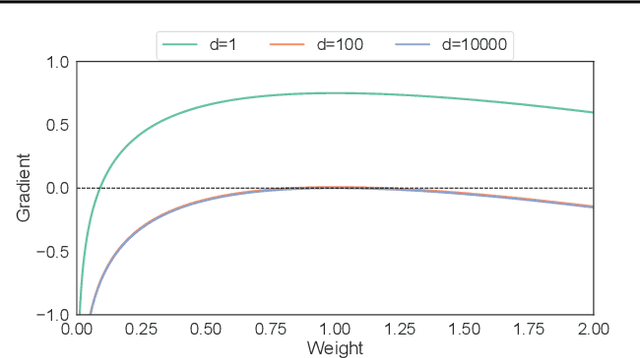

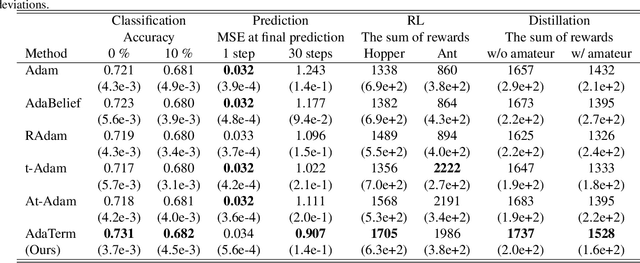

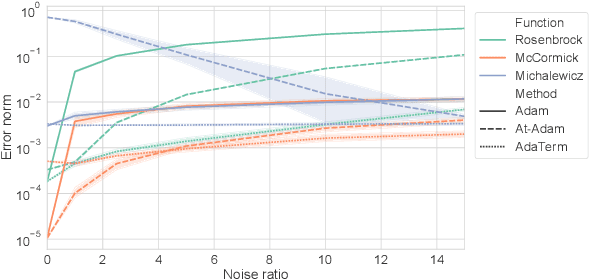

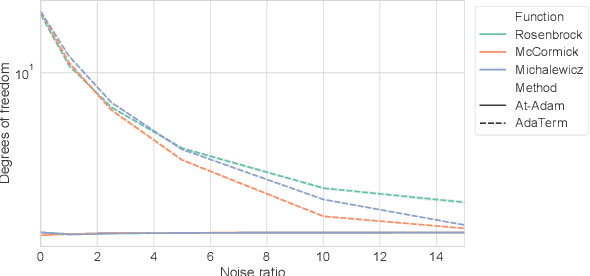

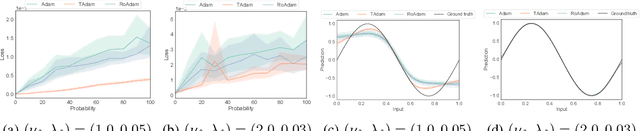

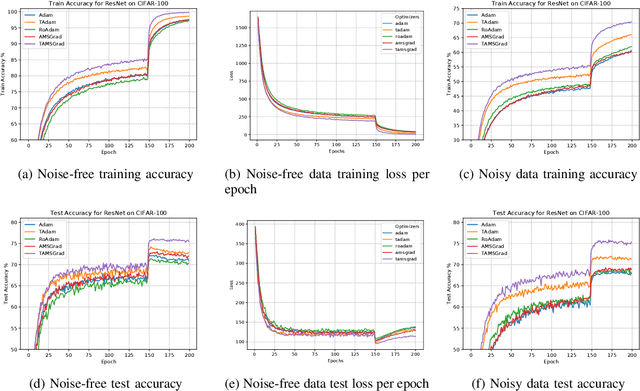

Abstract:As the problems to be optimized with deep learning become more practical, their datasets inevitably contain a variety of noise, such as mislabeling and substitution by estimated inputs/outputs, which can negatively affect the optimization results. As a safety net, it is natural to make the stochastic gradient descent (SGD) optimizer, which updates the network parameters as the final step of learning, more robust to noise. Related work revealed that the first momentum utilized in Adam-like SGD optimizers can be modified based on the noise-robust Student's t-distribution, thereby inheriting its robustness to noise. In this paper, we propose AdaTerm, which derives not only the first momentum but also all the involved statistics based on the Student's t-distribution. If the computed gradients appear likely to be aberrant, AdaTerm is expected to exclude them from the update and reinforce the robustness for the next updates; otherwise, it updates the network parameters normally and can relax the robustness for the next updates. With this noise-adaptive behavior, the excellent learning performance of AdaTerm was confirmed on typical optimization problems across several cases with different noise ratios.

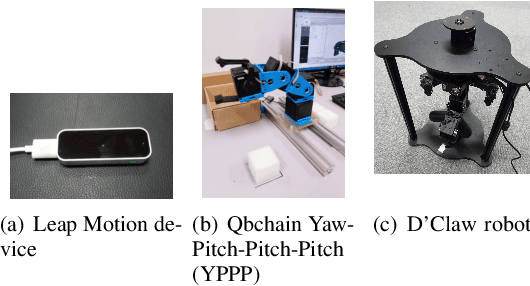

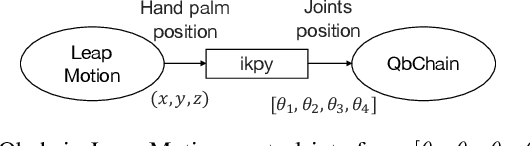

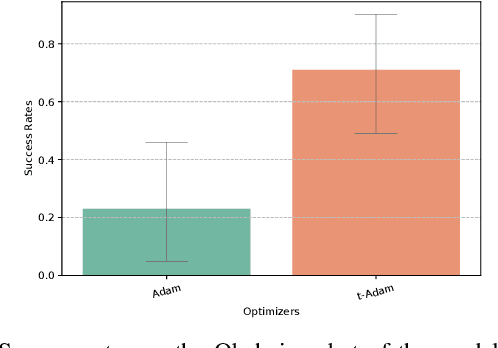

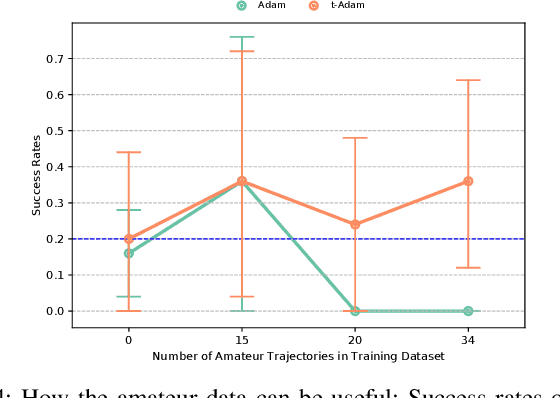

Adaptive t-Momentum-based Optimization for Unknown Ratio of Outliers in Amateur Data in Imitation Learning

Aug 02, 2021

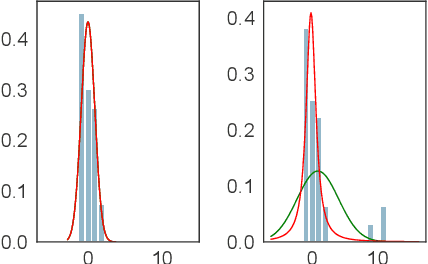

Abstract:Behavioral cloning (BC) bears a high potential for safe and direct transfer of human skills to robots. However, demonstrations performed by human operators often contain noise or imperfect behaviors that can affect the efficiency of the imitator if left unchecked. In order to allow the imitators to effectively learn from imperfect demonstrations, we propose to employ the robust t-momentum optimization algorithm. This algorithm builds on the Student's t-distribution in order to deal with heavy-tailed data and reduce the effect of outlying observations. We extend the t-momentum algorithm to allow for an adaptive and automatic robustness and show empirically how the algorithm can be used to produce robust BC imitators against datasets with unknown heaviness. Indeed, the imitators trained with the t-momentum-based Adam optimizers displayed robustness to imperfect demonstrations on two different manipulation tasks with different robots and revealed the capability to take advantage of the additional data while reducing the adverse effect of non-optimal behaviors.

t-Soft Update of Target Network for Deep Reinforcement Learning

Aug 25, 2020

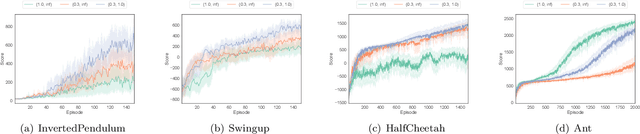

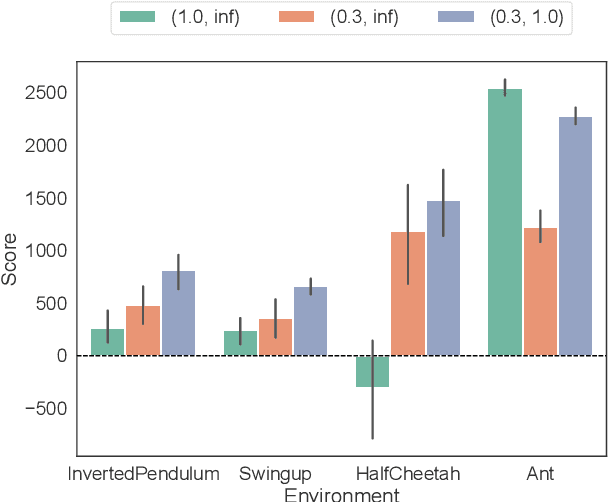

Abstract:This paper proposes a new robust update rule of the target network for deep reinforcement learning, to replace the conventional update rule, given as an exponential moving average. The problem with the conventional rule is that all the parameters are smoothly updated at the same speed, even when some of them are being updated in the wrong direction. To robustly update the parameters, the t-soft update, which is inspired by the Student's t-distribution, is derived with reference to the analogy between the exponential moving average and the normal distribution. In most PyBullet robotics simulations, an online actor-critic algorithm with the t-soft update outperformed the conventional methods in terms of the obtained return.

TAdam: A Robust Stochastic Gradient Optimizer

Mar 03, 2020

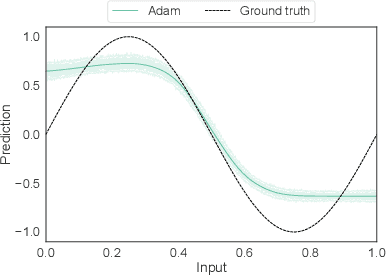

Abstract:Machine learning algorithms aim to find patterns from observations, which may include some noise, especially in the robotics domain. To perform well even with such noise, we expect them to be able to detect outliers and discard them when needed. We therefore propose a new stochastic gradient optimization method, whose robustness is directly built into the algorithm, using the robust Student's t-distribution as its core idea. Adam, the popular optimization method, is modified with our method, and the resulting optimizer, called TAdam, is shown to effectively outperform Adam in terms of robustness against noise on diverse tasks, ranging from regression and classification to reinforcement learning problems. The implementation of our algorithm can be found at https://github.com/Mahoumaru/TAdam.git
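The core idea of down-weighting outliers with a Student's t-distribution can be sketched on a scalar stream. This is a simplified illustration and not the code from the linked repository: the fixed `scale`, the degrees of freedom `nu`, the weight-accumulation rule, and the sample data are assumptions. The weight `(nu + 1) / (nu + D)` is the standard per-sample weight that arises when estimating the location of a one-dimensional Student's t-distribution.

```python
def ema_mean(samples, beta=0.9):
    """Conventional exponential moving average, as used for Adam's first moment."""
    m = 0.0
    for g in samples:
        m = beta * m + (1.0 - beta) * g
    return m

def t_weighted_mean(samples, beta=0.9, nu=1.0, scale=1.0):
    """Running mean whose per-sample weight shrinks for outlying samples.

    Assumptions for illustration: `scale` is held fixed, and the accumulated
    weight W decays by `beta` each step. If every weight w equals 1 and W
    starts at its steady-state value, this update coincides with the EMA.
    """
    m, W = 0.0, 0.0
    for g in samples:
        D = (g - m) ** 2 / scale          # squared distance from the current mean
        w = (nu + 1.0) / (nu + D)         # Student-t weight: small when D is large
        W = beta * W + w                  # decayed sum of weights
        m = m + (w / W) * (g - m)         # weighted incremental mean update
    return m

# Hypothetical gradient stream: fifty clean values followed by one outlier.
samples = [1.0] * 50 + [100.0]
ema_result = ema_mean(samples)
robust_result = t_weighted_mean(samples)
print("EMA estimate:", ema_result)
print("t-weighted estimate:", robust_result)
```

On this stream the single outlier pulls the EMA far away from the clean value, while the t-weighted estimate barely moves because the outlier receives a near-zero weight.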
