Weitong Ruan

Customize Multi-modal RAI Guardrails with Precedent-based predictions

Jul 28, 2025

Abstract: A multi-modal guardrail must effectively filter image content based on user-defined policies, identifying material that may be hateful, reinforce harmful stereotypes, contain explicit material, or spread misinformation. Deploying such guardrails in real-world applications, however, poses significant challenges. Users often require varied and highly customizable policies and typically cannot provide abundant examples for each custom policy. Consequently, an ideal guardrail should scale to multiple policies and adapt to evolving user standards with minimal retraining. Existing fine-tuning methods typically condition predictions on pre-defined policies, which restricts their generalizability to new policies or necessitates extensive retraining to adapt. Conversely, training-free methods struggle with limited context lengths, making it difficult to incorporate all the policies comprehensively. To overcome these limitations, we propose to condition the model's judgment on "precedents", which are the reasoning processes of prior data points similar to the given input. By leveraging precedents instead of fixed policies, our approach greatly enhances the flexibility and adaptability of the guardrail. In this paper, we introduce a critique-revise mechanism for collecting high-quality precedents and two strategies that utilize precedents for robust prediction. Experimental results demonstrate that our approach outperforms previous methods across both few-shot and full-dataset scenarios and exhibits superior generalization to novel policies.

Improving Spoken Language Understanding By Exploiting ASR N-best Hypotheses

Jan 11, 2020

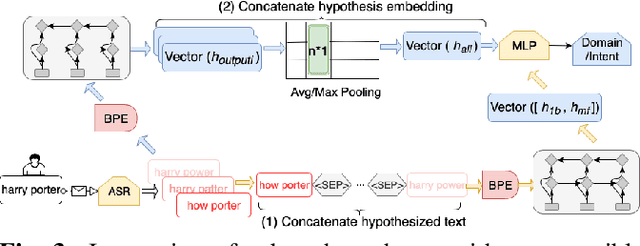

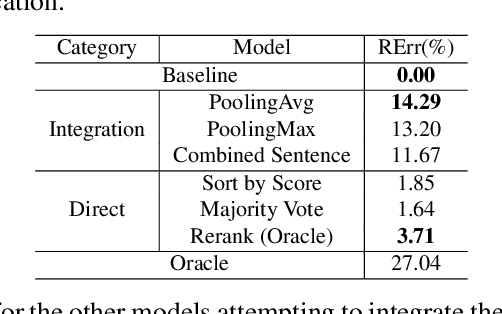

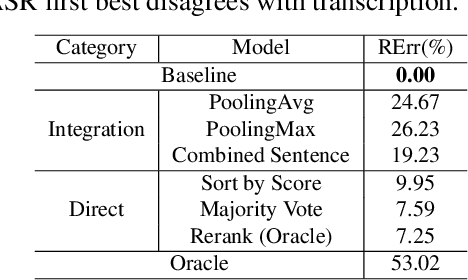

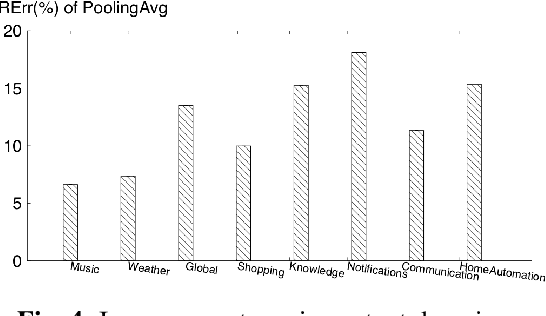

Abstract: In a modern spoken language understanding (SLU) system, the natural language understanding (NLU) module takes interpretations of an utterance from the automatic speech recognition (ASR) module as input. The NLU module usually uses the first-best interpretation of a given utterance in downstream tasks such as domain and intent classification. However, the ASR module might misrecognize some utterances, so the first-best interpretation could be erroneous and noisy. Relying solely on the first-best interpretation could make the performance of downstream tasks suboptimal. To address this issue, we introduce a series of simple yet efficient models that improve the understanding of the semantics of the input speech by collectively exploiting the n-best speech interpretations from the ASR module.

Ensemble Multi-task Gaussian Process Regression with Multiple Latent Processes

May 09, 2018

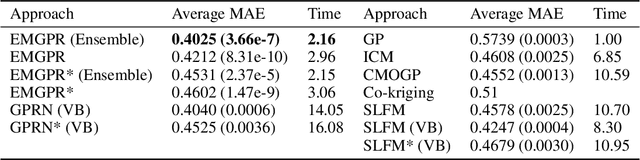

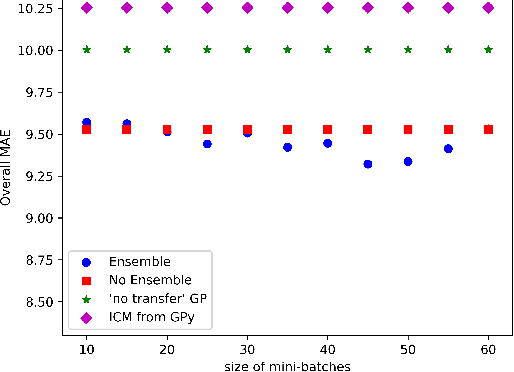

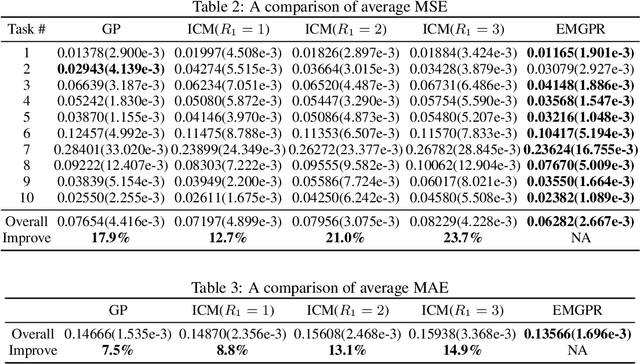

Abstract: Multi-task/multi-output learning seeks to exploit correlation among tasks to enhance performance over learning or solving each task independently. In this paper, we investigate this problem in the context of Gaussian Processes (GPs) and propose a new model that learns a mixture of latent processes by decomposing the covariance matrix into a sum of structured hidden components, each of which is controlled by a latent GP over input features and a "weight" over tasks. From this sum structure, we propose a parallelizable parameter learning algorithm with a predetermined initialization for the "weights". We also observe that an ensemble parameter learning approach using mini-batches of training data not only reduces the computational complexity of learning but also improves the regression performance. We evaluate our model on two datasets, the smaller Swiss Jura dataset and the relatively larger ATMS dataset from NOAA. Substantial improvements are observed compared with established alternatives.
