Weilong Fu

Simulating financial time series using attention

Jul 01, 2022

Abstract: Financial time series simulation is a central topic because it extends the limited real data available for training and evaluating trading strategies. It is also challenging because of the complex statistical properties of real financial data. We introduce two generative adversarial networks (GANs) for financial time series simulation, one built on convolutional networks with attention and the other on transformers. The GANs learn the statistical properties in a data-driven manner, and the attention mechanism helps to replicate long-range dependencies. The proposed GANs are tested on S&P 500 index and option data, evaluated with scores based on the stylized facts, and compared with a pure convolutional GAN, QuantGAN. The attention-based GANs not only reproduce the stylized facts but also smooth the autocorrelation of returns.
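As a rough illustration of what a check based on stylized facts might look at, the sketch below computes log returns and their sample autocorrelation with NumPy. It is not the paper's scoring code: the function names `log_returns` and `autocorrelation`, the synthetic random-walk prices, and the choice of lags are all assumptions made for this example.

```python
import numpy as np


def log_returns(prices):
    """Log returns r_t = log(p_t) - log(p_{t-1}) of a positive price series."""
    prices = np.asarray(prices, dtype=float)
    return np.diff(np.log(prices))


def autocorrelation(x, max_lag):
    """Sample autocorrelation of x at lags 1..max_lag (biased estimator)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array(
        [np.dot(x[:-k], x[k:]) / denom for k in range(1, max_lag + 1)]
    )


# Hypothetical data: a geometric random walk standing in for an index series.
rng = np.random.default_rng(0)
prices = 100.0 * np.exp(np.cumsum(0.01 * rng.standard_normal(2000)))

returns = log_returns(prices)
acf_returns = autocorrelation(returns, max_lag=20)          # linear dependence
acf_abs = autocorrelation(np.abs(returns), max_lag=20)      # volatility clustering
```

On real index data, the autocorrelation of returns is typically close to zero while that of absolute returns decays slowly; comparing these curves between real and simulated series is one plausible way to judge a generator.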

Accurate Protein Structure Prediction by Embeddings and Deep Learning Representations

Nov 09, 2019

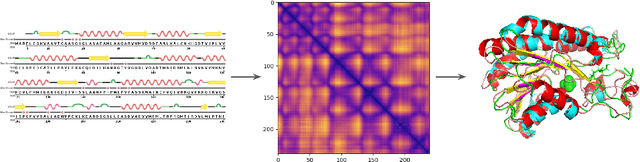

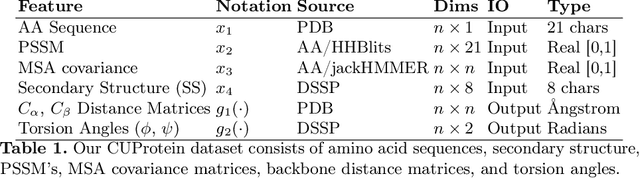

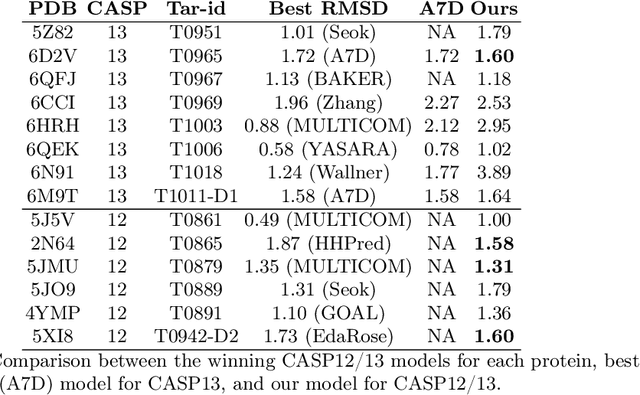

Abstract: Proteins are the major building blocks of life and the actuators of almost all chemical and biophysical events in living organisms. Their native structures in turn enable their biological functions, which play a fundamental role in drug design. This motivates predicting the structure of a protein from its sequence of amino acids, a fundamental problem in computational biology. In this work, we demonstrate state-of-the-art protein structure prediction (PSP) results using embeddings and deep learning models to predict backbone atom distance matrices and torsion angles. We recover the 3D coordinates of backbone atoms and reconstruct the full-atom protein by optimization. We create a new gold-standard dataset of proteins that is comprehensive and easy to use. Our dataset consists of amino acid sequences, Q8 secondary structures, position-specific scoring matrices, multiple sequence alignment co-evolutionary features, backbone atom distance matrices, torsion angles, and 3D coordinates. We evaluate the quality of our structure predictions by RMSD on the latest Critical Assessment of Techniques for Protein Structure Prediction (CASP) test data, demonstrating results competitive with the winning teams and AlphaFold in CASP13 and surpassing the results of the winning teams in CASP12. We make our data, models, and code publicly available.
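To make two of the quantities named in the abstract concrete, the following sketch computes a pairwise distance matrix from backbone coordinates and an RMSD between two coordinate sets. It is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the coordinates are random stand-ins, and the use of Kabsch superposition before measuring RMSD is an assumption, since the abstract does not say how structures are aligned.

```python
import numpy as np


def distance_matrix(coords):
    """Pairwise Euclidean distances between N points, shape (N, N)."""
    coords = np.asarray(coords, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def kabsch_rmsd(P, Q):
    """RMSD between point sets P and Q (both N x 3) after optimal superposition."""
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)
    # Remove translation by centering both sets on their centroids.
    P = P - P.mean(axis=0)
    Q = Q - Q.mean(axis=0)
    # Kabsch algorithm: SVD of the 3 x 3 covariance matrix.
    U, _, Vt = np.linalg.svd(P.T @ Q)
    # Flip the last axis if needed so the result is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    P_rot = P @ R.T
    return np.sqrt(((P_rot - Q) ** 2).sum(axis=1).mean())


# Hypothetical example: random "backbone" coordinates, rotated and shifted.
rng = np.random.default_rng(0)
P = rng.standard_normal((50, 3))
theta = 0.7
Rz = np.array([
    [np.cos(theta), -np.sin(theta), 0.0],
    [np.sin(theta), np.cos(theta), 0.0],
    [0.0, 0.0, 1.0],
])
Q = P @ Rz.T + np.array([1.0, -2.0, 3.0])

rmsd = kabsch_rmsd(P, Q)
```

Because a distance matrix is unchanged by rotation and translation, `distance_matrix(P)` and `distance_matrix(Q)` agree here, which is one reason distance matrices are a convenient prediction target before 3D coordinates are recovered.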
