Wataru Hashimoto

Efficient Nearest Neighbor based Uncertainty Estimation for Natural Language Processing Tasks

Jul 02, 2024

Abstract: Trustworthy prediction in Deep Neural Networks (DNNs), including Pre-trained Language Models (PLMs), is important for safety-critical applications in the real world. However, DNNs often suffer from poor uncertainty estimation, such as miscalibration. Approaches that require multiple stochastic inference passes can mitigate this problem, but their high inference cost makes them impractical. In this study, we propose $k$-Nearest Neighbor Uncertainty Estimation ($k$NN-UE), an uncertainty estimation method that uses the distances from the neighbors and the label-existence ratio of the neighbors. Experiments on sentiment analysis, natural language inference, and named entity recognition show that our proposed method outperforms the baselines and recent density-based methods in confidence calibration, selective prediction, and out-of-distribution detection. Moreover, our analyses indicate that dimension reduction and approximate nearest neighbor search, inspired by recent $k$NN-LM studies, reduce the inference overhead without significantly degrading estimation performance when they are combined appropriately.
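The abstract names two signals, neighbor distances and the label-existence ratio, but does not give the formula that combines them. The following is a minimal sketch of one plausible combination, not the paper's actual method: the function name `knn_confidence`, the `temperature` parameter, the exponential distance term, and the multiplicative weighting are all assumptions made for illustration.

```python
import numpy as np

def knn_confidence(query, train_feats, train_labels, probs, k=3, temperature=1.0):
    """Scale the softmax confidence by a kNN-based weight (illustrative assumption)."""
    # Euclidean distance from the query to every stored training representation
    dists = np.linalg.norm(train_feats - query, axis=1)
    nn_idx = np.argsort(dists)[:k]
    # Distance term: 1 when the neighbors coincide with the query, near 0 when they are far
    distance_term = np.exp(-dists[nn_idx] / temperature).mean()
    # Label-existence ratio: fraction of neighbors whose label equals the predicted label
    pred = int(np.argmax(probs))
    label_ratio = np.mean(train_labels[nn_idx] == pred)
    return float(probs[pred] * distance_term * label_ratio), pred

# Toy data (hypothetical): three training points at the origin, one far away
feats = np.array([[0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [5.0, 5.0]])
labels = np.array([1, 1, 0, 0])
probs = np.array([0.2, 0.8])

near_conf, pred = knn_confidence(np.array([0.0, 0.0]), feats, labels, probs)
far_conf, _ = knn_confidence(np.array([100.0, 100.0]), feats, labels, probs)
print(pred, near_conf, far_conf)
```

In this sketch a query far from all training representations receives a much lower confidence than the raw softmax value, which is the qualitative behavior one would want for out-of-distribution inputs.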

Are Data Augmentation Methods in Named Entity Recognition Applicable for Uncertainty Estimation?

Jul 02, 2024

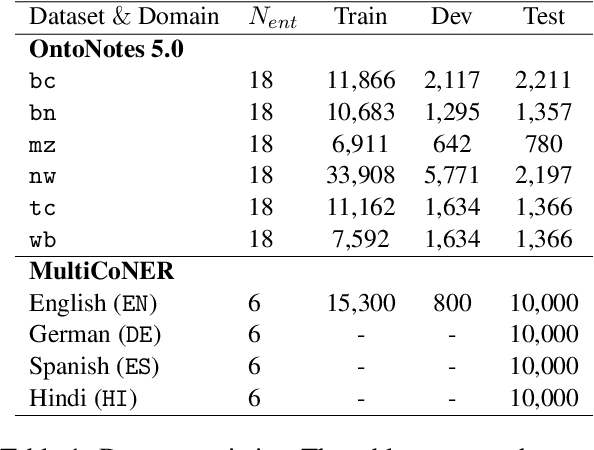

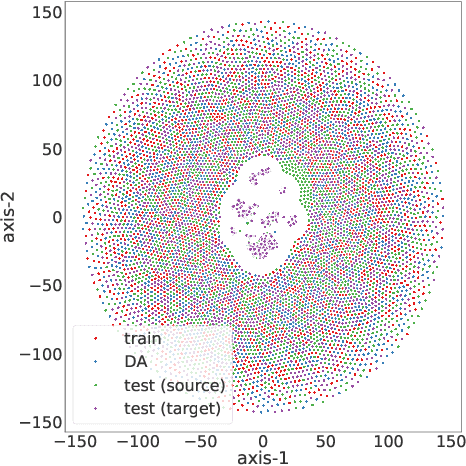

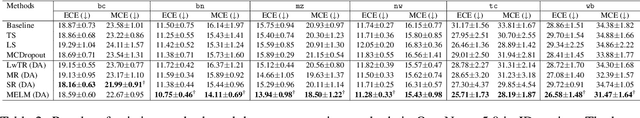

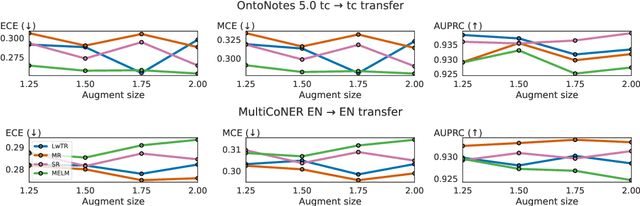

Abstract: This work investigates the impact of data augmentation on confidence calibration and uncertainty estimation in Named Entity Recognition (NER) tasks. For the future advancement of NER in safety-critical fields like healthcare and finance, it is essential to achieve accurate predictions with calibrated confidence when applying Deep Neural Networks (DNNs), including Pre-trained Language Models (PLMs), in real-world applications. However, DNNs are prone to miscalibration, which limits their applicability. Moreover, existing methods for calibration and uncertainty estimation are computationally expensive. Our investigation in NER found that data augmentation improves calibration and uncertainty estimation in cross-genre and cross-lingual settings, and especially in the in-domain setting. Furthermore, we showed that calibration for NER tends to be more effective when the perplexity of the sentences generated by data augmentation is lower, and that increasing the size of the augmentation further improves calibration and uncertainty estimation.
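Confidence calibration is commonly quantified with the expected calibration error (ECE), which bins predictions by confidence and averages the gap between accuracy and mean confidence per bin. The abstract does not say which metric the study reports, so treating ECE as the measure here is an assumption, and `expected_calibration_error` is an illustrative name rather than code from the paper.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |accuracy - mean confidence| over equal-width bins."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        # Half-open bins (lo, hi]; a confidence of exactly 0 falls in no bin
        in_bin = (confidences > lo) & (confidences <= hi)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by the fraction of samples in the bin
    return float(ece)

# Overconfident toy model: 95% confidence but only 3 of 4 predictions correct
print(expected_calibration_error([0.95, 0.95, 0.95, 0.95], [1, 1, 1, 0]))
```

A lower value means that the reported confidence tracks the observed accuracy more closely.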

Long-term Safe Reinforcement Learning with Binary Feedback

Jan 11, 2024

Abstract: Safety is an indispensable requirement for applying reinforcement learning (RL) to real problems. Although there has been a surge of safe RL algorithms proposed in recent years, most existing work typically 1) relies on receiving numeric safety feedback; 2) does not guarantee safety during the learning process; 3) limits the problem to a priori known, deterministic transition dynamics; and/or 4) assumes the existence of a known safe policy for any state. To address these issues, we propose Long-term Binary-feedback Safe RL (LoBiSaRL), a safe RL algorithm for constrained Markov decision processes (CMDPs) with binary safety feedback and an unknown, stochastic state transition function. LoBiSaRL optimizes a policy to maximize rewards while guaranteeing long-term safety, meaning that an agent executes only safe state-action pairs throughout each episode with high probability. Specifically, LoBiSaRL models the binary safety function via a generalized linear model (GLM) and conservatively takes only a safe action at every time step while inferring its effect on future safety under proper assumptions. Our theoretical results show that LoBiSaRL guarantees the long-term safety constraint with high probability. Finally, our empirical results demonstrate that our algorithm is safer than existing methods without significantly compromising performance in terms of reward.

Safe Exploration in Reinforcement Learning: A Generalized Formulation and Algorithms

Oct 05, 2023

Abstract: Safe exploration is essential for the practical use of reinforcement learning (RL) in many real-world scenarios. In this paper, we present a generalized safe exploration (GSE) problem as a unified formulation of common safe exploration problems. We then propose a solution to the GSE problem in the form of a meta-algorithm for safe exploration, MASE, which combines an unconstrained RL algorithm with an uncertainty quantifier to guarantee safety in the current episode while properly penalizing unsafe explorations before an actual safety violation to discourage them in future episodes. The advantage of MASE is that we can optimize a policy while guaranteeing with high probability that no safety constraint will be violated under proper assumptions. Specifically, we present two variants of MASE with different constructions of the uncertainty quantifier: one based on generalized linear models with theoretical guarantees of safety and near-optimality, and another that combines a Gaussian process to ensure safety with a deep RL algorithm to maximize the reward. Finally, we demonstrate that our proposed algorithm achieves better performance than state-of-the-art algorithms on grid-world and Safety Gym benchmarks without violating any safety constraints, even during training.

Neural Controller Synthesis for Signal Temporal Logic Specifications Using Encoder-Decoder Structured Networks

Dec 10, 2022

Abstract: In this paper, we propose a control synthesis method for signal temporal logic (STL) specifications with neural networks (NNs). Most previous works consider training a controller for only a single given STL specification. These approaches, however, require retraining the NN controller whenever a new specification arises and needs to be satisfied, which results in large memory consumption and inefficient training. To tackle this problem, we propose to construct NN controllers by introducing encoder-decoder structured NNs with an attention mechanism. The encoder takes an STL formula as input and encodes it into an appropriate vector, and the decoder outputs control signals that will meet the given specification. As the encoder, we consider three NN structures: sequential, tree-structured, and graph-structured NNs. All the model parameters are trained in an end-to-end manner to maximize the expected robustness, which is a quantitative semantics of STL formulae. We compare the control performances attained by the above NN structures through a numerical experiment on a path planning problem, showing the efficacy of the proposed approach.
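The robustness mentioned above is the standard quantitative semantics of STL: a predicate maps each signal value to a signed margin, "always" takes the minimum of that margin over a time window, "eventually" takes the maximum, and conjunction takes the minimum of its operands. The sketch below evaluates it for a discrete-time 1-D trajectory. The trajectory, the thresholds, and the helper names are hypothetical, and this is not the paper's training code.

```python
def predicate_robustness(signal, margin):
    """Signed margin of a predicate at each time step (positive means satisfied)."""
    return [margin(x) for x in signal]

def always(rho, a, b):
    """Robustness of G_[a,b] at time 0: the worst margin inside the window."""
    return min(rho[a:b + 1])

def eventually(rho, a, b):
    """Robustness of F_[a,b] at time 0: the best margin inside the window."""
    return max(rho[a:b + 1])

# Hypothetical trajectory and specification:
# "eventually reach x >= 2.5" AND "always stay at x <= 4"
xs = [0.0, 1.0, 2.0, 3.0]
reach = predicate_robustness(xs, lambda x: x - 2.5)
safe = predicate_robustness(xs, lambda x: 4.0 - x)

# Conjunction is the minimum of the two sub-formula robustness values
rho = min(eventually(reach, 0, 3), always(safe, 0, 3))
print(rho)  # 0.5, positive, so the trajectory satisfies the specification
```

A positive robustness value means the specification holds with that much margin, which is why it can serve as a training objective to be maximized.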
