Wanli Qian

SHAPE-IT: Exploring Text-to-Shape-Display for Generative Shape-Changing Behaviors with LLMs

Sep 10, 2024

Abstract: This paper introduces text-to-shape-display, a novel approach to generating dynamic shape changes in pin-based shape displays through natural language commands. By leveraging large language models (LLMs) and AI-chaining, our approach allows users to author shape-changing behaviors on demand through text prompts, without programming. We describe the foundational aspects necessary for such a system, including the identification of key generative elements (primitive, animation, and interaction) and design requirements to enhance user interaction, based on formative exploration and iterative design processes. Based on these insights, we develop SHAPE-IT, an LLM-based authoring tool for a 24 x 24 shape display, which translates the user's textual command into executable code and allows for quick exploration through a web-based control interface. We evaluate the effectiveness of SHAPE-IT in two ways: 1) performance evaluation and 2) user evaluation (N = 10). The study results highlight the tool's ability to facilitate rapid ideation of a wide range of shape-changing behaviors with AI. However, the findings also expose accuracy-related challenges and limitations, prompting further exploration into refining the framework for leveraging AI to better suit the unique requirements of shape-changing systems.
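To make the "primitive" and "animation" elements concrete, the following is a minimal sketch of the kind of code a text command such as "make a ripple" might be translated into. It is not taken from SHAPE-IT: the function names `ripple_frame` and `animate`, the normalized height range, and all parameter values are assumptions for illustration; only the 24 x 24 grid size comes from the abstract.

```python
import math

GRID = 24  # pin grid size stated in the abstract (24 x 24)


def ripple_frame(t, max_height=1.0, wavelength=6.0, speed=4.0):
    """Return a GRID x GRID list of pin heights in [0, max_height] at time t.

    Hypothetical primitive: a circular wave centered on the display.
    Heights are normalized; a real display would map them to actuator units.
    """
    cx = cy = (GRID - 1) / 2.0  # center of the pin grid
    frame = []
    for y in range(GRID):
        row = []
        for x in range(GRID):
            r = math.hypot(x - cx, y - cy)  # distance from center, in pins
            phase = 2 * math.pi * (r - speed * t) / wavelength
            # Shift the sine from [-1, 1] into [0, 1] before scaling.
            row.append(max_height * 0.5 * (1 + math.sin(phase)))
        frame.append(row)
    return frame


def animate(duration=1.0, fps=10):
    """Hypothetical animation: sample the primitive at a fixed frame rate."""
    return [ripple_frame(i / fps) for i in range(int(duration * fps))]
```

Separating the per-frame primitive from the time sampling mirrors the abstract's distinction between primitive and animation; an interaction element would, under the same assumptions, modify parameters such as the wave center in response to sensed input.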

Extended Version of GTGraffiti: Spray Painting Graffiti Art from Human Painting Motions with a Cable Driven Parallel Robot

Sep 16, 2021

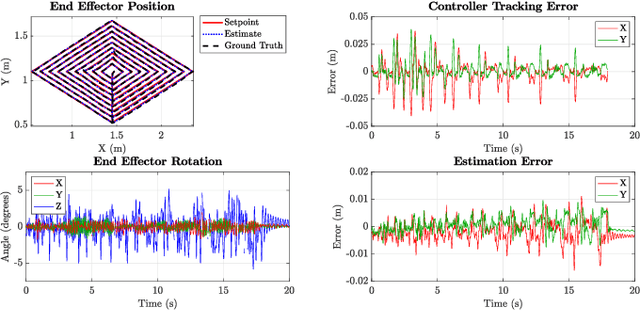

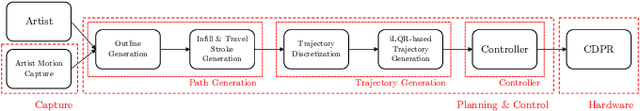

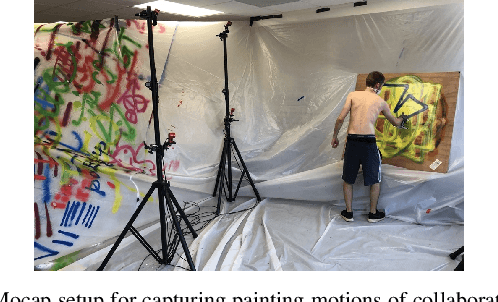

Abstract: We present GTGraffiti, a graffiti painting system from Georgia Tech that tackles challenges in art, hardware, and human-robot collaboration. The problem of painting graffiti in a human style is particularly challenging and requires a system-level approach because the robotics and art must be designed around each other. The robot must be highly dynamic over a large workspace while the artist must work within the robot's limitations. Our approach consists of three stages: artwork capture, robot hardware, and planning & control. We use motion capture to record collaborators' painting motions, which are then composed and processed into a time-varying linear feedback controller for a cable-driven parallel robot (CDPR) to execute. In this work, we describe the capturing process, the design and construction of a purpose-built CDPR, and the software for turning an artist's vision into control commands. Our work represents an important step towards faithfully recreating human graffiti artwork by demonstrating that we can reproduce artist motions up to 2 m/s and 20 m/s$^2$ within 9.3 mm RMSE to paint artworks. Added material not in the original work is colored in red.
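As a rough illustration of the two technical notions the abstract relies on, the sketch below shows the generic form of a time-varying linear feedback law, u_t = u_ff,t + K_t (x_ref,t - x_t), and one common way to compute a tracking RMSE. The abstract does not specify the state definition, the gains, or the exact error metric, so the function names `tv_feedback` and `rmse`, the gain layout, and the choice of Euclidean position error are all assumptions.

```python
import math


def tv_feedback(x, x_ref, u_ff, K):
    """One step of a generic time-varying linear feedback law.

    u = u_ff + K (x_ref - x), where u_ff and K are the feedforward
    command and gain matrix scheduled for the current time step.
    K is a list of rows; all vectors are plain lists (assumed layout).
    """
    err = [r - s for r, s in zip(x_ref, x)]
    return [uf + sum(k * e for k, e in zip(row, err))
            for uf, row in zip(u_ff, K)]


def rmse(actual, reference):
    """Root-mean-square of Euclidean errors between two point sequences."""
    sq = [sum((a - b) ** 2 for a, b in zip(p, q))
          for p, q in zip(actual, reference)]
    return math.sqrt(sum(sq) / len(sq))
```

In a tracking loop under these assumptions, `tv_feedback` would be called once per time step with that step's scheduled `u_ff` and `K`, and `rmse` would be evaluated afterwards on the recorded and reference positions expressed in the same units (for example millimeters).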
