Vojtěch Řehák

Memory Assignment for Finite-Memory Strategies in Adversarial Patrolling Games

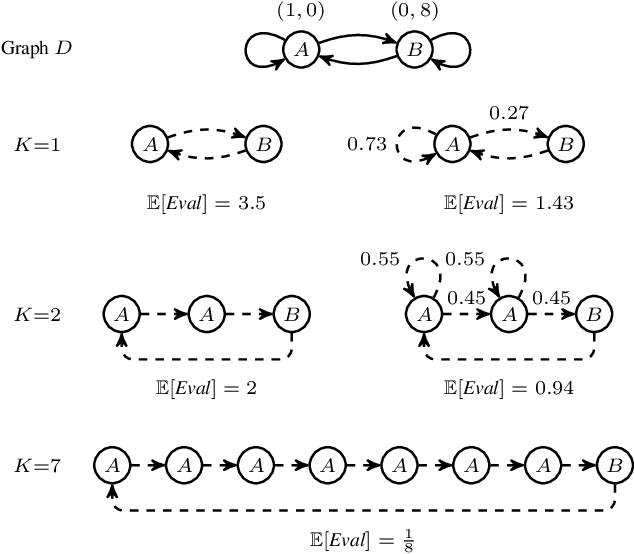

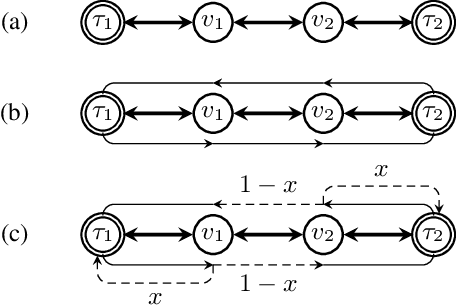

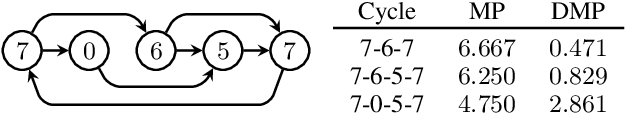

May 20, 2025

Abstract: Adversarial Patrolling games form a subclass of Security games where a Defender moves between locations, guarding vulnerable targets. The main algorithmic problem is constructing a strategy for the Defender that minimizes the worst damage an Attacker can cause. We focus on the class of finite-memory (also known as regular) Defender's strategies that experimentally outperformed other competing classes. A finite-memory strategy can be seen as a positional strategy on a finite set of states. Each state consists of a pair of a location and a certain integer value, called memory. Existing algorithms improve the transitional probabilities between the states but require that the available memory size itself is assigned at each location manually. Choosing the right memory assignment is a well-known open and hard problem that hinders the usability of finite-memory strategies. We solve this issue by developing a general method that iteratively changes the memory assignment. Our algorithm can be used in connection with any black-box strategy optimization tool. We evaluate our method on various experiments and show its robustness by solving instances of various patrolling models.

Multiple Mean-Payoff Optimization under Local Stability Constraints

Dec 17, 2024

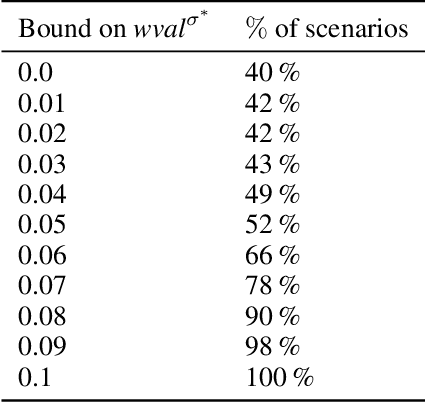

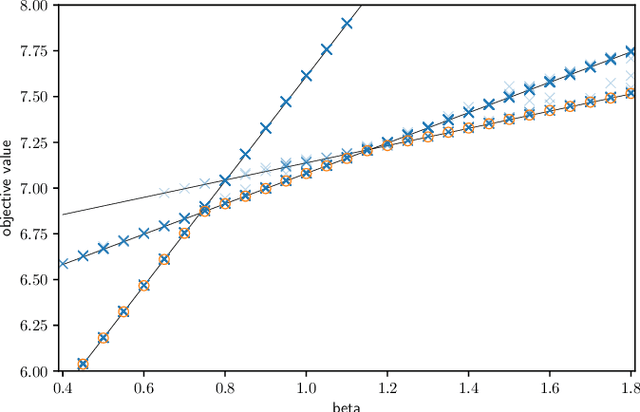

Abstract: The long-run average payoff per transition (mean payoff) is the main tool for specifying the performance and dependability properties of discrete systems. The problem of constructing a controller (strategy) simultaneously optimizing several mean payoffs has been deeply studied for stochastic and game-theoretic models. One common issue of the constructed controllers is the instability of the mean payoffs, measured by the deviations of the average rewards per transition computed in a finite "window" sliding along a run. Unfortunately, the problem of simultaneously optimizing the mean payoffs under local stability constraints is computationally hard, and the existing works do not provide a practically usable algorithm even for non-stochastic models such as two-player games. In this paper, we design and evaluate the first efficient and scalable solution to this problem applicable to Markov decision processes.

General Optimization Framework for Recurrent Reachability Objectives

May 27, 2022

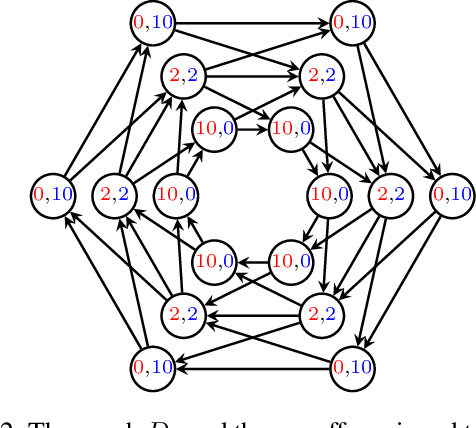

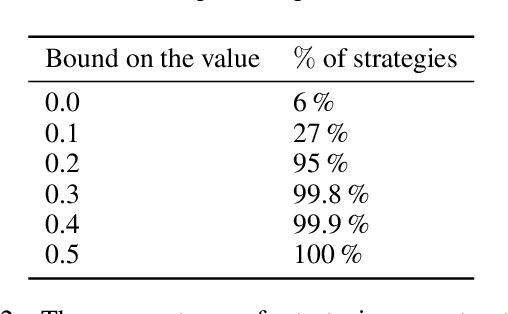

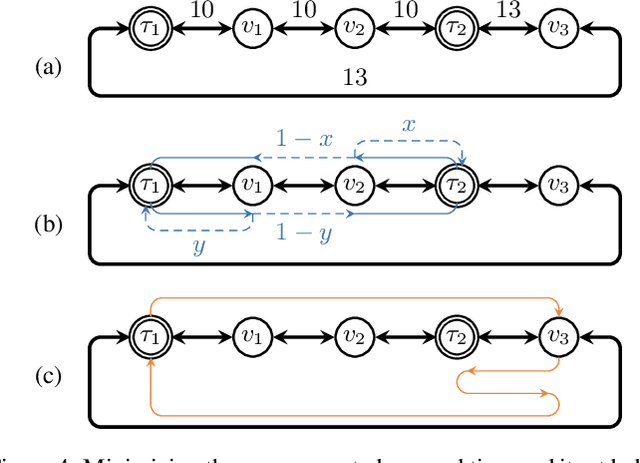

Abstract: We consider the mobile robot path planning problem for a class of recurrent reachability objectives. These objectives are parameterized by the expected time needed to visit one position from another, the expected square of this time, and also the frequency of moves between two neighboring locations. We design an efficient strategy synthesis algorithm for recurrent reachability objectives and demonstrate its functionality on non-trivial instances.

Synthesizing Efficient Solutions for Patrolling Problems in the Internet Environment

May 10, 2018

Abstract: We propose an algorithm for constructing efficient patrolling strategies in the Internet environment, where the protected targets are nodes connected to the network and the patrollers are software agents capable of detecting/preventing undesirable activities on the nodes. The algorithm is based on a novel compositional principle designed for a special class of strategies, and it can quickly construct (sub)optimal solutions even if the number of targets reaches hundreds of millions.
