Vladislav Pyatov

Robust Two-View Geometry Estimation with Implicit Differentiation

Oct 23, 2024

Abstract: We present a novel two-view geometry estimation framework based on fitting a differentiable robust loss function. We treat robust fundamental matrix estimation as an implicit layer, which avoids backpropagation through time and significantly improves numerical stability. To take full advantage of the information from the feature matching stage, we incorporate learnable weights that depend on the matching confidences. In this way, our solution brings together feature extraction, matching, and two-view geometry estimation in a unified end-to-end trainable pipeline. We evaluate our approach on the camera pose estimation task in both outdoor and indoor scenarios. Experiments on several datasets show that the proposed method outperforms both classic and learning-based state-of-the-art methods by a large margin. The project webpage is available at: https://github.com/VladPyatov/ihls
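The idea of treating a robust estimator as an implicit layer can be illustrated on a toy problem. The sketch below is not the authors' fundamental matrix estimator: it assumes a scalar location estimate under a pseudo-Huber loss, and the names `robust_location` and `implicit_grad` are hypothetical. It solves the problem with iteratively reweighted least squares, then obtains the gradient of the solution with respect to the per-sample weights from the implicit function theorem at the optimum, without differentiating through the solver iterations.

```python
import math

def rho_prime(r):
    # First derivative of the pseudo-Huber loss rho(r) = sqrt(1 + r^2) - 1.
    return r / math.sqrt(1.0 + r * r)

def rho_second(r):
    # Second derivative of the same loss; always positive.
    return (1.0 + r * r) ** -1.5

def robust_location(y, w, iters=200, tol=1e-15):
    # Minimise sum_i w_i * rho(x - y_i) over x with IRLS (toy stand-in solver).
    x = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)  # weighted-mean start
    for _ in range(iters):
        # Robust reweighting: samples with large residuals get small weight.
        s = [wi / math.sqrt(1.0 + (x - yi) ** 2) for wi, yi in zip(w, y)]
        x_new = sum(si * yi for si, yi in zip(s, y)) / sum(s)
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    return x

def implicit_grad(y, w, x_star):
    # Stationarity condition: g(x, w) = sum_i w_i * rho'(x - y_i) = 0.
    # Implicit function theorem: dx*/dw_j = -(dg/dw_j) / (dg/dx)
    #                                     = -rho'(x* - y_j) / sum_i w_i rho''(x* - y_i).
    h = sum(wi * rho_second(x_star - yi) for wi, yi in zip(w, y))
    return [-rho_prime(x_star - yj) / h for yj in y]

# Hypothetical data: four inliers near 1.0, one outlier at 8.0.
y = [0.9, 1.0, 1.1, 1.2, 8.0]
# Hypothetical weights, standing in for values derived from matching confidences.
w = [1.0, 0.8, 1.0, 0.9, 0.3]

x_star = robust_location(y, w)
grad = implicit_grad(y, w, x_star)
print("estimate:", x_star)
print("d(estimate)/d(weights):", grad)
```

The gradient depends only on the converged solution, so its cost and accuracy are independent of how many solver iterations were run. This is the property the abstract refers to when it mentions avoiding backpropagation through time.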

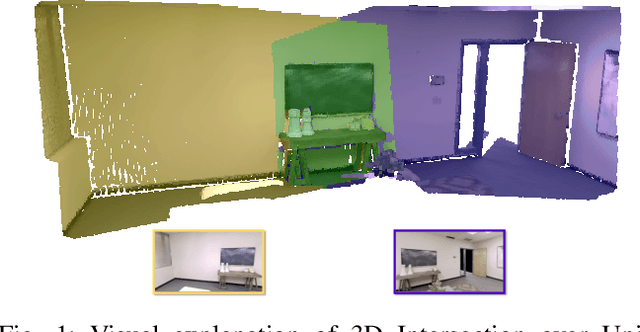

GSLoc: Visual Localization with 3D Gaussian Splatting

Oct 08, 2024

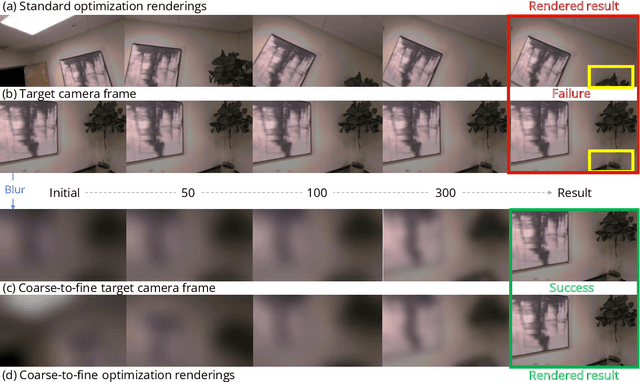

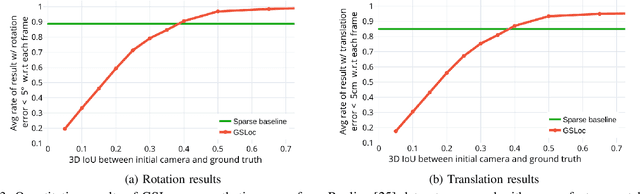

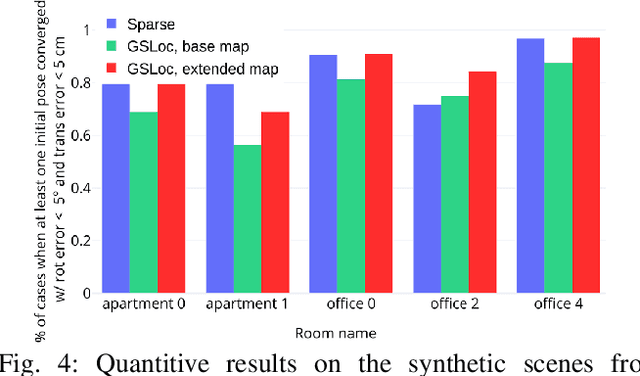

Abstract: We present GSLoc, a new visual localization method that performs dense camera alignment using 3D Gaussian Splatting (3DGS) as the map representation of the scene. GSLoc backpropagates pose gradients through the rendering pipeline to align the rendered and target images, and adopts a coarse-to-fine strategy that uses blurring kernels to mitigate the non-convexity of the problem and improve convergence. The results show that our approach succeeds at visual localization under challenging conditions, namely relatively small overlap between the initial and target frames in textureless environments, where state-of-the-art neural sparse methods give inferior results. Using realistic rendering, a byproduct of the 3DGS map representation, we show how to improve localization results by mixing observed and virtual reference keyframes when solving the image retrieval problem. We evaluate our method on both synthetic and real-world data and discuss its advantages and application potential.
