Vitaly Schetinin

A Bayesian Methodology for Estimating Uncertainty of Decisions in Safety-Critical Systems

Dec 01, 2010

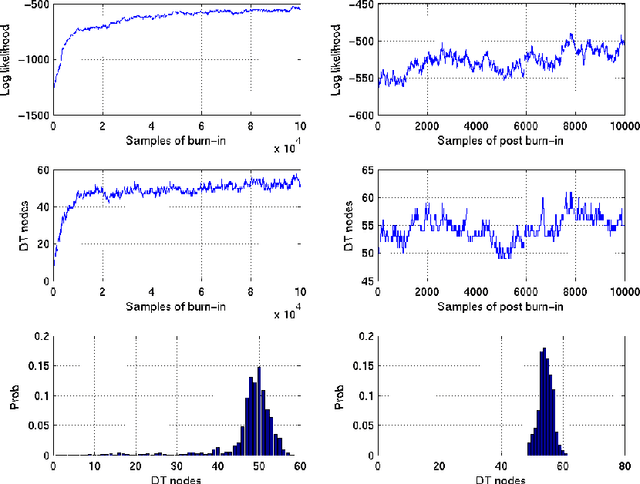

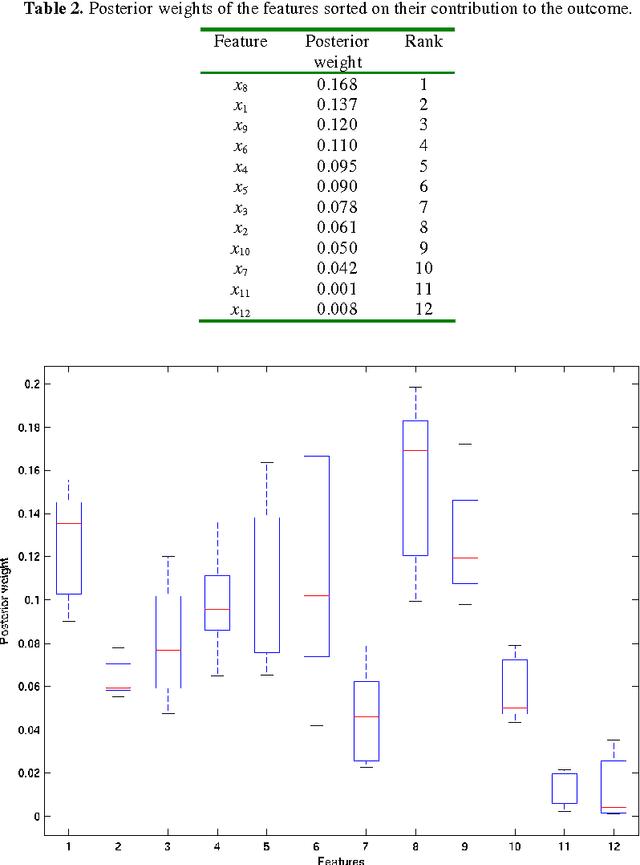

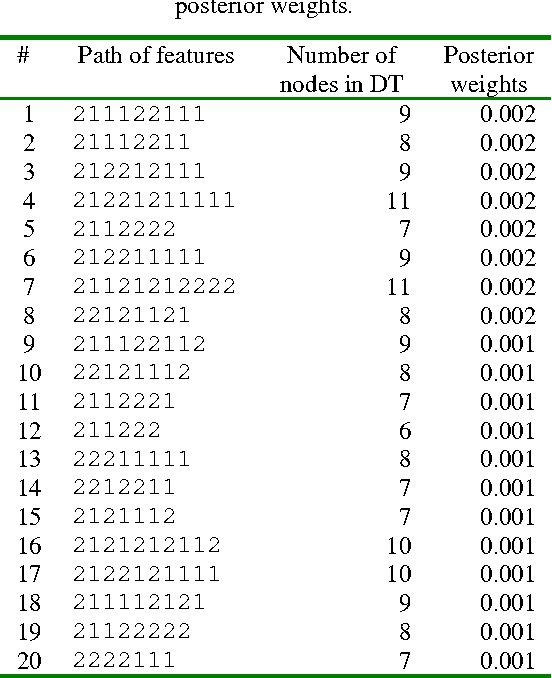

Abstract: Uncertainty of decisions in safety-critical engineering applications can be estimated on the basis of the Bayesian Markov Chain Monte Carlo (MCMC) technique of averaging over decision models. The use of decision tree (DT) models helps experts interpret causal relations and find factors of the uncertainty. Bayesian averaging also allows experts to estimate the uncertainty accurately when a priori information on the favored structure of DTs is available. An expert can then select a single DT model, typically the Maximum a Posteriori model, for interpretation purposes. Unfortunately, a priori information on the favored structure of DTs is not always available. For this reason, we suggest a new prior on DTs for the Bayesian MCMC technique. We also suggest a new procedure for selecting a single DT and describe an application scenario. In our experiments on the Short-Term Conflict Alert data, our technique outperforms the existing Bayesian techniques in predictive accuracy of the selected single DTs.

An Evolutionary-Based Approach to Learning Multiple Decision Models from Underrepresented Data

May 24, 2008

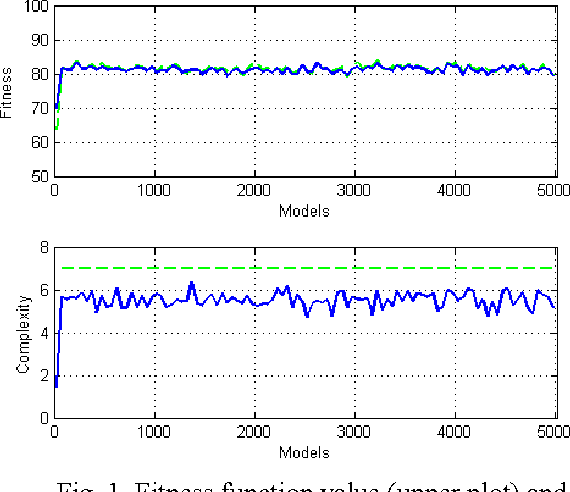

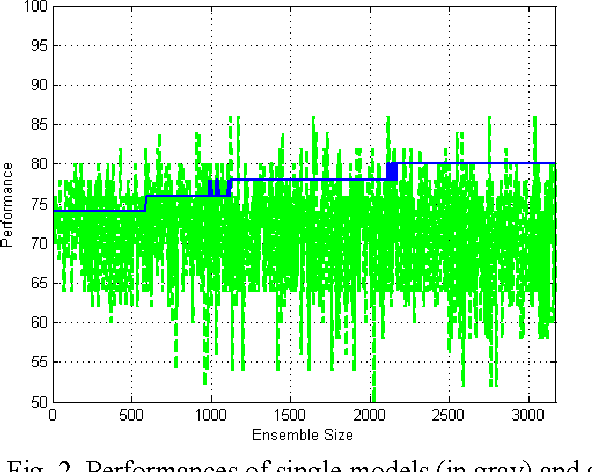

Abstract: The use of multiple Decision Models (DMs) enhances the accuracy of decisions and at the same time allows users to evaluate the confidence in decision making. In this paper we explore the ability of multiple DMs to learn from a small amount of verified data. This becomes important when data samples are difficult to collect and verify. We propose an evolutionary-based approach to solving this problem. The proposed technique is examined on a few clinical problems represented by a small amount of data.

The Combined Technique for Detection of Artifacts in Clinical Electroencephalograms of Sleeping Newborns

Apr 14, 2005

Abstract: In this paper we describe a new method combining the polynomial neural network and decision tree techniques in order to derive comprehensible classification rules from clinical electroencephalograms (EEGs) recorded from sleeping newborns. These EEGs are heavily corrupted by cardiac, eye movement, muscle and noise artifacts, and as a consequence some EEG features are irrelevant to classification problems. Combining the polynomial network and decision tree techniques, we discover comprehensible classification rules whilst also attempting to keep their classification error down. This technique is shown to outperform a number of commonly used machine learning techniques applied to automatically recognize artifacts in the sleep EEGs.

A Neural-Network Technique to Learn Concepts from Electroencephalograms

Apr 14, 2005

Abstract: A new technique developed to learn multi-class concepts from clinical electroencephalograms is presented. A desired concept is represented as a neuronal computational model consisting of input, hidden, and output neurons. In this model the hidden neurons learn independently to classify the electroencephalogram segments represented by spectral and statistical features. This technique has been applied to the electroencephalogram data recorded from 65 sleeping healthy newborns in order to learn a brain maturation concept of newborns aged between 35 and 51 weeks. From these data, 39399 and 19670 segments have been used for learning and testing the concept, respectively. As a result, the concept has correctly classified 80.1% of the testing segments or 87.7% of the 65 records.

An Evolving Cascade Neural Network Technique for Cleaning Sleep Electroencephalograms

Apr 14, 2005

Abstract: Evolving Cascade Neural Networks (ECNNs) and a new training algorithm capable of selecting informative features are described. The ECNN initially learns with one input node and then evolves by adding new inputs as well as new hidden neurons. The resultant ECNN has a near minimal number of hidden neurons and inputs. The algorithm is successfully used for training ECNN to recognise artefacts in sleep electroencephalograms (EEGs) which were visually labelled by EEG-viewers. In our experiments, the ECNN outperforms the standard neural-network as well as evolutionary techniques.

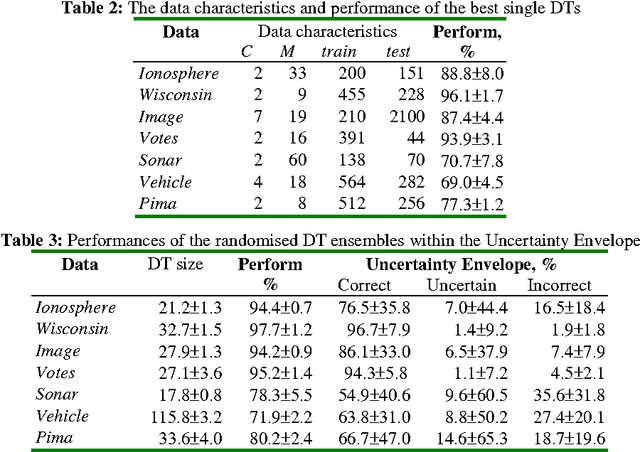

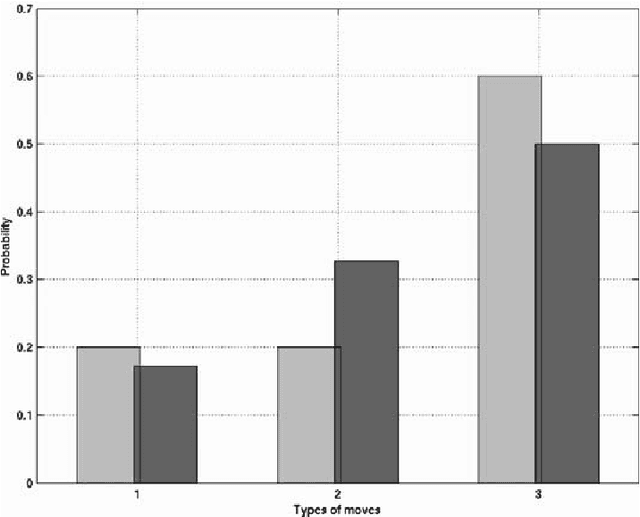

Comparison of the Bayesian and Randomised Decision Tree Ensembles within an Uncertainty Envelope Technique

Apr 14, 2005

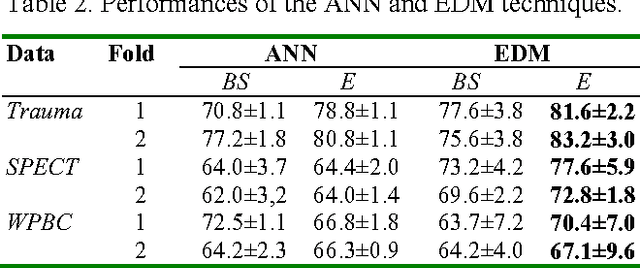

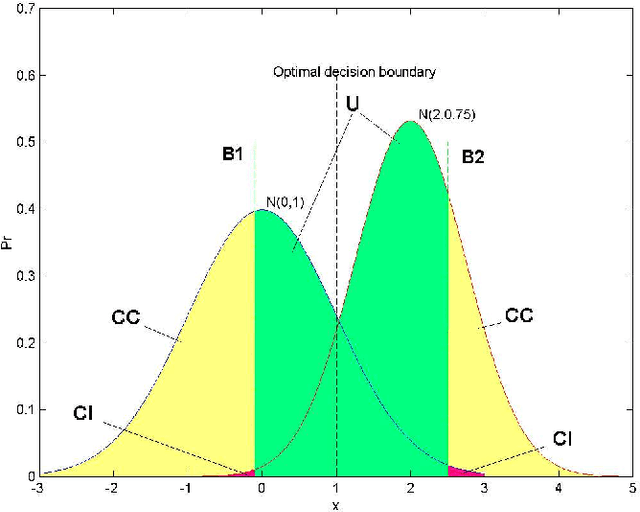

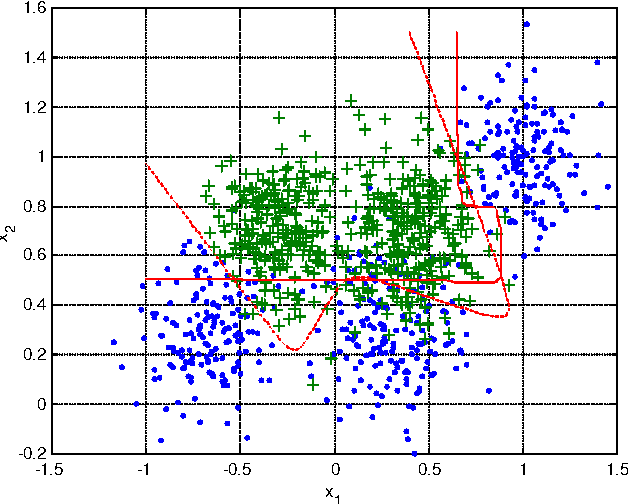

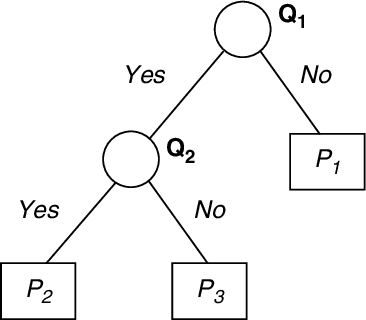

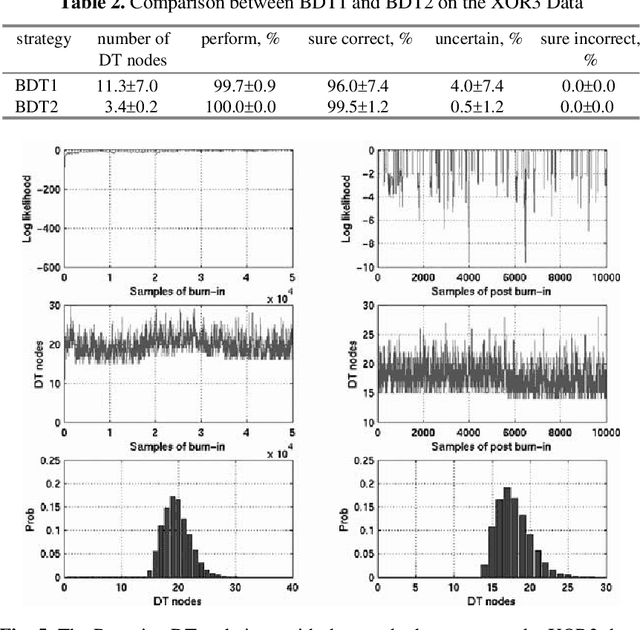

Abstract: Multiple Classifier Systems (MCSs) allow evaluation of the uncertainty of classification outcomes, which is of crucial importance for safety-critical applications. The uncertainty of classification is determined by a trade-off between the amount of data available for training, the classifier diversity and the required performance. The interpretability of MCSs can also give useful information for experts responsible for making reliable classifications. For this reason Decision Trees (DTs) seem to be attractive classification models for experts. The required diversity of MCSs exploiting such classification models can be achieved by using two techniques: Bayesian model averaging and the randomised DT ensemble. Both techniques have shown promising results when applied to real-world problems. In this paper we experimentally compare the classification uncertainty of Bayesian model averaging with a restarting strategy and the randomised DT ensemble on a synthetic dataset and some domain problems commonly used in the machine learning community. To make the Bayesian DT averaging feasible, we use a Markov Chain Monte Carlo technique. The classification uncertainty is evaluated within an Uncertainty Envelope technique dealing with the class posterior distribution and a given confidence probability. Exploring a full posterior distribution, this technique produces realistic estimates which can be easily interpreted in statistical terms. In our experiments we found that the Bayesian DTs are superior to the randomised DT ensembles within the Uncertainty Envelope technique.

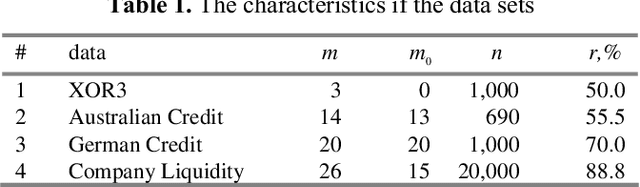

Estimating Classification Uncertainty of Bayesian Decision Tree Technique on Financial Data

Apr 14, 2005

Abstract: Bayesian averaging over classification models allows the uncertainty of classification outcomes to be evaluated, which is of crucial importance for making reliable decisions in applications such as finance, in which risks have to be estimated. The uncertainty of classification is determined by a trade-off between the amount of data available for training, the diversity of a classifier ensemble and the required performance. The interpretability of classification models can also give useful information for experts responsible for making reliable classifications. For this reason Decision Trees (DTs) seem to be attractive classification models. The required diversity of the DT ensemble can be achieved by using Bayesian model averaging over all possible DTs. In practice, the Bayesian approach can be implemented on the basis of a Markov Chain Monte Carlo (MCMC) technique of random sampling from the posterior distribution. For sampling large DTs, the MCMC method is extended by the Reversible Jump technique, which allows DTs to be induced under given priors. When prior information on the DT size is unavailable, the sweeping technique, which defines the prior implicitly, shows better performance. In this chapter we explore the classification uncertainty of the Bayesian MCMC techniques on some datasets from the StatLog Repository and real financial data. The classification uncertainty is compared within an Uncertainty Envelope technique dealing with the class posterior distribution and a given confidence probability. This technique provides realistic estimates of the classification uncertainty which can be easily interpreted in statistical terms with the aim of risk evaluation.

Neural-Network Techniques for Visual Mining Clinical Electroencephalograms

Apr 14, 2005

Abstract: In this chapter we describe new neural-network techniques developed for visual mining of clinical electroencephalograms (EEGs), the weak electrical potentials produced by brain activity. These techniques exploit fruitful ideas of the Group Method of Data Handling (GMDH). Section 2 briefly describes the standard neural-network techniques which are able to learn well-suited classification models from data represented by relevant features. Section 3 introduces an evolving cascade neural network technique which adds new input nodes as well as new neurons to the network while the training error decreases. This algorithm is applied to recognize artifacts in the clinical EEGs. Section 4 presents the GMDH-type polynomial networks learnt from data. We applied this technique to distinguish the EEGs recorded from an Alzheimer's patient and a healthy patient as well as to recognize EEG artifacts. Section 5 describes the new neural-network technique developed to induce multi-class concepts from data. We used this technique for inducing a 16-class concept from the large-scale clinical EEG data. Finally we discuss perspectives of applying the neural-network techniques to clinical EEGs.

A Neural Network Decision Tree for Learning Concepts from EEG Data

Apr 13, 2005

Abstract: To learn multi-class concepts from electroencephalogram (EEG) data, we developed a neural network decision tree (DT) that performs linear tests, together with a new training algorithm. We found that the known methods fail to induce classification models when the data are represented by features, some of which are irrelevant, and the classes heavily overlap. To train the DT, our algorithm exploits a bottom-up search for the features that provide the best classification accuracy of the linear tests. We applied the developed algorithm to induce the DT from a large EEG dataset recorded from 65 patients belonging to 16 age groups. In these recordings each EEG segment was represented by 72 calculated features. The DT correctly classified 80.8% of the training and 80.1% of the testing examples. Correspondingly, it correctly classified 89.2% and 87.7% of the EEG recordings.

Polynomial Neural Networks Learnt to Classify EEG Signals

Apr 13, 2005

Abstract: A neural-network-based technique is presented which is able to extract polynomial classification rules from labeled electroencephalogram (EEG) signals. To represent the classification rules in an analytical form, we use polynomial neural networks trained by a modified Group Method of Data Handling (GMDH). The classification rules were extracted from clinical EEG data that were recorded from an Alzheimer's patient and from patients at risk of sudden death. A third dataset consists of EEG recordings that include normal and artifact segments. These EEG data were visually identified by medical experts. The extracted polynomial rules, verified on the testing EEG data, correctly classify 72% of the risk group patients and 96.5% of the segments. These rules perform slightly better than standard feedforward neural networks.
