Vineet R. Kamat

Advancing Improvisation in Human-Robot Construction Collaboration: Taxonomy and Research Roadmap

Jan 23, 2026

Abstract: The construction industry faces productivity stagnation, skilled labor shortages, and safety concerns. While robotic automation offers solutions, construction robots struggle to adapt to unstructured, dynamic sites. Central to this is improvisation, adapting to unexpected situations through creative problem-solving, which remains predominantly human. In construction's unpredictable environments, collaborative human-robot improvisation is essential for workflow continuity. This research develops a six-level taxonomy classifying human-robot collaboration (HRC) based on improvisation capabilities. Through systematic review of 214 articles (2010-2025), we categorize construction robotics across: Manual Work (Level 0), Human-Controlled Execution (Level 1), Adaptive Manipulation (Level 2), Imitation Learning (Level 3), Human-in-Loop BIM Workflow (Level 4), Cloud-Based Knowledge Integration (Level 5), and True Collaborative Improvisation (Level 6). Analysis reveals current research concentrates at lower levels, with critical gaps in experiential learning and limited progression toward collaborative improvisation. A five-dimensional radar framework illustrates progressive evolution of Planning, Cognitive Role, Physical Execution, Learning Capability, and Improvisation, demonstrating how complementary human-robot capabilities create team performance exceeding individual contributions. The research identifies three fundamental barriers: technical limitations in grounding and dialogic reasoning, conceptual gaps between human improvisation and robotics research, and methodological challenges. We recommend future research emphasizing improved human-robot communication via Augmented/Virtual Reality interfaces, large language model integration, and cloud-based knowledge systems to advance toward true collaborative improvisation.

Siamese Network with Dual Attention for EEG-Driven Social Learning: Bridging the Human-Robot Gap in Long-Tail Autonomous Driving

Apr 14, 2025

Abstract: Robots with wheeled, quadrupedal, or humanoid forms are increasingly integrated into built environments. However, unlike human social learning, they lack a critical pathway for intrinsic cognitive development, namely, learning from human feedback during interaction. To understand ubiquitous human observation, supervision, and shared control in dynamic and uncertain environments, this study presents a brain-computer interface (BCI) framework that enables classification of electroencephalogram (EEG) signals to detect cognitively demanding and safety-critical events. As a timely and motivating co-robotic engineering application, we simulate a human-in-the-loop scenario to flag risky events in semi-autonomous robotic driving, representative of long-tail cases that pose persistent bottlenecks to the safety performance of smart mobility systems and robotic vehicles. Drawing on recent advances in few-shot learning, we propose a dual-attention Siamese convolutional network paired with a Dynamic Time Warping Barycenter Averaging approach to generate robust EEG-encoded signal representations. Inverse source localization reveals activation in Brodmann areas 4 and 9, indicating perception-action coupling during task-relevant mental imagery. The model achieves 80% classification accuracy under data-scarce conditions and exhibits a nearly 100% increase in the utility of salient features compared to state-of-the-art methods, as measured through integrated gradient attribution. Beyond performance, this study contributes to our understanding of the cognitive architecture required for BCI agents, particularly the role of attention and memory mechanisms, in categorizing diverse mental states and supporting both inter- and intra-subject adaptation. Overall, this research advances the development of cognitive robotics and socially guided learning for service robots in complex built environments.
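
The barycenter averaging step named in the abstract builds on dynamic time warping (DTW) alignment. As a rough illustration of that building block only, the sketch below computes the classic DTW distance between two one-dimensional sequences. It is not the authors' implementation: the function name `dtw_distance`, the absolute-difference cost, and the toy inputs are assumptions made for illustration.

```python
from math import inf


def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences.

    Uses an absolute-difference local cost and the standard three-way
    recurrence (insertion, deletion, match). Hypothetical helper, not
    taken from the paper.
    """
    n, m = len(a), len(b)
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a[i-1] repeats against b[j-1]
                cost[i][j - 1],      # b[j-1] repeats against a[i-1]
                cost[i - 1][j - 1],  # both sequences advance
            )
    return cost[n][m]


# A time-stretched copy of the same shape aligns at zero cost.
stretched = dtw_distance([1, 2, 3], [1, 2, 2, 3])
# Two constant signals offset by 1 accumulate one unit per aligned pair.
offset = dtw_distance([0, 0], [1, 1])
```

Because DTW tolerates local stretching in time, two signals with the same shape but different pacing can be compared directly, which is the property that makes it a plausible basis for averaging variable-timing signals.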

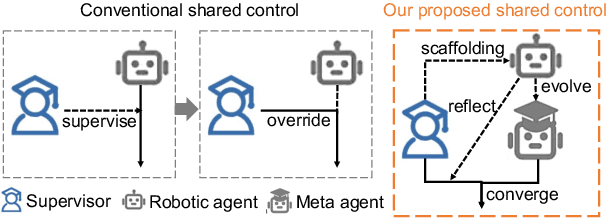

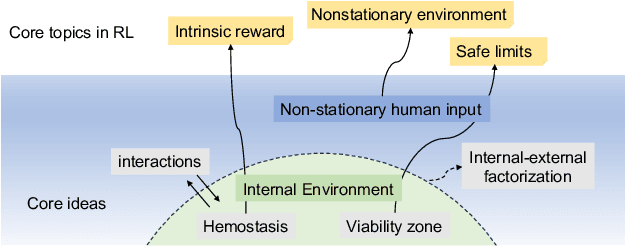

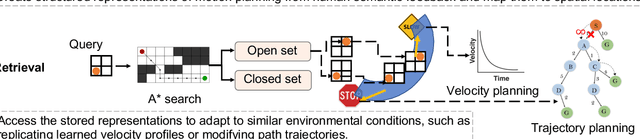

Interoceptive Robots for Convergent Shared Control in Collaborative Construction Work

Jan 16, 2025

Abstract: Building autonomous mobile robots (AMRs) with optimized efficiency and adaptive capabilities, able to respond to changing task demands and dynamic environments, is a strongly desired goal for advancing construction robotics. Such robots can play a critical role in enabling automation, reducing operational carbon footprints, and supporting modular construction processes. Inspired by the adaptive autonomy of living organisms, we introduce interoception, which centers on the robot's internal state representation, as a foundation for developing self-reflection and conscious learning to enable continual learning and adaptability in robotic agents. In this paper, we factorize internal state variables and mathematical properties as "cognitive dissonance" in shared control paradigms, where human interventions occasionally occur. We offer a new perspective on how interoception can help build adaptive motion planning in AMRs by integrating the legacy of heuristic costs from grid/graph-based algorithms with recent advances in neuroscience and reinforcement learning. Declarative and procedural knowledge extracted from human semantic inputs is encoded into a hypergraph model that overlaps with the spatial configuration of onsite layout for path planning. In addition, we design a velocity-replay module using an encoder-decoder architecture with few-shot learning to enable robots to replicate velocity profiles in contextualized scenarios for multi-robot synchronization and handover collaboration. These "cached" knowledge representations are demonstrated in simulated environments for multi-robot motion planning and stacking tasks. The insights from this study pave the way toward artificial general intelligence in AMRs, fostering their progression from complexity to competence in construction automation.

Towards Probabilistic Inference of Human Motor Intentions by Assistive Mobile Robots Controlled via a Brain-Computer Interface

Jan 09, 2025

Abstract: Assistive mobile robots are a transformative technology that helps persons with disabilities regain the ability to move freely. Although autonomous wheelchairs significantly reduce user effort, they still require human input to allow users to maintain control and adapt to changing environments. A Brain-Computer Interface (BCI) stands out as a highly user-friendly option that does not require physical movement. Current BCI systems can understand whether users want to accelerate or decelerate, but they implement these changes in discrete speed steps rather than allowing for smooth, continuous velocity adjustments. This limitation prevents the systems from mimicking the natural, fluid speed changes seen in human self-paced motion. The authors aim to address this limitation by redesigning the perception-action cycle in a BCI-controlled robotic system: improving how the robotic agent interprets the user's motion intentions (world state) and implementing these actions in a way that better reflects natural physical properties of motion, such as inertia and damping. This paper focuses on the perception aspect. We asked and answered a normative question: "what computation should the robotic agent carry out to optimally perceive incomplete or noisy sensory observations?" Empirical EEG data were collected, and probabilistic representations that served as world state distributions were learned and evaluated in a Generative Adversarial Network framework. A ROS framework was established and connected to a Gazebo environment containing a digital twin of an indoor space and a virtual model of a robotic wheelchair. Signal processing and statistical analyses were implemented to identify the most discriminative features in the spatial-spectral-temporal dimensions, which were then used to construct the world model for the robotic agent to interpret user motion intentions as a Bayesian observer.
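
To make the "Bayesian observer" idea concrete, the following minimal sketch applies Bayes' rule to a single noisy scalar observation. It is an illustrative assumption, not the paper's method: the paper learns its world state distributions from EEG data in a GAN framework, whereas here the three intention labels, the uniform prior, and the hand-set Gaussian likelihood parameters are all hypothetical.

```python
from math import exp, pi, sqrt


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution N(mean, std^2) at x."""
    return exp(-0.5 * ((x - mean) / std) ** 2) / (std * sqrt(2 * pi))


def posterior(observation, prior, likelihood_params):
    """Bayes' rule over discrete intentions given one scalar observation.

    prior: {intention: probability}
    likelihood_params: {intention: (mean, std)} of the observed feature
    """
    unnormalized = {
        state: prior[state] * gaussian_pdf(observation, *likelihood_params[state])
        for state in prior
    }
    total = sum(unnormalized.values())
    return {state: p / total for state, p in unnormalized.items()}


# Hypothetical values chosen for illustration, not learned from EEG data.
PRIOR = {"accelerate": 1 / 3, "maintain": 1 / 3, "decelerate": 1 / 3}
LIKELIHOOD = {
    "accelerate": (1.0, 0.5),
    "maintain": (0.0, 0.5),
    "decelerate": (-1.0, 0.5),
}

belief = posterior(0.8, PRIOR, LIKELIHOOD)
most_likely = max(belief, key=belief.get)
```

The output is a full distribution rather than a single label, so a downstream controller could in principle scale a velocity change by the strength of the belief instead of switching between discrete speed steps.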

Integrating Large Language Models with Multimodal Virtual Reality Interfaces to Support Collaborative Human-Robot Construction Work

Apr 04, 2024

Abstract: In the construction industry, where work environments are complex, unstructured and often dangerous, the implementation of Human-Robot Collaboration (HRC) is emerging as a promising advancement. This underlines the critical need for intuitive communication interfaces that enable construction workers to collaborate seamlessly with robotic assistants. This study introduces a conversational Virtual Reality (VR) interface integrating multimodal interaction to enhance intuitive communication between construction workers and robots. By integrating voice and controller inputs with the Robot Operating System (ROS), Building Information Modeling (BIM), and a game engine featuring a chat interface powered by a Large Language Model (LLM), the proposed system enables intuitive and precise interaction within a VR setting. Evaluated by twelve construction workers through a drywall installation case study, the proposed system demonstrated its low workload and high usability with succinct command inputs. The proposed multimodal interaction system suggests that such technological integration can substantially advance the integration of robotic assistants in the construction industry.

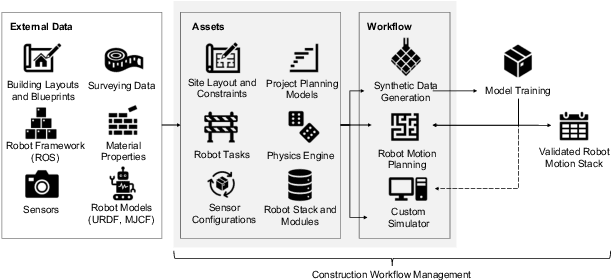

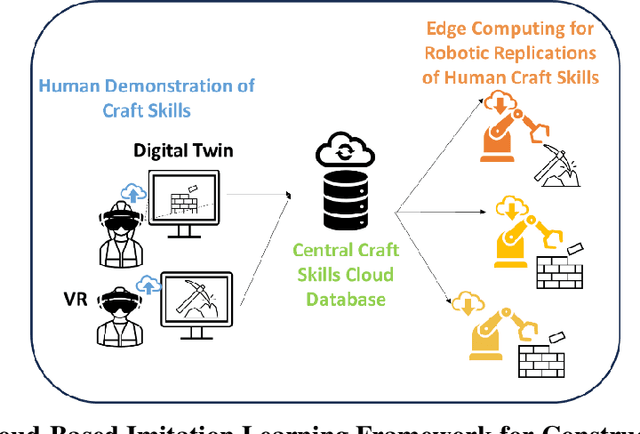

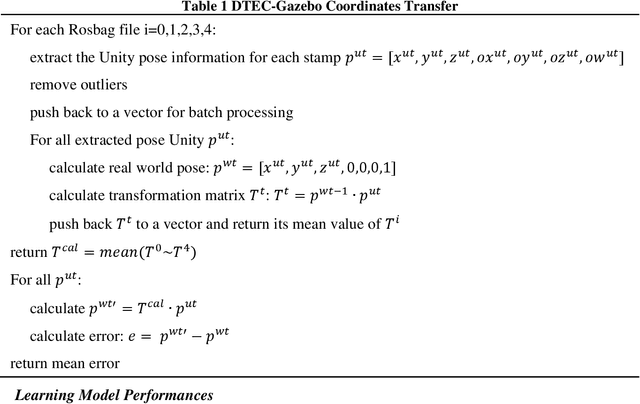

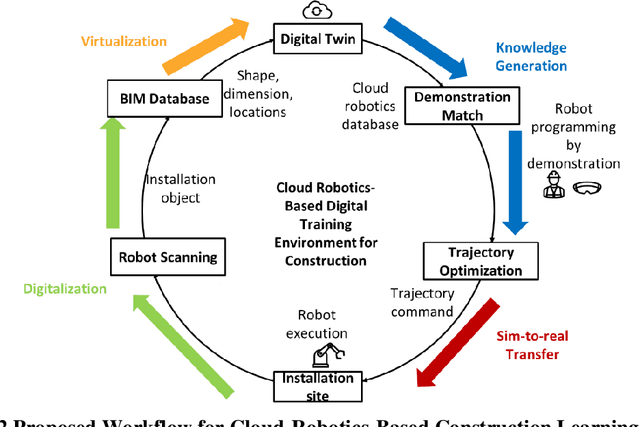

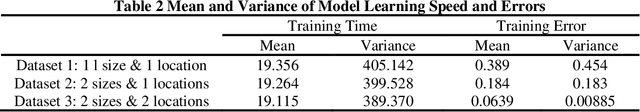

Cloud-Based Hierarchical Imitation Learning for Scalable Transfer of Construction Skills from Human Workers to Assisting Robots

Sep 20, 2023

Abstract: Assigning repetitive and physically demanding construction tasks to robots can reduce human workers' exposure to occupational injuries. Transferring necessary dexterous and adaptive artisanal construction craft skills from workers to robots is crucial for the successful delegation of construction tasks and achieving high-quality robot-constructed work. Predefined motion planning scripts tend to generate rigid and collision-prone robotic behaviors in unstructured construction site environments. In contrast, Imitation Learning (IL) offers a more robust and flexible skill transfer scheme. However, the majority of IL algorithms rely on human workers to repeatedly demonstrate task performance at full scale, which can be counterproductive and infeasible in the case of construction work. To address this concern, this paper proposes an immersive, cloud robotics-based virtual demonstration framework that serves two primary purposes. First, it digitalizes the demonstration process, eliminating the need for repetitive physical manipulation of heavy construction objects. Second, it employs a federated collection of reusable demonstrations that are transferable for similar tasks in the future and can thus reduce the requirement for repetitive illustration of tasks by human agents. Additionally, to enhance the trustworthiness, explainability, and ethical soundness of the robot training, this framework utilizes a Hierarchical Imitation Learning (HIL) model to decompose human manipulation skills into sequential and reactive sub-skills. These two layers of skills are represented by deep generative models, enabling adaptive control of robot actions. By delegating the physical strains of construction work to human-trained robots, this framework promotes the inclusion of workers with diverse physical capabilities and educational backgrounds within the construction industry.

Natural Language Instructions for Intuitive Human Interaction with Robotic Assistants in Field Construction Work

Jul 11, 2023

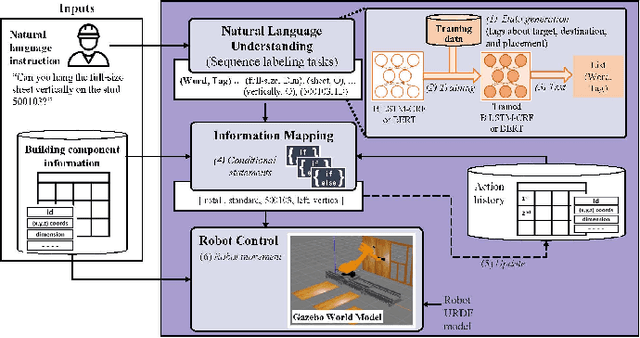

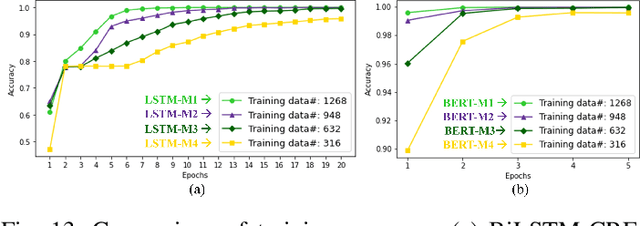

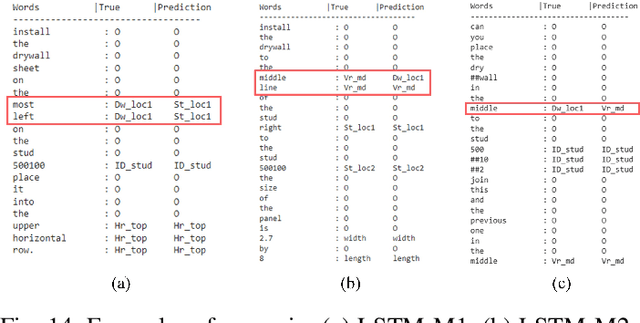

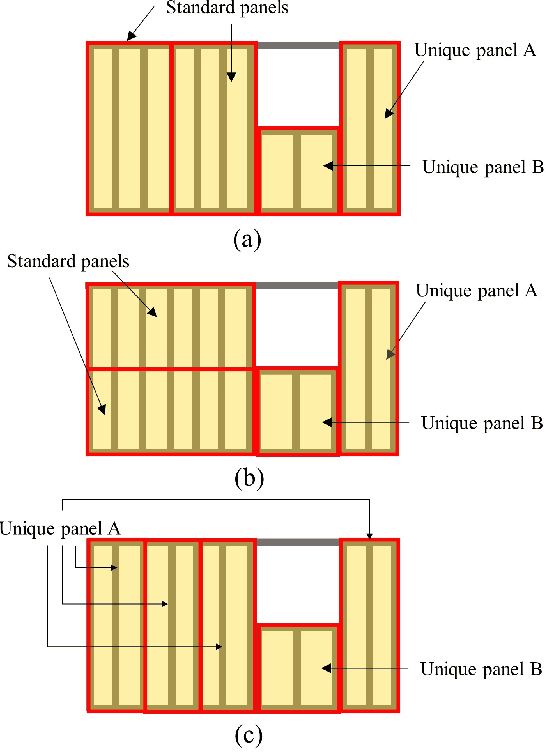

Abstract: The introduction of robots is widely considered to have significant potential to alleviate the worker shortages and stagnant productivity that afflict the construction industry. However, it is challenging to use fully automated robots in complex and unstructured construction sites. Human-Robot Collaboration (HRC) has shown promise in combining human workers' flexibility and robot assistants' physical abilities to jointly address the uncertainties inherent in construction work. When introducing HRC in construction, it is critical to recognize the importance of teamwork and supervision in field construction and establish a natural and intuitive communication system for the human workers and robotic assistants. Natural language-based interaction can enable intuitive and familiar communication with robots for human workers who are non-experts in robot programming. However, limited research has been conducted on this topic in construction. This paper proposes a framework to allow human workers to interact with construction robots based on natural language instructions. The proposed method consists of three stages: Natural Language Understanding (NLU), Information Mapping (IM), and Robot Control (RC). Natural language instructions are input to a language model to predict a tag for each word in the NLU module. The IM module uses the result of the NLU module and building component information to generate the final instructional output essential for a robot to acknowledge and perform the construction task. A case study for drywall installation is conducted to evaluate the proposed approach. The obtained results highlight the potential of using natural language-based interaction to replicate the communication that occurs between human workers within the context of human-robot teams.
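
The three-stage flow described above (NLU, then IM, then RC) can be sketched as a small pipeline. This is a toy illustration under stated assumptions, not the paper's system: a keyword lookup stands in for the trained language model, and the tag names, the `COMPONENTS` table, the component IDs, and all function names are hypothetical.

```python
# Hypothetical building component information (a stand-in for BIM data).
COMPONENTS = {
    "p1": {"type": "panel", "size_m": (1.2, 2.4)},
    "w3": {"type": "wall", "origin_m": (4.0, 0.0, 0.0)},
}
ACTIONS = {"install", "place"}


def understand(instruction):
    """NLU stand-in: predict one tag per word.

    A keyword lookup replaces the language model here; "O" marks words
    that carry no slot information.
    """
    tagged = []
    for word in instruction.lower().split():
        if word in ACTIONS:
            tag = "ACTION"
        elif word in COMPONENTS:
            tag = COMPONENTS[word]["type"].upper()  # "PANEL" or "WALL"
        else:
            tag = "O"
        tagged.append((word, tag))
    return tagged


def map_information(tagged):
    """IM: combine tagged words with component data into a robot command."""
    slots = {tag: word for word, tag in tagged if tag != "O"}
    missing = {"ACTION", "PANEL", "WALL"} - set(slots)
    if missing:
        raise ValueError(f"instruction is missing: {sorted(missing)}")
    return {
        "action": slots["ACTION"],
        "panel": COMPONENTS[slots["PANEL"]],
        "target_origin_m": COMPONENTS[slots["WALL"]]["origin_m"],
    }


def control_robot(command):
    """RC stand-in: return the message a real controller would act on."""
    return f"{command['action']} panel at {command['target_origin_m']}"


tagged = understand("Install panel P1 on wall W3")
command = map_information(tagged)
message = control_robot(command)
```

Keeping the stages separate means an incomplete instruction fails at the mapping step with an explicit error, before anything is sent to the robot.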

Enabling BIM-Driven Robotic Construction Workflows with Closed-Loop Digital Twins

Jun 16, 2023

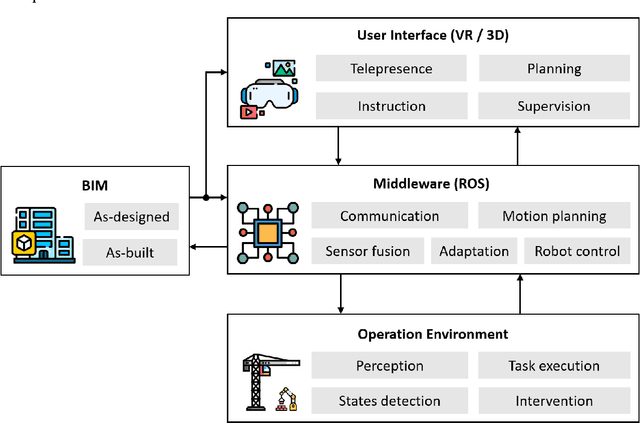

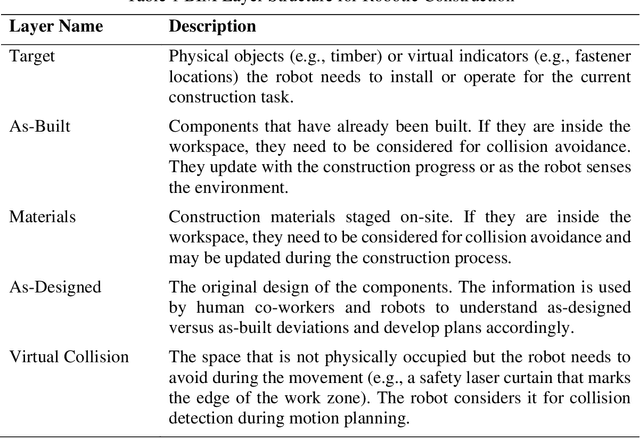

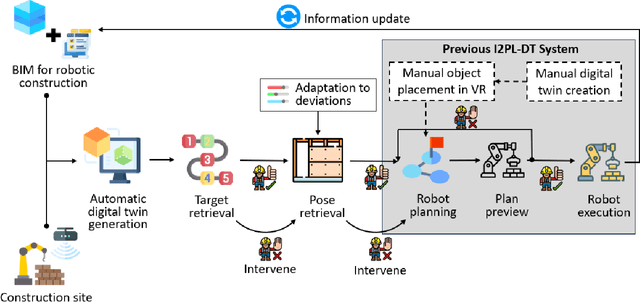

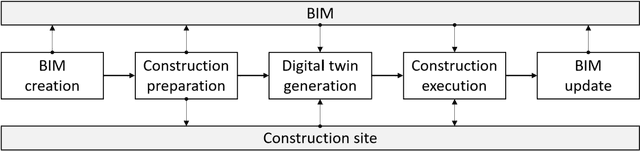

Abstract: Robots can greatly alleviate physical demands on construction workers while enhancing both the productivity and safety of construction projects. Leveraging a Building Information Model (BIM) offers a natural and promising approach to drive a robotic construction workflow. However, because of uncertainties inherent in construction sites, such as discrepancies between the designed and as-built workpieces, robots cannot rely solely on the BIM to guide field construction work. Human workers are adept at improvising alternative plans with their creativity and experience and thus can assist robots in overcoming uncertainties and performing construction work successfully. This research introduces an interactive closed-loop digital twin system that integrates a BIM into human-robot collaborative construction workflows. The robot is primarily driven by the BIM, but it adaptively adjusts its plan based on actual site conditions while the human co-worker supervises the process. If necessary, the human co-worker intervenes in the robot's plan by changing the task sequence or target position, requesting a new motion plan, or modifying the construction component(s)/material(s) to help the robot navigate uncertainties. To investigate the physical deployment of the system, a drywall installation case study is conducted with an industrial robotic arm in a laboratory. In addition, a block pick-and-place experiment is carried out to evaluate system performance. Integrating the flexibility of human workers and the autonomy and accuracy afforded by the BIM, the system significantly increases the robustness of construction robots in the performance of field construction work.
