Xiaoshan Zhou

Retrieval-augmented in-context learning for multimodal large language models in disease classification

May 04, 2025

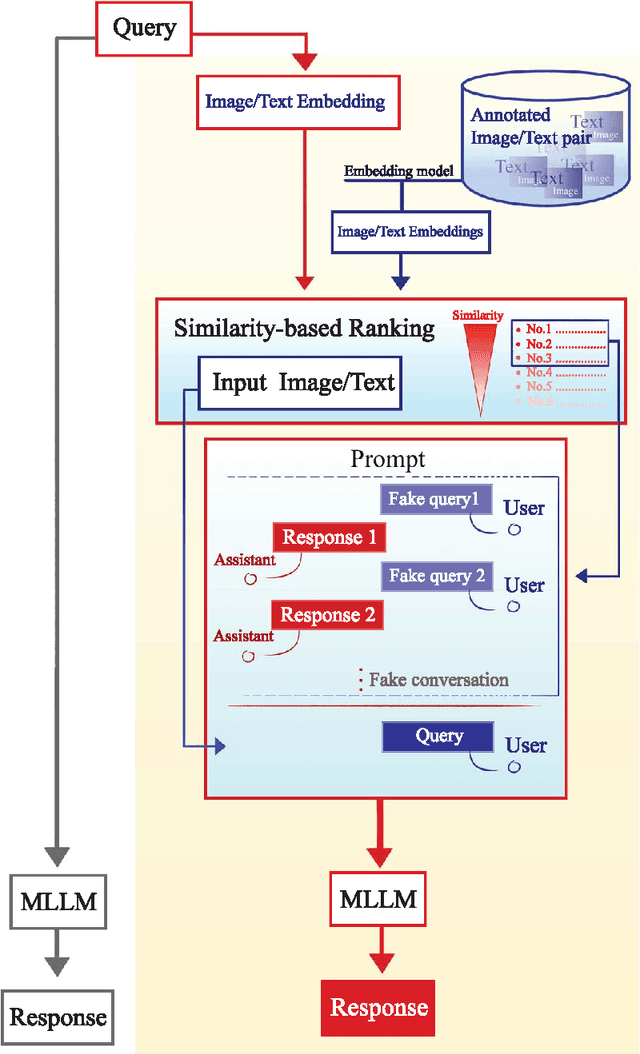

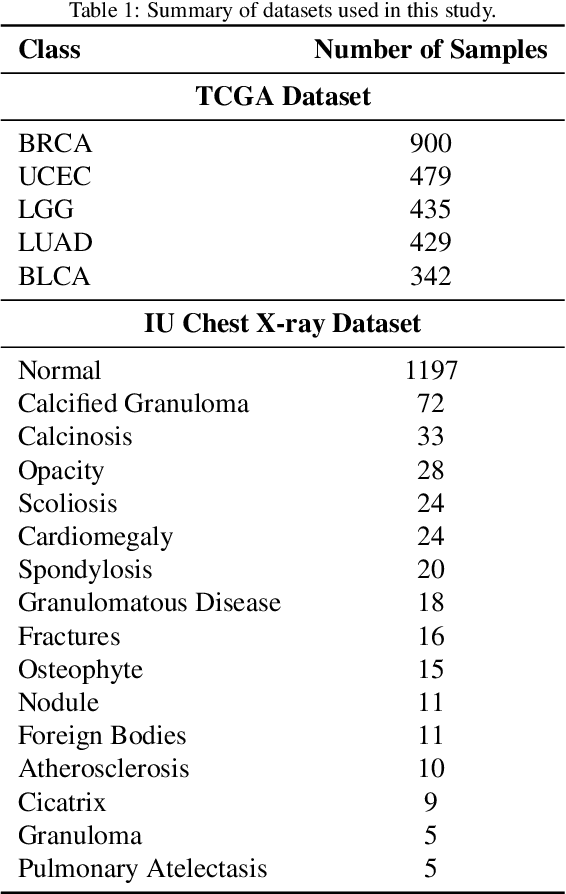

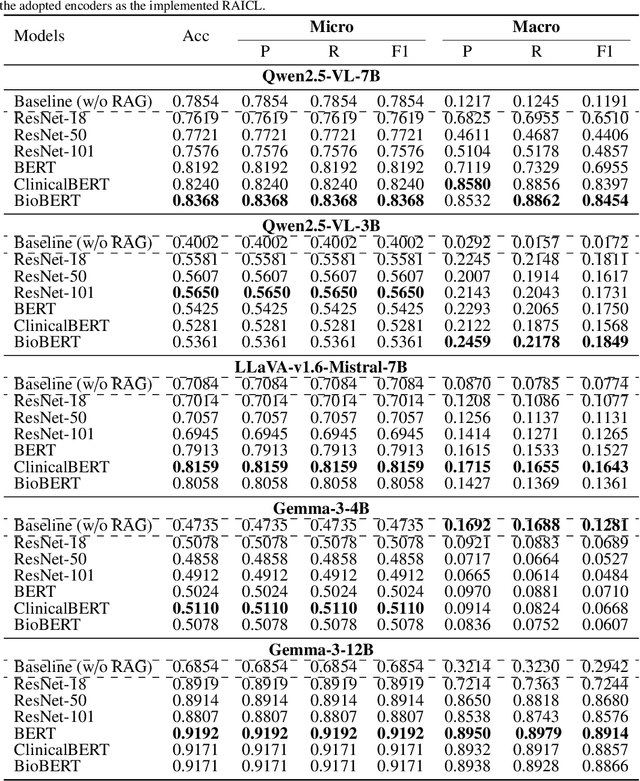

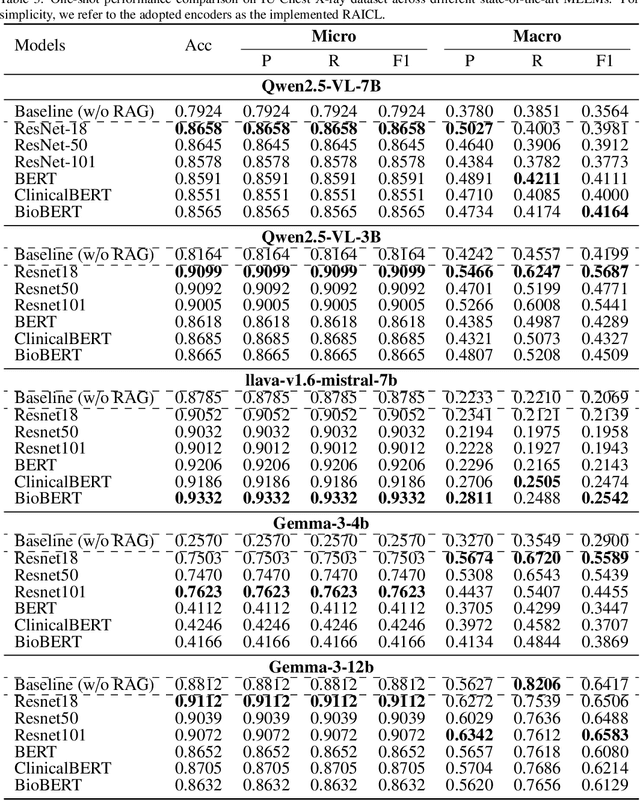

Abstract: Objectives: We aim to dynamically retrieve informative demonstrations, enhancing in-context learning in multimodal large language models (MLLMs) for disease classification. Methods: We propose a Retrieval-Augmented In-Context Learning (RAICL) framework, which integrates retrieval-augmented generation (RAG) and in-context learning (ICL) to adaptively select demonstrations with similar disease patterns, enabling more effective ICL in MLLMs. Specifically, RAICL examines embeddings from diverse encoders, including ResNet, BERT, BioBERT, and ClinicalBERT, to retrieve appropriate demonstrations, and constructs conversational prompts optimized for ICL. We evaluated the framework on two real-world multimodal datasets (TCGA and IU Chest X-ray), assessing its performance across multiple MLLMs (Qwen, LLaVA, Gemma), embedding strategies, similarity metrics, and varying numbers of demonstrations. Results: RAICL consistently improved classification performance. Accuracy increased from 0.7854 to 0.8368 on TCGA and from 0.7924 to 0.8658 on IU Chest X-ray. Multimodal inputs outperformed single-modal ones, with text-only inputs being stronger than images alone. Which embedding model performed best depended on the richness of the information in each modality. Few-shot experiments showed that increasing the number of retrieved examples further enhanced performance. Across different similarity metrics, Euclidean distance achieved the highest accuracy, while cosine similarity yielded better macro-F1 scores. RAICL demonstrated consistent improvements across various MLLMs, confirming its robustness and versatility. Conclusions: RAICL provides an efficient and scalable approach to enhance in-context learning in MLLMs for multimodal disease classification.
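The retrieval step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the toy two-dimensional embeddings, the report texts, and the prompt wording are all assumptions made for illustration. In practice the embeddings would come from an encoder such as ResNet or ClinicalBERT. The sketch ranks a pool of labeled cases by cosine similarity or Euclidean distance to the query, then lays out the top-k cases as a conversational few-shot prompt.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def retrieve_demonstrations(query_emb, pool, k=2, metric="cosine"):
    """Return the k pool entries most similar to the query embedding.

    Each pool entry is a dict with hypothetical keys
    'embedding', 'report', and 'label'.
    """
    if metric == "cosine":
        # Higher similarity is better, so sort in descending order.
        ranked = sorted(
            pool,
            key=lambda d: cosine_similarity(query_emb, d["embedding"]),
            reverse=True,
        )
    elif metric == "euclidean":
        # Smaller distance is better, so sort in ascending order.
        ranked = sorted(
            pool,
            key=lambda d: euclidean_distance(query_emb, d["embedding"]),
        )
    else:
        raise ValueError(f"unknown metric: {metric}")
    return ranked[:k]

def build_prompt(demos, query_report):
    """Lay out demonstrations as alternating user/assistant turns,
    followed by the unanswered query (illustrative wording only)."""
    messages = []
    for d in demos:
        messages.append({"role": "user",
                         "content": f"Report: {d['report']}\nDiagnosis?"})
        messages.append({"role": "assistant", "content": d["label"]})
    messages.append({"role": "user",
                     "content": f"Report: {query_report}\nDiagnosis?"})
    return messages

# Toy pool with made-up embeddings and reports.
pool = [
    {"embedding": [1.0, 0.0], "report": "clear lungs", "label": "normal"},
    {"embedding": [0.0, 1.0], "report": "right lower lobe opacity",
     "label": "abnormal"},
    {"embedding": [0.9, 0.1], "report": "no acute findings",
     "label": "normal"},
]
demos = retrieve_demonstrations([0.1, 0.9], pool, k=2, metric="cosine")
messages = build_prompt(demos, "patchy left basilar opacity")
```

The resulting `messages` list follows the common role/content chat layout, so the retrieved cases appear to the model as earlier turns of the same conversation. Switching `metric` to `"euclidean"` changes only the ranking rule, which is the comparison the abstract reports.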

Siamese Network with Dual Attention for EEG-Driven Social Learning: Bridging the Human-Robot Gap in Long-Tail Autonomous Driving

Apr 14, 2025

Abstract: Robots with wheeled, quadrupedal, or humanoid forms are increasingly integrated into built environments. However, unlike human social learning, they lack a critical pathway for intrinsic cognitive development, namely, learning from human feedback during interaction. To understand ubiquitous human observation, supervision, and shared control in dynamic and uncertain environments, this study presents a brain-computer interface (BCI) framework that enables classification of electroencephalogram (EEG) signals to detect cognitively demanding and safety-critical events. As a timely and motivating co-robotic engineering application, we simulate a human-in-the-loop scenario to flag risky events in semi-autonomous robotic driving, representative of long-tail cases that pose persistent bottlenecks to the safety performance of smart mobility systems and robotic vehicles. Drawing on recent advances in few-shot learning, we propose a dual-attention Siamese convolutional network paired with a Dynamic Time Warping Barycenter Averaging approach to generate robust EEG-encoded signal representations. Inverse source localization reveals activation in Brodmann areas 4 and 9, indicating perception-action coupling during task-relevant mental imagery. The model achieves 80% classification accuracy under data-scarce conditions and exhibits a nearly 100% increase in the utility of salient features compared to state-of-the-art methods, as measured through integrated gradient attribution. Beyond performance, this study contributes to our understanding of the cognitive architecture required for BCI agents, particularly the role of attention and memory mechanisms, in categorizing diverse mental states and supporting both inter- and intra-subject adaptation. Overall, this research advances the development of cognitive robotics and socially guided learning for service robots in complex built environments.
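Dynamic Time Warping Barycenter Averaging builds on the dynamic time warping (DTW) alignment cost between two sequences. The sketch below shows only that underlying cost, using the textbook dynamic-programming recurrence on one-dimensional signals. It does not implement the averaging step or the Siamese network, and the function name and absolute-difference local cost are choices made for illustration rather than details from the paper.

```python
def dtw_distance(a, b):
    """Dynamic time warping cost between two 1-D sequences.

    cost[i][j] holds the cheapest cumulative cost of aligning
    the first i samples of a with the first j samples of b.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessors:
            # advance in a only, advance in b only, or advance in both.
            cost[i][j] = local + min(cost[i - 1][j],
                                     cost[i][j - 1],
                                     cost[i - 1][j - 1])
    return cost[n][m]

# A time-stretched copy aligns at zero cost, unlike a pointwise comparison.
stretched = dtw_distance([1, 2, 3], [1, 2, 2, 3])
shifted = dtw_distance([0, 0], [1, 1])
```

Because the warping path may repeat samples, two trials that differ only in timing receive a low cost, which is the property that makes DTW-based averaging attractive for variable-latency signals such as EEG.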

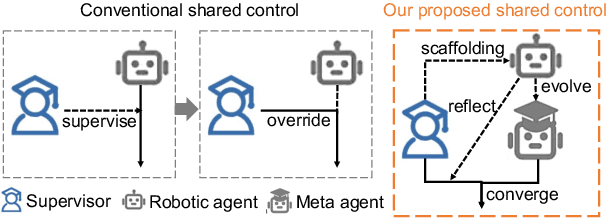

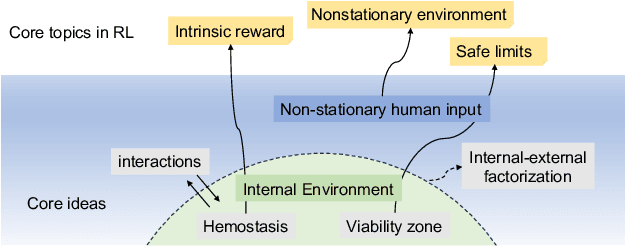

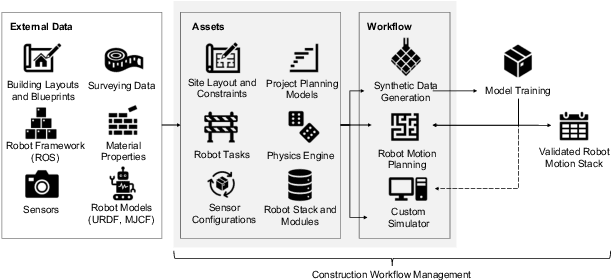

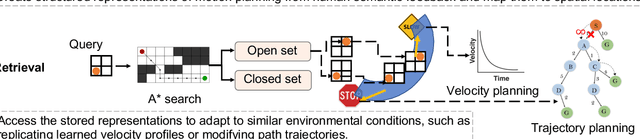

Interoceptive Robots for Convergent Shared Control in Collaborative Construction Work

Jan 16, 2025

Abstract: Building autonomous mobile robots (AMRs) with optimized efficiency and adaptive capabilities, able to respond to changing task demands and dynamic environments, is a strongly desired goal for advancing construction robotics. Such robots can play a critical role in enabling automation, reducing operational carbon footprints, and supporting modular construction processes. Inspired by the adaptive autonomy of living organisms, we introduce interoception, which centers on the robot's internal state representation, as a foundation for developing self-reflection and conscious learning to enable continual learning and adaptability in robotic agents. In this paper, we factorize internal state variables and mathematical properties as "cognitive dissonance" in shared control paradigms, where human interventions occasionally occur. We offer a new perspective on how interoception can help build adaptive motion planning in AMRs by integrating the legacy of heuristic costs from grid/graph-based algorithms with recent advances in neuroscience and reinforcement learning. Declarative and procedural knowledge extracted from human semantic inputs is encoded into a hypergraph model that overlaps with the spatial configuration of the onsite layout for path planning. In addition, we design a velocity-replay module using an encoder-decoder architecture with few-shot learning to enable robots to replicate velocity profiles in contextualized scenarios for multi-robot synchronization and handover collaboration. These "cached" knowledge representations are demonstrated in simulated environments for multi-robot motion planning and stacking tasks. The insights from this study pave the way toward artificial general intelligence in AMRs, fostering their progression from complexity to competence in construction automation.

Towards Probabilistic Inference of Human Motor Intentions by Assistive Mobile Robots Controlled via a Brain-Computer Interface

Jan 09, 2025

Abstract: Assistive mobile robots are a transformative technology that helps persons with disabilities regain the ability to move freely. Although autonomous wheelchairs significantly reduce user effort, they still require human input to allow users to maintain control and adapt to changing environments. A brain-computer interface (BCI) stands out as a highly user-friendly option that does not require physical movement. Current BCI systems can understand whether users want to accelerate or decelerate, but they implement these changes in discrete speed steps rather than allowing for smooth, continuous velocity adjustments. This limitation prevents the systems from mimicking the natural, fluid speed changes seen in human self-paced motion. We aim to address this limitation by redesigning the perception-action cycle in a BCI-controlled robotic system: improving how the robotic agent interprets the user's motion intentions (world state) and implementing these actions in a way that better reflects natural physical properties of motion, such as inertia and damping. This paper focuses on the perception aspect. We asked and answered a normative question: "What computation should the robotic agent carry out to optimally perceive incomplete or noisy sensory observations?" Empirical EEG data were collected, and probabilistic representations that served as world state distributions were learned and evaluated in a Generative Adversarial Network framework. A ROS framework was established and connected to a Gazebo environment containing a digital twin of an indoor space and a virtual model of a robotic wheelchair. Signal processing and statistical analyses were implemented to identify the most discriminative features in the spatial-spectral-temporal dimensions, which were then used to construct the world model for the robotic agent to interpret user motion intentions as a Bayesian observer.
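The Bayesian-observer idea can be made concrete with a small sketch in which the posterior over intentions is proportional to the prior times the likelihood of a noisy observation. Everything specific here is an assumption for illustration: the three intention labels, the single scalar feature, and the Gaussian likelihoods with made-up means and spreads stand in for the learned world state distributions that the abstract describes.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def update_belief(prior, observation, feature_model):
    """One Bayes update: posterior is proportional to prior * likelihood.

    prior:         dict mapping intention -> probability
    feature_model: dict mapping intention -> (mean, std) of the
                   observed feature under that intention (hypothetical)
    """
    unnormalized = {
        state: prior[state] * gaussian_pdf(observation, *feature_model[state])
        for state in prior
    }
    total = sum(unnormalized.values())
    return {state: value / total for state, value in unnormalized.items()}

# Hypothetical feature statistics for three motion intentions.
feature_model = {
    "decelerate": (-1.0, 0.5),
    "maintain": (0.0, 0.5),
    "accelerate": (1.0, 0.5),
}
belief = {"decelerate": 1 / 3, "maintain": 1 / 3, "accelerate": 1 / 3}

# Feed noisy observations one at a time; each posterior becomes the next prior.
for obs in [0.8, 0.6, 1.1]:
    belief = update_belief(belief, obs, feature_model)

most_likely = max(belief, key=belief.get)
```

Because the belief stays a full probability distribution rather than a hard label, a downstream controller could scale velocity changes by confidence, which fits the abstract's goal of smooth rather than stepwise speed adjustment.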

A privacy-preserving data storage and service framework based on deep learning and blockchain for construction workers' wearable IoT sensors

Nov 19, 2022

Abstract: Classifying brain signals collected by wearable Internet of Things (IoT) sensors, especially brain-computer interfaces (BCIs), is one of the fastest-growing areas of research. However, research has mostly ignored the secure storage and privacy protection issues of collected personal neurophysiological data. Therefore, in this article, we try to bridge this gap and propose a secure privacy-preserving protocol for implementing BCI applications. We first transformed brain signals into images and used a generative adversarial network to generate synthetic signals to protect data privacy. Subsequently, we applied the paradigm of transfer learning for signal classification. The proposed method was evaluated in a case study, and the results indicate that real electroencephalogram data augmented with artificially generated samples provide superior classification performance. In addition, we proposed a blockchain-based scheme and developed a prototype on Ethereum, which aims to make storing, querying, and sharing personal neurophysiological data and analysis reports secure and privacy-aware. The rights of three main transaction bodies (construction workers, BCI service providers, and project managers) are described, and the advantages of the proposed system are discussed. We believe this paper provides a well-rounded solution to safeguard private data against cyber-attacks, level the playing field for BCI application developers, and ultimately improve professional well-being in the industry.

Brain informed transfer learning for categorizing construction hazards

Nov 17, 2022

Abstract: A transfer learning paradigm is proposed for "knowledge" transfer between the human brain and a convolutional neural network (CNN) for a construction hazard categorization task. Participants' brain activities are recorded using electroencephalogram (EEG) measurements while they view the same images (target dataset) as the CNN. The CNN is pretrained on the EEG data and then fine-tuned on the construction scene images. The results reveal that the EEG-pretrained CNN achieves a 9% higher accuracy compared with a network with the same architecture but randomly initialized parameters on a three-class classification task. Brain activity from the left frontal cortex exhibits the highest performance gains, thus indicating high-level cognitive processing during hazard recognition. This work is a step toward improving machine learning algorithms by learning from human-brain signals recorded via a commercially available brain-computer interface. More generalized visual recognition systems can be effectively developed based on this "keep human in the loop" approach.
