Vincent Grari

SAKE: Steering Activations for Knowledge Editing

Mar 03, 2025

Abstract: As Large Language Models have been shown to memorize real-world facts, the need to update this knowledge in a controlled and efficient manner arises. Designed with these constraints in mind, Knowledge Editing (KE) approaches propose to alter specific facts in pretrained models. However, they have been shown to suffer from several limitations, including their lack of contextual robustness and their failure to generalize to logical implications related to the fact. To overcome these issues, we propose SAKE, a steering activation method that models a fact to be edited as a distribution rather than a single prompt. Leveraging Optimal Transport, SAKE alters the LLM behavior over a whole fact-related distribution, defined as paraphrases and logical implications. Several numerical experiments demonstrate the effectiveness of this method: SAKE is thus able to perform more robust edits than its existing counterparts.

Controlled Model Debiasing through Minimal and Interpretable Updates

Feb 28, 2025

Abstract: Traditional approaches to learning fair machine learning models often require rebuilding models from scratch, generally without accounting for potentially existing previous models. In a context where models need to be retrained frequently, this can lead to inconsistent model updates, as well as redundant and costly validation testing. To address this limitation, we introduce the notion of controlled model debiasing, a novel supervised learning task relying on two desiderata: that the differences between the new fair model and the existing one should be (i) interpretable and (ii) minimal. After providing theoretical guarantees for this new problem, we introduce a novel algorithm for algorithmic fairness, COMMOD, that is both model-agnostic and does not require the sensitive attribute at test time. In addition, our algorithm is explicitly designed to enforce minimal and interpretable changes between biased and debiased predictions, a property that, while highly desirable in high-stakes applications, is rarely prioritized as an explicit objective in fairness literature. Our approach combines a concept-based architecture and adversarial learning, and we demonstrate through empirical results that it achieves comparable performance to state-of-the-art debiasing methods while performing minimal and interpretable prediction changes.

Post-processing fairness with minimal changes

Aug 27, 2024

Abstract: In this paper, we introduce a novel post-processing algorithm that is both model-agnostic and does not require the sensitive attribute at test time. In addition, our algorithm is explicitly designed to enforce minimal changes between biased and debiased predictions, a property that, while highly desirable, is rarely prioritized as an explicit objective in fairness literature. Our approach leverages a multiplicative factor applied to the logit value of probability scores produced by a black-box classifier. We demonstrate the efficacy of our method through empirical evaluations, comparing its performance against four other debiasing algorithms on two widely used datasets in fairness research.
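
The logit-scaling step mentioned in this abstract can be illustrated with a minimal sketch. It assumes a single multiplicative factor that is simply passed in; the abstract does not say how the factor is chosen, and the helper name `rescale_probability` is hypothetical.

```python
import math

def rescale_probability(p: float, factor: float) -> float:
    """Multiply the logit of a probability score by `factor`, then map back to (0, 1).

    Hypothetical helper: the abstract does not specify how the factor is
    obtained, so it is taken as an input here.
    """
    logit = math.log(p / (1.0 - p))  # requires 0 < p < 1
    return 1.0 / (1.0 + math.exp(-factor * logit))

# A factor of 1 leaves the score unchanged; a factor below 1 pulls it toward 0.5.
print(rescale_probability(0.8, 1.0))
print(rescale_probability(0.8, 0.5))
```

Because a score of 0.5 has a logit of zero, it is unaffected by any factor, so in this sketch the rescaling only changes how confident the classifier is, not which side of 0.5 a score falls on (for positive factors).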

OptiGrad: A Fair and more Efficient Price Elasticity Optimization via a Gradient Based Learning

Apr 16, 2024

Abstract: This paper presents a novel approach to optimizing profit margins in non-life insurance markets through a gradient descent-based method, targeting three key objectives: 1) maximizing profit margins, 2) ensuring conversion rates, and 3) enforcing fairness criteria such as demographic parity (DP). Traditional pricing optimization methods, which lean heavily on linear and semidefinite programming, encounter challenges in balancing profitability and fairness. These challenges become especially pronounced in situations that necessitate continuous rate adjustments and the incorporation of fairness criteria. Specifically, indirect Ratebook optimization, a widely used method for new business price setting, relies on predictor models such as XGBoost or GLMs/GAMs to estimate downstream individually optimized prices. However, this strategy is prone to sequential errors and struggles to effectively manage optimizations for continuous rate scenarios. In practice, to save time, actuaries frequently opt for optimization within discrete intervals (e.g., a range of [-20%, +20%] with fixed increments), leading to approximate estimations. Moreover, to circumvent infeasible solutions, they often use relaxed constraints, leading to suboptimal pricing strategies. The reverse-engineered nature of traditional models complicates the enforcement of fairness and can lead to biased outcomes. Our method addresses these challenges by employing a direct optimization strategy in the continuous space of rates and by embedding fairness through an adversarial predictor model. This innovation not only reduces sequential errors and simplifies the complexities found in traditional models but also directly integrates fairness measures into the commercial premium calculation. We demonstrate improved margin performance and stronger enforcement of fairness, highlighting the critical need to evolve existing pricing strategies.
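
To make the contrast between discrete-interval search and direct optimization in the continuous space of rates concrete, here is a toy sketch of projected gradient ascent over the interval [-20%, +20%]. Everything in it is an assumption made for illustration: the logistic conversion model, the constants, and the function names are hypothetical and are not taken from the paper, and the fairness term is left out entirely.

```python
import math

# Hypothetical toy numbers, not taken from the paper.
BASE_PREMIUM = 100.0  # premium before any rate adjustment
COST = 80.0           # expected claim cost per policy
SENSITIVITY = 5.0     # how fast conversion drops as the rate increases

def conversion(rate: float) -> float:
    """Assumed logistic conversion probability for a relative rate change."""
    return 1.0 / (1.0 + math.exp(SENSITIVITY * rate))

def expected_margin(rate: float) -> float:
    """Conversion probability times the margin earned on a converted policy."""
    return conversion(rate) * (BASE_PREMIUM * (1.0 + rate) - COST)

def optimize_rate(lo=-0.2, hi=0.2, lr=1e-3, steps=500, eps=1e-6) -> float:
    """Projected gradient ascent on the continuous interval [lo, hi]."""
    rate = 0.0
    for _ in range(steps):
        # Central finite difference as a stand-in for an analytic gradient.
        grad = (expected_margin(rate + eps) - expected_margin(rate - eps)) / (2 * eps)
        # Take an ascent step, then project back onto the allowed interval.
        rate = min(hi, max(lo, rate + lr * grad))
    return rate

best = optimize_rate()
print(best, expected_margin(best))
```

With these toy constants the optimum lies strictly inside the interval, so a coarse grid of fixed increments would generally miss it, which is the kind of approximation the abstract attributes to discrete-interval optimization.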

On the Fairness ROAD: Robust Optimization for Adversarial Debiasing

Oct 27, 2023

Abstract: In the field of algorithmic fairness, significant attention has been put on group fairness criteria, such as Demographic Parity and Equalized Odds. Nevertheless, these objectives, measured as global averages, have raised concerns about persistent local disparities between sensitive groups. In this work, we address the problem of local fairness, which ensures that the predictor is unbiased not only in terms of expectations over the whole population, but also within any subregion of the feature space, unknown at training time. To enforce this objective, we introduce ROAD, a novel approach that leverages the Distributionally Robust Optimization (DRO) framework within a fair adversarial learning objective, where an adversary tries to infer the sensitive attribute from the predictions. Using an instance-level re-weighting strategy, ROAD is designed to prioritize inputs that are likely to be locally unfair, i.e. where the adversary faces the least difficulty in reconstructing the sensitive attribute. Numerical experiments demonstrate the effectiveness of our method: it achieves Pareto dominance with respect to local fairness and accuracy for a given global fairness level across three standard datasets, and also enhances fairness generalization under distribution shift.
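
The gap between global and local fairness described in this abstract can be seen on a small example. The sketch below uses hypothetical toy predictions and two known regions purely for illustration; in the setting of the abstract the subregions are unknown at training time, and this is not the ROAD algorithm itself.

```python
from collections import defaultdict

def demographic_parity_gap(records):
    """Absolute difference in positive-prediction rates between groups s=0 and s=1.

    Each record is (region, s, y_hat) with s and y_hat in {0, 1}.
    """
    positives, counts = defaultdict(int), defaultdict(int)
    for _, s, y_hat in records:
        positives[s] += y_hat
        counts[s] += 1
    return abs(positives[0] / counts[0] - positives[1] / counts[1])

# Hypothetical toy predictions: (region, sensitive attribute, prediction).
records = [
    ("A", 0, 1), ("A", 0, 1), ("A", 1, 0), ("A", 1, 0),
    ("B", 0, 0), ("B", 0, 0), ("B", 1, 1), ("B", 1, 1),
]

global_gap = demographic_parity_gap(records)
local_gaps = {
    region: demographic_parity_gap([r for r in records if r[0] == region])
    for region in ("A", "B")
}
print(global_gap)  # 0.0
print(local_gaps)  # {'A': 1.0, 'B': 1.0}
```

Demographic Parity holds exactly over the whole population, yet within each region the two groups receive opposite predictions, which is the kind of local disparity a global average cannot reveal.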

A fair pricing model via adversarial learning

Mar 07, 2022

Abstract: At the core of insurance business lies classification between risky and non-risky insureds, actuarial fairness meaning that risky insureds should contribute more and pay a higher premium than non-risky or less-risky ones. Actuaries, therefore, use econometric or machine learning techniques to classify, but the distinction between a fair actuarial classification and "discrimination" is subtle. For this reason, there is a growing interest in fairness and discrimination in the actuarial community (Lindholm, Richman, Tsanakas, and Wuthrich, 2022). Presumably, non-sensitive characteristics can serve as substitutes or proxies for protected attributes. For example, the color and model of a car, combined with the driver's occupation, may lead to an undesirable gender bias in the prediction of car insurance prices. Surprisingly, we will show that (1) debiasing the predictor alone may be insufficient to maintain adequate accuracy. Indeed, the traditional pricing model is currently built in a two-stage structure that considers many potentially biased components such as car or geographic risks. We will show that this traditional structure has significant limitations in achieving fairness. For this reason, we have developed a novel pricing model approach. Recently, some approaches (Blier-Wong, Cossette, Lamontagne, and Marceau, 2021; Wuthrich and Merz, 2021) have shown the value of autoencoders in pricing. In this paper, we will show that (2) this can be generalized to multiple pricing factors (geographic, car type), and (3) it is well adapted to a fairness context, since it allows debiasing the set of pricing components. We extend this main idea to a general framework in which a single whole pricing model is trained by generating the geographic and car pricing components needed to predict the pure premium while mitigating the unwanted bias according to the desired metric.

Fairness without the sensitive attribute via Causal Variational Autoencoder

Sep 10, 2021

Abstract: In recent years, most fairness strategies in machine learning models focus on mitigating unwanted biases by assuming that the sensitive information is observed. However, this is not always possible in practice. Due to privacy purposes and various regulations such as GDPR in the EU, many personal sensitive attributes are frequently not collected. We notice a lack of approaches for mitigating bias in such difficult settings, in particular for achieving classical fairness objectives such as Demographic Parity and Equalized Odds. By leveraging recent developments for approximate inference, we propose an approach to fill this gap. Based on a causal graph, we rely on a new variational auto-encoding based framework named SRCVAE to infer a sensitive information proxy, which serves for bias mitigation in an adversarial fairness approach. We empirically demonstrate significant improvements over existing works in the field. We observe that the generated proxy's latent space recovers sensitive information and that our approach achieves a higher accuracy while obtaining the same level of fairness on two real datasets, as measured using common fairness definitions.

Learning Unbiased Representations via Rényi Minimization

Sep 07, 2020

Abstract: In recent years, significant work has been done to include fairness constraints in the training objective of machine learning algorithms. Many state-of-the-art algorithms tackle this challenge by learning a fair representation which captures all the relevant information to predict the output Y while not containing any information about a sensitive attribute S. In this paper, we propose an adversarial algorithm to learn unbiased representations via the Hirschfeld-Gebelein-Rényi (HGR) maximal correlation coefficient. We leverage recent work which has been done to estimate this coefficient by learning deep neural network transformations and use it in a min-max game to penalize the intrinsic bias in a multidimensional latent representation. Compared to other dependence measures, the HGR coefficient captures more information about the non-linear dependencies with the sensitive variable, making the algorithm more efficient in mitigating bias in the representation. We empirically evaluate and compare our approach and demonstrate significant improvements over existing works in the field.
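
The claim that the HGR coefficient captures non-linear dependencies can be checked on a toy case. The HGR maximal correlation is the supremum of the Pearson correlation between f(X) and g(Y) over transformations f and g, so any fixed pair gives a lower bound. The sketch below uses hand-picked transformations on hypothetical data; the abstract's approach instead learns them with neural networks.

```python
import math

def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical toy data: y depends on x deterministically but non-linearly.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x ** 2 for x in xs]

# Linear correlation misses the dependence entirely.
linear = pearson(xs, ys)
# Hand-picked transformations f(x) = x^2 and g(y) = y give a lower bound on HGR.
transformed = pearson([x ** 2 for x in xs], ys)
print(linear, transformed)
```

Here the linear correlation is zero while the transformed correlation reaches one, so a penalty based on linear correlation alone would treat these two variables as unrelated.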

Adversarial Learning for Counterfactual Fairness

Aug 30, 2020

Abstract: In recent years, fairness has become an important topic in the machine learning research community. In particular, counterfactual fairness aims at building prediction models which ensure fairness at the most individual level. Rather than globally considering equity over the entire population, the idea is to imagine what any individual would look like with a variation of a given attribute of interest, such as a different gender or race. Existing approaches rely on Variational Auto-encoding of individuals, using Maximum Mean Discrepancy (MMD) penalization to limit the statistical dependence of inferred representations with their corresponding sensitive attributes. This enables the simulation of counterfactual samples used for training the target fair model, the goal being to produce similar outcomes for every alternate version of any individual. In this work, we propose to rely on an adversarial neural learning approach that enables more powerful inference than with MMD penalties, and is particularly well suited to the continuous setting, where values of sensitive attributes cannot be exhaustively enumerated. Experiments show significant improvements in terms of counterfactual fairness for both the discrete and the continuous settings.

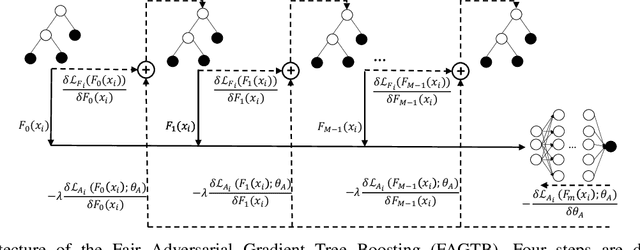

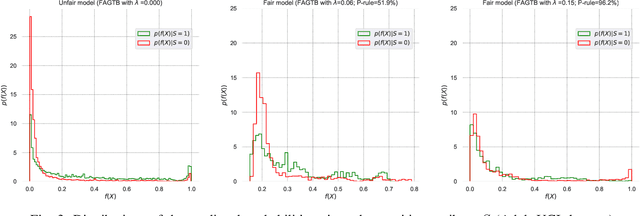

Fair Adversarial Gradient Tree Boosting

Nov 18, 2019

Abstract: Fair classification has become an important topic in machine learning research. While most bias mitigation strategies focus on neural networks, we noticed a lack of work on fair classifiers based on decision trees even though they have proven very efficient. In an up-to-date comparison of state-of-the-art classification algorithms on tabular data, tree boosting outperforms deep learning. For this reason, we have developed a novel approach to adversarial gradient tree boosting. The objective of the algorithm is to predict the output $Y$ with gradient tree boosting while minimizing the ability of an adversarial neural network to predict the sensitive attribute $S$. The approach incorporates at each iteration the gradient of the neural network directly in the gradient tree boosting. We empirically assess our approach on 4 popular data sets and compare against state-of-the-art algorithms. The results show that our algorithm achieves a higher accuracy while obtaining the same level of fairness, as measured using a set of different common fairness definitions.
