Vincent Frappier

Is graph-based feature selection of genes better than random?

Nov 19, 2019

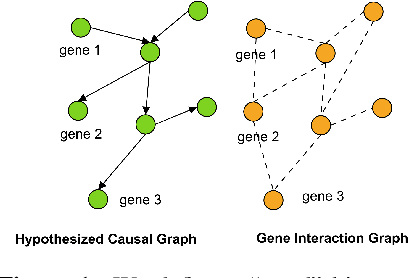

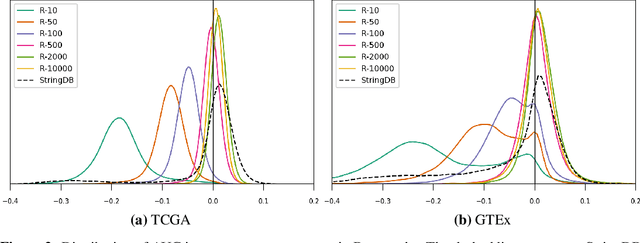

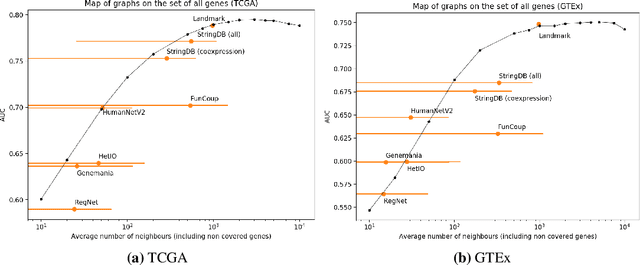

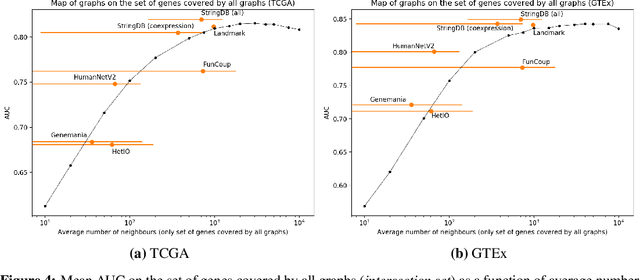

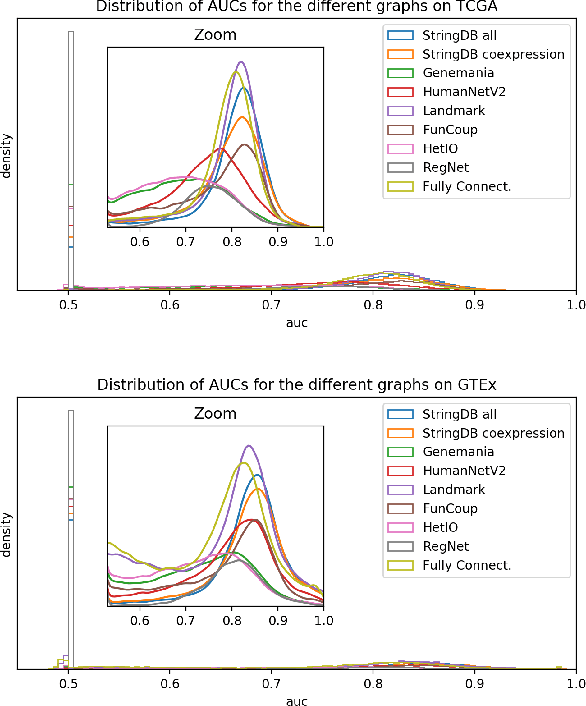

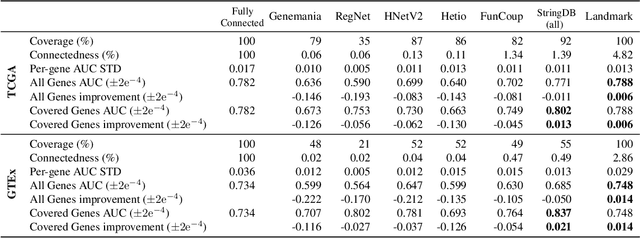

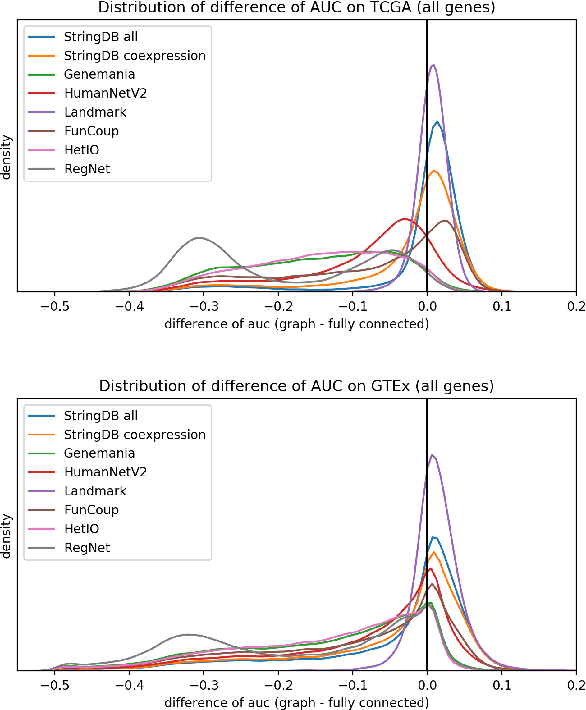

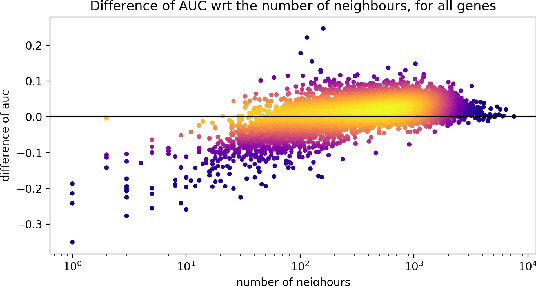

Abstract: Gene interaction graphs aim to capture various relationships between genes and represent decades of biology research. When trying to make predictions from genomic data, those graphs could be used to overcome the curse of dimensionality by making machine learning models sparser and more consistent with common biological knowledge. In this work, we focus on assessing whether those graphs capture dependencies seen in gene expression data better than random. We formulate a condition that graphs should satisfy to provide good prior knowledge and propose to test it using a 'Single Gene Inference' (SGI) task. We compare random graphs with seven major gene interaction graphs published by different research groups, aiming to measure the true benefit of using biologically relevant graphs in this context. Our analysis finds that dependencies can be captured almost as well at random, which suggests that, in terms of gene expression levels, the relevant information about the state of the cell is spread across many genes.

Analysis of Gene Interaction Graphs for Biasing Machine Learning Models

May 06, 2019

Abstract: Gene interaction graphs aim to capture various relationships between genes and can be used to create more biologically intuitive models for machine learning. Many such graphs are available, and they can differ in the number of genes and edges covered. In this work, we attempt to evaluate the biases provided by those graphs by using them for 'Single Gene Inference' (SGI), which we believe serves as a proxy for more relevant prediction tasks. The SGI task assesses how well a gene's neighbors in a particular graph can 'explain' the gene itself, in comparison to the baseline of using all the genes in the dataset. We evaluate seven major gene interaction graphs created by different research groups on two distinct datasets, TCGA and GTEx. We find that some graphs perform on par with the unbiased baseline for most genes with a significantly smaller feature set.
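
The SGI comparison described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the synthetic data, the hypothetical adjacency list `graph`, the helper names `make_expression` and `sgi_accuracy`, and the nearest-centroid classifier are all assumptions chosen to keep the example dependency-free. The sketch predicts whether a target gene is above its median expression, once from its graph neighbors and once from all other genes.

```python
import random
import statistics


def make_expression(n_samples=200, n_genes=20, seed=0):
    """Synthetic data (assumption): g0 is driven by g1, g2, g3; the rest is noise."""
    rng = random.Random(seed)
    genes = [f"g{i}" for i in range(n_genes)]
    samples = []
    for _ in range(n_samples):
        row = {g: rng.gauss(0.0, 1.0) for g in genes}
        row["g0"] = row["g1"] + row["g2"] + row["g3"] + 0.1 * rng.gauss(0.0, 1.0)
        samples.append(row)
    return genes, samples


def sgi_accuracy(samples, target, features, train_frac=0.75):
    """Predict whether `target` exceeds its training median using only `features`."""
    n_train = int(len(samples) * train_frac)
    train, test = samples[:n_train], samples[n_train:]
    threshold = statistics.median(row[target] for row in train)

    # Nearest-centroid classifier: one mean feature vector per class (high / low).
    centroids = {}
    for label in (True, False):
        rows = [r for r in train if (r[target] > threshold) == label]
        centroids[label] = [statistics.fmean(r[f] for r in rows) for f in features]

    def predict(row):
        def sq_dist(label):
            return sum((row[f] - c) ** 2 for f, c in zip(features, centroids[label]))
        return sq_dist(True) < sq_dist(False)

    hits = sum(predict(r) == (r[target] > threshold) for r in test)
    return hits / len(test)


genes, samples = make_expression()
graph = {"g0": ["g1", "g2", "g3"]}  # hypothetical gene interaction graph

# SGI with graph neighbors versus the baseline that uses every other gene.
neighbors_acc = sgi_accuracy(samples, "g0", graph["g0"])
all_genes_acc = sgi_accuracy(samples, "g0", [g for g in genes if g != "g0"])
print(f"neighbors: {neighbors_acc:.2f}  all genes: {all_genes_acc:.2f}")
```

A graph provides a useful bias for a gene when the neighbor-only score is close to the all-genes score while using far fewer features. Replacing `graph["g0"]` with a random sample of genes of the same size gives the random-graph comparison.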

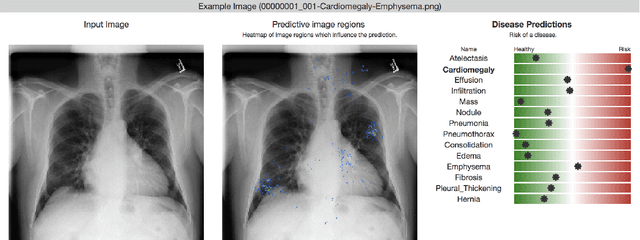

Chester: A Web Delivered Locally Computed Chest X-Ray Disease Prediction System

Jan 31, 2019

Abstract: Deep learning has shown promise to augment radiologists and improve the standard of care globally. Two main issues that complicate deploying these systems are patient privacy and scaling to the global population. To deploy a system at scale with minimal computational cost while preserving privacy, we present a web-delivered (but locally run) system for diagnosing chest X-rays. Code is delivered via a URL to a web browser (including on cell phones), but the patient data remains on the user's machine and all processing occurs locally. The system is designed to be used as a reference, where a user can process an image to confirm or aid in their diagnosis. The system contains three main components: out-of-distribution detection, disease prediction, and prediction explanation. The system is open source and freely available here: https://mlmed.org/tools/xray/