Vinay

IntentionNet: Map-Lite Visual Navigation at the Kilometre Scale

Jul 03, 2024

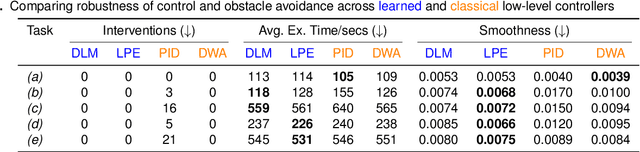

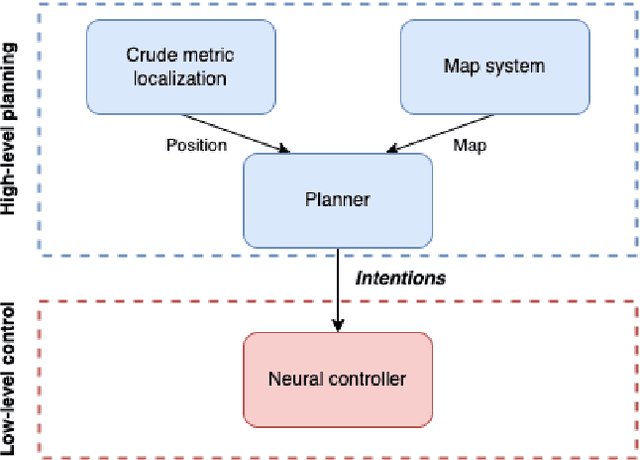

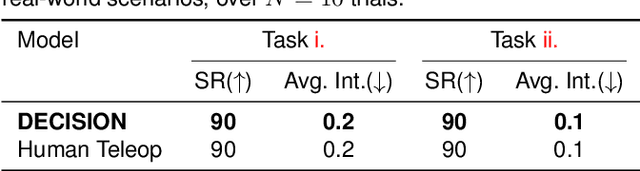

Abstract: This work explores the challenges of creating a scalable and robust robot navigation system that can traverse both indoor and outdoor environments to reach distant goals. We propose a navigation system architecture called IntentionNet that employs a monolithic neural network as the low-level planner/controller, and uses a general interface that we call intentions to steer the controller. The paper proposes two types of intentions, Local Path and Environment (LPE) and Discretised Local Move (DLM), and shows that DLM is robust to significant metric positioning and mapping errors. The paper also presents Kilo-IntentionNet, an instance of the IntentionNet system using the DLM intention that is deployed on a Boston Dynamics Spot robot, and which successfully navigates through complex indoor and outdoor environments over distances of up to a kilometre with only noisy odometry.
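To make the idea of a discretised intention concrete, here is a minimal sketch of how a nearby waypoint might be reduced to a coarse steering label. Everything in it is an assumption for illustration: the abstract does not list the DLM label set, so the four values, the function name `discretise_local_move`, and the `turn_threshold` and `stop_radius` parameters are hypothetical and are not taken from the paper.

```python
import math
from enum import Enum


class Intention(Enum):
    # Hypothetical label set; the abstract does not enumerate the DLM values.
    FORWARD = "forward"
    LEFT = "left"
    RIGHT = "right"
    STOP = "stop"


def discretise_local_move(robot_xy, robot_heading, waypoint_xy,
                          turn_threshold=math.radians(30), stop_radius=0.5):
    """Map a nearby waypoint to a coarse intention (illustrative only)."""
    dx = waypoint_xy[0] - robot_xy[0]
    dy = waypoint_xy[1] - robot_xy[1]
    # Close enough to the waypoint: signal a stop.
    if math.hypot(dx, dy) < stop_radius:
        return Intention.STOP
    # Bearing of the waypoint relative to the robot heading, wrapped to [-pi, pi).
    bearing = math.atan2(dy, dx) - robot_heading
    bearing = (bearing + math.pi) % (2 * math.pi) - math.pi
    if bearing > turn_threshold:
        return Intention.LEFT
    if bearing < -turn_threshold:
        return Intention.RIGHT
    return Intention.FORWARD
```

In this sketch, a position estimate that is off by a few tens of centimetres usually leaves the label unchanged, because the bearing is compared only against a wide angular threshold. That is one plausible reading of why a discretised intention could tolerate metric positioning errors; the paper itself should be consulted for the actual mechanism.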

End-to-End Partially Observable Visual Navigation in a Diverse Environment

Sep 16, 2021

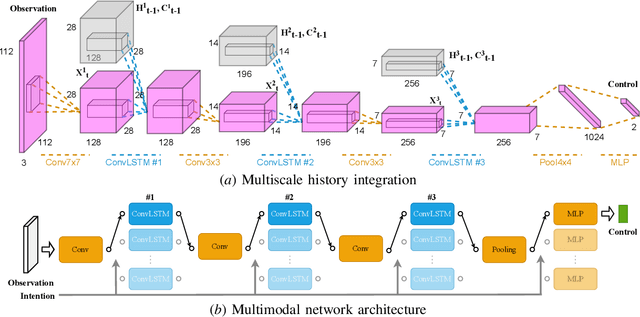

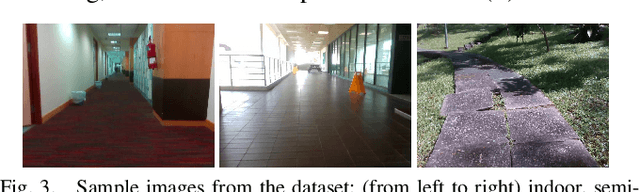

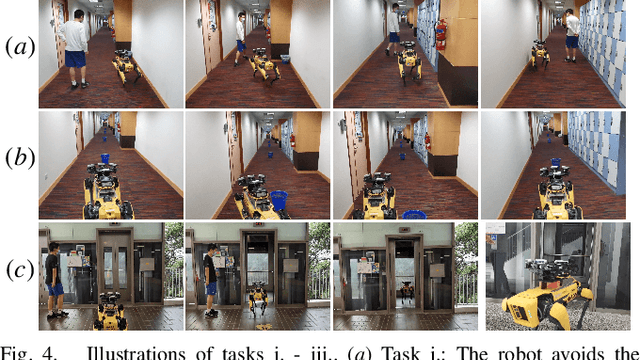

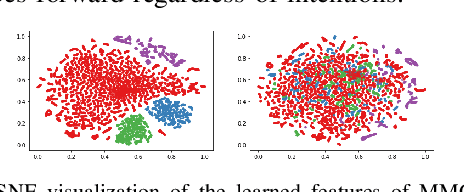

Abstract: How can a robot navigate successfully in a rich and diverse environment, indoors or outdoors, along an office corridor or a trail in the park, on flat ground, a staircase, or an elevator? To this end, this work addresses three challenges: (i) complex visual observations, (ii) partial observability of local sensing, and (iii) multimodal navigation behaviors that depend on both the local environment and the high-level goal. We propose a novel neural network (NN) architecture to represent a local controller and leverage the flexibility of the end-to-end approach to learn a powerful policy. To tackle complex visual observations, we extract multiscale spatial information through convolution layers. To deal with partial observability, we encode rich history information in LSTM-like modules. Importantly, we integrate the two into a single unified architecture that exploits convolutional memory cells to track the observation history at multiple spatial scales, which can capture the complex spatiotemporal dependencies between observations and controls. We additionally condition the network on the high-level goal in order to generate different navigation behavior modes. Specifically, we propose to use independent memory cells for different modes to prevent mode collapse in the learned policy. We implemented the NN controller on the SPOT robot and evaluated it on three challenging tasks with partial observations: adversarial pedestrian avoidance, blind-spot obstacle avoidance, and elevator riding. Our model significantly outperforms CNNs, conventional LSTMs, and the ablated versions of our model. A demo video will be publicly available, showing our SPOT robot traversing many different locations on our university campus.
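The idea of keeping independent memory per behavior mode can be illustrated with a toy sketch. This is not the paper's architecture: a scalar leaky integrator stands in for the convolutional memory cell, and the class name `ModeMemoryBank`, the mode names, and the choice to update only the active mode's memory are all assumptions made for illustration.

```python
class ModeMemoryBank:
    """Toy stand-in: one scalar memory per mode, updated by a leaky integrator."""

    def __init__(self, modes, decay=0.5):
        self.decay = decay
        # Each mode gets its own memory, so histories never mix across modes.
        self.state = {mode: 0.0 for mode in modes}

    def step(self, mode, observation):
        # Only the active mode's memory is updated; the others are left untouched.
        previous = self.state[mode]
        self.state[mode] = self.decay * previous + (1.0 - self.decay) * observation
        return self.state[mode]


# Hypothetical mode names, chosen only for this example.
bank = ModeMemoryBank(["go_forward", "turn_left"])
bank.step("go_forward", 1.0)
bank.step("go_forward", 1.0)
bank.step("turn_left", -1.0)
```

Because each mode reads and writes only its own entry, observations gathered while turning do not overwrite the history accumulated while going forward. A shared memory would blend the two histories, which is the kind of interference that separate cells are meant to avoid.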
