Viktor Seib

Generation of Synthetic Images for Pedestrian Detection Using a Sequence of GANs

Jan 14, 2024

Abstract:Creating annotated datasets demands a substantial amount of manual effort. In this proof-of-concept work, we address this issue by proposing a novel image generation pipeline. The pipeline consists of three distinct, previously published generative adversarial networks (GANs), combined in a novel way to augment a dataset for pedestrian detection. Although the generated images are not always visually pleasing to the human eye, our detection benchmark reveals that the results substantially surpass the baseline. The presented proof-of-concept work was done in 2020 and is now published as a technical report after a three-year retention period.

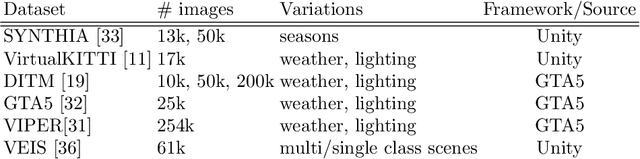

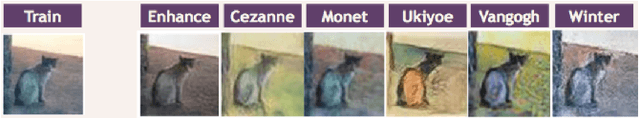

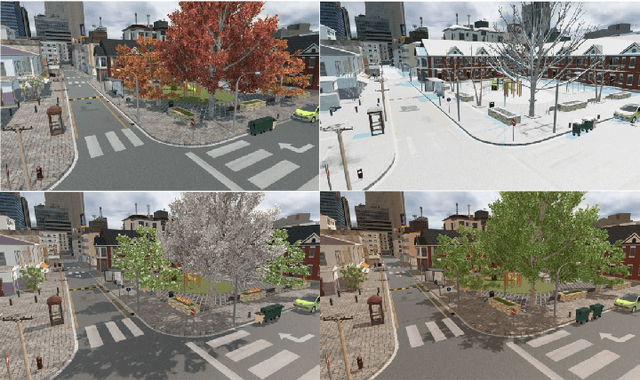

Mixing Real and Synthetic Data to Enhance Neural Network Training -- A Review of Current Approaches

Jul 17, 2020

Abstract:Deep neural networks have gained tremendous importance in many computer vision tasks. However, their power comes at the cost of large amounts of annotated data required for supervised training. In this work we review and compare different techniques available in the literature to improve training results without acquiring additional annotated real-world data. This goal is mostly achieved by applying annotation-preserving transformations to existing data or by synthetically creating more data.

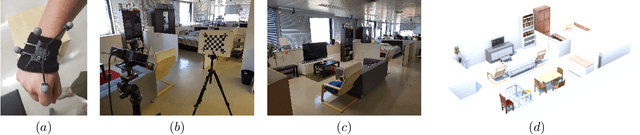

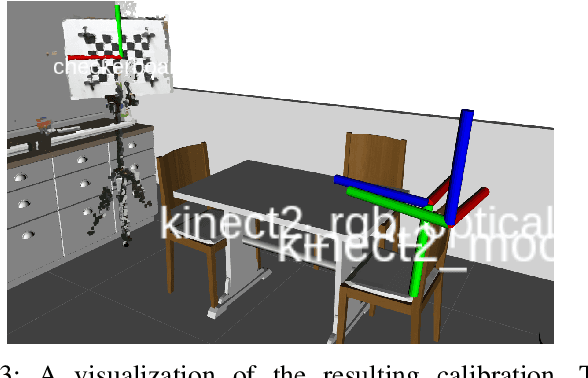

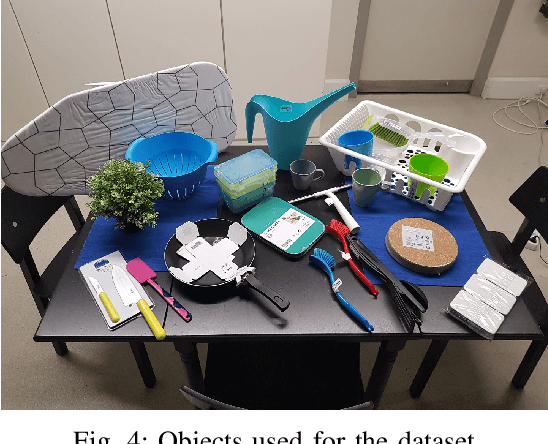

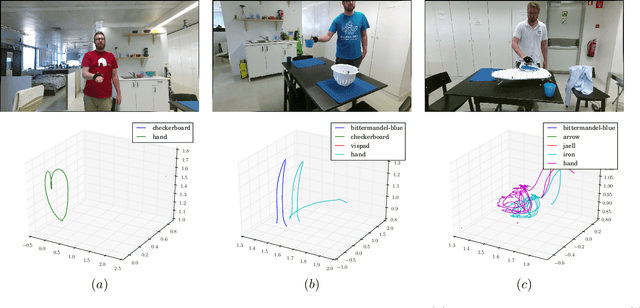

Simitate: A Hybrid Imitation Learning Benchmark

May 15, 2019

Abstract:We present Simitate, a hybrid benchmarking suite targeting the evaluation of imitation learning approaches. We present a dataset containing 1938 sequences in which humans perform daily activities in a realistic environment. The dataset is tightly coupled with an integration into a simulator. RGB and depth streams with a resolution of 960×540 at 30Hz are provided, together with accurate 6-DOF ground truth poses of the demonstrator's hand and of the object at 120Hz. Along with our dataset, we provide the 3D model of the environment used, labeled object images, and pre-trained models. A benchmarking suite that aims to foster comparability and reproducibility supports the development of imitation learning approaches. Further, we propose and integrate evaluation metrics for assessing the quality of both the effect and the trajectory of the imitation performed in simulation. Simitate is available on our project website: https://agas.uni-koblenz.de/data/simitate/

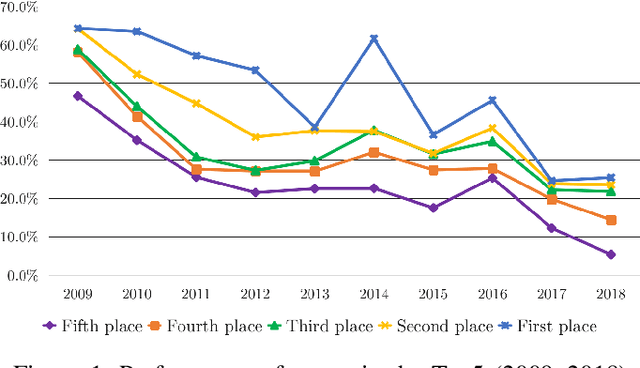

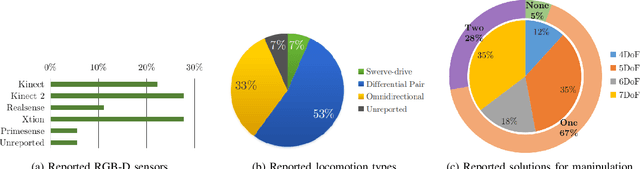

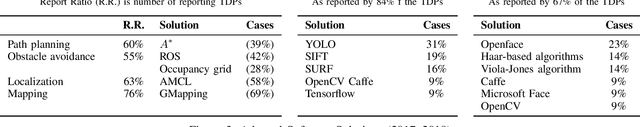

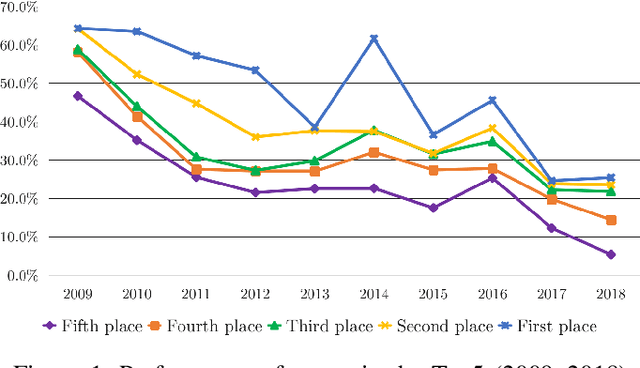

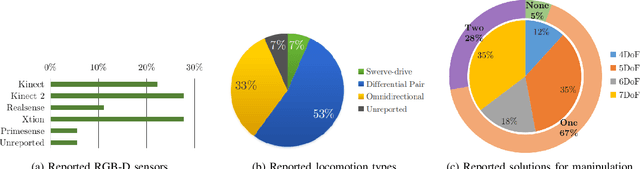

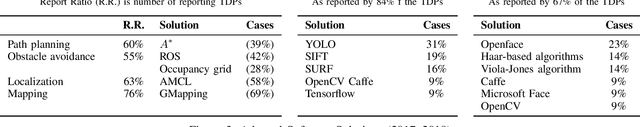

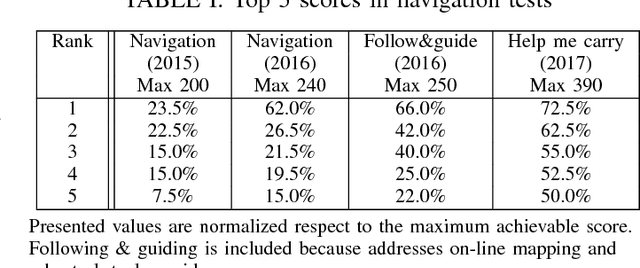

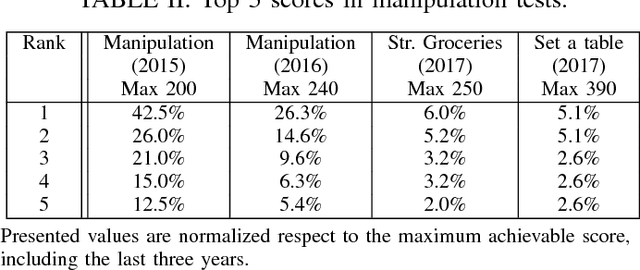

Trends, Challenges and Adopted Strategies in RoboCup@Home (2019 version)

Mar 25, 2019

Abstract:Scientific competitions are crucial in the field of service robotics. They foster knowledge exchange and benchmarking, allowing teams to test their research in unstandardized scenarios. In this paper, we summarize the trending solutions and approaches used in RoboCup@Home. Furthermore, we discuss the achievements attained and the challenges to overcome in relation to the progress required to fulfill the long-term goal of the league. Consequently, we propose a set of milestones for upcoming competitions by considering the current capabilities of the robots and their limitations. With this work we aim to lay the foundations for the creation of roadmaps that can help direct efforts in testing and benchmarking in robotics competitions.

Trends, Challenges and Adopted Strategies in RoboCup@Home

Mar 06, 2019

Abstract:Scientific competitions are crucial in the field of service robotics. They foster knowledge exchange and allow teams to test their research in unstandardized scenarios and compare results. Such is the case with RoboCup@Home. However, keeping track of all the technologies and solution approaches used by teams to solve the tests can be a challenge in itself. Moreover, after eleven years of competitions, it is easy to become too immersed in the field, losing perspective and forgetting about the users' needs and long-term goals. In this paper, we aim to tackle these problems by presenting a summary of the trending solutions and approaches used in RoboCup@Home, and by discussing the achievements attained and the challenges to overcome in relation to the progress required to fulfill the long-term goal of the league. Hence, considering the current capabilities of the robots and their limitations, we propose a set of milestones to address in upcoming competitions. With this work we lay the foundations for the creation of roadmaps that can help direct efforts in testing and benchmarking in robotics competitions.

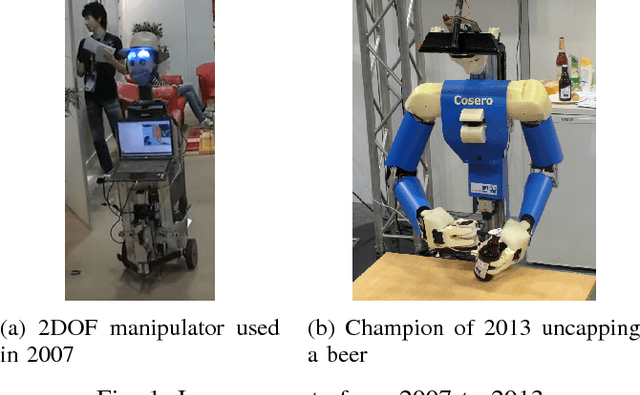

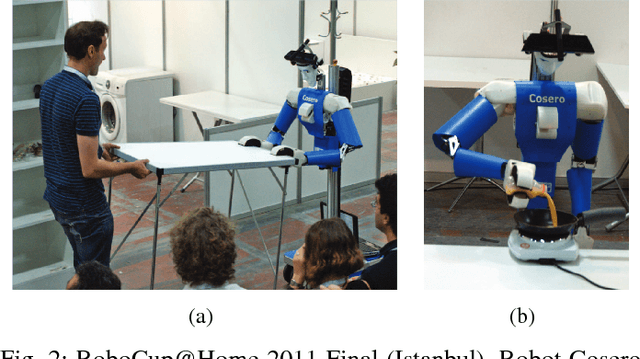

RoboCup@Home: Summarizing achievements in over eleven years of competition

Feb 02, 2019

Abstract:Scientific competitions are important in robotics because they foster knowledge exchange and allow teams to test their research in unstandardized scenarios and compare results. In the field of service robotics, their role becomes crucial. Competitions like RoboCup@Home bring robots to people, a fundamental step toward integrating them into society. In this paper we summarize and discuss, from a qualitative perspective, the differences between the achievements claimed by teams in their team description papers and the results observed during the competition¹. We conclude with a set of important challenges that must be conquered first in order to take robots into people's homes. We believe that competitions are also an excellent opportunity to collect data on direct and unbiased interactions for further research.

¹ The authors belong to several teams that have participated in RoboCup@Home since as early as 2007.

* 6 pages, 4 images, 3 tables. Published in: 2018 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC)

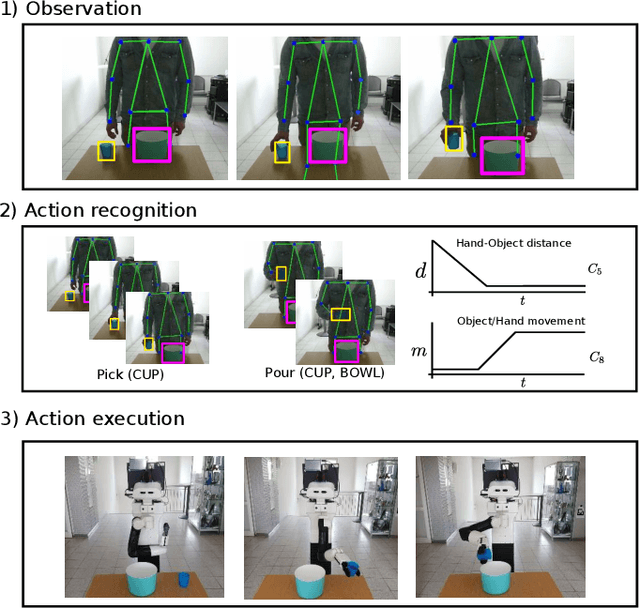

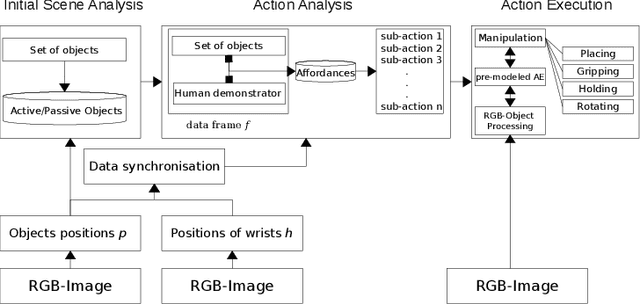

Markerless Visual Robot Programming by Demonstration

Jul 30, 2018

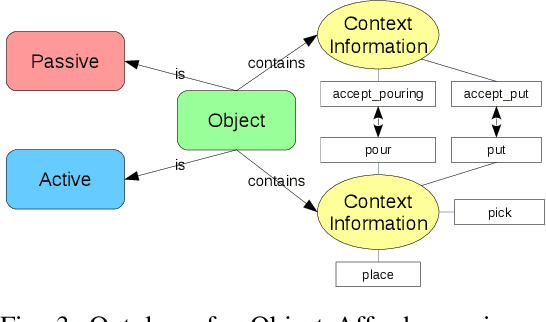

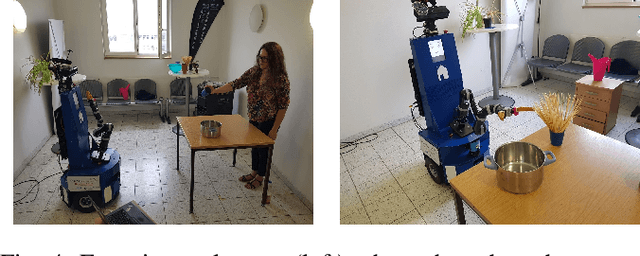

Abstract:In this paper we present an approach for learning to imitate human behavior on a semantic level through markerless visual observation. We analyze a set of spatial constraints on human pose data extracted using convolutional pose machines and on object information extracted from 2D image sequences. A scene analysis based on an ontology of objects and affordances is combined with continuous human pose estimation and spatial object relations. Using a set of constraints, we associate the observed human actions with a set of executable robot commands. We demonstrate our approach on a kitchen task in which the robot learns to prepare a meal.