Vikash Balasubramanian

Polarized-VAE: Proximity Based Disentangled Representation Learning for Text Generation

Apr 22, 2020

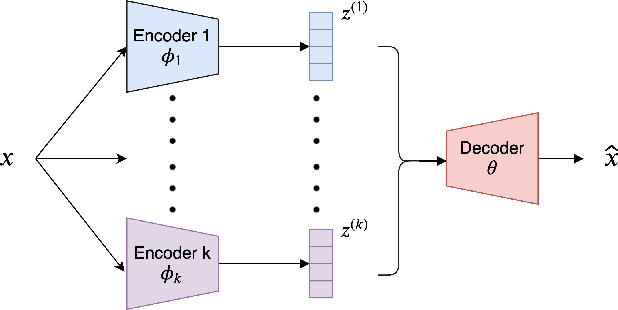

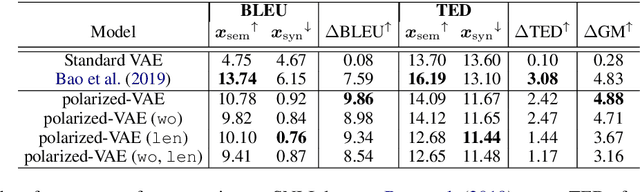

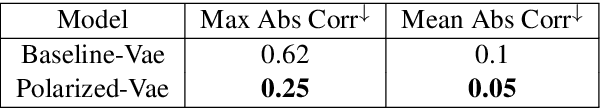

Abstract: Learning disentangled representations of real-world data is a challenging open problem. Most previous methods have focused on either fully supervised approaches that use attribute labels or unsupervised approaches that manipulate the factorization in the latent space of models such as the variational autoencoder (VAE) by training with task-specific losses. In this work, we propose polarized-VAE, a novel approach that disentangles selected attributes in the latent space based on proximity measures reflecting the similarity between data points with respect to these attributes. We apply our method to disentangle the semantics and syntax of a sentence and carry out transfer experiments. Polarized-VAE significantly outperforms the VAE baseline and is competitive with the state-of-the-art approaches, while being a more general framework that is applicable to other attribute disentanglement tasks.

Conditional Response Generation Using Variational Alignment

Nov 10, 2019

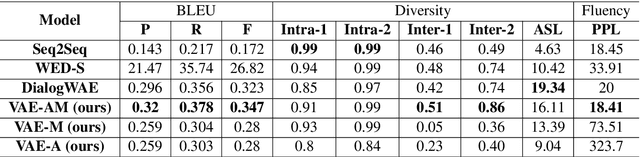

Abstract: Generating relevant/conditioned responses in dialog is challenging, and requires not only proper modelling of context in the conversation, but also the ability to generate fluent sentences during inference. In this paper, we propose a two-step framework based on generative adversarial nets for generating conditioned responses. Our model first learns meaningful representations of sentences, and then uses a generator to *match* the query with the response distribution. Latent codes from the latter are then used to generate responses. Both quantitative and qualitative evaluations show that our model generates more fluent, relevant and diverse responses than the existing state-of-the-art methods.
