
Kashif Khan

Conditional Response Generation Using Variational Alignment

Nov 10, 2019

Figures and Tables:

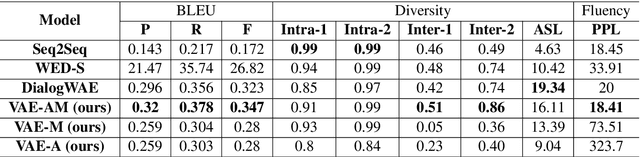

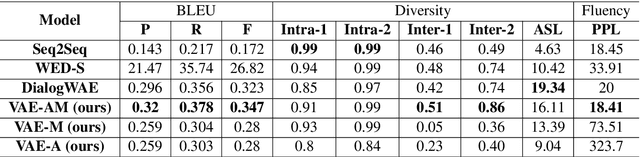

Abstract: Generating relevant/conditioned responses in dialog is challenging, and requires not only proper modelling of context in the conversation, but also the ability to generate fluent sentences during inference. In this paper, we propose a two-step framework based on generative adversarial nets for generating conditioned responses. Our model first learns meaningful representations of sentences, and then uses a generator to *match* the query with the response distribution. Latent codes from the latter are then used to generate responses. Both quantitative and qualitative evaluations show that our model generates more fluent, relevant and diverse responses than the existing state-of-the-art methods.

* 7 pages, 2 tables, 1 figure
