Vid Stojevic

Sparse hierarchical representation learning on molecular graphs

Aug 06, 2019

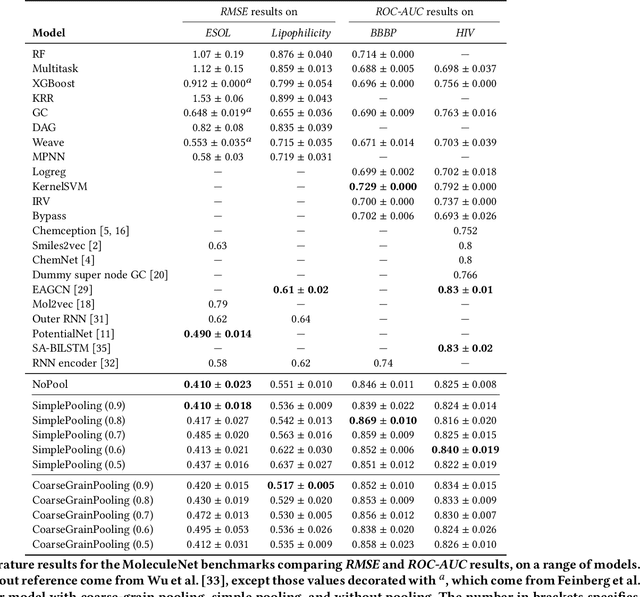

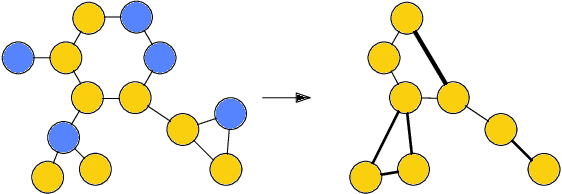

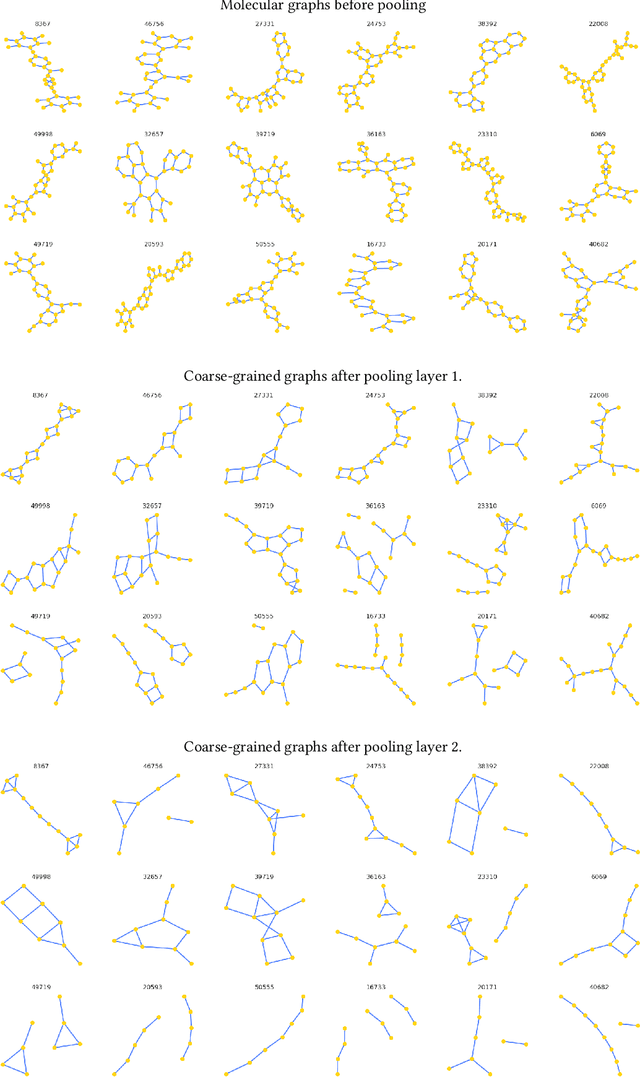

Abstract: Architectures for sparse hierarchical representation learning have recently been proposed for graph-structured data, but so far assume the absence of edge features in the graph. We close this gap and propose a method to pool graphs with edge features, inspired by the hierarchical nature of chemistry. In particular, we introduce two types of pooling layers compatible with an edge-feature graph-convolutional architecture and investigate their performance for molecules relevant to drug discovery on a set of two classification and two regression benchmark datasets of MoleculeNet. We find that our models significantly outperform previous benchmarks on three of the datasets and reach state-of-the-art results on the fourth benchmark, with pooling improving performance for three out of four tasks, keeping performance stable on the fourth task, and generally speeding up the training process.

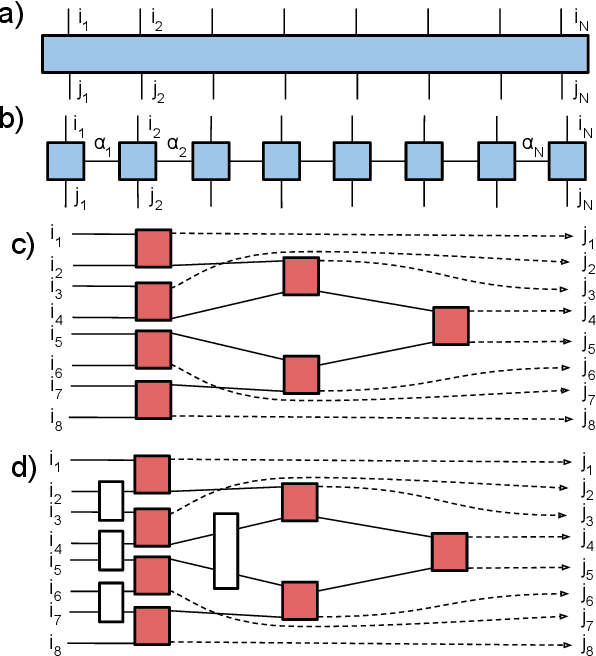

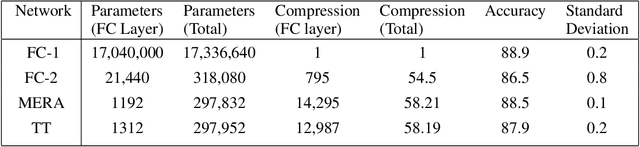

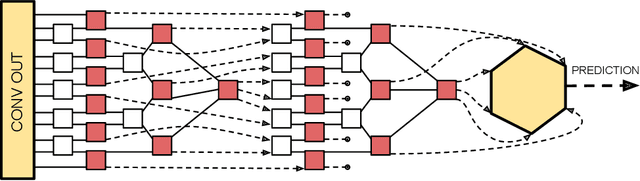

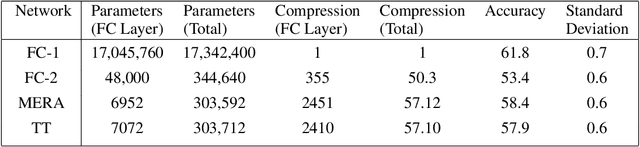

Compact Neural Networks based on the Multiscale Entanglement Renormalization Ansatz

Apr 03, 2018

Abstract: This paper demonstrates a method for tensorizing neural networks based upon an efficient way of approximating scale invariant quantum states, the Multi-scale Entanglement Renormalization Ansatz (MERA). We employ MERA as a replacement for the fully connected layers in a convolutional neural network and test this implementation on the CIFAR-10 and CIFAR-100 datasets. The proposed method outperforms factorization using tensor trains, providing greater compression for the same level of accuracy and greater accuracy for the same level of compression. We demonstrate MERA layers with 14000 times fewer parameters and a reduction in accuracy of less than 1% compared to the equivalent fully connected layers, scaling like O(N).
