Vedran Sabol

Establishing and Evaluating Trustworthy AI: Overview and Research Challenges

Nov 15, 2024

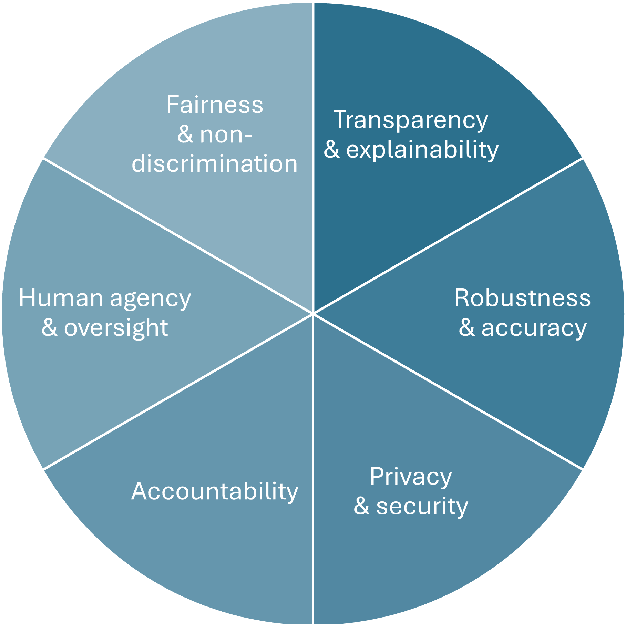

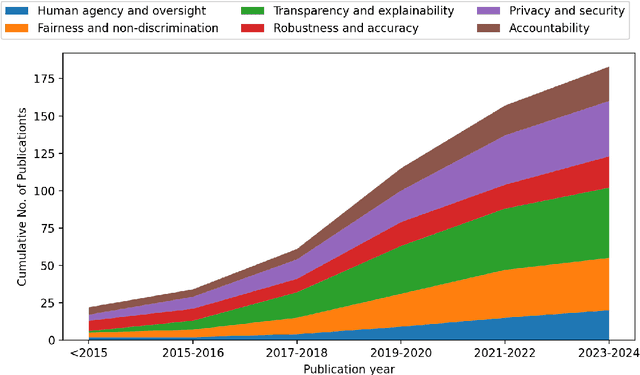

Abstract: Artificial intelligence (AI) technologies (re-)shape modern life, driving innovation in a wide range of sectors. However, some AI systems have yielded unexpected or undesirable outcomes or have been used in questionable manners. As a result, there has been a surge in public and academic discussions about aspects that AI systems must fulfill to be considered trustworthy. In this paper, we synthesize existing conceptualizations of trustworthy AI along six requirements: 1) human agency and oversight, 2) fairness and non-discrimination, 3) transparency and explainability, 4) robustness and accuracy, 5) privacy and security, and 6) accountability. For each one, we provide a definition, describe how it can be established and evaluated, and discuss requirement-specific research challenges. Finally, we conclude this analysis by identifying overarching research challenges across the requirements with respect to 1) interdisciplinary research, 2) conceptual clarity, 3) context-dependency, 4) dynamics in evolving systems, and 5) investigations in real-world contexts. Thus, this paper synthesizes and consolidates a wide-ranging and active discussion currently taking place in various academic sub-communities and public forums. It aims to serve as a reference for a broad audience and as a basis for future research directions.

XAI Methods for Neural Time Series Classification: A Brief Review

Aug 18, 2021

Abstract: Deep learning models have recently demonstrated remarkable results in a variety of tasks, which is why they are increasingly applied in high-stakes domains such as industry, medicine, and finance. Because automatic predictions in these domains can substantially affect a person's well-being and carry considerable financial and legal consequences for an individual or a company, all actions and decisions that result from applying these models have to be accountable. Given that a substantial amount of the data collected in high-stakes domains is in the form of time series, in this paper we examine the current state of eXplainable AI (XAI) methods, with a focus on approaches for opening up deep learning black boxes for the task of time series classification. Finally, our contribution also aims at deriving promising directions for future work, to advance XAI for deep learning on time series data.

* 8 pages, 0 figures, Accepted as a poster presentation
