Vassilios A. Tsachouridis

Seeing double with a multifunctional reservoir computer

May 09, 2023

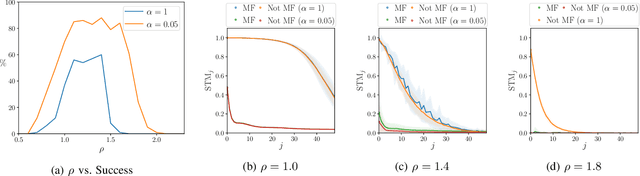

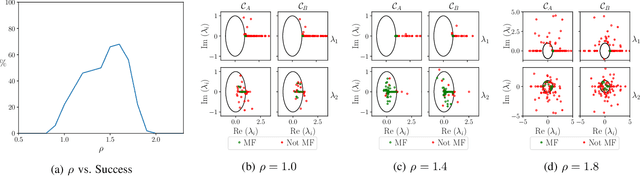

Abstract: Multifunctional biological neural networks exploit multistability in order to perform multiple tasks without changing any network properties. Enabling artificial neural networks (ANNs) to obtain certain multistabilities in order to perform several tasks, where each task is related to a particular attractor in the network's state space, naturally has many benefits from a machine learning perspective. Given the association to multistability, in this paper we explore how the relationship between different attractors influences the ability of a reservoir computer (RC), which is a dynamical system in the form of an ANN, to achieve multifunctionality. We construct the 'seeing double' problem to systematically study how an RC reconstructs a coexistence of attractors when there is an overlap between them. As the amount of overlap increases, we discover that for multifunctionality to occur, there is a critical dependence on a suitable choice of the spectral radius for the RC's internal network connections. A bifurcation analysis reveals how multifunctionality emerges and is destroyed as the RC enters a chaotic regime that can lead to chaotic itinerancy.

Exploring the limits of multifunctionality across different reservoir computers

May 23, 2022

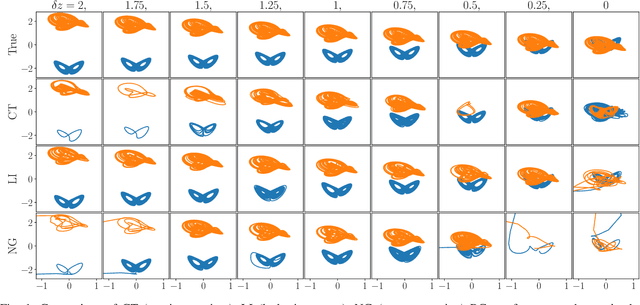

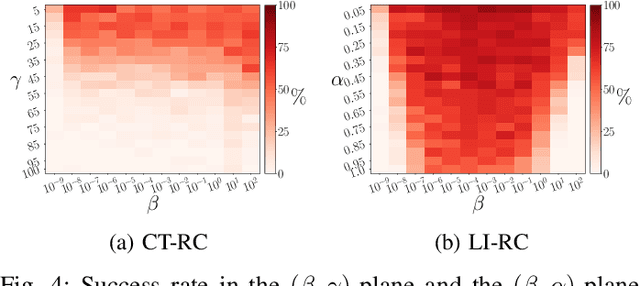

Abstract: Multifunctional neural networks are capable of performing more than one task without changing any network connections. In this paper we explore the performance of a continuous-time, leaky-integrator, and next-generation 'reservoir computer' (RC), when trained on tasks which test the limits of multifunctionality. In the first task we train each RC to reconstruct a coexistence of chaotic attractors from different dynamical systems. By moving the data describing these attractors closer together, we find that the extent to which each RC can reconstruct both attractors diminishes as they begin to overlap in state space. In order to provide a greater understanding of this inhibiting effect, in the second task we train each RC to reconstruct a coexistence of two circular orbits which differ only in the direction of rotation. We examine the critical effects that certain parameters can have in each RC to achieve multifunctionality in this extreme case of completely overlapping training data.

Multifunctionality in a Reservoir Computer

Aug 10, 2020

Abstract: Multifunctionality is a well-observed phenomenological feature of biological neural networks and considered to be of fundamental importance to the survival of certain species over time. These multifunctional neural networks are capable of performing more than one task without changing any network connections. In this paper we investigate how this neurological idiosyncrasy can be achieved in an artificial setting with a modern machine learning paradigm known as 'Reservoir Computing'. A training technique is designed to enable a Reservoir Computer to perform tasks of a multifunctional nature. We explore the critical effects that changes in certain parameters can have on the Reservoir Computer's ability to express multifunctionality. We also expose the existence of several 'untrained attractors': attractors which dwell within the prediction state space of the Reservoir Computer that were not part of the training. We conduct a bifurcation analysis of these untrained attractors and discuss the implications of our results.