Varsha Dani

Improved Reconstruction of Random Geometric Graphs

Jul 29, 2021

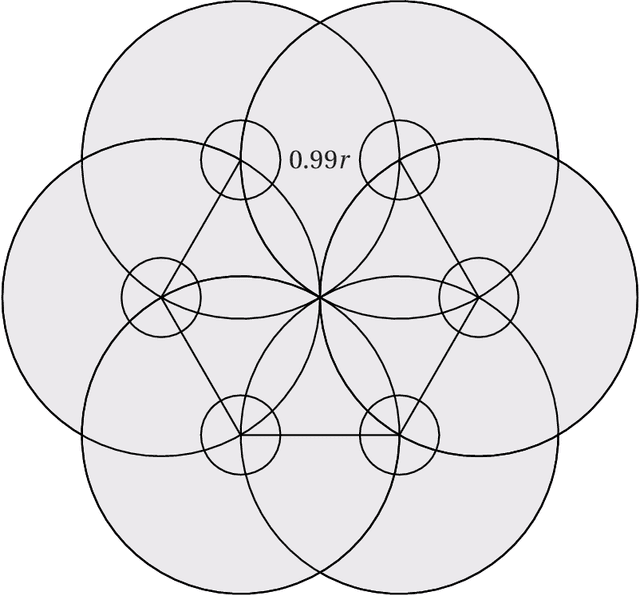

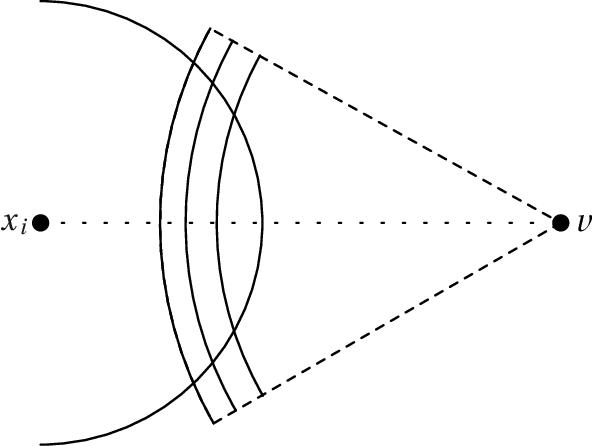

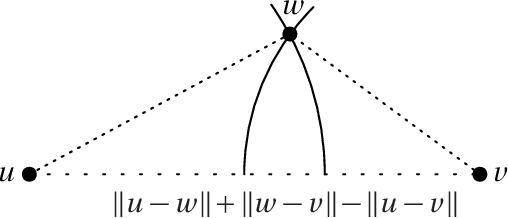

Abstract: Embedding graphs in a geographical or latent space, i.e., inferring locations for vertices in Euclidean space or on a smooth submanifold, is a common task in network analysis, statistical inference, and graph visualization. We consider the classic model of random geometric graphs where $n$ points are scattered uniformly in a square of area $n$, and two points have an edge between them if and only if their Euclidean distance is less than $r$. The reconstruction problem then consists of inferring the vertex positions, up to symmetry, given only the adjacency matrix of the resulting graph. We give an algorithm that, if $r=n^\alpha$ for $\alpha > 0$, with high probability reconstructs the vertex positions with a maximum error of $O(n^\beta)$ where $\beta=1/2-(4/3)\alpha$, until $\alpha \ge 3/8$ where $\beta=0$ and the error becomes $O(\sqrt{\log n})$. This improves over earlier results, which were unable to reconstruct with error less than $r$. Our method estimates Euclidean distances using a hybrid of graph distances and short-range estimates based on the number of common neighbors. We sketch proofs that our results also apply on the surface of a sphere, and (with somewhat different exponents) in any fixed dimension.
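The model described in the abstract can be illustrated with a minimal sketch. This is not the paper's reconstruction algorithm; it only generates a random geometric graph as defined above and counts common neighbors. The function names (`random_geometric_graph`, `common_neighbors`, `lens_area`) and the `seed` parameter are assumptions made for this example. The `lens_area` helper uses the standard formula for the intersection area of two disks of radius $r$ whose centers are at distance $d$; since the point density is 1, that area approximates the expected number of common neighbors away from the boundary of the square, which is why common-neighbor counts carry short-range distance information.

```python
import math
import random


def random_geometric_graph(n, r, seed=0):
    """Scatter n points uniformly in a square of area n and connect
    every pair whose Euclidean distance is less than r."""
    rng = random.Random(seed)
    side = math.sqrt(n)  # square of area n has side sqrt(n)
    points = [(rng.uniform(0, side), rng.uniform(0, side)) for _ in range(n)]
    adj = [set() for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(points[i], points[j]) < r:
                adj[i].add(j)
                adj[j].add(i)
    return points, adj


def common_neighbors(adj, u, v):
    """Number of vertices adjacent to both u and v."""
    return len(adj[u] & adj[v])


def lens_area(d, r):
    """Area of the intersection of two disks of radius r whose centers
    are at distance d (zero when the disks do not overlap)."""
    if d >= 2 * r:
        return 0.0
    return 2 * r * r * math.acos(d / (2 * r)) - (d / 2) * math.sqrt(4 * r * r - d * d)
```

Inverting `lens_area` numerically for an observed common-neighbor count would give one rough short-range distance estimate; the estimator actually used in the paper may differ.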

An Empirical Comparison of Algorithms for Aggregating Expert Predictions

Jun 27, 2012

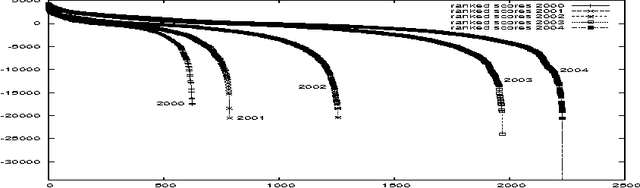

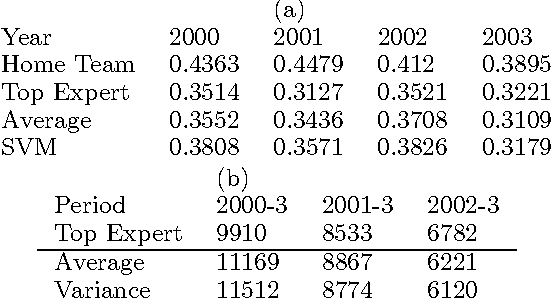

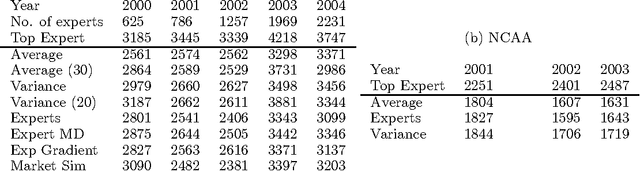

Abstract: Predicting the outcomes of future events is a challenging problem for which a variety of solution methods have been explored and attempted. We present an empirical comparison of a variety of online and offline adaptive algorithms for aggregating experts' predictions of the outcomes of five years of US National Football League games (1319 games) using expert probability elicitations obtained from an Internet contest called ProbabilitySports. We find that it is difficult to improve over simple averaging of the predictions in terms of prediction accuracy, but that there is room for improvement in quadratic loss. Somewhat surprisingly, a Bayesian estimation algorithm which estimates the variance of each expert's prediction exhibits the most consistent superior performance over simple averaging among our collection of algorithms.
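The two evaluation criteria named in the abstract, prediction accuracy and quadratic loss, can be sketched for the simple-averaging baseline. The function names and the 0.5 decision threshold below are assumptions for illustration, and the numbers in the usage note are made up rather than taken from the ProbabilitySports data.

```python
def average_forecast(expert_probs):
    """Simple averaging: the mean of the experts' probabilities for one game."""
    return sum(expert_probs) / len(expert_probs)


def quadratic_loss(prob, outcome):
    """Squared error between a forecast probability and the 0/1 outcome."""
    return (prob - outcome) ** 2


def is_correct(prob, outcome, threshold=0.5):
    """Accuracy criterion: the forecast counts as correct when it puts
    more than `threshold` probability on the side that actually happened."""
    predicted = 1 if prob > threshold else 0
    return predicted == outcome
```

For example, three hypothetical experts reporting 0.6, 0.8, and 0.7 for a game whose favored team wins (outcome 1) give an averaged forecast of about 0.7, which is counted as correct and incurs a quadratic loss of about 0.09. Accuracy only checks which side of the threshold the forecast falls on, while quadratic loss also rewards calibration, which is one way the two criteria can disagree.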

How to Beat the Adaptive Multi-Armed Bandit

Feb 14, 2006

Abstract: The multi-armed bandit is a concise model for the problem of iterated decision-making under uncertainty. In each round, a gambler must pull one of $K$ arms of a slot machine, without any foreknowledge of their payouts, except that they are uniformly bounded. A standard objective is to minimize the gambler's regret, defined as the gambler's total payout minus the largest payout which would have been achieved by any fixed arm, in hindsight. Note that the gambler is only told the payout for the arm actually chosen, not for the unchosen arms. Almost all previous work on this problem assumed the payouts to be non-adaptive, in the sense that the distribution of the payout of arm $j$ in round $i$ is completely independent of the choices made by the gambler on rounds $1, \dots, i-1$. In the more general model of adaptive payouts, the payouts in round $i$ may depend arbitrarily on the history of past choices made by the algorithm. We present a new algorithm for this problem, and prove nearly optimal guarantees for the regret against both non-adaptive and adaptive adversaries. After $T$ rounds, our algorithm has regret $O(\sqrt{T})$ with high probability (the tail probability decays exponentially). This dependence on $T$ is best possible, and matches that of the full-information version of the problem, in which the gambler is told the payouts for all $K$ arms after each round. Previously, even for non-adaptive payouts, the best high-probability bounds known were $O(T^{2/3})$, due to Auer, Cesa-Bianchi, Freund and Schapire. The expected regret of their algorithm is $O(T^{1/2})$ for non-adaptive payouts, but as we show, $\Omega(T^{2/3})$ for adaptive payouts.
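The regret objective can be made concrete with a small sketch for the non-adaptive case, where the full payout table is fixed in advance. This is only the bookkeeping for regret, not the algorithm from the paper. The function name `regret` and the table layout are assumptions for this example, and the sketch uses the common sign convention in which regret is the best fixed arm's total minus the gambler's total, so that smaller values are better.

```python
def regret(payouts, choices):
    """Regret of a sequence of arm choices against the best fixed arm.

    payouts[i][j] is the payout of arm j in round i (fixed in advance,
    i.e. the non-adaptive setting); choices[i] is the arm pulled in round i.
    """
    num_arms = len(payouts[0])
    gambler_total = sum(payouts[i][arm] for i, arm in enumerate(choices))
    best_fixed_total = max(
        sum(row[j] for row in payouts) for j in range(num_arms)
    )
    return best_fixed_total - gambler_total
```

With the hypothetical table `[[1, 0], [0, 1], [1, 0]]`, always pulling arm 0 earns 2, which matches the best fixed arm and gives regret 0, while the choices `[1, 0, 1]` earn nothing and give regret 2. In the bandit setting the gambler never sees this whole table, only the entry for the arm pulled each round, so the quantity can be evaluated only by an outside observer.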
