Vanika Singhal

One-shot Localization and Segmentation of Medical Images with Foundation Models

Oct 28, 2023

Abstract: Vision Transformer (ViT) and Stable Diffusion (SD) models capture rich semantic image features, and recent work has used them for image correspondence tasks on natural images. In this paper, we examine the ability of a variety of pre-trained ViT (DINO, DINOv2, SAM, CLIP) and SD models, trained exclusively on natural images, to solve correspondence problems on medical images. While many works have made a case for in-domain training, we show that models trained on natural images can offer good performance on medical images across different modalities (CT, MR, ultrasound) sourced from various manufacturers, over multiple anatomical regions (brain, thorax, abdomen, extremities), and on a wide variety of tasks. Further, we leverage the correspondence with respect to a template image to prompt a Segment Anything (SAM) model to arrive at single-shot segmentation, achieving a Dice range of 62%-90% across tasks using just one image as reference. We also show that our single-shot method outperforms the recently proposed few-shot segmentation method UniverSeg (Dice range 47%-80%) on most of the semantic segmentation tasks (six out of seven) across medical imaging modalities.
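For readers unfamiliar with the metric quoted above, the Dice score measures the overlap between a predicted mask and a reference mask as twice the intersection divided by the sum of the two mask sizes. The following is a minimal sketch, not the authors' evaluation code; the function name `dice_score` and the convention of returning 1.0 for two empty masks are assumptions made for illustration.

```python
import numpy as np


def dice_score(pred, target):
    """Dice overlap between two binary masks (hypothetical helper)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    intersection = np.logical_and(pred, target).sum()
    return 2.0 * intersection / denom


# Tiny example: one pixel overlaps, mask sizes are 2 and 1.
pred_mask = [[1, 1], [0, 0]]
true_mask = [[1, 0], [0, 0]]
score = dice_score(pred_mask, true_mask)
```

Here `score` is 2 * 1 / (2 + 1), so a reported Dice of 62%-90% corresponds to values of 0.62 to 0.90 on this scale.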

Majorization Minimization Technique for Optimally Solving Deep Dictionary Learning

Dec 11, 2019

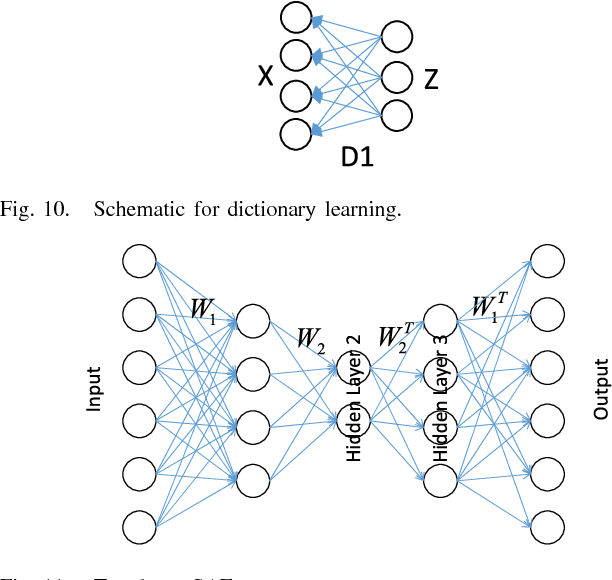

Abstract: The concept of deep dictionary learning has been recently proposed. Unlike shallow dictionary learning, which learns a single level of dictionary to represent the data, it uses multiple layers of dictionaries. So far, the problem could only be solved in a greedy fashion: a single layer of dictionary was learnt in each stage, with the coefficients from the previous layer acting as inputs to the subsequent layer (only the first layer used the training samples as inputs). This was not optimal; there was feedback from shallower to deeper layers but not the other way. This work proposes an optimal solution to deep dictionary learning whereby all the layers of dictionaries are solved simultaneously. We employ the Majorization Minimization approach. Experiments have been carried out on benchmark datasets; they show that optimal learning indeed improves over greedy piecemeal learning. Comparison with other unsupervised deep learning tools (stacked denoising autoencoder, deep belief network, contractive autoencoder and K-sparse autoencoder) shows that our method surpasses them in both accuracy and speed.
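The greedy baseline described in this abstract can be sketched in a few lines. This is an illustrative simplification, not the paper's algorithm: it shows only the layer-by-layer data flow (coefficients of one layer become the input of the next), uses plain alternating least squares with no sparsity penalty or nonlinearity, and does not implement the proposed joint Majorization Minimization solver. The names `learn_layer` and `greedy_deep_dictionary` are hypothetical.

```python
import numpy as np


def learn_layer(X, n_atoms, n_iter=20, seed=0):
    """Fit X ~ D @ Z by alternating least squares (no sparsity; assumption)."""
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((X.shape[0], n_atoms))
    for _ in range(n_iter):
        Z = np.linalg.pinv(D) @ X   # update coefficients with D fixed
        D = X @ np.linalg.pinv(Z)   # update dictionary with Z fixed
    Z = np.linalg.pinv(D) @ X       # final coefficients for the learnt D
    return D, Z


def greedy_deep_dictionary(X, layer_sizes):
    """Learn one dictionary per layer; each layer sees only the previous coefficients."""
    dictionaries = []
    inputs = X                      # the first layer uses the training samples
    for n_atoms in layer_sizes:
        D, Z = learn_layer(inputs, n_atoms)
        dictionaries.append(D)
        inputs = Z                  # deeper layers use the coefficients as inputs
    return dictionaries, inputs


# Toy data: 8 features, 30 samples (columns are samples).
X = np.random.default_rng(1).standard_normal((8, 30))
dicts, Z_final = greedy_deep_dictionary(X, layer_sizes=[6, 4])
```

Because each call to `learn_layer` finishes before the next begins, information flows only from shallower to deeper layers, which is the limitation the abstract attributes to greedy training.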

Discriminative Robust Deep Dictionary Learning for Hyperspectral Image Classification

Dec 11, 2019

Abstract: This work proposes a new deep learning framework tailored to hyperspectral image classification. We learn multiple levels of dictionaries in a robust fashion. The last layer is discriminative and learns a linear classifier. Training proceeds greedily: a single level of dictionary is learnt at a time, and its coefficients are used to train the next level. The coefficients from the final level are used for classification. Robustness is incorporated by minimizing the absolute deviations instead of the more popular Euclidean norm. The inbuilt robustness helps combat the mixed noise (Gaussian and sparse) present in hyperspectral images. Results show that our proposed technique outperforms the other deep learning methods compared: Deep Belief Network (DBN), Stacked Autoencoder (SAE) and Convolutional Neural Network (CNN). The experiments have been carried out on benchmark hyperspectral imaging datasets.

Simultaneous Detection of Multiple Appliances from Smart-meter Measurements via Multi-Label Consistent Deep Dictionary Learning and Deep Transform Learning

Dec 11, 2019

Abstract: Currently there are several well-known approaches to non-intrusive appliance load monitoring: rule-based methods, stochastic finite state machines, neural networks and sparse coding. Recently, several studies have proposed a new approach based on multi-label classification. Different appliances are treated as separate classes, and the task is to identify the classes given the aggregate smart-meter reading. Prior studies in this area have used off-the-shelf algorithms like MLKNN and RAKEL to address this problem. In this work, we propose a deep learning based technique. There are hardly any studies on deep learning based multi-label classification; the fundamental contributions of this work are two new deep learning techniques for this problem, namely deep dictionary learning and deep transform learning. Thorough experimental results on benchmark datasets show marked improvement over existing studies.

Row-Sparse Discriminative Deep Dictionary Learning for Hyperspectral Image Classification

Dec 11, 2019

Abstract: In recent studies in hyperspectral imaging, biometrics and energy analytics, the framework of deep dictionary learning has shown promise. Deep dictionary learning outperforms other traditional deep learning tools when training data is limited; hyperspectral imaging is one example that benefits from this framework. Most of the prior studies were based on the unsupervised formulation, and in all cases the training algorithm was greedy and hence sub-optimal. This is the first work that shows how to solve the deep dictionary learning problem in a joint fashion. Moreover, we propose a new discriminative penalty for the said framework. The third contribution of this work is showing how to incorporate stochastic regularization techniques into the deep dictionary learning framework. Experimental results on hyperspectral image classification show that the proposed technique excels over all state-of-the-art deep and shallow (traditional) learning based methods published in recent times.

Reconstructing Multi-echo Magnetic Resonance Images via Structured Deep Dictionary Learning

Dec 10, 2019

Abstract: Multi-echo magnetic resonance (MR) images are acquired by changing the echo times (for T2 weighted) or relaxation times (for T1 weighted) of scans. The resulting (multi-echo) images are usually used for quantitative MR imaging. Acquiring MR images is a slow process, and acquiring multiple scans of the same cross section for multi-echo imaging is even slower. To accelerate the scan, compressed sensing (CS) based techniques have advocated partial k-space (Fourier domain) scans; the resulting images are reconstructed via structured CS algorithms. In recent times, it has been shown that instead of using off-the-shelf CS, better results can be obtained by adaptive reconstruction algorithms based on structured dictionary learning. In this work, we show that the reconstruction results can be further improved by using structured deep dictionaries. Experimental results on real datasets show that by using our proposed technique the scan time can be cut by half compared to the state-of-the-art.

How to Train Your Deep Neural Network with Dictionary Learning

Dec 22, 2016

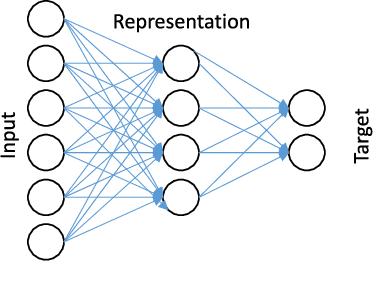

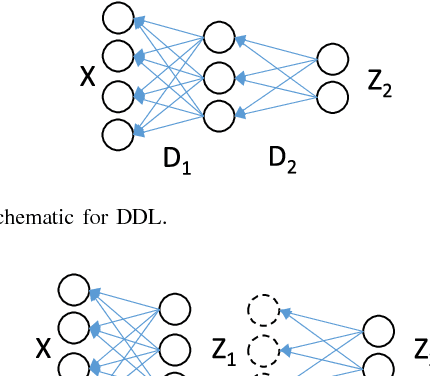

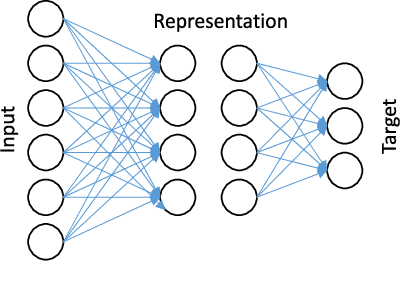

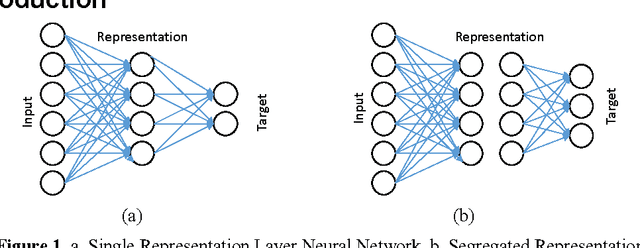

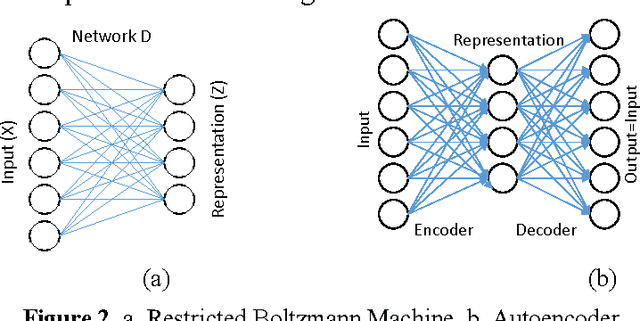

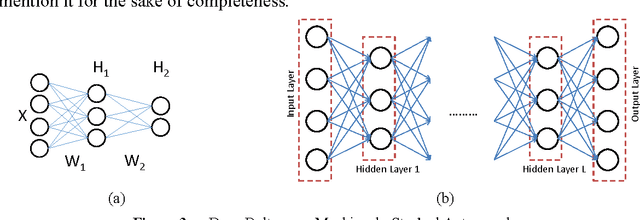

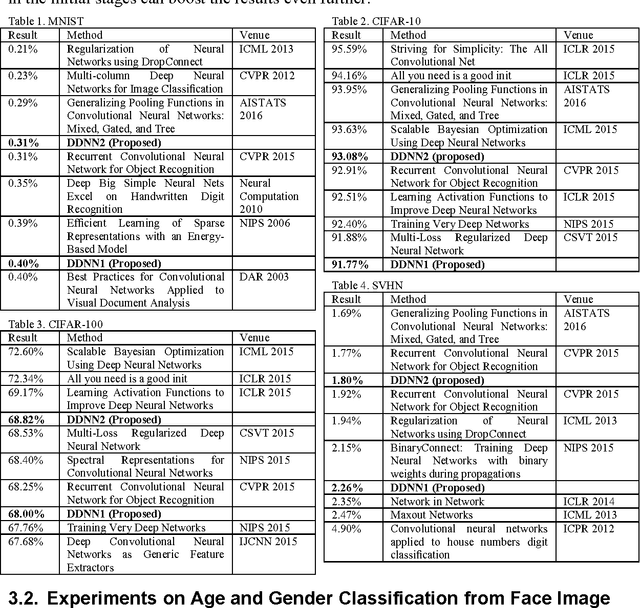

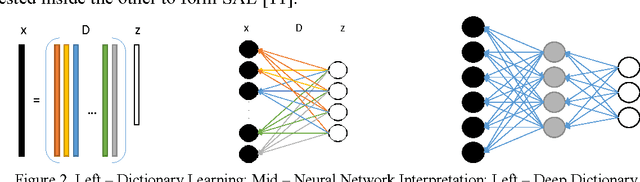

Abstract: Currently there are two predominant ways to train deep neural networks. The first uses restricted Boltzmann machines (RBMs) and the second autoencoders. RBMs are stacked in layers to form a deep belief network (DBN); the final representation layer is attached to the target to complete the deep neural network. Autoencoders are nested one inside the other to form stacked autoencoders; once the stacked autoencoder is learnt, the decoder portion is detached and the target is attached to the deepest layer of the encoder to form the deep neural network. This work proposes a new approach to train deep neural networks using dictionary learning as the basic building block; the idea is to use the features from the shallower layer as inputs for training the next deeper layer. One can use any type of dictionary learning (unsupervised, supervised, discriminative, etc.) as basic units up to the pre-final layer. In the final layer one needs to use the label consistent dictionary learning formulation for classification. We compare our proposed framework with existing state-of-the-art deep learning techniques on benchmark problems; we are always within the top 10 results. On practical problems of age and gender classification, we are better than the best known techniques.

Deep Blind Compressed Sensing

Dec 22, 2016

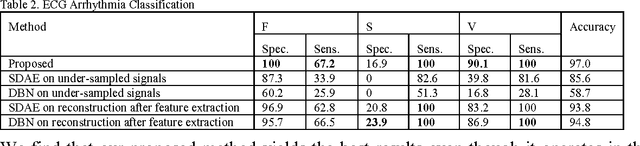

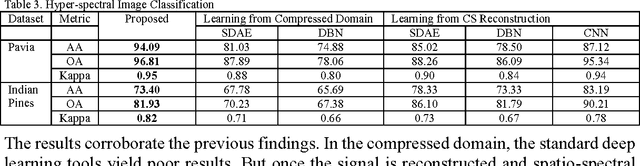

Abstract: This work addresses the problem of extracting deeply learned features directly from compressive measurements. There has been no work in this area. Existing deep learning tools only give good results when applied to the full signal, and usually only after preprocessing. These techniques require the signal to be reconstructed first. In this work we show that by learning directly from the compressed domain, considerably better results can be obtained. This work extends the recently proposed framework of deep matrix factorization in combination with blind compressed sensing; hence the term deep blind compressed sensing. Simulation experiments have been carried out on imaging via a single pixel camera, under-sampled biomedical signals arising in wireless body area networks, and compressive hyperspectral imaging. In all cases, the superiority of our proposed deep blind compressed sensing is evident.
