Vahid Pourahmadi

OFAL: An Oracle-Free Active Learning Framework

Aug 11, 2025

Abstract: In the active learning paradigm, using an oracle to label data has always been a complex and expensive task, and with the emergence of large unlabeled data pools, it would be highly beneficial if we could achieve better results without relying on an oracle. This research introduces OFAL, an oracle-free active learning scheme that utilizes neural network uncertainty. OFAL uses the model's own uncertainty to transform highly confident unlabeled samples into informative uncertain samples. First, we separate and quantify the different parts of uncertainty and introduce Monte Carlo dropout as an approximation of the Bayesian neural network model. Second, by adding a variational autoencoder, we generate new uncertain samples by stepping toward the uncertain part of the latent space, starting from a confident seed sample. By generating these new informative samples, we can perform active learning and enhance the model's accuracy. Lastly, we compare and integrate our method with other widely used active learning sampling methods.
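The abstract does not say how the parts of uncertainty are separated. One common choice, assumed here purely for illustration, is to split the predictive entropy of Monte Carlo dropout samples into an expected-entropy term (often read as aleatoric) and a mutual-information term (often read as epistemic). The function names `entropy` and `decompose_uncertainty` and the toy inputs are hypothetical and are not taken from the paper.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of one class-probability vector."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def decompose_uncertainty(mc_probs):
    """Split predictive uncertainty given T stochastic forward passes.

    mc_probs: list of T probability vectors, one per dropout pass
    (assumed to come from a network evaluated with dropout left on).
    Returns (total, aleatoric, epistemic).
    """
    t = len(mc_probs)
    n_classes = len(mc_probs[0])
    # Mean prediction over the T passes.
    mean_p = [sum(p[c] for p in mc_probs) / t for c in range(n_classes)]
    total = entropy(mean_p)                            # predictive entropy
    aleatoric = sum(entropy(p) for p in mc_probs) / t  # expected entropy
    epistemic = total - aleatoric                      # mutual information
    return total, aleatoric, epistemic


# Toy inputs: every pass agrees on a 50/50 prediction vs. passes that
# are individually certain but disagree with each other.
agree = [[0.5, 0.5], [0.5, 0.5]]
disagree = [[1.0, 0.0], [0.0, 1.0]]
```

In this sketch both toy inputs have the same total uncertainty, but only `disagree` has a non-zero epistemic part, which is the kind of signal a scheme like the one described could use to tell uncertain regions from confident ones.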

Parallel and Limited Data Voice Conversion Using Stochastic Variational Deep Kernel Learning

Sep 08, 2023

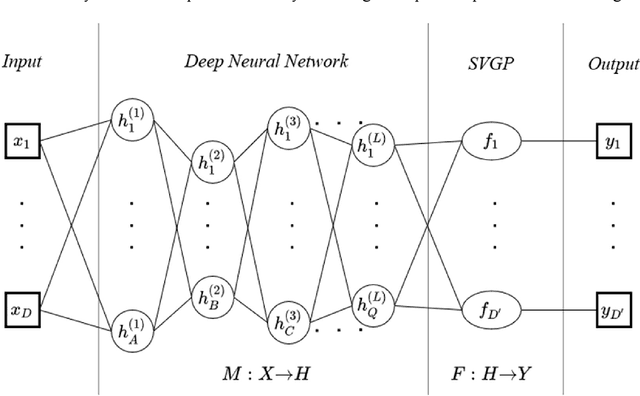

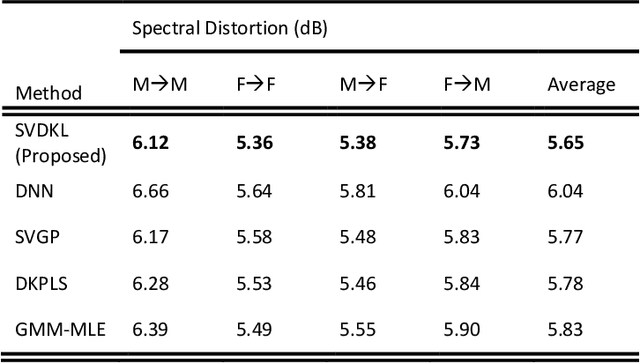

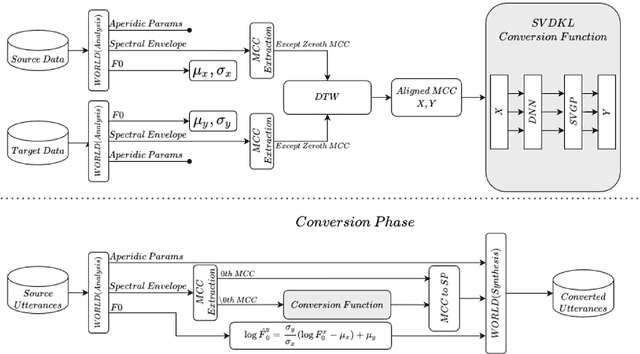

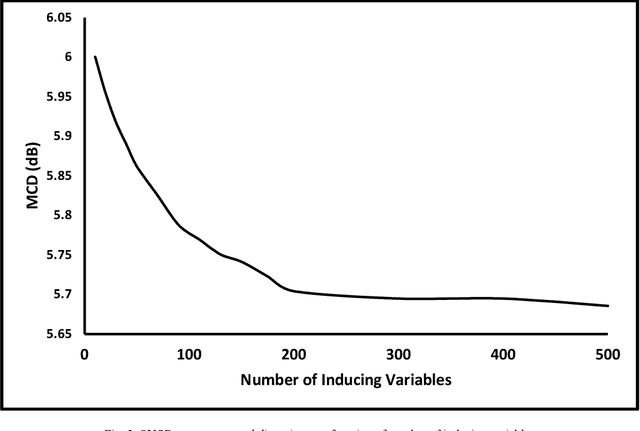

Abstract: Typically, voice conversion is regarded as an engineering problem with limited training data. The reliance on massive amounts of data hinders the practical applicability of deep learning approaches, which have been extensively researched in recent years. On the other hand, statistical methods are effective with limited data but have difficulty modelling complex mapping functions. This paper proposes a voice conversion method that works with limited data and is based on stochastic variational deep kernel learning (SVDKL). SVDKL combines the expressive capability of deep neural networks with the high flexibility of the Gaussian process as a Bayesian, non-parametric method. When the conventional kernel is combined with the deep neural network, it is possible to estimate non-smooth and more complex functions. Furthermore, the model's sparse variational Gaussian process solves the scalability problem and, unlike the exact Gaussian process, allows for the learning of a global mapping function for the entire acoustic space. One of the most important aspects of the proposed scheme is that the model parameters are trained using marginal likelihood optimization, which considers both data fitting and model complexity. Accounting for model complexity increases resistance to overfitting and thereby reduces the amount of training data required. To evaluate the proposed scheme, we examined the model's performance with approximately 80 seconds of training data. The results indicated that our method obtained a higher mean opinion score, smaller spectral distortion, and better preference test results than the compared methods.

Fast Classification with Sequential Feature Selection in Test Phase

Jun 25, 2023

Abstract: This paper introduces a novel approach to active feature acquisition for classification, which is the task of sequentially selecting the most informative subset of features to achieve optimal prediction performance during testing while minimizing cost. The proposed approach involves a new lazy model that is significantly faster and more efficient than existing methods, while still producing comparable accuracy. During the test phase, the proposed approach uses Fisher scores for feature ranking to identify the most important feature at each step. The training dataset is then filtered based on the observed value of the selected feature, and the process repeats until an acceptable accuracy is reached or the feature-acquisition budget is exhausted. The performance of the proposed approach was evaluated on synthetic and real datasets, including our new synthetic dataset, the CUBE dataset, and the real Forest dataset. The experimental results demonstrate that our approach achieves competitive accuracy compared to existing methods, while significantly outperforming them in terms of speed. The source code of the algorithm is available on GitHub: https://github.com/alimirzaei/FCwSFS.
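The loop described in the abstract (rank by Fisher score, acquire the top feature, filter the training set, repeat) can be sketched as follows. This is a minimal illustration under stated assumptions and not the released implementation: features are treated as discrete so that filtering is an exact match, the stopping rule is "budget spent or remaining training labels are pure", and the names `fisher_score`, `classify_sequentially`, and `acquire` are hypothetical.

```python
from collections import Counter


def fisher_score(rows, labels, j):
    """Between-class over within-class scatter of feature j."""
    overall = sum(r[j] for r in rows) / len(rows)
    between = within = 0.0
    for c in set(labels):
        vals = [r[j] for r, y in zip(rows, labels) if y == c]
        mu = sum(vals) / len(vals)
        between += len(vals) * (mu - overall) ** 2
        within += sum((v - mu) ** 2 for v in vals)
    return between / (within + 1e-12)  # small constant avoids division by zero


def classify_sequentially(train_x, train_y, acquire, budget):
    """Lazy classification: acquire features one at a time at test time.

    acquire(j) returns the test sample's value for feature j (assumed to
    carry a cost, hence the budget). Returns (prediction, acquired).
    """
    rows, labels = list(train_x), list(train_y)
    remaining = set(range(len(train_x[0])))
    acquired = []
    while remaining and len(acquired) < budget and len(set(labels)) > 1:
        # Rank unacquired features on the *current* filtered training set.
        best = max(sorted(remaining), key=lambda j: fisher_score(rows, labels, j))
        remaining.discard(best)
        value = acquire(best)
        acquired.append(best)
        # Assumption: exact-match filtering on a discrete feature value.
        kept = [(r, y) for r, y in zip(rows, labels) if r[best] == value]
        if not kept:
            break  # no matching training rows; fall back to the current set
        rows = [r for r, _ in kept]
        labels = [y for _, y in kept]
    prediction = Counter(labels).most_common(1)[0][0]
    return prediction, acquired


# Toy data: the label equals feature 1; features 0 and 2 are weak.
train_x = [[0, 0, 1], [1, 0, 0], [0, 1, 1], [1, 1, 0], [0, 0, 0], [1, 1, 1]]
train_y = [0, 0, 1, 1, 0, 1]
test_sample = [1, 1, 0]
prediction, acquired = classify_sequentially(
    train_x, train_y, acquire=lambda j: test_sample[j], budget=2
)
```

Because no model is fitted ahead of time, all of the work happens per test sample, which is what "lazy" refers to here. For continuous features the exact-match filter would need to be replaced, for example by a neighbourhood around the observed value.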

Progressive Transmission using Recurrent Neural Networks

Aug 03, 2021

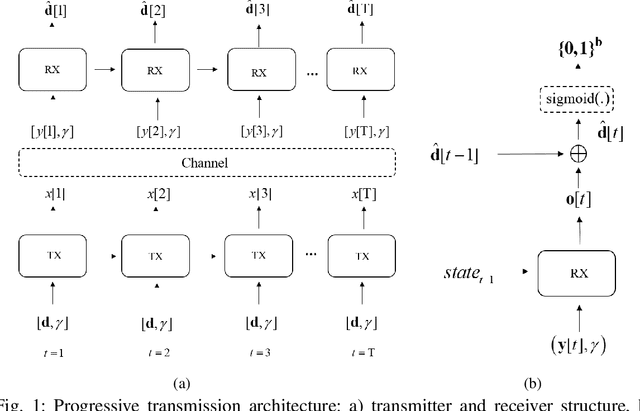

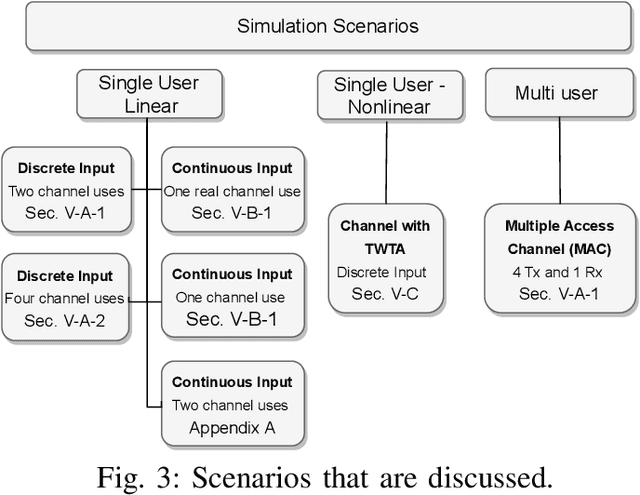

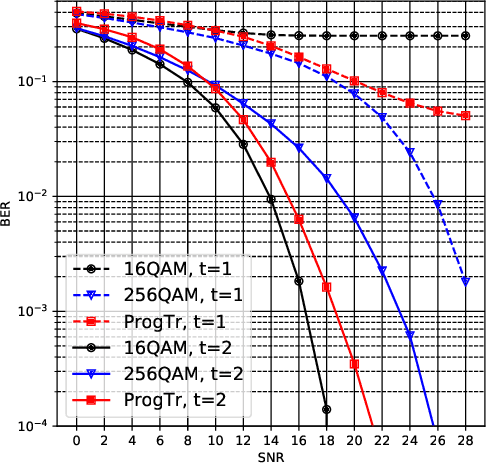

Abstract: In this paper, we investigate a new machine learning-based transmission strategy called progressive transmission, or ProgTr. In ProgTr, there are b variables that should be transmitted using at most T channel uses. The transmitter aims to send the data to the receiver as fast as possible and with as few channel uses as possible (as channel conditions permit), while the receiver refines its estimate after each channel use. We use recurrent neural networks as the building block of both the transmitter and receiver, where the SNR is provided as an input that represents the channel conditions. To show how ProgTr works, the proposed scheme was simulated in different scenarios, including single- and multi-user settings, different channel conditions, and both discrete and continuous input data. The results show that ProgTr can achieve better performance than conventional modulation methods. In addition to performance metrics such as BER, bit-wise mutual information is used to help interpret how the transmitter and receiver operate in ProgTr.

Sleep Stage Scoring Using Joint Frequency-Temporal and Unsupervised Features

Apr 10, 2020

Abstract: Patients with sleep disorders can better manage their lifestyle if they know about their specific conditions. Detection of such sleep disorders is usually possible by analyzing a number of vital signals collected from the patients. To simplify this task, a number of Automatic Sleep Stage Recognition (ASSR) methods have been proposed. Most of these methods use temporal-frequency features extracted from the vital signals. However, due to the non-stationary nature of sleep signals, such schemes do not achieve acceptable accuracy. Recently, some ASSR methods have been proposed which use deep neural networks for unsupervised feature extraction. In this paper, we propose to combine the two ideas and use both temporal-frequency and unsupervised features at the same time. To improve the time resolution, each standard epoch is segmented into 5 sub-epochs. Additionally, to enhance the accuracy, we employ three classifiers with different properties and then use an ensemble method as the final classifier. The simulation results show that the proposed method improves on the accuracy of conventional ASSR methods.
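As a rough illustration of the two mechanical steps named in the abstract, the sketch below splits one epoch into 5 equal sub-epochs and combines three classifier outputs. The abstract does not name the ensemble method, so plain majority voting is an assumption here, as are the helper names `split_epoch` and `majority_vote`, the 30-second epoch length, and the 100 Hz sampling rate.

```python
from collections import Counter


def split_epoch(samples, n_sub=5):
    """Split one epoch into n_sub equal-length sub-epochs.

    Trailing samples are dropped if the length is not divisible by n_sub.
    """
    size = len(samples) // n_sub
    return [samples[i * size:(i + 1) * size] for i in range(n_sub)]


def majority_vote(predictions):
    """Return the most frequent label (assumed ensemble rule)."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical 30 s epoch sampled at 100 Hz.
epoch = list(range(3000))
sub_epochs = split_epoch(epoch)

# Hypothetical outputs of three different classifiers for one epoch.
stage = majority_vote(["N2", "N2", "REM"])
```

Features would then be extracted per sub-epoch rather than per epoch, giving the classifiers a finer view of how the signal changes within each scoring window.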

Wi2Vi: Generating Video Frames from WiFi CSI Samples

Dec 30, 2019

Abstract: Objects in an environment affect electromagnetic waves. While this effect varies across frequencies, there is a correlation between them, and a model with enough capacity can capture this correlation between measurements at different frequencies. In this paper, we propose the Wi2Vi model for associating variations in the WiFi channel state information (CSI) with video frames. The proposed Wi2Vi system can generate video frames entirely from CSI measurements. The video frames produced by Wi2Vi provide auxiliary information to a conventional surveillance system in critical circumstances. Our implementation of the Wi2Vi system confirms the feasibility of constructing a system capable of deriving the correlations between measurements in different frequency spectra.

SensorDrop: A Reinforcement Learning Framework for Communication Overhead Reduction on the Edge

Oct 03, 2019

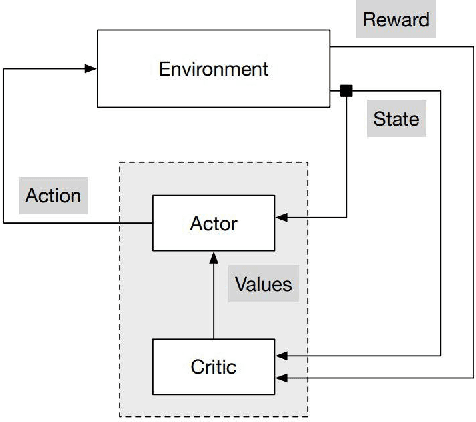

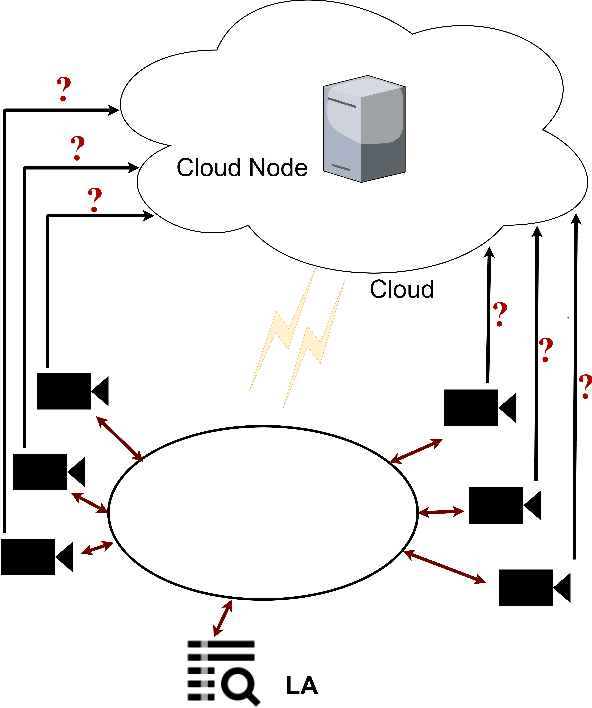

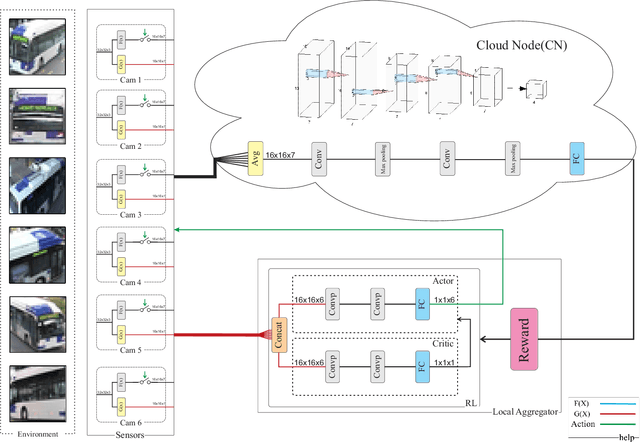

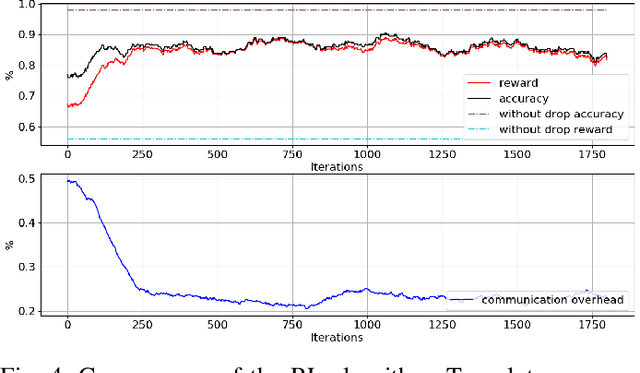

Abstract: In IoT solutions, it is usually desirable to collect data from a large number of distributed IoT sensors at a central node in the cloud for further processing. One of the main design challenges of such solutions is the high communication overhead between the sensors and the central node (especially for multimedia data). In this paper, we aim to reduce the communication overhead and propose a method that determines which sensors should send their data to the central node and which should drop it. The idea is that some sensors may have data that are correlated with others, and some may have data that are not essential for the operation to be performed at the central node. As such decisions are application dependent and may change over time, they should be learned during the operation of the system. To that end, we propose a method based on Advantage Actor-Critic (A2C) reinforcement learning which gradually learns which sensors' data are cost-effective to send to the central node. The proposed approach has been evaluated on a multi-view multi-camera dataset, and we observe a significant reduction in communication overhead with marginal degradation in object classification accuracy.

Propagation Channel Modeling by Deep learning Techniques

Aug 19, 2019

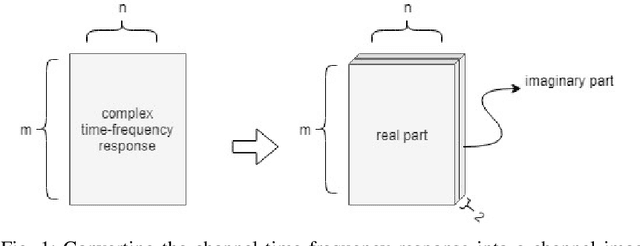

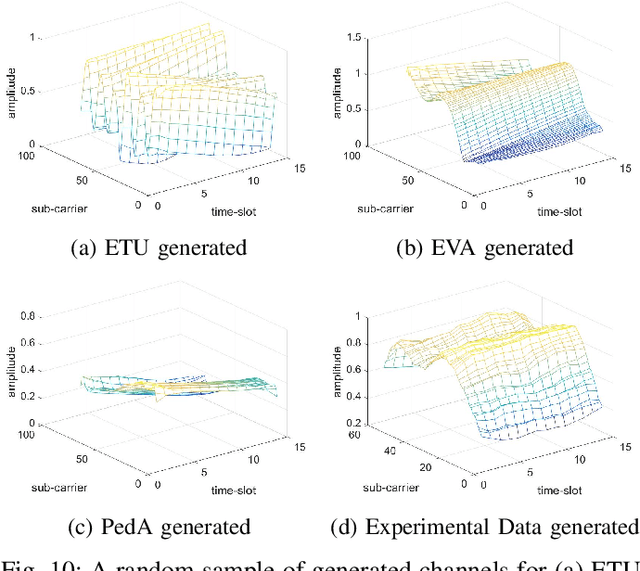

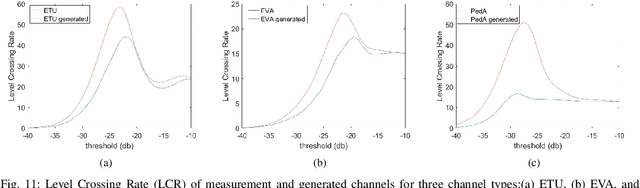

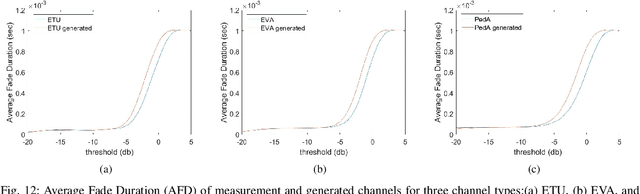

Abstract: The channel, as the medium for the propagation of electromagnetic waves, is one of the most important parts of a communication system. Being aware of how the channel affects the propagating waves is essential for the design, optimization, and performance analysis of a communication system. For this purpose, a proper channel model is needed. This paper presents a novel propagation channel model which considers the time-frequency response of the channel as an image. It models the distribution of these channel images using Deep Convolutional Generative Adversarial Networks. Moreover, for measurements with different user speeds, the user speed is considered as an auxiliary parameter for the model. StarGAN, an image-to-image translation technique, is used to change the generated channel images with respect to the desired user speed. The performance of the proposed model is evaluated using existing metrics. Furthermore, to capture 2D similarity in both time and frequency, a new metric is introduced. Using this metric, the generated channels show significant statistical similarity to the measurement data.

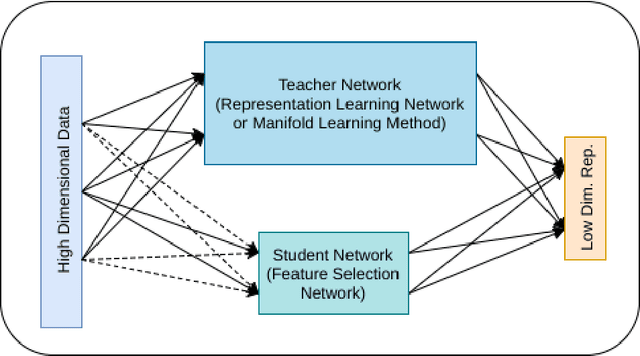

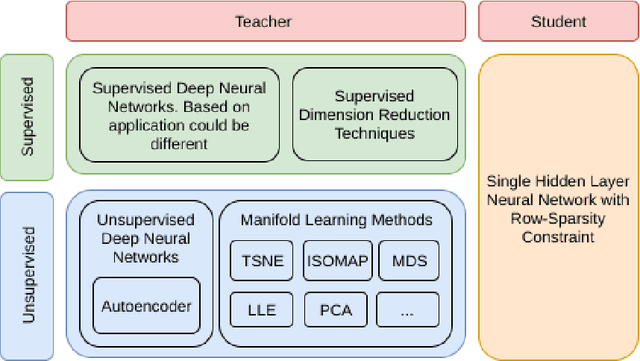

Deep Feature Selection using a Teacher-Student Network

Mar 17, 2019

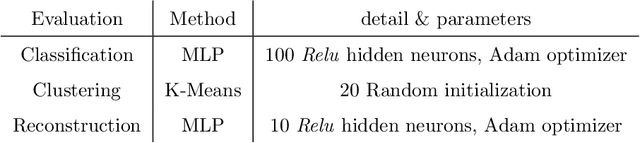

Abstract: High-dimensional data in many machine learning applications leads to computational and analytical complexities. Feature selection provides an effective way of solving these problems by removing irrelevant and redundant features, thus reducing model complexity and improving the accuracy and generalization capability of the model. In this paper, we present a novel teacher-student feature selection (TSFS) method in which a 'teacher' (a deep neural network or a complicated dimension reduction method) is first employed to learn the best representation of the data in a low dimension. Then a 'student' network (a simple neural network) is used to perform feature selection by minimizing the reconstruction error of the low-dimensional representation. Although the teacher-student scheme is not new, to the best of our knowledge, this is the first time that it has been employed for feature selection. The proposed TSFS can be used for both supervised and unsupervised feature selection. This method is evaluated on different datasets and is compared with state-of-the-art feature selection methods. The results show that TSFS performs better in terms of classification and clustering accuracies and reconstruction error. Moreover, experimental evaluations demonstrate a low degree of sensitivity to parameter selection in the proposed method.

Deep UL2DL: Channel Knowledge Transfer from Uplink to Downlink

Dec 16, 2018

Abstract: Knowledge of the channel state information (CSI) at the transmitter side is one of the primary sources of information that can be used for efficient allocation of wireless resources. Obtaining Down-Link (DL) CSI from Up-Link (UL) CSI in FDD systems is not as straightforward as in TDD systems, so users usually feed back the DL-CSI to the transmitter. To remove the need for feedback (and thus reduce signaling overhead), several methods have been studied to estimate DL-CSI from UL-CSI. In this paper, we propose a scheme to infer DL-CSI by observing UL-CSI in which we use two recent deep neural network structures: a) convolutional neural networks and b) generative adversarial networks. The proposed deep network structures first learn a latent model of the environment from the training data. Then, the resulting latent model is used to predict the DL-CSI from the UL-CSI. We have simulated the proposed scheme and evaluated its performance in a few network settings.
