Alireza Abdollahpourrostam

Fast Classification with Sequential Feature Selection in Test Phase

Jun 25, 2023

Abstract: This paper introduces a novel approach to active feature acquisition for classification: the task of sequentially selecting the most informative subset of features to achieve the best prediction performance at test time while minimizing acquisition cost. The proposed approach uses a new lazy model that is significantly faster than existing methods while producing comparable accuracy. During the test phase, it ranks features by Fisher score to identify the most important feature at each step. The training dataset is then filtered based on the observed value of the selected feature, and the process repeats until an acceptable accuracy is reached or the feature-acquisition budget is exhausted. The approach was evaluated on synthetic and real datasets, including our new synthetic dataset, the CUBE dataset, and the real Forest dataset. The experimental results show that our approach achieves accuracy competitive with existing methods while significantly outperforming them in speed. The source code is available on GitHub: https://github.com/alimirzaei/FCwSFS.
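The loop described in the abstract (rank by Fisher score, acquire one feature, filter the training set, repeat) can be sketched roughly as follows. This is an illustrative reading of the abstract, not the code from the linked repository: the function names, the `query` oracle, the tolerance-based filter `tol`, the `min_rows` stopping rule, and the majority-vote prediction are all assumptions made for the example.

```python
import numpy as np

def fisher_scores(X, y, eps=1e-12):
    """Fisher score per feature: between-class scatter / within-class scatter."""
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - overall_mean) ** 2
        within += len(Xc) * Xc.var(axis=0)
    return between / (within + eps)

def classify_sequentially(X_train, y_train, query, budget, tol=0.5, min_rows=5):
    """Acquire up to `budget` features of one test sample, one at a time.

    `query(j)` is a hypothetical oracle returning the test sample's value
    for feature j. `tol` and `min_rows` are assumed filtering parameters.
    """
    X, y = np.asarray(X_train, dtype=float), np.asarray(y_train)
    available = np.ones(X.shape[1], dtype=bool)
    acquired = []
    for _ in range(min(budget, X.shape[1])):
        if len(np.unique(y)) == 1:
            break  # remaining training rows agree on the label
        # Rank only the features that have not been acquired yet.
        scores = np.where(available, fisher_scores(X, y), -np.inf)
        j = int(np.argmax(scores))
        value = query(j)
        available[j] = False
        acquired.append(j)
        # Keep training rows whose feature j is close to the observed value.
        keep = np.abs(X[:, j] - value) <= tol
        if keep.sum() < min_rows:
            break  # too few rows left to filter further
        X, y = X[keep], y[keep]
    # Predict by majority vote over the surviving training rows.
    labels, counts = np.unique(y, return_counts=True)
    return labels[np.argmax(counts)], acquired
```

Because the model is lazy, nothing is fitted ahead of time; all work happens per test sample, and the cost of each step shrinks as the filtered training set gets smaller.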

Revisiting DeepFool: generalization and improvement

Mar 22, 2023

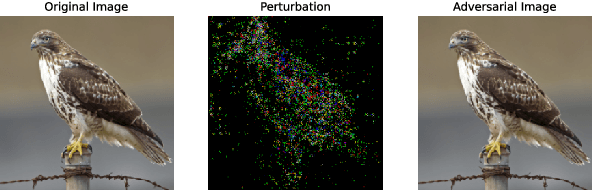

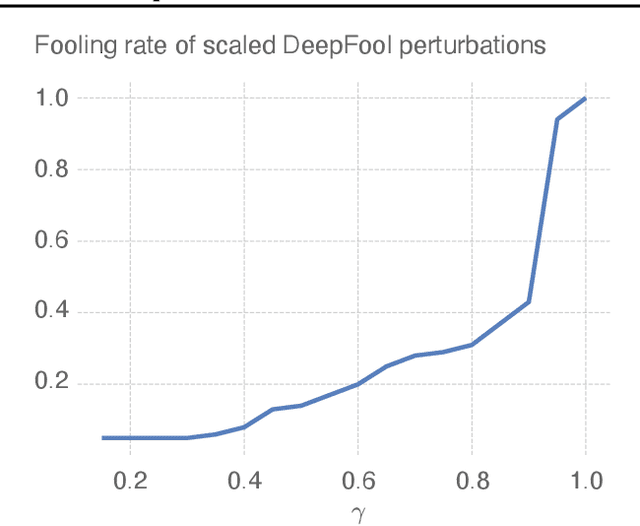

Abstract: Deep neural networks are known to be vulnerable to adversarial examples: inputs that are modified slightly to fool the network into making incorrect predictions. This has led to a significant amount of research on evaluating the robustness of these networks against such perturbations. One particularly important robustness metric is the robustness to minimal ℓ2 adversarial perturbations. However, existing methods for evaluating this metric are either computationally expensive or not very accurate. In this paper, we introduce a new family of adversarial attacks that strike a balance between effectiveness and computational efficiency. Our proposed attacks are generalizations of the well-known DeepFool (DF) attack, while remaining simple to understand and implement. We demonstrate that our attacks outperform existing methods in terms of both effectiveness and computational efficiency. Our proposed attacks are also suitable for evaluating the robustness of large models and can be used to perform adversarial training (AT) to achieve state-of-the-art robustness to minimal ℓ2 adversarial perturbations.
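For context, the baseline that the paper generalizes can be sketched in a few lines. The example below is the original DeepFool iteration for a binary classifier, not the new attack family from this paper: at each step the classifier is linearized and the input is moved by the smallest ℓ2 step that reaches the linearized boundary, r = -f(x) ∇f(x) / ‖∇f(x)‖². The callables `f` and `grad_f` and the default `overshoot` value are assumptions supplied for the example.

```python
import numpy as np

def deepfool_binary(x, f, grad_f, overshoot=0.02, max_iter=50):
    """Original DeepFool for a binary classifier whose label is sign(f(x)).

    `f` maps an input vector to a scalar score and `grad_f` returns its
    gradient; both are user-supplied (hypothetical) callables.
    """
    x0 = np.asarray(x, dtype=float)
    sign0 = np.sign(f(x0))
    r_total = np.zeros_like(x0)
    for _ in range(max_iter):
        # Slight overshoot so the point crosses the boundary, not just touches it.
        x_adv = x0 + (1 + overshoot) * r_total
        fx = f(x_adv)
        if np.sign(fx) != sign0:
            break  # predicted label has flipped
        g = grad_f(x_adv)
        # Minimal l2 step to the boundary of the linearized classifier.
        r_total += -fx * g / (g @ g + 1e-12)
    return x0 + (1 + overshoot) * r_total
```

For an affine classifier the loop stops after a single step, and the perturbation norm is (1 + overshoot) · |f(x)| / ‖w‖, which is the exact distance to the decision boundary scaled by the overshoot.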
