Ulices Santa Cruz

Correct-by-Construction Vision-based Pose Estimation using Geometric Generative Models

Jan 24, 2026

Abstract:We consider the problem of vision-based pose estimation for autonomous systems. While deep neural networks have been successfully used for vision-based tasks, they inherently lack provable guarantees on the correctness of their output, which is crucial for safety-critical applications. We present a framework for designing certifiable neural networks (NNs) for perception-based pose estimation that integrates physics-driven modeling with learning-based estimation. The proposed framework begins by leveraging the known geometry of planar objects commonly found in the environment, such as traffic signs and runway markings, referred to as target objects. At its core, it introduces a geometric generative model (GGM), a neural-network-like model whose parameters are derived from the image formation process of a target object observed by a camera. Once designed, the GGM can be used to train NN-based pose estimators with certified guarantees in terms of their estimation errors. We first demonstrate this framework in uncluttered environments, where the target object is the only object present in the camera's field of view. We extend this using ideas from NN reachability analysis to design a certified object-detection NN that can detect the presence of the target object in cluttered environments. Subsequently, the framework consolidates the certified object detector with the certified pose estimator to design a multi-stage perception pipeline that generalizes the proposed approach to cluttered environments, while maintaining its certified guarantees. We evaluate the proposed framework using both synthetic and real images of various planar objects commonly encountered by autonomous vehicles. Using images captured by an event-based camera, we show that the trained encoder can effectively estimate the pose of a traffic sign in accordance with the certified bound provided by the framework.

NNLander-VeriF: A Neural Network Formal Verification Framework for Vision-Based Autonomous Aircraft Landing

Mar 29, 2022

Abstract:In this paper, we consider the problem of formally verifying a Neural Network (NN) based autonomous landing system. In such a system, a NN controller processes images from a camera to guide the aircraft while approaching the runway. A central challenge for the safety and liveness verification of vision-based closed-loop systems is the lack of mathematical models that capture the relation between the system states (e.g., position of the aircraft) and the images processed by the vision-based NN controller. Another challenge is the limited abilities of state-of-the-art NN model checkers. Such model checkers can reason only about simple input-output robustness properties of neural networks. This limitation creates a gap between the NN model checker abilities and the need to verify a closed-loop system while considering the aircraft dynamics, the perception components, and the NN controller. To this end, this paper presents NNLander-VeriF, a framework to verify vision-based NN controllers used for autonomous landing. NNLander-VeriF addresses the challenges above by exploiting geometric models of perspective cameras to obtain a mathematical model that captures the relation between the aircraft states and the inputs to the NN controller. By converting this model into a NN (with manually assigned weights) and composing it with the NN controller, one can capture the relation between aircraft states and control actions using one augmented NN. Such an augmented NN model leads to a natural encoding of the closed-loop verification into several NN robustness queries, which state-of-the-art NN model checkers can handle. Finally, we evaluate our framework to formally verify the properties of a trained NN and we show its efficiency.

Provably Safe Model-Based Meta Reinforcement Learning: An Abstraction-Based Approach

Sep 03, 2021

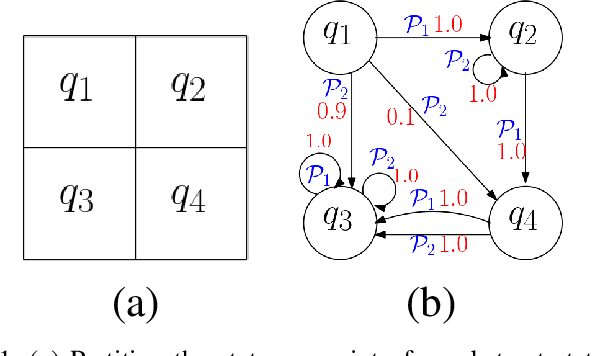

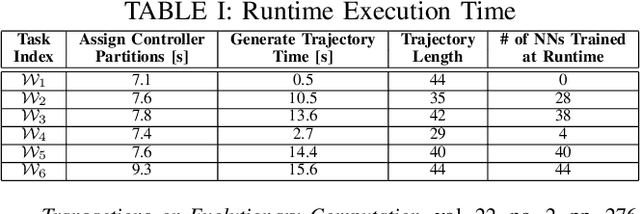

Abstract:While conventional reinforcement learning focuses on designing agents that can perform one task, meta-learning aims, instead, to solve the problem of designing agents that can generalize to different tasks (e.g., environments, obstacles, and goals) that were not considered during the design or the training of these agents. In this spirit, in this paper, we consider the problem of training a provably safe Neural Network (NN) controller for uncertain nonlinear dynamical systems that can generalize to new tasks that were not present in the training data while preserving strong safety guarantees. Our approach is to learn a set of NN controllers during the training phase. When the task becomes available at runtime, our framework will carefully select a subset of these NN controllers and compose them to form the final NN controller. Critical to our approach is the ability to compute a finite-state abstraction of the nonlinear dynamical system. This abstract model captures the behavior of the closed-loop system under all possible NN weights, and is used to train the NNs and compose them when the task becomes available. We provide theoretical guarantees that govern the correctness of the resulting NN. We evaluated our approach on the problem of controlling a wheeled robot in cluttered environments that were not present in the training data.

Safe-by-Repair: A Convex Optimization Approach for Repairing Unsafe Two-Level Lattice Neural Network Controllers

Apr 06, 2021

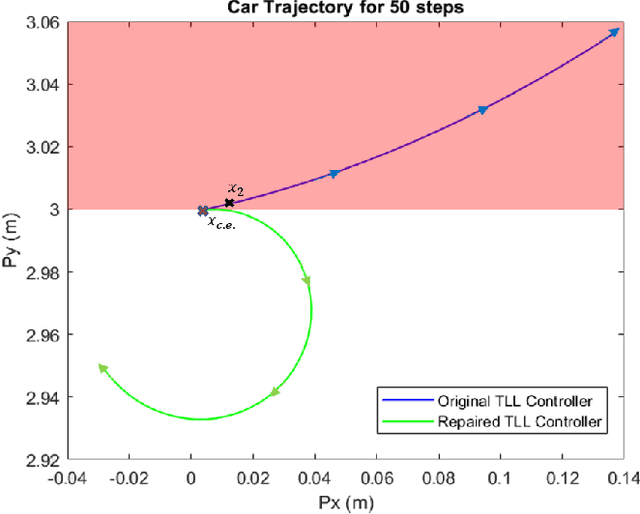

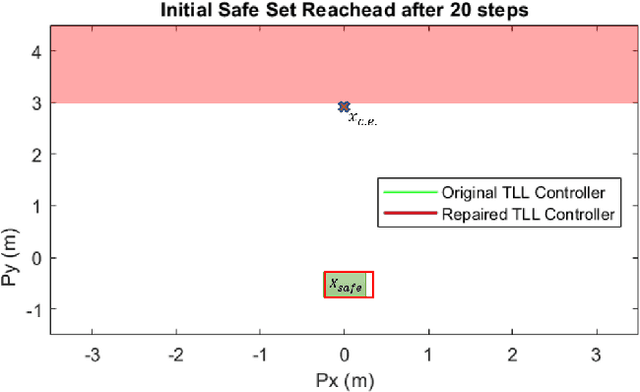

Abstract:In this paper, we consider the problem of repairing a data-trained Rectified Linear Unit (ReLU) Neural Network (NN) controller for a discrete-time, input-affine system. That is, we assume that such a NN controller is available, and we seek to repair unsafe closed-loop behavior at one known "counterexample" state while simultaneously preserving a notion of safe closed-loop behavior on a separate, verified set of states. To this end, we further assume that the NN controller has a Two-Level Lattice (TLL) architecture, and exhibit an algorithm that can systematically and efficiently repair such a network. Facilitated by this choice, our approach uses the unique semantics of the TLL architecture to divide the repair problem into two significantly decoupled sub-problems, one of which is concerned with repairing the unsafe counterexample -- and hence is essentially of local scope -- and the other of which ensures that the repairs are realized in the output of the network -- and hence is essentially of global scope. We then show that one set of sufficient conditions for solving each of these sub-problems can be cast as a convex feasibility problem, and this allows us to formulate the TLL repair problem as two separate, but significantly decoupled, convex optimization problems. Finally, we evaluate our algorithm on a TLL controller on a simple dynamical model of a four-wheel car.
