Tomer Peleg

Make the Most Out of Your Net: Alternating Between Canonical and Hard Datasets for Improved Image Demosaicing

Mar 28, 2023

Abstract:Image demosaicing is an important step in the image processing pipeline for digital cameras, and it is one of the many tasks within the field of image restoration. A well-known characteristic of natural images is that most patches are smooth, while high-content patches such as textures or repetitive patterns are much rarer, which results in a long-tailed distribution. This distribution can create an inductive bias when training machine learning algorithms for image restoration tasks, and for image demosaicing in particular. There have been many different approaches to address this challenge, such as utilizing specific losses or designing special network architectures. Our work is unique in that it tackles the problem from a training protocol perspective. Our proposed training regime consists of two key steps. The first step is a data-mining stage where sub-categories are created and then refined through an elimination process to retain only the most helpful sub-categories. The second step is a cyclic training process where the neural network is trained on both the mined sub-categories and the original dataset. We have conducted various experiments to demonstrate the effectiveness of our training method for the image demosaicing task. Our results show that this method outperforms standard training across a range of architecture sizes and types, including CNNs and Transformers. Moreover, we are able to achieve state-of-the-art results with a significantly smaller neural network compared to previous state-of-the-art methods.
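The cyclic training step described in the abstract can be pictured as a schedule that alternates between the mined sub-categories and the original dataset. The following is a minimal sketch only: the function name `alternating_schedule`, the dataset labels, and the specific ordering (one hard sub-category followed by one pass on the original data) are assumptions made for illustration, not details taken from the paper.

```python
def alternating_schedule(canonical, hard_subsets, num_cycles):
    """Build a list of training phases that alternates between each
    mined (hard) sub-category and the original (canonical) dataset.

    The ordering here is an assumption for illustration; the abstract
    only states that training cycles over both kinds of data.
    """
    schedule = []
    for _ in range(num_cycles):
        for hard in hard_subsets:
            schedule.append(hard)       # phase on a mined sub-category
            schedule.append(canonical)  # phase on the original dataset
    return schedule


# Hypothetical dataset labels; in practice these would be data loaders.
schedule = alternating_schedule("canonical", ["hard_a", "hard_b"], num_cycles=2)
for phase_index, dataset_name in enumerate(schedule):
    print(phase_index, dataset_name)
```

In a real training loop, each entry of the schedule would select the data loader used for that phase, while the network weights carry over from one phase to the next.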

One scalar is all you need -- absolute depth estimation using monocular self-supervision

Mar 15, 2023

Abstract:Self-supervised monocular depth estimators can be trained or fine-tuned on new scenes using only images and no ground-truth depth data, achieving good accuracy. However, these estimators suffer from the inherent ambiguity of the depth scale, which significantly limits their applicability. In this work, we present a method for transferring the depth scale from existing source datasets collected with ground-truth depths to depth estimators that are trained using self-supervision on a newly collected target dataset consisting of images only, thereby removing a significant limiting factor. We show that self-supervision based on projective geometry results in predicted depths that are linearly correlated with their ground-truth depths. Moreover, the linearity of this relationship also holds when jointly training on images from two different (real or synthetic) source and target domains. We utilize this observed property and model the relationship between the ground-truth and the predicted up-to-scale depths of images from the source domain using a single global scalar. Then, we scale the predicted up-to-scale depths of images from the target domain using the estimated global scaling factor, performing depth-scale transfer between the two domains. The method was evaluated on the target KITTI and DDAD datasets, using other real or synthetic source datasets that have a larger field-of-view or a different image style or structural content. Our approach achieves competitive accuracy on KITTI, even without using the specially tailored vKITTI or vKITTI2 datasets, and higher accuracy on DDAD, when using either real or synthetic source datasets.
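The depth-scale transfer described above amounts to fitting one scalar on the source domain and reusing it on the target domain. The sketch below illustrates the idea with a least-squares fit through the origin; the choice of estimator, the function names `fit_global_scale` and `apply_scale`, and the toy numbers are all assumptions for illustration, since the abstract does not say how the scalar is estimated.

```python
def fit_global_scale(pred_depths, gt_depths):
    """Return the scalar s minimizing sum((s * pred - gt)^2).

    Least squares through the origin is an assumed estimator; the
    abstract only states that a single global scalar is used.
    """
    numerator = sum(p * g for p, g in zip(pred_depths, gt_depths))
    denominator = sum(p * p for p in pred_depths)
    if denominator == 0:
        raise ValueError("predicted depths must not all be zero")
    return numerator / denominator


def apply_scale(pred_depths, scale):
    """Convert up-to-scale predictions into absolute depths."""
    return [scale * p for p in pred_depths]


# Toy source-domain data: predictions are up-to-scale, ground truth is metric.
source_pred = [0.5, 1.0, 2.0, 4.0]
source_gt = [1.5, 3.0, 6.0, 12.0]
scale = fit_global_scale(source_pred, source_gt)

# Target-domain predictions have no ground truth; reuse the source scalar.
target_pred = [0.25, 1.5]
target_abs = apply_scale(target_pred, scale)
print(scale, target_abs)
```

This only works because of the property stated in the abstract: the predicted and ground-truth depths are linearly related, and that relation carries over when source and target images are trained jointly.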

You Better Look Twice: a new perspective for designing accurate detectors with reduced computations

Aug 03, 2021

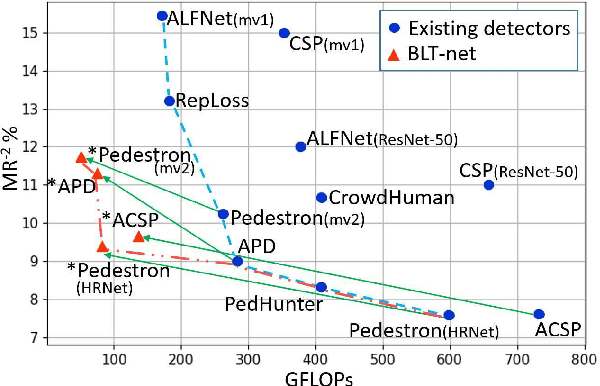

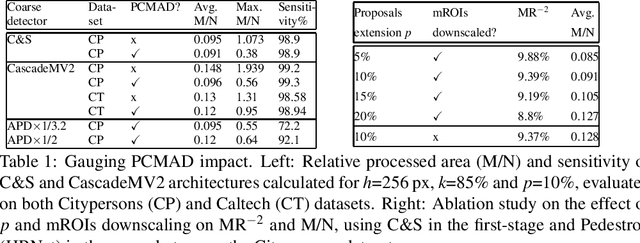

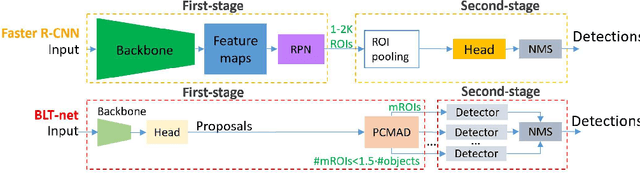

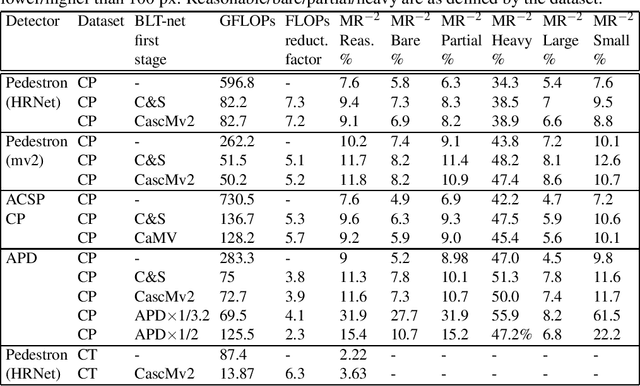

Abstract:General object detectors use powerful backbones that uniformly extract features from images to enable detection of a large number of object types. However, using such backbones in object detection applications developed for specific object types can unnecessarily over-process an extensive amount of background. In addition, they are agnostic to object scales and thus redundantly process all image regions at the same resolution. In this work we introduce BLT-net, a new low-computation two-stage object detection architecture designed to process images with a significant amount of background and objects of varying scales. BLT-net reduces computations by separating objects from background using a very light first stage. BLT-net then efficiently merges the obtained proposals to further decrease the processed background, and dynamically reduces their resolution to minimize computations. The resulting image proposals are then processed in the second stage by a highly accurate model. We demonstrate our architecture on the pedestrian detection problem, where objects are of different sizes, images are of high resolution, and object detection is required to run in real time. We show that our design reduces computations by a factor of 4x to 7x on the Citypersons and Caltech datasets with respect to leading pedestrian detectors, at the cost of a small accuracy degradation. This method can be applied to other object detection applications in scenes with a considerable amount of background and varying object sizes to reduce computations.
