Tobias Reitmaier

Semi-Supervised Active Learning for Support Vector Machines: A Novel Approach that Exploits Structure Information in Data

Oct 14, 2016

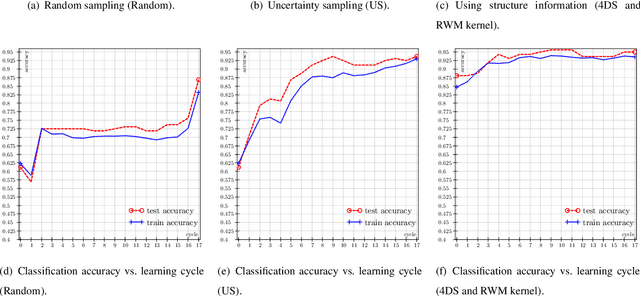

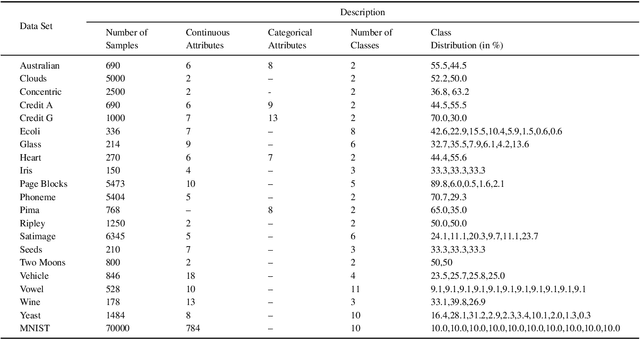

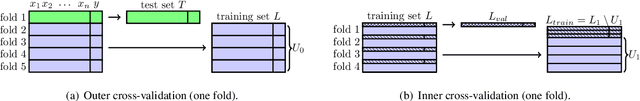

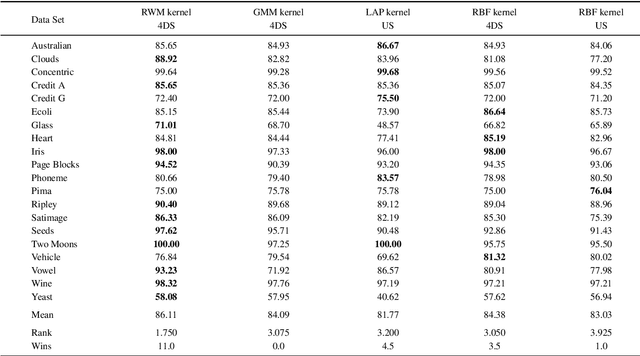

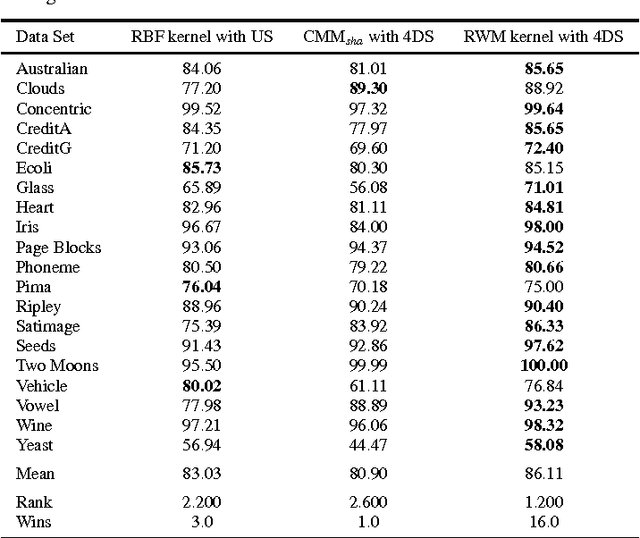

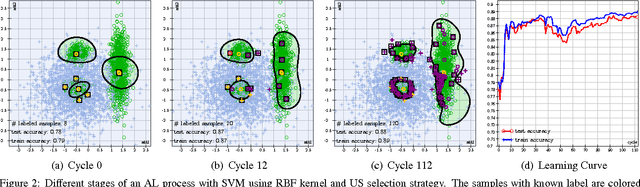

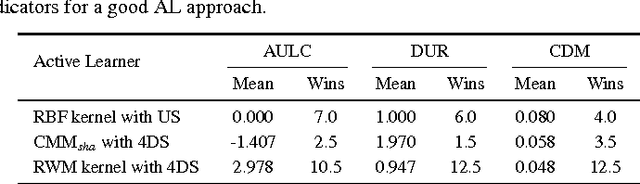

Abstract: In today's information society, more and more data emerge, e.g., in social networks, technical applications, or business applications. Companies try to commercialize these data using data mining or machine learning methods. For this purpose, the data are categorized or classified, but often at high (monetary or temporal) cost. An effective way to reduce these costs is to apply active learning (AL) methods, as AL controls the training process of a classifier by specifically querying individual data points (samples), which are then labeled (e.g., provided with class memberships) by a domain expert. However, an analysis of current AL research shows that AL still has some shortcomings. In particular, the structure information given by the spatial pattern of the (un)labeled data in the input space of a classification model (e.g., cluster information) is used insufficiently. In addition, many existing AL techniques pay too little attention to their practical applicability. To meet these challenges, this article presents several techniques that together form a new approach for combining AL and semi-supervised learning (SSL) for support vector machines (SVM) in classification tasks. Structure information is captured by means of probabilistic models that are iteratively improved at runtime as label information becomes available. The probabilistic models are used in a selection strategy for AL based on distance, density, diversity, and distribution information (4DS strategy) and in a kernel function for SVM (Responsibility Weighted Mahalanobis kernel). The approach fuses generative and discriminative modeling techniques. With 20 benchmark data sets and the MNIST data set, it is shown that our new solution yields significantly better results than state-of-the-art methods.

A New Vision of Collaborative Active Learning

Dec 22, 2015

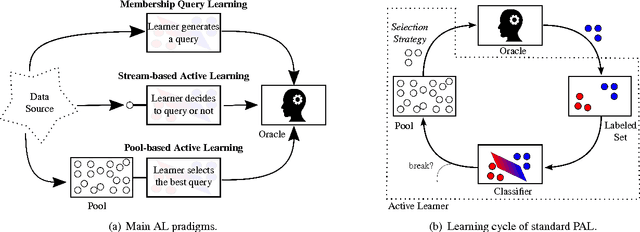

Abstract: Active learning (AL) is a learning paradigm in which an active learner has to train a model (e.g., a classifier) that is in principle trained in a supervised way, but in AL this has to be done with a data set of initially unlabeled samples. To get labels for these samples, the active learner has to ask an oracle (e.g., a human expert) for labels. The goal is to maximize the performance of the model and to minimize the number of queries at the same time. In this article, we first briefly discuss the state of the art and our own preliminary work in the field of AL. Then, we propose the concept of collaborative active learning (CAL). With CAL, we will overcome some of the harsh limitations of current AL. In particular, we envision scenarios where an expert may be wrong for various reasons, there might be several or even many experts with different expertise, the experts may label not only samples but also knowledge at a higher level such as rules, and the labeling costs depend on many conditions. Moreover, in a CAL process, human experts will also profit by improving their own knowledge.
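
The query loop described in this abstract can be illustrated with a minimal pool-based sketch. It is not the method of the article: the nearest-centroid model, the margin-based uncertainty criterion, and the names `fit_centroids`, `margin`, `active_learning`, and `oracle` are all assumptions chosen for brevity. The sketch also assumes that the initially labeled samples cover at least two classes.

```python
import numpy as np

def fit_centroids(X, y):
    """Toy stand-in for a classifier: one centroid per class (assumption)."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids

def margin(X, centroids):
    """Gap between the two nearest centroids; a small gap means high uncertainty."""
    dist = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    dist.sort(axis=1)
    return dist[:, 1] - dist[:, 0]

def active_learning(X_pool, oracle, initial_idx, n_queries):
    """Repeatedly query the oracle for the label of the most uncertain sample."""
    labeled = list(initial_idx)
    labels = {i: oracle(i) for i in labeled}  # initial labels also come from the oracle
    for _ in range(n_queries):
        y = np.array([labels[i] for i in labeled])
        _, centroids = fit_centroids(X_pool[labeled], y)
        unlabeled = np.setdiff1d(np.arange(len(X_pool)), labeled)
        if len(unlabeled) == 0:
            break  # the pool is exhausted
        scores = margin(X_pool[unlabeled], centroids)
        query = int(unlabeled[np.argmin(scores)])
        labels[query] = oracle(query)  # one query to the (hypothetical) expert
        labeled.append(query)
    return labeled, labels
```

Here `oracle` is any callable that maps a pool index to a label, for example a lookup into held-back ground truth during a simulation. The scenarios envisioned for CAL (fallible experts, several experts, variable labeling costs) would replace this single, always-correct callable.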

The Responsibility Weighted Mahalanobis Kernel for Semi-Supervised Training of Support Vector Machines for Classification

Feb 16, 2015

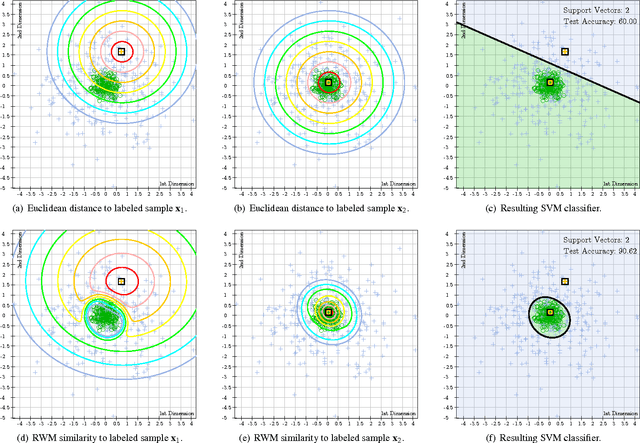

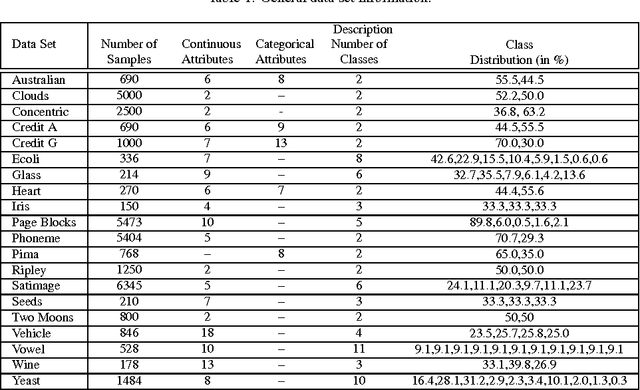

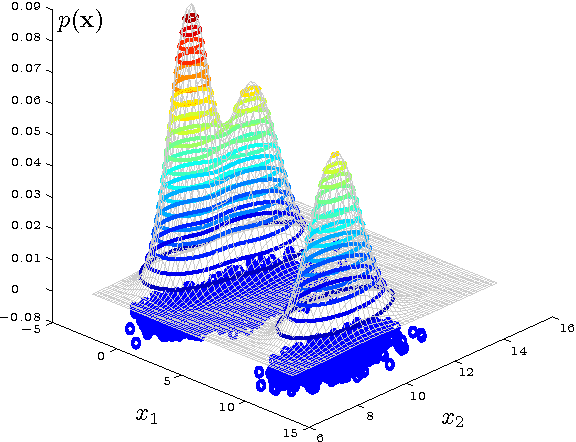

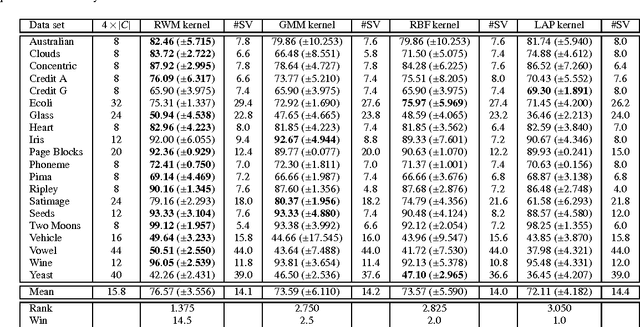

Abstract: Kernel functions in support vector machines (SVM) are needed to assess the similarity of input samples, for instance in order to classify these samples. Besides standard kernels such as Gaussian (i.e., radial basis function, RBF) or polynomial kernels, there are also specific kernels tailored to consider structure in the data for similarity assessment. In this article, we capture structure in data by means of probabilistic mixture density models, for example Gaussian mixtures in the case of real-valued input spaces. From the distance measures that are inherently contained in these models, e.g., Mahalanobis distances in the case of Gaussian mixtures, we derive a new kernel, the responsibility weighted Mahalanobis (RWM) kernel. Basically, this kernel emphasizes the influence of the model components from which any two compared samples are assumed to originate (that is, the "responsible" model components). We will see that this kernel outperforms the RBF kernel and other kernels capturing structure in data (such as the LAP kernel in Laplacian SVM) in many applications where partially labeled data are available, i.e., for semi-supervised training of SVM. Other key advantages are that the RWM kernel can easily be used with standard SVM implementations and training algorithms such as sequential minimal optimization, and that heuristics known for the parametrization of RBF kernels in a C-SVM can easily be transferred to this new kernel. Properties of the RWM kernel are demonstrated with 20 benchmark data sets and an increasing percentage of labeled samples in the training data.
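
The idea of weighting Mahalanobis distances by component responsibilities can be sketched as follows. The abstract does not state the kernel formula, so this is only one plausible form under stated assumptions: the squared distance is taken as the sum over components of the averaged responsibilities of both samples times the squared Mahalanobis distance of that component, and the kernel is an RBF-like exponential of that distance. The exact definition is given in the article and may differ. The function names and the `sigma` parameter are hypothetical, and the mixture parameters (`weights`, `means`, `covs`) are assumed to come from a previously fitted Gaussian mixture.

```python
import numpy as np

def responsibilities(X, weights, means, covs):
    """Posterior probability of each Gaussian component for each sample."""
    n, d = X.shape
    dens = np.empty((n, len(weights)))
    for k, (w, mu, cov) in enumerate(zip(weights, means, covs)):
        diff = X - mu
        maha = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
        norm = np.sqrt((2 * np.pi) ** d * np.linalg.det(cov))
        dens[:, k] = w * np.exp(-0.5 * maha) / norm
    # Note: samples very far from all components can underflow to 0 here.
    return dens / dens.sum(axis=1, keepdims=True)

def rwm_kernel(A, B, weights, means, covs, sigma=1.0):
    """Gram matrix of an assumed RWM-style kernel between rows of A and B."""
    r_a = responsibilities(A, weights, means, covs)
    r_b = responsibilities(B, weights, means, covs)
    diff = A[:, None, :] - B[None, :, :]  # shape (len(A), len(B), d)
    dist2 = np.zeros((len(A), len(B)))
    for k, cov in enumerate(covs):
        # Squared Mahalanobis distance under the covariance of component k.
        maha = np.einsum("abi,ij,abj->ab", diff, np.linalg.inv(cov), diff)
        # Weight by the mean responsibility of component k for both samples.
        dist2 += 0.5 * (r_a[:, k][:, None] + r_b[:, k][None, :]) * maha
    return np.exp(-dist2 / (2.0 * sigma ** 2))
```

Under these assumptions, the kernel reduces to the ordinary RBF kernel when every component has an identity covariance, which is consistent with the statement that RBF parametrization heuristics transfer to it. A Gram matrix computed this way can be passed to an SVM implementation that accepts precomputed kernels.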
