Toan N. Nguyen

Batch Clipping and Adaptive Layerwise Clipping for Differential Private Stochastic Gradient Descent

Jul 21, 2023

Abstract:Each round in Differential Private Stochastic Gradient Descent (DPSGD) transmits a sum of clipped gradients obfuscated with Gaussian noise to a central server, which uses it to update a global model that often represents a deep neural network. Since the clipped gradients are computed separately, which we call Individual Clipping (IC), deep neural networks like resnet-18 cannot use Batch Normalization Layers (BNL), which are a crucial component in deep neural networks for achieving high accuracy. To utilize BNL, we introduce Batch Clipping (BC) where, instead of clipping single gradients as in the original DPSGD, we average and clip batches of gradients. Moreover, the model entries of different layers have different sensitivities to the added Gaussian noise. Therefore, Adaptive Layerwise Clipping methods (ALC), where each layer has its own adaptively finetuned clipping constant, have been introduced and studied, but so far without rigorous DP proofs. In this paper, we propose a new ALC and provide rigorous DP proofs for both BC and ALC. Experiments show that our modified DPSGD with BC and ALC for CIFAR-10 with resnet-18 converges while DPSGD with IC and ALC does not.
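The difference between individual clipping and batch clipping described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the L2-norm clipping rule, and the noise scale `sigma * c` are assumptions borrowed from the common DP-SGD formulation.

```python
import numpy as np

def clip(v, c):
    """Scale v so that its L2 norm is at most c (assumed clipping rule)."""
    norm = np.linalg.norm(v)
    return v * min(1.0, c / norm) if norm > 0 else v

def individual_clipping(grads, c):
    """IC: clip every per-example gradient separately, then sum."""
    return sum(clip(g, c) for g in grads)

def batch_clipping(grads, c):
    """BC: average the batch of gradients first, then clip once."""
    return clip(np.mean(grads, axis=0), c)

def add_gaussian_noise(v, c, sigma, rng):
    """Obfuscate an update with Gaussian noise (scale sigma * c is an assumption)."""
    return v + rng.normal(0.0, sigma * c, size=v.shape)

# Two hypothetical per-example gradients, clipping constant c = 1.
grads = np.array([[3.0, 4.0], [-3.0, 4.0]])
ic_update = individual_clipping(grads, c=1.0)
bc_update = batch_clipping(grads, c=1.0)
noisy = add_gaussian_noise(bc_update, c=1.0, sigma=1.0, rng=np.random.default_rng(0))
```

Because BC clips a single aggregate, the gradients inside a batch may be computed jointly, which is what lets batch normalization be used.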

Generalizing DP-SGD with Shuffling and Batching Clipping

Dec 12, 2022

Abstract:Classical differentially private DP-SGD implements individual clipping with random subsampling, which forces a mini-batch SGD approach. We provide a general differentially private algorithmic framework that goes beyond DP-SGD and allows any first order optimizer (e.g., classical SGD and momentum based SGD approaches) in combination with batch clipping, which clips an aggregate of computed gradients rather than summing clipped gradients (as is done in individual clipping). The framework also admits sampling techniques beyond random subsampling, such as shuffling. Our DP analysis follows the $f$-DP approach and introduces a new proof technique which allows us to also analyse group privacy. In particular, for $E$ epochs of work and groups of size $g$, we show a $\sqrt{g E}$ DP dependency for batch clipping with shuffling. This is much better than the previously anticipated linear dependency in $g$ and is much better than the previously expected square root dependency on the total number of rounds within $E$ epochs, which is generally much more than $\sqrt{E}$.
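As a rough illustration of shuffling as a sampling technique, the sketch below partitions a fresh random permutation of the data indices into consecutive batches in every epoch, so each example is used exactly once per epoch. The function name and parameters are hypothetical and not taken from the paper.

```python
import numpy as np

def shuffled_batches(n, batch_size, epochs, seed=0):
    """Yield index batches: one fresh permutation of range(n) per epoch."""
    rng = np.random.default_rng(seed)
    for _ in range(epochs):
        perm = rng.permutation(n)
        for start in range(0, n, batch_size):
            # The last batch of an epoch may be smaller than batch_size.
            yield perm[start:start + batch_size]

batches = list(shuffled_batches(n=10, batch_size=4, epochs=2))
```

Under random subsampling, by contrast, an example may appear several times or not at all within the same number of rounds.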

Differential Private Hogwild! over Distributed Local Data Sets

Feb 17, 2021

Abstract:We consider the Hogwild! setting where clients use local SGD iterations with Gaussian-based Differential Privacy (DP) for their own local data sets with the aim of (1) jointly converging to a global model (by interacting on a round-to-round basis with a centralized server that aggregates local SGD updates into a global model) while (2) keeping each local data set differentially private with respect to the outside world (this includes all other clients who can monitor client-server interactions). We show for a broad class of sample size sequences (which define the number of local SGD iterations for each round) that a local data set is $(\epsilon,\delta)$-DP if the standard deviation $\sigma$ of the added Gaussian noise per round interaction with the centralized server is at least $\sqrt{2(\epsilon+ \ln(1/\delta))/\epsilon}$.
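The lower bound on $\sigma$ stated in the abstract can be evaluated directly. The helper below is only a convenience sketch: its name and input checks are assumptions, and it does not encode the conditions on sample size sequences under which the paper's result holds.

```python
import math

def min_sigma(epsilon, delta):
    """Evaluate sqrt(2 * (epsilon + ln(1/delta)) / epsilon) from the abstract."""
    if epsilon <= 0 or not (0 < delta < 1):
        raise ValueError("need epsilon > 0 and 0 < delta < 1")
    return math.sqrt(2.0 * (epsilon + math.log(1.0 / delta)) / epsilon)

# Hypothetical privacy budget, chosen only for illustration.
sigma = min_sigma(epsilon=1.0, delta=1e-5)
```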

Hogwild! over Distributed Local Data Sets with Linearly Increasing Mini-Batch Sizes

Oct 27, 2020

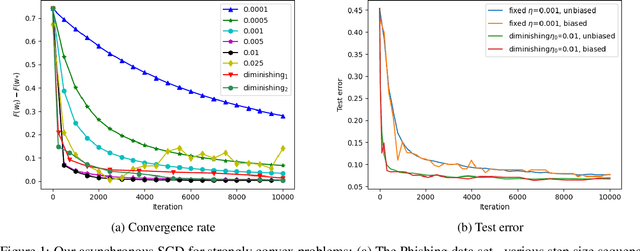

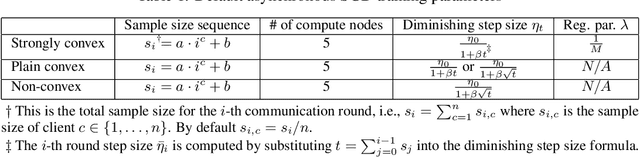

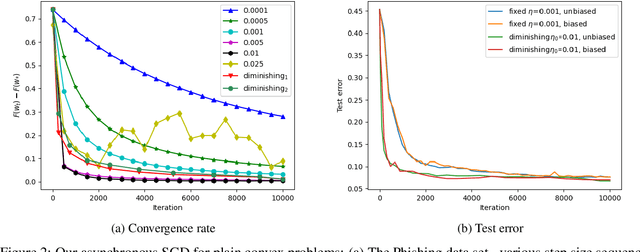

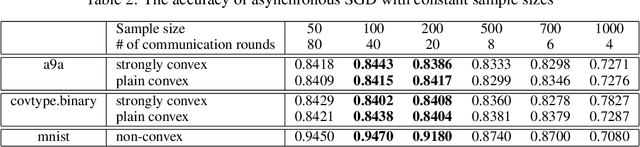

Abstract:Hogwild! implements asynchronous Stochastic Gradient Descent (SGD) where multiple threads in parallel access a common repository containing training data, perform SGD iterations and update shared state that represents a jointly learned (global) model. We consider big data analysis where training data is distributed among local data sets -- and we wish to move SGD computations to local compute nodes where local data resides. The results of these local SGD computations are aggregated by a central "aggregator" which mimics Hogwild!. We show how local compute nodes can start choosing small mini-batch sizes which increase to larger ones in order to reduce communication cost (round interaction with the aggregator). We prove a tight and novel non-trivial convergence analysis for strongly convex problems which does not use the bounded gradient assumption as seen in many existing publications. The tightness is a consequence of our proofs for lower and upper bounds of the convergence rate, which show a constant factor difference. We show experimental results for plain convex and non-convex problems for biased and unbiased local data sets.
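To see why increasing mini-batch sizes reduce communication, the sketch below counts how many rounds of interaction with the aggregator are needed to complete a fixed number of local SGD iterations. The concrete sequences (constant 10 versus 10 + 10i) and the function names are illustrative assumptions, not the schedule analysed in the paper.

```python
def rounds_needed(total_iters, size_of_round):
    """Count rounds until the cumulative number of SGD iterations reaches total_iters."""
    done, rounds = 0, 0
    while done < total_iters:
        size = size_of_round(rounds)
        if size <= 0:
            raise ValueError("round sizes must be positive")
        done += size
        rounds += 1
    return rounds

def constant_size(i):
    """Every round runs 10 local iterations (hypothetical baseline)."""
    return 10

def linear_size(i):
    """Round i runs 10 + 10*i local iterations (hypothetical linear increase)."""
    return 10 + 10 * i

constant_rounds = rounds_needed(1000, constant_size)
linear_rounds = rounds_needed(1000, linear_size)
```

With these example sequences, the linearly increasing schedule reaches 1000 iterations in far fewer rounds than the constant one, since the cumulative iteration count grows quadratically in the number of rounds.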

Asynchronous Federated Learning with Reduced Number of Rounds and with Differential Privacy from Less Aggregated Gaussian Noise

Jul 17, 2020

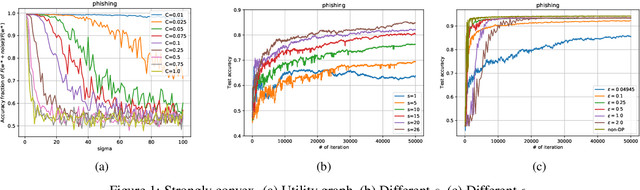

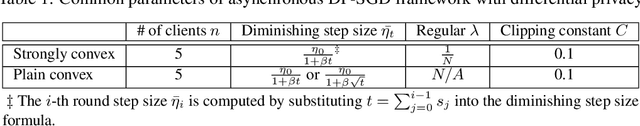

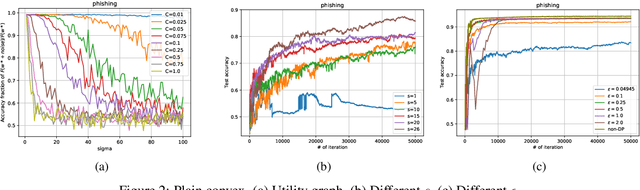

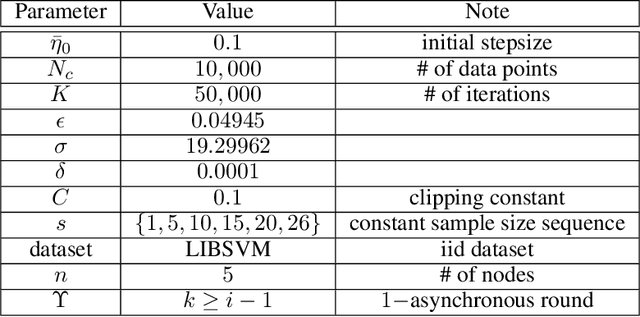

Abstract:The feasibility of federated learning is highly constrained by the server-clients infrastructure in terms of network communication. Most newly launched smartphones and IoT devices are equipped with GPUs or sufficient computing hardware to run powerful AI models. However, in the case of the original synchronous federated learning, client devices suffer waiting times, and regular communication between clients and server is required. This implies more sensitivity to local model training times and to irregular or missed updates; hence, scalability to large numbers of clients is limited and convergence rates measured in real time will suffer. We propose a new algorithm for asynchronous federated learning which eliminates waiting times and reduces overall network communication; we provide rigorous theoretical analysis for strongly convex objective functions and provide simulation results. By adding Gaussian noise we show how our algorithm can be made differentially private; new theorems show how the aggregated added Gaussian noise is significantly reduced.
