Till Mossakowski

Advancing Natural Language Formalization to First Order Logic with Fine-tuned LLMs

Sep 26, 2025

Abstract:Automating the translation of natural language to first-order logic (FOL) is crucial for knowledge representation and formal methods, yet remains challenging. We present a systematic evaluation of fine-tuned LLMs for this task, comparing architectures (encoder-decoder vs. decoder-only) and training strategies. Using the MALLS and Willow datasets, we explore techniques like vocabulary extension, predicate conditioning, and multilingual training, introducing metrics for exact match, logical equivalence, and predicate alignment. Our fine-tuned Flan-T5-XXL achieves 70% accuracy with predicate lists, outperforming GPT-4o and even the DeepSeek-R1-0528 model with CoT reasoning ability as well as symbolic systems like ccg2lambda. Key findings show: (1) predicate availability boosts performance by 15-20%, (2) T5 models surpass larger decoder-only LLMs, and (3) models generalize to unseen logical arguments (FOLIO dataset) without specific training. While structural logic translation proves robust, predicate extraction emerges as the main bottleneck.
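To make the evaluation metrics named in the abstract more concrete, the following is a minimal sketch of an exact-match check and a predicate-overlap score. The abstract does not define the metrics, so this is only one plausible reading: the function names, the Jaccard-style overlap, and the convention that predicate symbols start with an uppercase letter are all assumptions for illustration. Logical equivalence is left out because it would need a theorem prover.

```python
import re


def predicates(formula: str) -> set[str]:
    """Collect symbols that start with an uppercase letter and are
    immediately followed by '(' (assumed naming convention)."""
    return set(re.findall(r"\b([A-Z]\w*)\(", formula))


def exact_match(predicted: str, gold: str) -> bool:
    """Compare two formulas as strings, ignoring all whitespace."""
    def normalise(s: str) -> str:
        return "".join(s.split())
    return normalise(predicted) == normalise(gold)


def predicate_overlap(predicted: str, gold: str) -> float:
    """Jaccard overlap of the predicate symbols used in both formulas
    (a hypothetical stand-in for a predicate alignment score)."""
    p, g = predicates(predicted), predicates(gold)
    if not p and not g:
        return 1.0
    return len(p & g) / len(p | g)


gold = "∀x (Dog(x) → Animal(x))"
predicted = "∀x (Dog(x) → Mammal(x))"
print(exact_match(predicted, gold))
print(predicate_overlap(predicted, gold))
```

In this example the structure of the translation is right and only one predicate name differs, which is the kind of error the abstract attributes to predicate extraction rather than to structural translation.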

A semantic loss for ontology classification

May 03, 2024

Abstract:Deep learning models are often unaware of the inherent constraints of the task they are applied to. However, many downstream tasks require logical consistency. For ontology classification tasks, such constraints include subsumption and disjointness relations between classes. In order to increase the consistency of deep learning models, we propose a semantic loss that combines label-based loss with terms penalising subsumption- or disjointness-violations. Our evaluation on the ChEBI ontology shows that the semantic loss is able to decrease the number of consistency violations by several orders of magnitude without decreasing the classification performance. In addition, we use the semantic loss for unsupervised learning. We show that this can further improve consistency on data from a distribution outside the scope of the supervised training.
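The idea of combining a label-based loss with penalty terms for constraint violations can be sketched as follows. The abstract does not give the exact formulation, so the penalty shapes here are assumptions: a subsumption A ⊑ B is penalised by how far the predicted probability of A exceeds that of B, and a disjointness between A and B by how far the two probabilities together exceed 1. The function names and the `weight` parameter are hypothetical.

```python
import math


def bce(probs, labels):
    """Mean binary cross-entropy over all classes (the label-based term)."""
    eps = 1e-12
    return -sum(
        y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
        for p, y in zip(probs, labels)
    ) / len(probs)


def semantic_loss(probs, labels, subsumptions, disjoint_pairs, weight=1.0):
    """Label loss plus penalties for violated ontology constraints.

    subsumptions:   pairs (a, b) of class indices meaning "a is a subclass of b"
    disjoint_pairs: pairs (a, b) of class indices that must not both hold
    """
    label_term = bce(probs, labels)
    # A subclass should never be predicted more strongly than its superclass.
    sub_term = sum(max(0.0, probs[a] - probs[b]) for a, b in subsumptions)
    # Two disjoint classes should not both be predicted with high probability.
    dis_term = sum(max(0.0, probs[a] + probs[b] - 1.0) for a, b in disjoint_pairs)
    return label_term + weight * (sub_term + dis_term)


labels = [1, 1, 0]
consistent = [0.2, 0.9, 0.05]   # respects both constraints below
violating = [0.9, 0.2, 0.8]     # breaks both constraints below
constraints = dict(subsumptions=[(0, 1)], disjoint_pairs=[(0, 2)])
print(semantic_loss(consistent, labels, **constraints) - bce(consistent, labels))
print(semantic_loss(violating, labels, **constraints) - bce(violating, labels))
```

Because the penalty terms depend only on the predictions and not on the labels, they can in principle also be evaluated on unlabelled data, which is consistent with the unsupervised use mentioned in the abstract.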

Ontology Pre-training for Poison Prediction

Jan 20, 2023

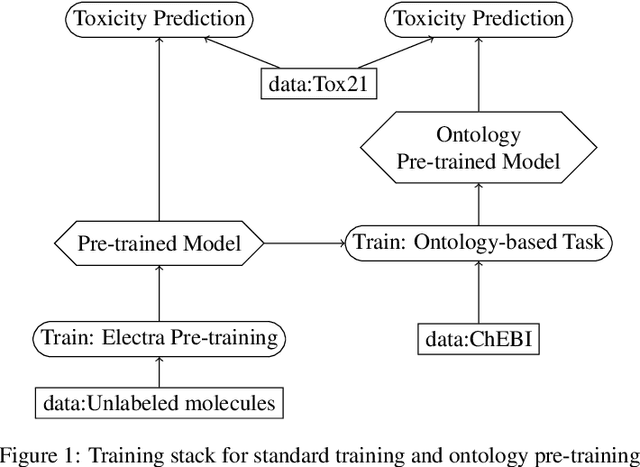

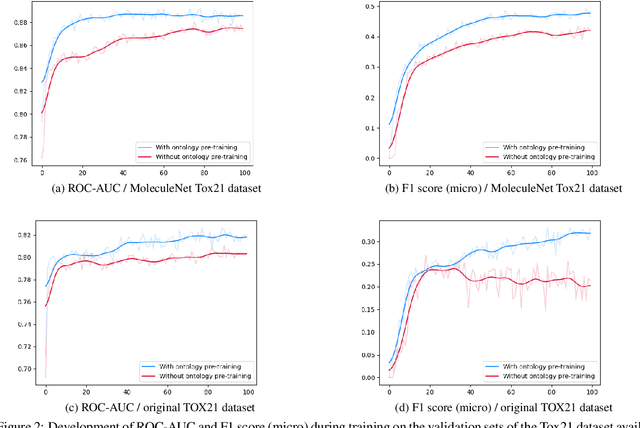

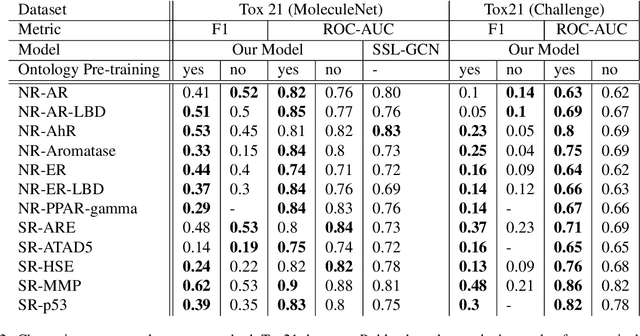

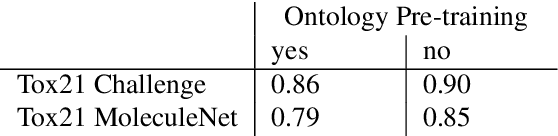

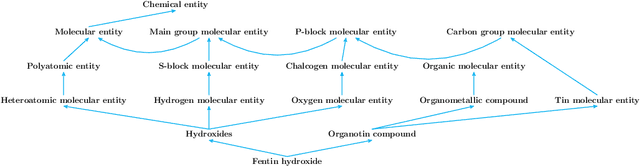

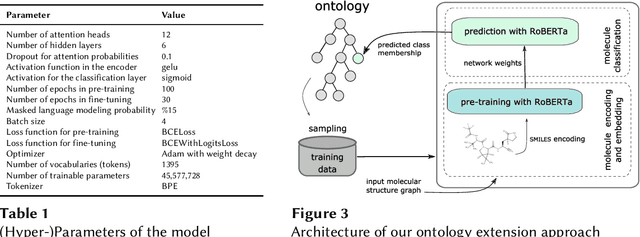

Abstract:Integrating human knowledge into neural networks has the potential to improve their robustness and interpretability. We have developed a novel approach to integrate knowledge from ontologies into the structure of a Transformer network which we call ontology pre-training: we train the network to predict membership in ontology classes as a way to embed the structure of the ontology into the network, and subsequently fine-tune the network for the particular prediction task. We apply this approach to a case study in predicting the potential toxicity of a small molecule based on its molecular structure, a challenging task for machine learning in life sciences chemistry. Our approach improves on the state of the art, and moreover has several additional benefits. First, we are able to show that the model learns to focus attention on more meaningful chemical groups when making predictions with ontology pre-training than without, paving a path towards greater robustness and interpretability. Second, the training time is reduced after ontology pre-training, indicating that the model is better placed to learn what matters for toxicity prediction with the ontology pre-training than without. This strategy has general applicability as a neuro-symbolic approach to embed meaningful semantics into neural networks.

Modular design patterns for neural-symbolic integration: refinement and combination

Jun 09, 2022

Abstract:We formalise some aspects of the neural-symbolic design patterns of van Bekkum et al., such that we can formally define notions of refinement of patterns, as well as modular combination of larger patterns from smaller building blocks. These formal notions are being implemented in the Heterogeneous Tool Set (Hets), such that patterns and refinements can be checked for well-formedness, and combinations can be computed.

Automated and Explainable Ontology Extension Based on Deep Learning: A Case Study in the Chemical Domain

Sep 19, 2021

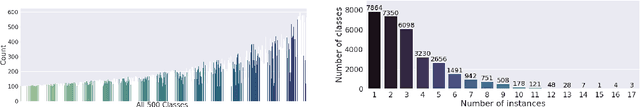

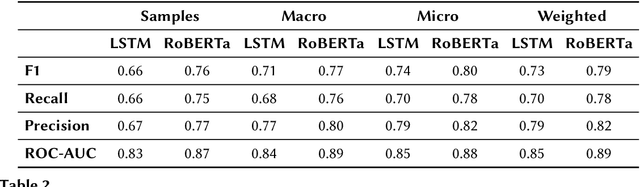

Abstract:Reference ontologies provide a shared vocabulary and knowledge resource for their domain. Manual construction enables them to maintain a high quality, allowing them to be widely accepted across their community. However, the manual development process does not scale for large domains. We present a new methodology for automatic ontology extension and apply it to the ChEBI ontology, a prominent reference ontology for life sciences chemistry. We trained a Transformer-based deep learning model on the leaf node structures from the ChEBI ontology and the classes to which they belong. The model is then capable of automatically classifying previously unseen chemical structures. The proposed model achieved an overall F1 score of 0.80, an improvement of 6 percentage points over our previous results on the same dataset. Additionally, we demonstrate how visualizing the model's attention weights can help to explain the results by providing insight into how the model made its decisions.

Generic Ontology Design Patterns: Roles and Change over Time

Nov 18, 2020

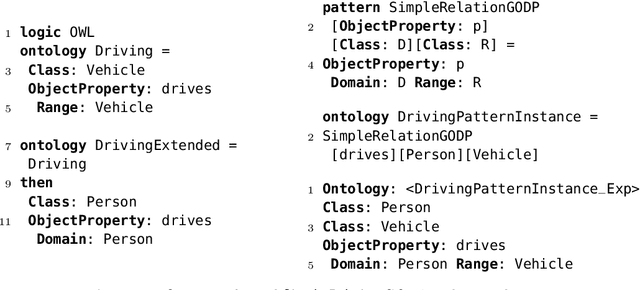

Abstract:In this chapter we propose Generic Ontology Design Patterns, GODPs, as a methodology for representing and instantiating ontology design patterns in a way that is adaptable, and allows domain experts (and other users) to safely use them without cluttering their ontologies.

Generic Ontology Design Patterns at Work

Jun 20, 2019

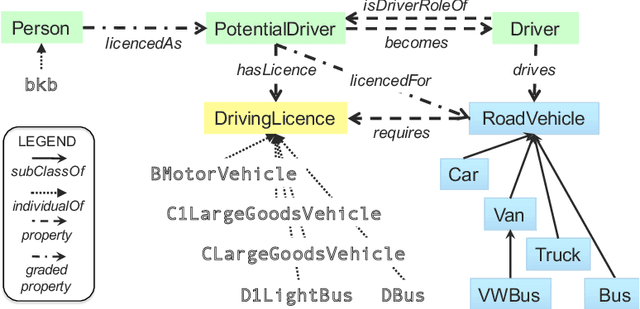

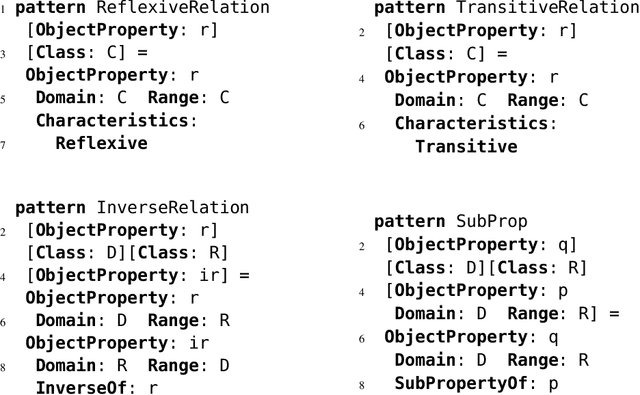

Abstract:Generic Ontology Design Patterns, GODPs, are defined in Generic DOL, an extension of DOL, the Distributed Ontology, Model and Specification Language, and implemented using the Heterogeneous Tool Set (Hets). Parameters such as classes, properties, individuals, or whole ontologies may be instantiated with arguments in a host ontology. The potential of Generic DOL is illustrated with GODPs for an example from the literature, namely the Role design pattern. We also discuss how larger GODPs may be composed by instantiating smaller GODPs.

Extensions of Generic DOL for Generic Ontology Design Patterns

Jun 14, 2019

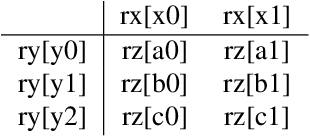

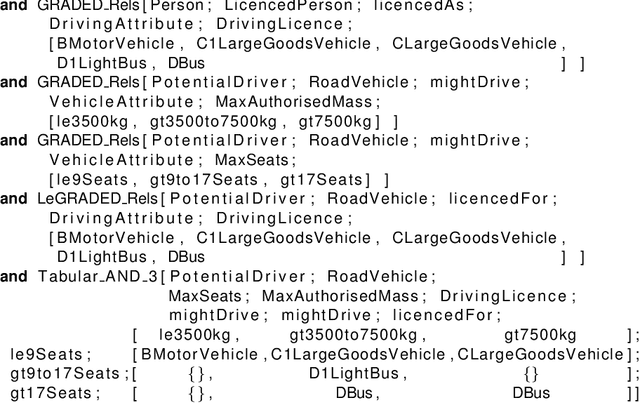

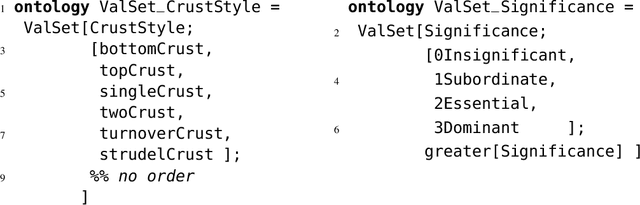

Abstract:Generic ontologies were introduced as an extension (Generic DOL) of the Distributed Ontology, Model and Specification Language, DOL, with the aim of providing a language for Generic Ontology Design Patterns. In this paper we present a number of new language constructs that increase the expressivity and the generality of Generic DOL, among them sequential and optional parameters, list parameters with recursion, and local sub-patterns. These are illustrated with non-trivial patterns: generic value sets and (nested) qualitatively graded relations, demonstrated as definitional building blocks in an application domain.

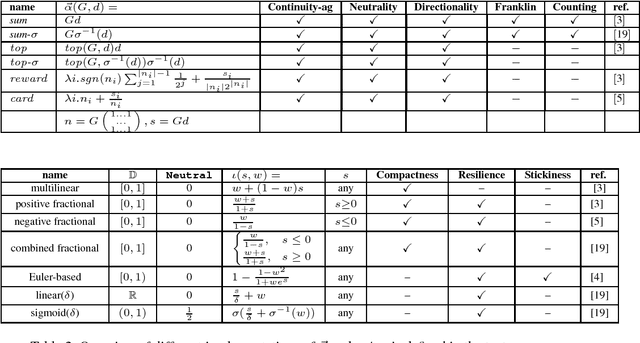

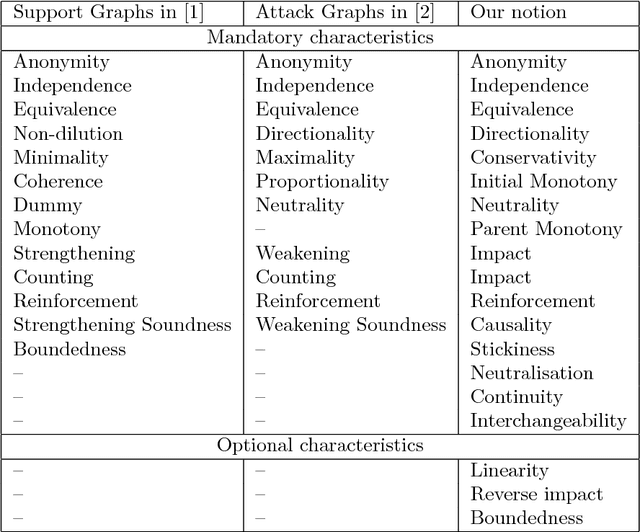

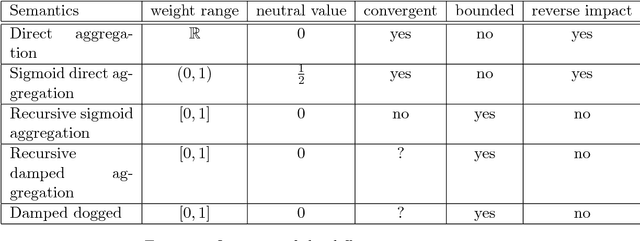

Modular Semantics and Characteristics for Bipolar Weighted Argumentation Graphs

Sep 26, 2018

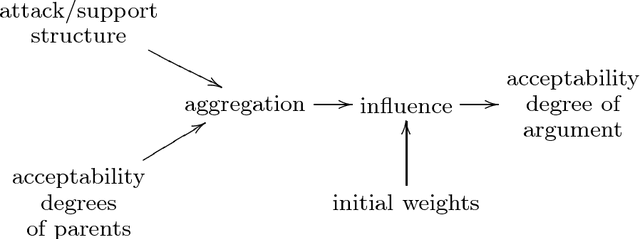

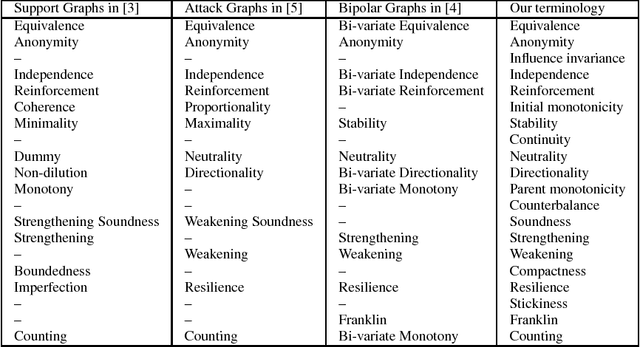

Abstract:This paper addresses the semantics of weighted argumentation graphs that are bipolar, i.e. contain both attacks and supports for arguments. It builds on previous work by Amgoud, Ben-Naim et al. We study the various characteristics of acceptability semantics that have been introduced in these works, and introduce the notion of a modular acceptability semantics. A semantics is modular if it cleanly separates aggregation of attacking and supporting arguments (for a given argument $a$) from the computation of their influence on $a$'s initial weight. We show that the various semantics for bipolar argumentation graphs from the literature may be analysed as a composition of an aggregation function with an influence function. Based on this modular framework, we prove general convergence and divergence theorems. We demonstrate that all well-behaved modular acceptability semantics converge for all acyclic graphs and that no sum-based semantics can converge for all graphs. In particular, we show divergence of Euler-based semantics (Amgoud et al.) for certain cyclic graphs. Further, we provide the first semantics for bipolar weighted graphs that converges for all graphs.
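The separation of aggregation from influence described in the abstract can be sketched as two independent functions that are composed in an iterative update. This is a minimal illustration, not the semantics defined in the paper: the sum-based aggregation follows the abstract's wording, but the `influence` function below is a hypothetical choice made only to keep strengths in [0, 1], and all names and the stopping rule are assumptions.

```python
import math


def aggregate_sum(strengths, attackers, supporters):
    """Sum-based aggregation: supporter strengths minus attacker strengths."""
    return sum(strengths[s] for s in supporters) - sum(strengths[a] for a in attackers)


def influence(weight, alpha):
    """Hypothetical influence function: move the initial weight towards 1
    for positive aggregates and towards 0 for negative ones."""
    t = math.tanh(alpha)
    return weight + (1 - weight) * t if alpha >= 0 else weight * (1 + t)


def evaluate(weights, attacks, supports, max_iter=100, tol=1e-9):
    """Iterate strength = influence(initial weight, aggregate of parents)
    until the values stop changing; return None if they do not settle."""
    strengths = dict(weights)
    for _ in range(max_iter):
        updated = {
            a: influence(w, aggregate_sum(strengths, attacks.get(a, []), supports.get(a, [])))
            for a, w in weights.items()
        }
        if max(abs(updated[a] - strengths[a]) for a in weights) < tol:
            return updated
        strengths = updated
    return None  # no convergence within max_iter


# Acyclic example: b attacks a, c supports a, all with initial weight 0.5.
weights = {"a": 0.5, "b": 0.5, "c": 0.5}
balanced = evaluate(weights, attacks={"a": ["b"]}, supports={"a": ["c"]})
attacked = evaluate(weights, attacks={"a": ["b"]}, supports={})
print(balanced["a"], attacked["a"])
```

Swapping `aggregate_sum` or `influence` for another function changes the semantics without touching the iteration, which is the point of the modular view; the `None` return covers cyclic graphs on which a given combination fails to settle.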

Bipolar Weighted Argumentation Graphs

Dec 23, 2016

Abstract:This paper discusses the semantics of weighted argumentation graphs that are bipolar, i.e. contain both attacks and supports. The work builds on previous work by Amgoud, Ben-Naim et al., which presents and compares several semantics for argumentation graphs that contain only support or only attack relationships, respectively.
